Dell 14 Plus 2-in-1: One-minute review

The Dell 14 Plus 2-in-1 is the latest hybrid laptop from the venerable Windows laptop maker. It replaces the Dell Inspiron 14 Plus 2-in-1 and is the company's first 2-in-1 since its major rebranding earlier this year.

Fortunately, as with the clamshell Dell 14 Plus, Dell's latest 2-in-1 offers very solid performance at a fantastic price. But given that this is a 2-in-1, typically a form factor where the display takes center stage, the dim, lackluster panel makes this a less attractive option as a 2-in-1 than its clamshell sibling.

Starting at $649.99 / £849 / AU$1,498.20, the Dell 14 Plus 2-in-1 does earn its place among the best 2-in-1 laptops this year thanks to its affordable starting price, especially in the US and Australia where the AMD Ryzen AI 300 configurations are available. When these configurations make it to the UK, the starting price there ought to come down considerably as well.

Performance-wise, the 14 Plus 2-in-1 isn't much different than the standard 14 Plus, so what you're really looking for here is the versatility that comes with a 2-in-1.

Unfortunately, this versatility is undermined by the display quality, which is much more important on a 2-in-1. So while I found the rather dim FHD+ display on the 14 Plus to be an acceptable compromise to keep the price down, it's a much bigger negative on the 14 Plus 2-in-1.

That's not to say the Dell 14 Plus 2-in-1 is necessarily bad, or even that its display is an absolute dealbreaker. Given its price and level of performance, the display doesn't keep it from being one of the best student laptops on the market right now, and it also remains one of the best Dell laptops on offer currently. Just be prepared to look past a couple of flaws if you decide to pick one up.

Dell 14 Plus 2-in-1: Price & availability

- How much does it cost? Starts at $649.99 / £849 / AU$1,498.20

- When is it available? It's available now

- Where can you get it? You can buy it in the US, UK, and Australia through Dell’s website and other retailers.

Easily the best feature of the Dell 14 Plus 2-in-1 is its excellent pricing. Starting at $649.99 / £849 / AU$1,498.20, there aren't going to be many Windows laptops with solid entry-level specs at this price point. For those who are more price-sensitive (such as students, general users, and enterprise fleet managers), the 14 Plus 2-in-1 really should be at the top of your list if you're in the market for a 2-in-1.

Even better, of course, is that Dell regularly runs sales on its products, so it should be fairly easy to find this laptop for even cheaper (especially around holidays or other major sales events like Amazon Prime Day).

- Value: 5 / 5

Dell 14 Plus 2-in-1: Specs

- Configurations vary considerably between the US, UK, and Australia

- Options for both Intel Core Ultra 200V and AMD Ryzen AI 300 processors

- No discrete graphics options

The starting specs for the Dell 14 Plus 2-in-1 feature 16GB LPDDR5X RAM, 512GB PCIe SSD storage, and a 16:10 FHD+ (1200p) IPS touchscreen display with 300-nit max brightness. The main difference between regions is the processor: the US and Australia start off with an AMD Ryzen AI 5 340 processor with Radeon 840M graphics, while the UK starting configuration comes with an Intel Core Ultra 5 226V chip.

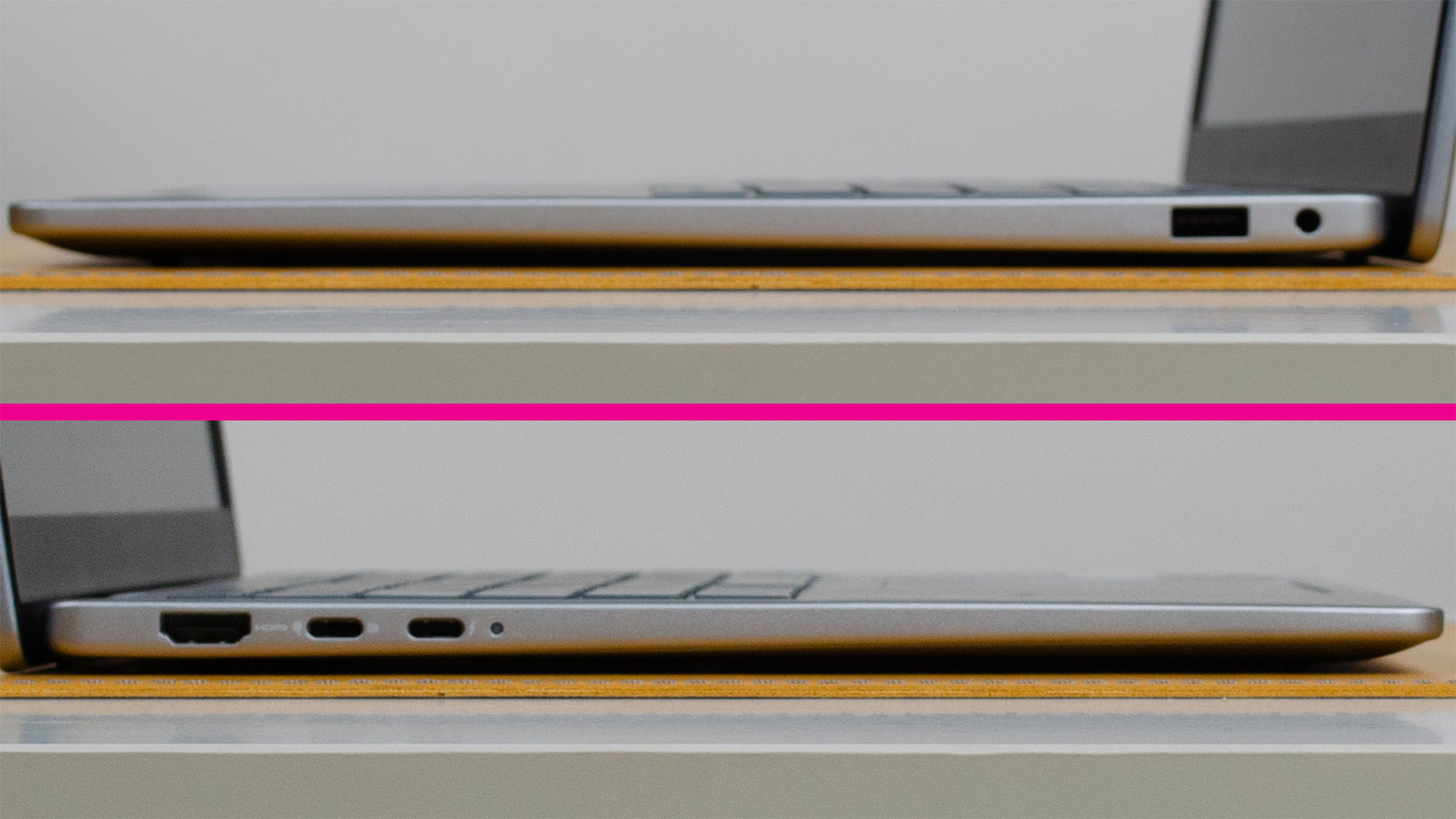

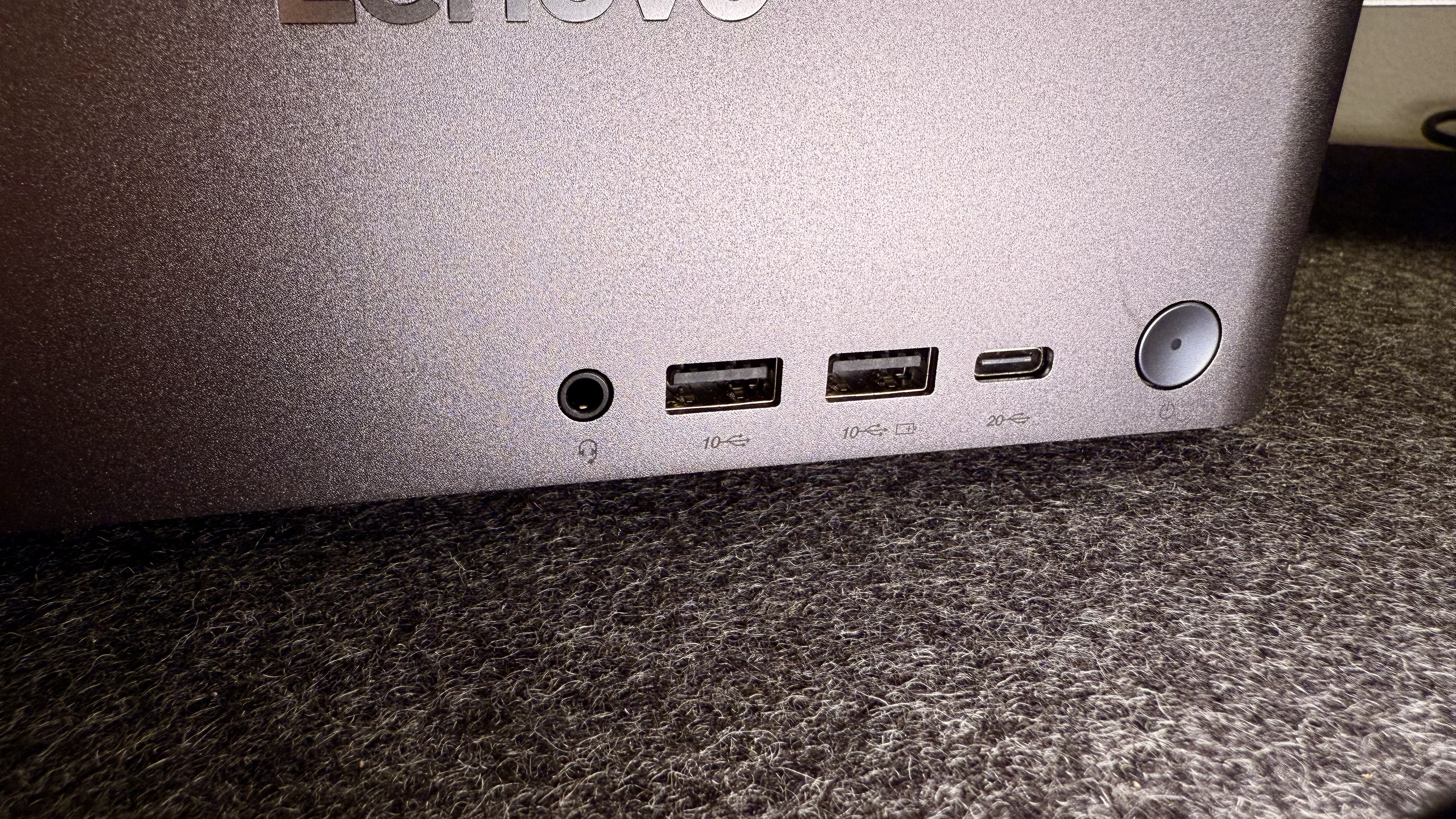

This also means that while the US and Australian starting configurations have two USB-C 3.2 Gen 2 ports, the UK swaps one of these out for a full Thunderbolt 4 port.

| Region | US | UK | Australia |
|---|---|---|---|
| Price: | $649.99 | £849 | AU$1,498.20 |
| CPU: | AMD Ryzen AI 5 340 | Intel Core Ultra 5 226V | AMD Ryzen AI 5 340 |
| GPU: | AMD Radeon 840M Graphics | Intel Arc Xe2 (140V) | AMD Radeon 840M Graphics |
| Memory: | 16GB LPDDR5X-7500 | 16GB LPDDR5X-8533 | 16GB LPDDR5X-7500 |
| Storage: | 512GB SSD | 512GB SSD | 512GB SSD |
| Screen: | 14-inch 16:10 FHD+ (1200p), 300-nit, touch IPS | 14-inch 16:10 FHD+ (1200p), 300-nit, touch IPS | 14-inch 16:10 FHD+ (1200p), 300-nit, touch IPS |
| Ports: | 2 x USB-C 3.2 Gen 2 w/ DP and Power Delivery, 1 x USB 3.2 Gen 1 Type-A, 1 x HDMI 1.4, 1 x combo jack | 1 x USB 3.2 Gen 1, 1 x USB 3.2 Gen 2 Type-C w/ DP 1.4 and Power Delivery, 1 x Thunderbolt 4 w/ DP 2.1 and Power Delivery, 1 x HDMI 2.1, 1 x combo jack | 2 x USB-C 3.2 Gen 2 w/ DP and Power Delivery, 1 x USB 3.2 Gen 1 Type-A, 1 x HDMI 1.4, 1 x combo jack |
| Battery (WHr): | 64 WHr | 64 WHr | 64 WHr |
| Wireless: | WiFi 7, BT 5.4 | WiFi 7, BT 5.4 | WiFi 7, BT 5.4 |
| Camera: | 1080p@30fps | 1080p@30fps | 1080p@30fps |
| Weight: | 3.35 lbs (1.52kg) | 3.42 lbs (1.55kg) | 3.35 lbs (1.52kg) |
| Dimensions: | 12.36 x 8.9 x 0.65 ins (314 x 226.15 x 16.39mm) | 12.36 x 8.9 x 0.65 ins (314 x 226.15 x 16.39mm) | 12.36 x 8.9 x 0.65 ins (314 x 226.15 x 16.39mm) |

For the max spec, the US and UK can configure the 14 Plus 2-in-1 with an Intel Core Ultra 9 288V processor with Intel Arc Xe2 (140V) graphics, while Australia tops out at an AMD Ryzen AI 7 350 processor with Radeon 840M graphics. The US config maxes out at 32GB LPDDR5X RAM and 1TB of storage, while the UK and Australia both max out at 16GB LPDDR5X RAM, with 512GB and 1TB of storage, respectively.

| Region | US | UK | Australia |
|---|---|---|---|
| Price: | | | |
| CPU: | Intel Core Ultra 9 288V | Intel Core Ultra 9 288V | AMD Ryzen AI 7 350 |
| GPU: | Intel Arc Xe2 (140V) Graphics | Intel Arc Xe2 (140V) Graphics | AMD Radeon 840M Graphics |
| Memory: | 32GB LPDDR5X-8533 | 16GB LPDDR5X-7500 | 16GB LPDDR5X-7500 |
| Storage: | 1TB NVMe SSD | 512GB NVMe SSD | 1TB NVMe SSD |
| Screen: | 14-inch 16:10 FHD+ (1200p), 300-nit, touch IPS | 14-inch 16:10 FHD+ (1200p), 300-nit, touch IPS | 14-inch 16:10 FHD+ (1200p), 300-nit, touch IPS |
| Ports: | 1 x USB 3.2 Gen 1, 1 x USB 3.2 Gen 2 Type-C w/ DP 1.4 and Power Delivery, 1 x Thunderbolt 4 w/ DP 2.1 and Power Delivery, 1 x HDMI 2.1, 1 x combo jack | 1 x USB 3.2 Gen 1, 1 x USB 3.2 Gen 2 Type-C w/ DP 1.4 and Power Delivery, 1 x Thunderbolt 4 w/ DP 2.1 and Power Delivery, 1 x HDMI 2.1, 1 x combo jack | 2 x USB-C 3.2 Gen 2 w/ DP and Power Delivery, 1 x USB 3.2 Gen 1 Type-A, 1 x HDMI 1.4, 1 x combo jack |
| Battery (WHr): | 64 WHr | 64 WHr | 64 WHr |
| Wireless: | WiFi 7, BT 5.4 | WiFi 7, BT 5.4 | WiFi 7, BT 5.4 |
| Camera: | 1080p@30fps | 1080p@30fps | 1080p@30fps |
| Weight: | 3.42 lbs (1.55kg) | 3.42 lbs (1.55kg) | 3.35 lbs (1.52kg) |
| Dimensions: | 12.36 x 8.9 x 0.65 ins (314 x 226.15 x 16.39mm) | 12.36 x 8.9 x 0.65 ins (314 x 226.15 x 16.39mm) | 12.36 x 8.9 x 0.65 ins (314 x 226.15 x 16.39mm) |

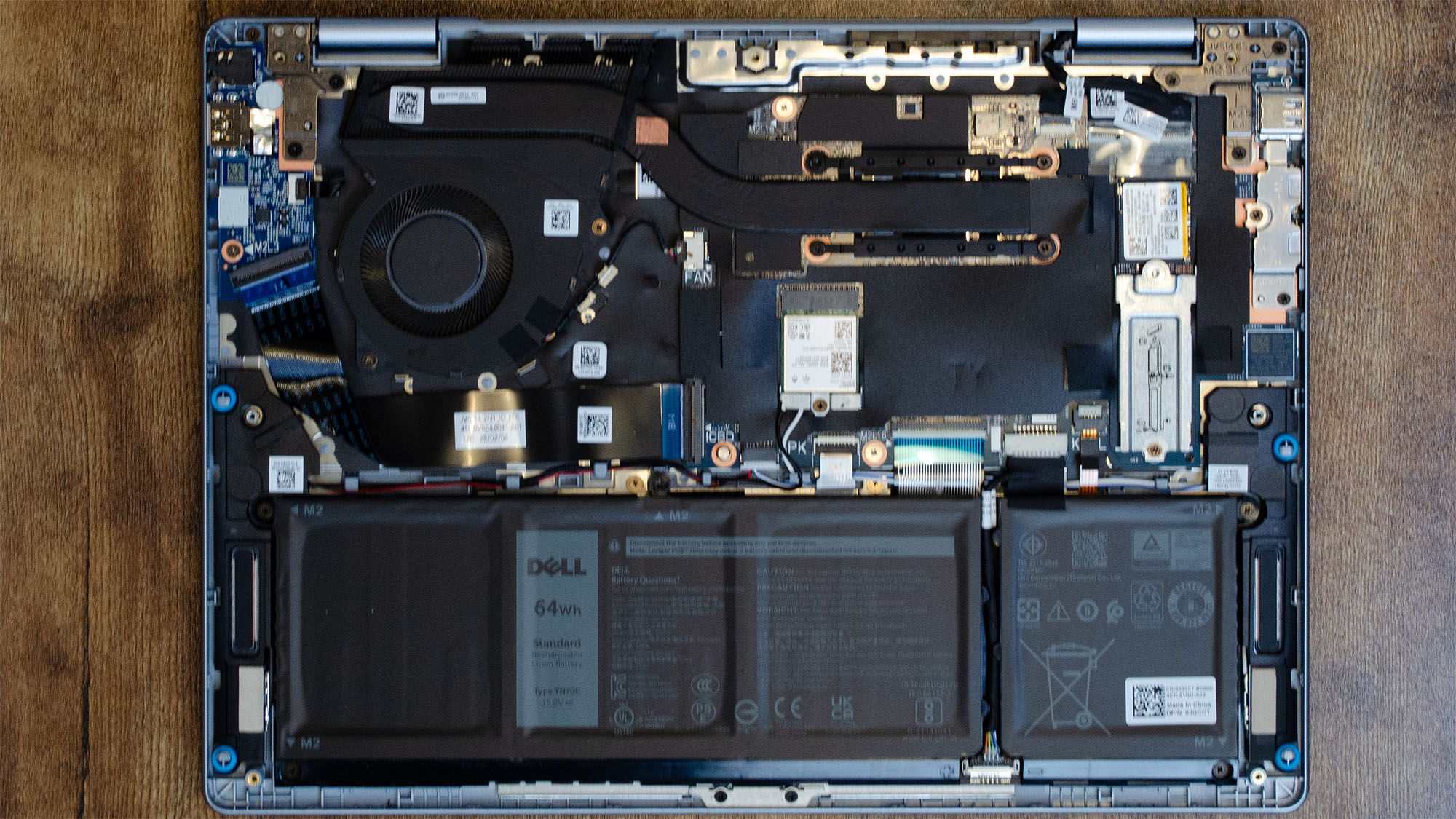

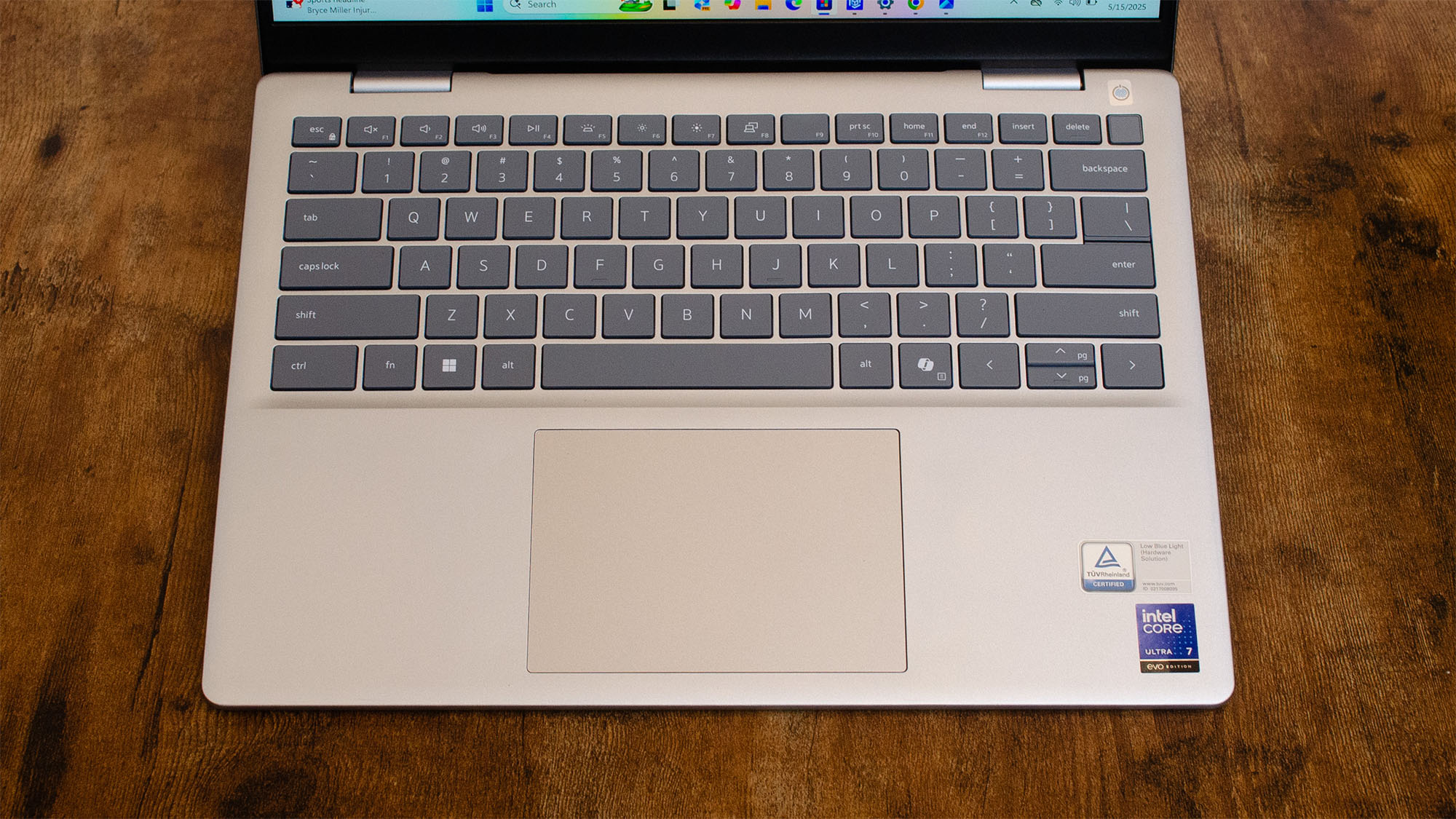

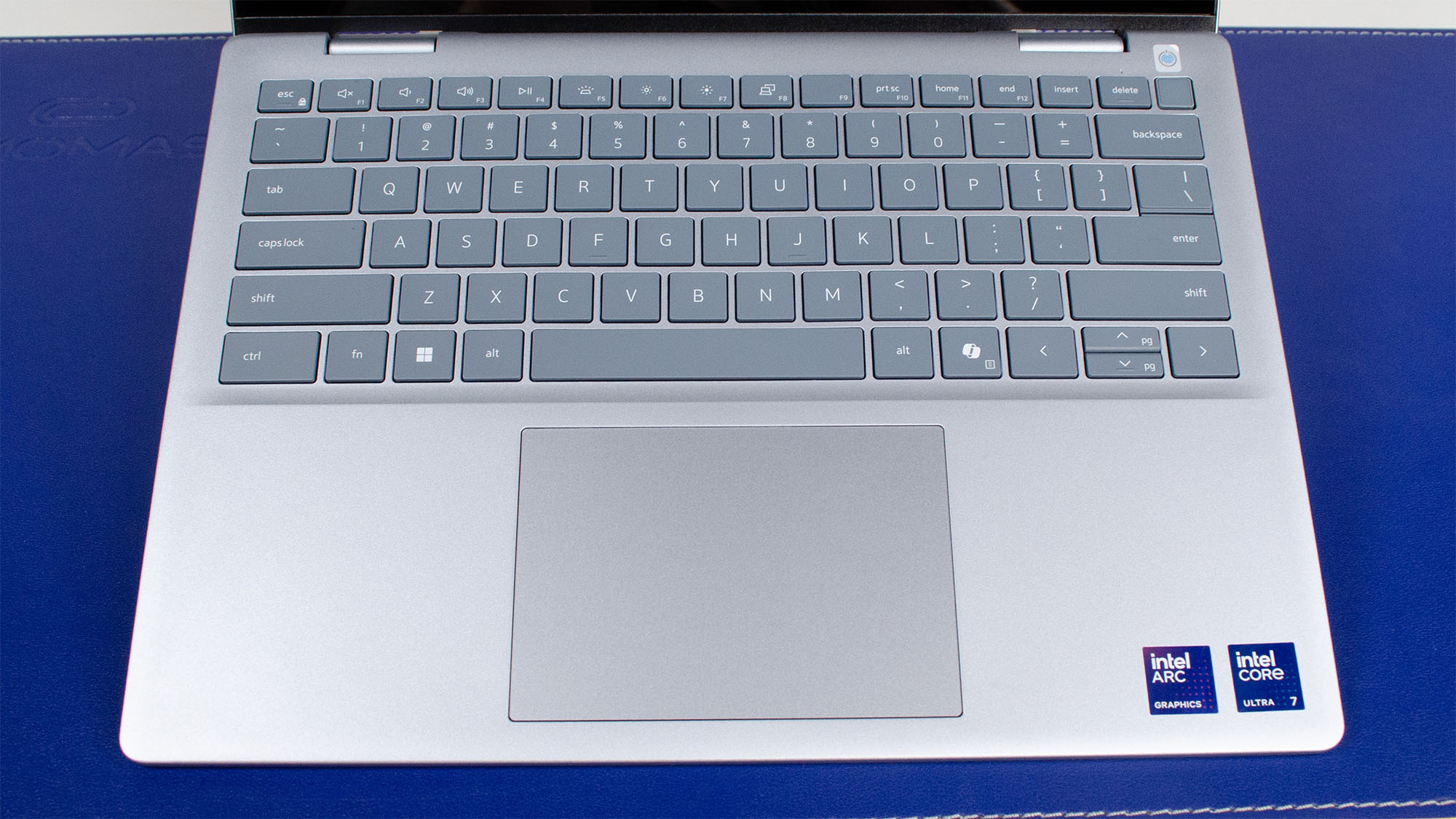

The configuration I reviewed sits in the middle of the pack, featuring an Intel Core Ultra 7 256V chip, 16GB LPDDR5X RAM, and a 1TB SSD. This specific configuration isn't available in the UK or Australia, but the UK can get close enough (though with half the storage capacity).

| Spec | Review configuration |
|---|---|
| Price: | $1,099.99 / £999 / (about AU$1,650, but Intel systems not yet available in Australia) |
| CPU: | Intel Core Ultra 7 256V |
| GPU: | Intel Arc Xe2 (140V) Graphics |
| Memory: | 16GB LPDDR5X-8533 |
| Storage: | 1TB NVMe SSD (512GB in the UK) |
| Screen: | 14-inch 16:10 FHD+ (1200p), 300-nit, touch IPS |
| Ports: | 1 x USB 3.2 Gen 1, 1 x USB 3.2 Gen 2 Type-C w/ DP 1.4 and Power Delivery, 1 x Thunderbolt 4 w/ DP 2.1 and Power Delivery, 1 x HDMI 2.1, 1 x combo jack |
| Battery (WHr): | 64 WHr |
| Wireless: | WiFi 7, BT 5.4 |
| Camera: | 1080p@30fps |
| Weight: | 3.42 lbs (1.55kg) |
| Dimensions: | 12.36 x 8.9 x 0.67 ins (314 x 226.15 x 16.95mm) |

- Specs: 4 / 5

Dell 14 Plus 2-in-1: Design

- Thin and light

- Trackpad can feel 'sticky'

- Display isn't great for a 2-in-1

The design of the Dell 14 Plus 2-in-1 is nearly identical to the standard 14 Plus, with the major difference being its 360-degree hinge. Otherwise, it sports a functional design language that, while not premium, doesn't necessarily look or feel cheap either.

The keyboard on the 14 Plus 2-in-1 is functional, if not incredible, but for most people it'll do the job just fine. The trackpad occasionally felt somewhat 'sticky' to me, however, and it's something that gives away the laptop's price point, if I'm being honest. I've felt similar trackpads on much cheaper Chromebooks in the past.

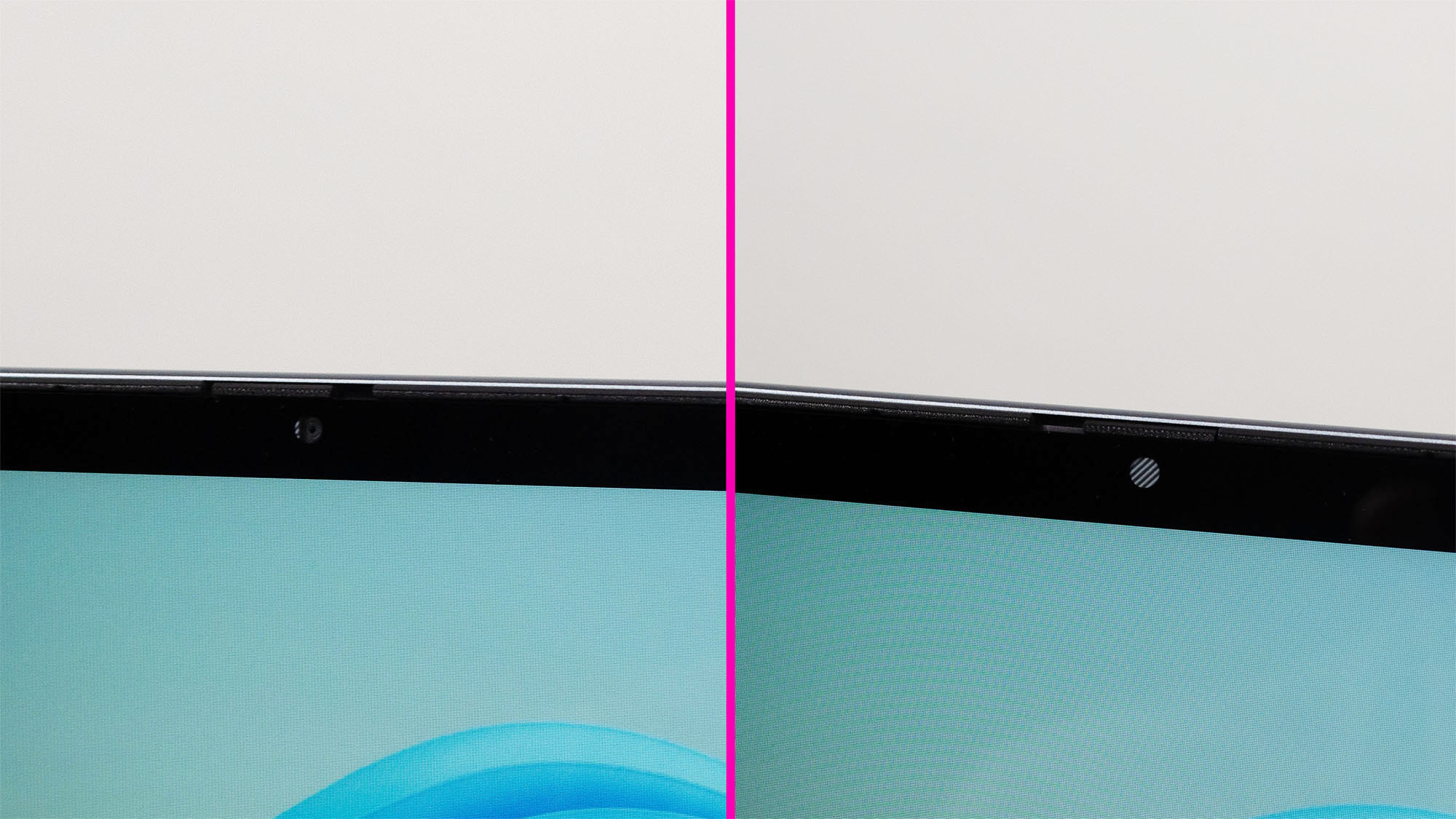

The webcam is a solid 1080p at 30 frames per second, which is pretty much standard nowadays. It does feature a physical privacy shutter though, which is excellent.

For ports, you have a good mix, especially for a laptop this thin, and if you're using an Intel-based configuration, you get a Thunderbolt 4 port, which is very handy. Regardless of the processor brand, though, the USB-C 3.2 Gen 2 ports let you output video over DisplayPort, and all USB-C ports support power delivery.

Where this laptop is more of a letdown than its clamshell cousin is the display. With a peak brightness of 300 nits and coverage of just 67.5% of the sRGB color gamut and 47.8% of DCI-P3 (according to my testing), the display's color quality and brightness just aren't very good.

It's one thing when the display on a cheap clamshell isn't great, but if you plan on taking notes or maybe even doing some sketch work on your 2-in-1, this display is not going to give you the best experience. If you plan on using this laptop for school, try to avoid using it outside on a sunny day, or else you're really going to struggle to see things clearly.

- Design: 3 / 5

Dell 14 Plus 2-in-1: Performance

- Solid performance

- Can do some modest gaming

- Not the best for creative work

Here's how the Dell 14 Plus 2-in-1 performed in our suite of benchmark tests:

Geekbench 6.4 (Single): 2,673; (Multi): 10,880

Crossmark (Overall): 1,708; (Productivity): 1,655; (Creativity): 1,934; (Responsiveness): 1,293

3DMark (Night Raid): 31,074; (Fire Strike): 8,462; (Time Spy): 3,896; (Steel Nomad): 601

Civilization VI Gathering Storm (1080p, Max Graphics, Avg): 53 fps

Civilization VII (1080p, Medium, Avg): 59 fps; (High): 34 fps

Web Surfing Battery Test: 15 hours, 14 minutes

The performance of the Dell 14 Plus 2-in-1 is going to vary quite a bit depending on your configuration, but like the clamshell 14 Plus, the 14 Plus 2-in-1 is a very solid performer when it comes to everyday computing and general productivity tasks that are typical of laptops at this price point.

To be clear, this isn't a professional mobile workstation like the MacBook Pro 14, and definitely isn't one of the best gaming laptops, but if what you're looking for is a laptop that does its job, does it reasonably well, and doesn't try to do too much beyond the everyday, then the 14 Plus 2-in-1 is a very solid pick (especially if you're on a budget).

Thanks to the integrated Intel Arc Xe2 or Radeon 840M graphics (depending on your configuration), you'll also be able to get some casual to moderate gaming out of this laptop, though you'll definitely want to keep things at or below 1080p and reasonable graphics settings.

In my testing, Civilization VII (one of the more graphically demanding sim games out there) managed to get close to 60 FPS on average on medium settings, which is more than enough for a thin and light laptop like this.

This is a 2-in-1, though, and the focus really is on note-taking, drawing, and the like, and for that the responsiveness of the display was good enough for the price, though nothing spectacular.

- Performance: 4 / 5

Dell 14 Plus 2-in-1: Battery Life

- How long does it last on a single charge? 15 hours and 14 minutes

- How long to fully charge it to 100%? 2 hours and 36 minutes

I haven't tested one of the AMD Ryzen AI 300 models of this laptop, but the Intel Core Ultra 7 256V in my review unit is a very energy-efficient chip. As a result, this laptop's battery life is good enough to rival many of the best laptops of the past few years, though it's not in the top five or anything like that.

It ran for just over 15 hours in my battery test, which involves using a script and custom server to simulate typical web browsing behavior. This is more than enough for a typical work or school day, and with the included 65W charger, it took about two and a half hours to recharge the 64WHr battery to full from empty (though higher wattage chargers will likely get you there faster).
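As a rough back-of-the-envelope check, the battery figures above can be turned into average power numbers. This is only a sketch: it assumes the full rated 64WHr is usable and ignores charging losses, and the function and variable names are my own for illustration.

```python
# Figures quoted in this review
BATTERY_WH = 64.0               # rated battery capacity in watt-hours
RUNTIME_HOURS = 15 + 14 / 60    # web surfing test: 15 hours, 14 minutes
CHARGE_HOURS = 2 + 36 / 60      # empty to full: 2 hours, 36 minutes


def average_watts(capacity_wh: float, hours: float) -> float:
    """Average power (W) needed to move capacity_wh over the given hours.

    Assumes the whole rated capacity is used, which real batteries
    rarely allow, so treat the result as an approximation.
    """
    return capacity_wh / hours


draw = average_watts(BATTERY_WH, RUNTIME_HOURS)
charge_rate = average_watts(BATTERY_WH, CHARGE_HOURS)

print(f"Average draw while browsing: {draw:.1f} W")      # 4.2 W
print(f"Average charge rate: {charge_rate:.1f} W")       # 24.6 W
```

An average draw of roughly 4W during light browsing is in line with what you'd expect from an efficiency-focused chip, and the charge rate averaging well under 65W is normal, since charging slows down as the battery nears full.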

- Battery Life: 4 / 5

Should you buy the Dell 14 Plus 2-in-1?

| Category | Notes | Rating |
|---|---|---|
| Value | The Dell 14 Plus 2-in-1 offers great value for its price. | 5 / 5 |
| Specs | Available configurations are generally very good. | 4 / 5 |
| Design | The 14 Plus 2-in-1 looks good on the outside, but its display really brings its design down. | 3 / 5 |
| Performance | Everyday computing and productivity performance are solid, but it can't hold up to intense workloads like gaming at high settings. | 4 / 5 |
| Battery Life | Solid battery life capable of many hours of use on one charge. | 4 / 5 |

Buy the Dell 14 Plus 2-in-1 if...

You want solid productivity and general computing performance

The Dell 14 Plus 2-in-1 offers solid performance for most users, especially for the price.

You want a laptop that doesn’t look too cheap

While it isn't going to win any major design awards, it's still a pretty good-looking laptop for its price.

Don't buy it if...

You need a high-performance laptop

While its general performance is very good, you're not going to be able to push it much further than general use and casual PC gaming.

You need a quality display

While the clamshell 14 Plus might have been able to skate by with this display, it's not really good enough for a 2-in-1.

- First reviewed June 2025