ASRock Steel Legend RX 7900 GRE: Three-minute review

Earlier this year, the AMD Radeon RX 7900 GRE (Golden Rabbit Edition), initially exclusive to China, emerged as a formidable mid-range GPU contender upon its global release. It not only competes directly with the Nvidia RTX 4070 in terms of price but also rivals the performance of the 4070 Super.

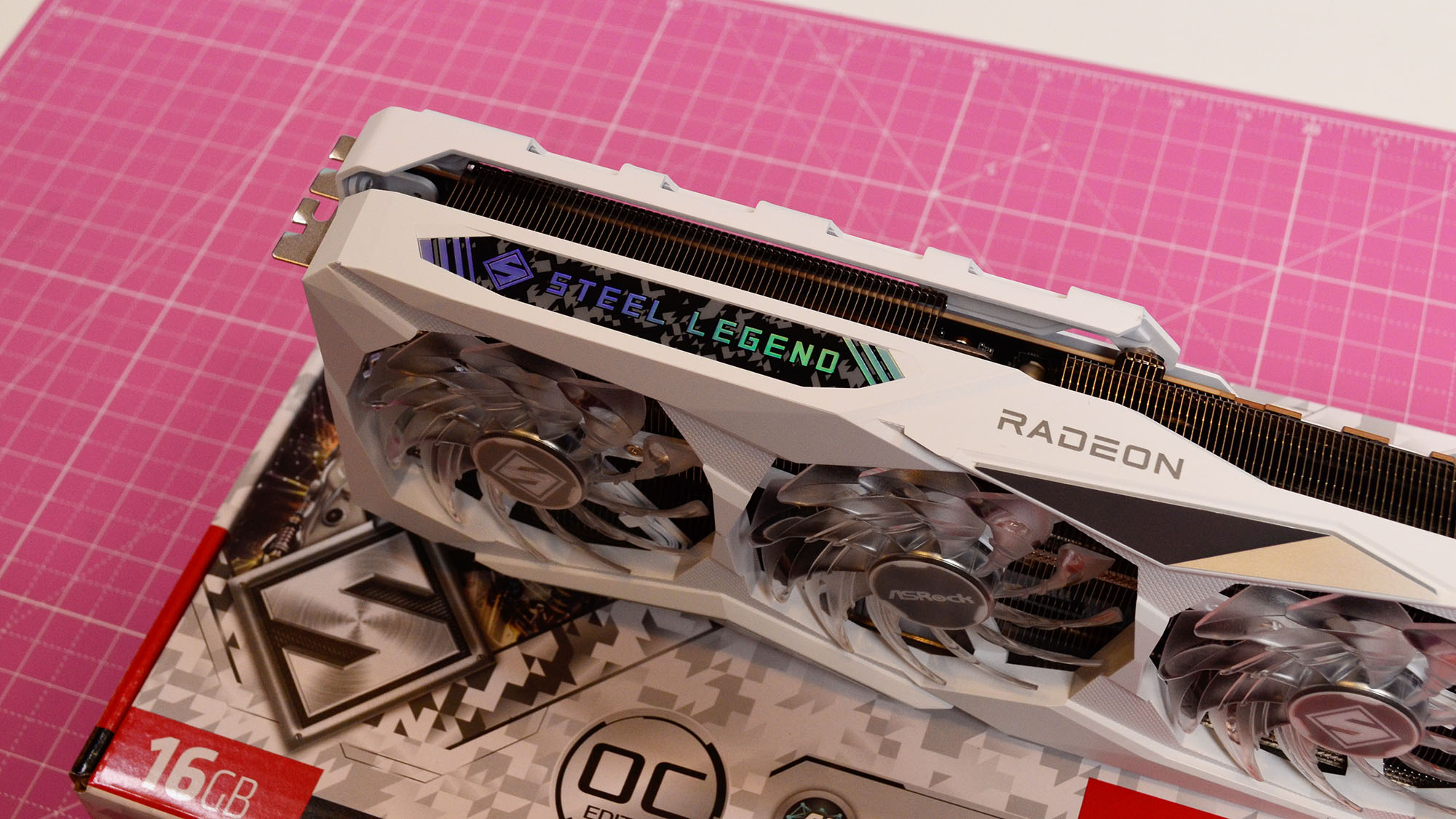

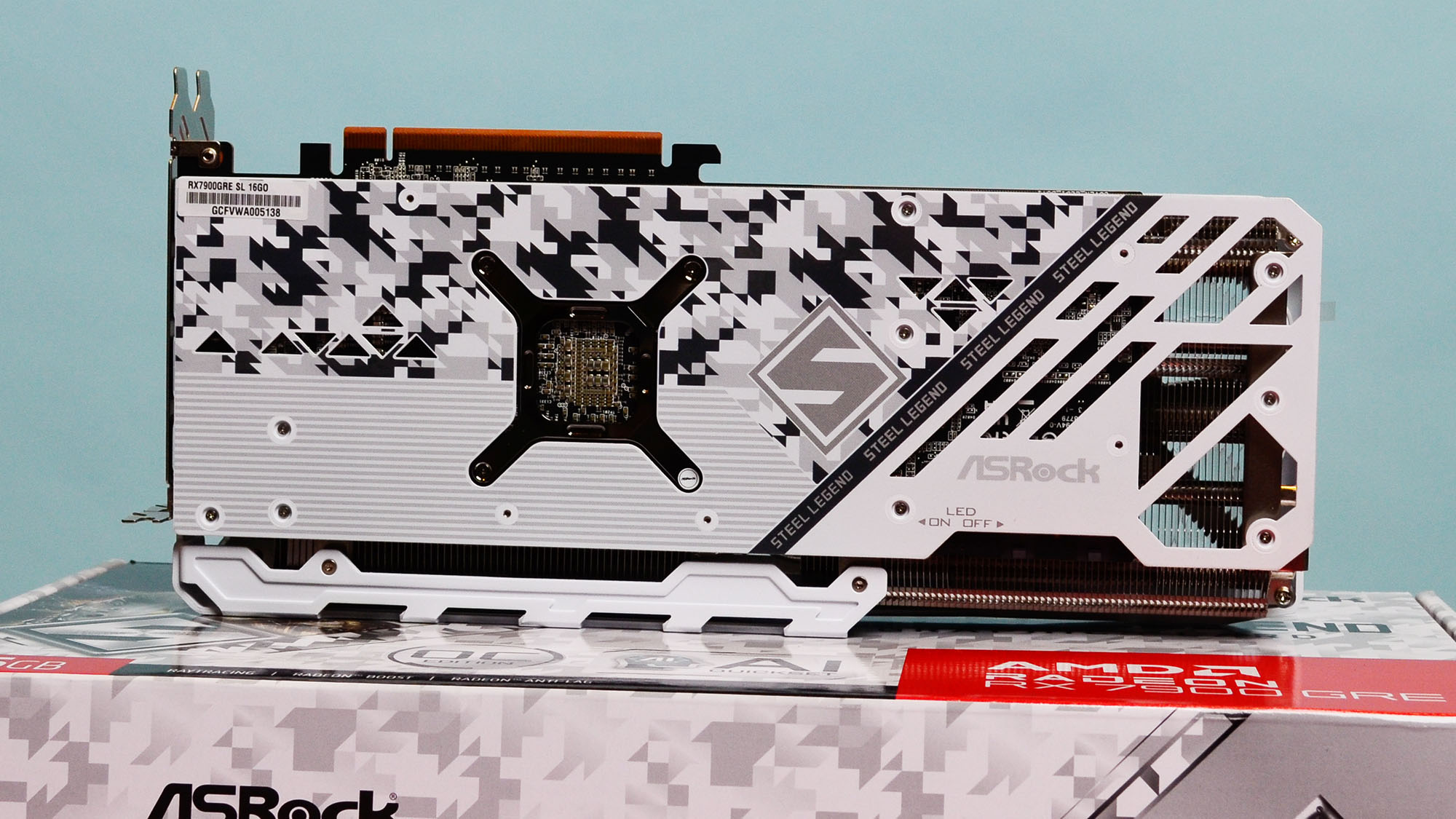

ASRock Steel Legend's version of the RX 7900 GRE retains everything we liked about AMD's GPU, along with the few shortcomings we noted. That includes 16GB of GDDR6 memory on a 256-bit bus, 80 AMD RDNA 3 compute units with ray tracing (RT) and AI accelerators, 64MB of AMD Infinity Cache, and support for Microsoft DirectX 12 Ultimate.

Priced at $549 (£568.44/AU$1,025), the ASRock Steel Legend RX 7900 GRE stands out as one of the best versions of AMD's mid-range GPU.

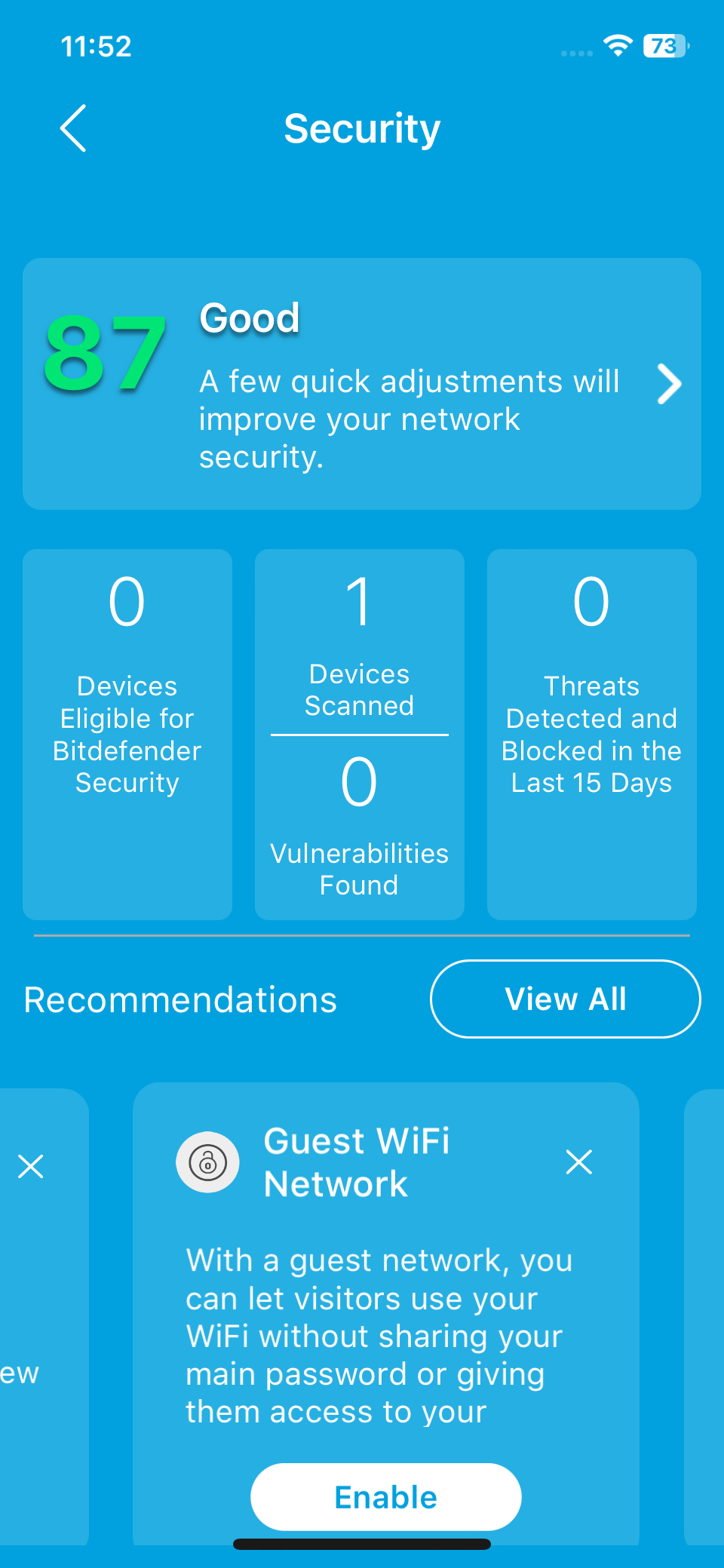

Like many GPUs from the Taipei-based manufacturer, it includes several appealing extras, starting with customizable RGB lighting controlled through ASRock's Polychrome RGB Sync app.

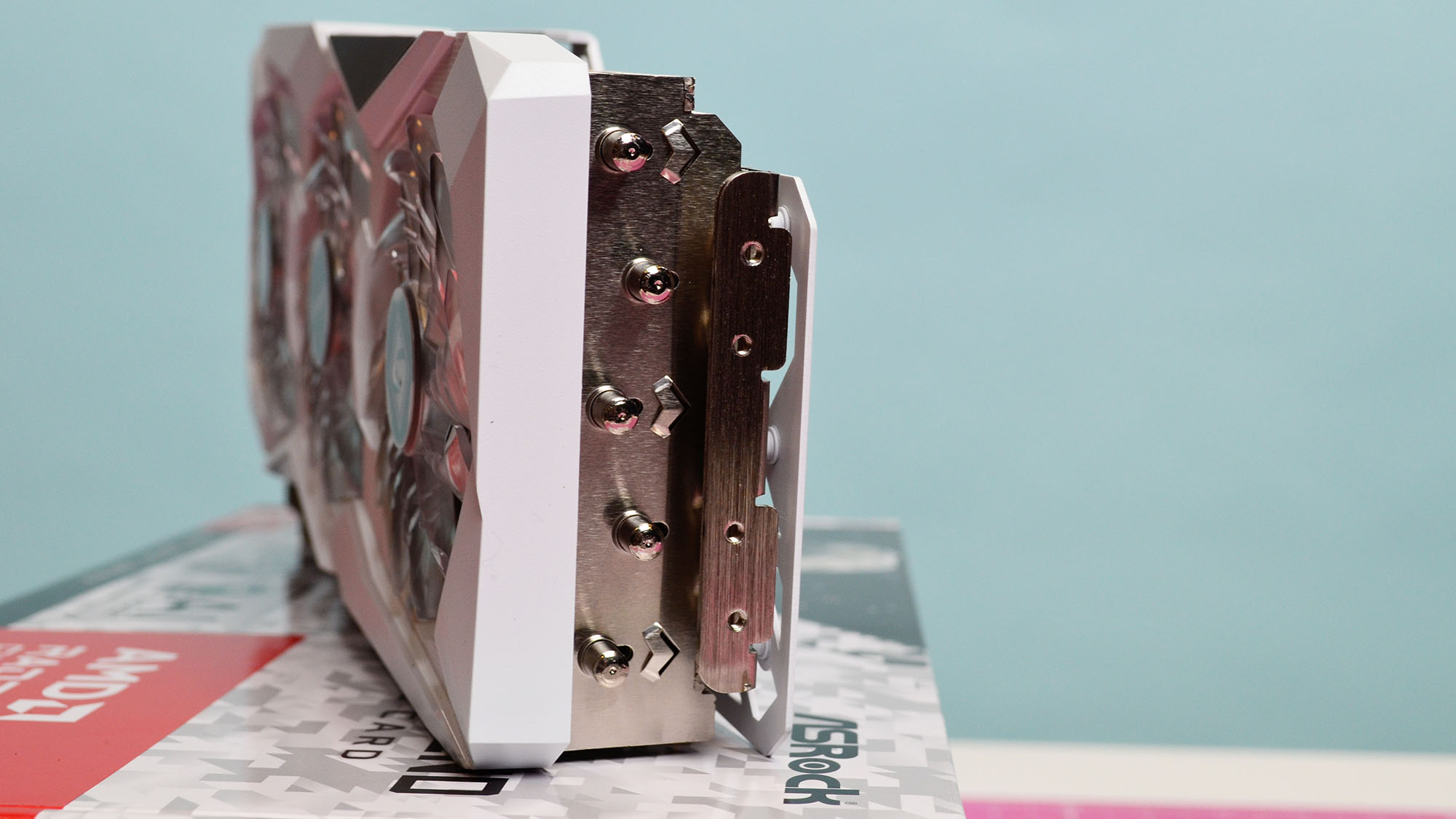

The metal backplate not only adds durability but also gives custom desktop builds a sleek look. For cooling, the card uses a triple-fan design with striped axial fans and 0dB silent cooling, plus an Ultra-fit heat pipe for efficient heat dissipation.

The ASRock Steel Legend RX 7900 GRE ships with higher core (1,972MHz) and boost (2,333MHz) clock speeds than the version we reviewed earlier this year. That factory overclock yields only a slight performance gain; the bigger benefit is durability, since the improved cooling helps keep the GPU healthy under load. Buyers get extra visual flair from the customizable RGB lighting and a more capable cooling solution, without worrying about accelerated wear on their GPU.

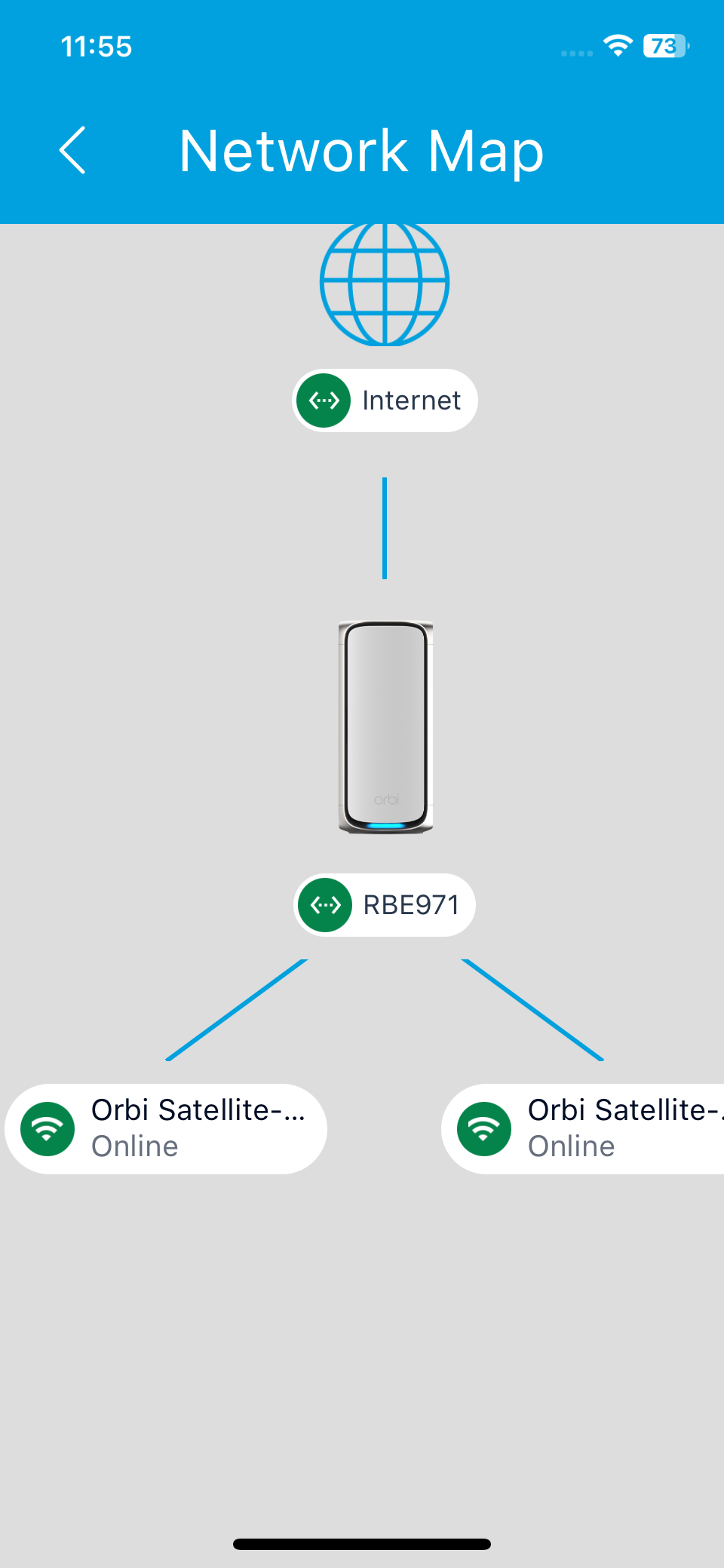

Installation is straightforward: the card uses a PCI Express 4.0 interface and requires two 8-pin power connectors to meet its 260W power draw. For displays, it offers three DisplayPort 2.1 ports and one HDMI 2.1 port.

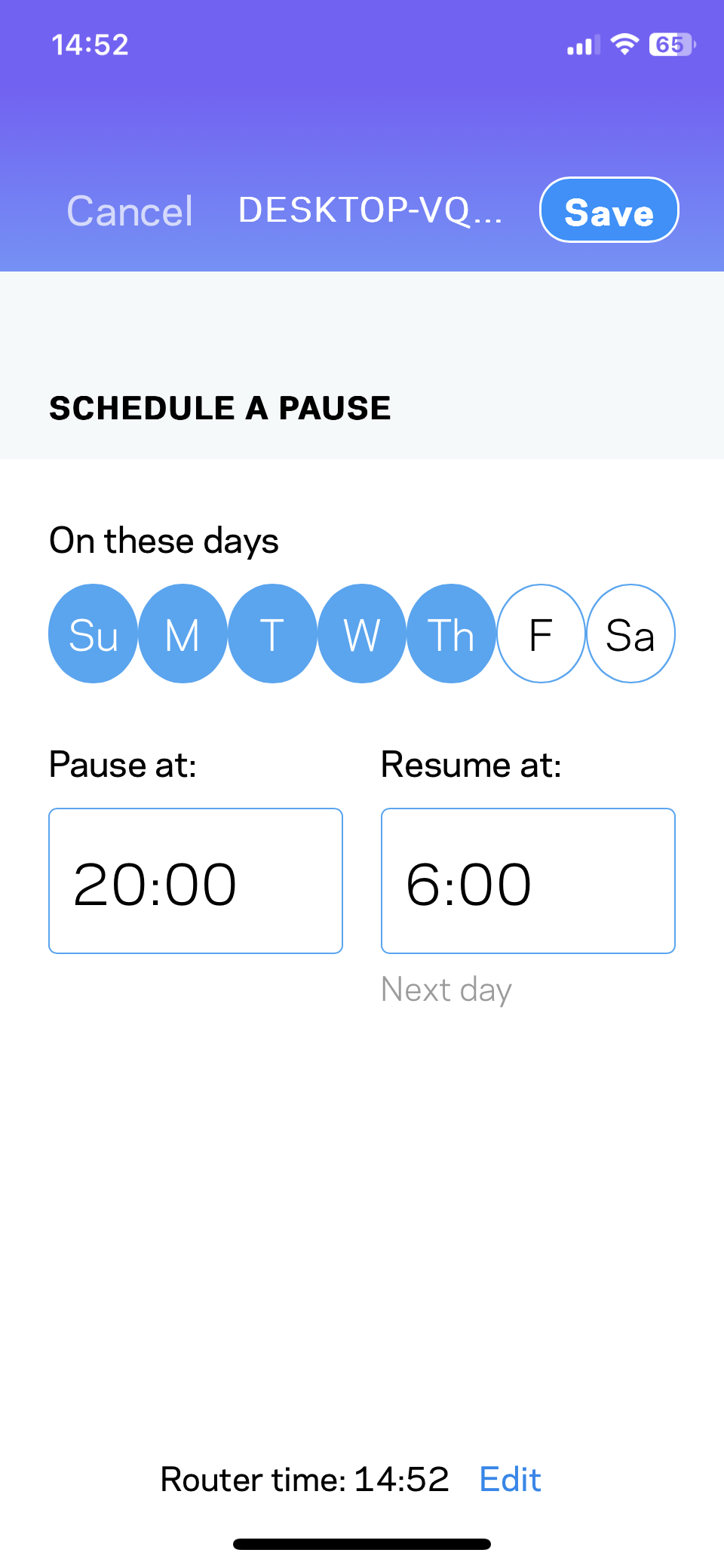

Users will need to download AMD Software: Adrenalin Edition drivers for optimal performance. ASRock also offers its ASRock Tweak 2.0 utility for performance tuning and fan control.

This version of the RX 7900 GRE performs nearly identically to the one we reviewed earlier this year, making it ideal for gaming at native 1440p and, depending on the game, entry-level 4K. Gamers who also use their desktops for content creation can edit high-resolution photos in Photoshop and videos in Premiere Pro without issue.

Starting with Hellblade 2: Senua’s Saga, widely regarded as a benchmark for visual fidelity, we tested at native 1440p with max settings and ray tracing enabled and saw frame rates between 30 and 45 fps. Enabling AMD FidelityFX Super Resolution (FSR) at its Balanced setting pushed frame rates to 60 fps and above.

FSR isn't as refined as Nvidia’s DLSS and shows some noticeable ghosting, but the RX 7900 GRE’s 16GB of VRAM, more than the RTX 4070 Super’s 12GB, can help in memory-hungry games.

Cyberpunk 2077 and Forza Motorsport (2023) also ran at respectable frame rates at max settings. Competitive gamers should have no trouble exceeding 100 fps in games like Call of Duty: Modern Warfare III, Counter-Strike 2, and Fortnite.

The ASRock Steel Legend RX 7900 GRE is an enhanced version of an already impressive mid-range GPU. It features customizable RGB lighting, a robust metal backplate, and an efficient cooling system with 0dB silent cooling. This makes it an ideal choice for budget-conscious gamers looking for outstanding 1440p performance.

ASRock Steel Legend RX 7900 GRE: Price & availability

The ASRock Steel Legend RX 7900 GRE is now available in the U.S., UK, and Australia for $549 (£568.44, AU$1,025). It can be purchased from online retailers like Amazon and Newegg.

Priced $50 below the Nvidia GeForce RTX 4070 Super, the RX 7900 GRE offers 16GB of VRAM to the 4070 Super’s 12GB, giving it a slight advantage for gaming at native 1440p.

However, the 4070 Super excels at ray tracing and AI upscaling, which benefits games like Cyberpunk 2077 and Alan Wake II.

ASRock Steel Legend RX 7900 GRE: Specs

Should I buy the ASRock Steel Legend RX 7900 GRE?

Buy the ASRock Steel Legend RX 7900 GRE if…

You want excellent 1440p performance

The ASRock Steel Legend RX 7900 GRE is a 1440p beast that can also deliver solid 4K performance in some games.

You want a GPU that makes your desktop look great

With its metal backplate and customizable RGB lighting, this card is a looker.

You want good cooling components and quiet fans

The triple-fan design stays fairly quiet even under load, and the Ultra-fit heat pipe goes a long way toward keeping components cool.

Don’t buy if…

You need the best ray tracing and AI upscaling

The RX 7900 GRE can’t match Nvidia’s RTX 4070 Super for ray tracing performance or AI upscaling through DLSS.

ASRock Steel Legend RX 7900 GRE: Also consider

Nvidia GeForce RTX 4070 Super

It handles 1440p roughly as well as the RX 7900 GRE, and it excels at ray tracing and AI upscaling.

How I tested the ASRock Steel Legend RX 7900 GRE

I used the ASRock Steel Legend RX 7900 GRE in my main computer for two weeks, playing games like Hellblade 2: Senua's Saga, Armored Core VI: Fires of Rubicon, and Forza Motorsport (2023). I also created video and photo content with Photoshop and Premiere Pro. Because its specs match the RX 7900 GRE we reviewed earlier this year, apart from its higher factory clock speeds, the benchmark results were almost the same.

I’ve spent the past several years covering laptops, PCs, monitors, and other PC components for TechRadar. Outside of gaming, I’ve also been proficient in Adobe's creative apps for over a decade.

We pride ourselves on our independence and our rigorous review-testing process, giving long-term attention to the products we review and making sure our reviews are updated and maintained. Regardless of when a device was released, if you can still buy it, it's on our radar.

- First reviewed July 2024