Acer Aspire 16 AI: Two-minute review

The Acer Aspire 16 AI is a large laptop promising powerful AI features in an elegant body. It certainly looks the part, thanks to the premium materials and finish, as well as the impressively thin chassis. It’s also surprisingly light for a laptop of this size, which further improves its portability.

However, the price paid for this litheness is the somewhat flimsy build quality, falling below the standards of the best laptop constructions. There’s a fair amount of flex to the chassis, while the lid hinge doesn’t offer the greatest stability – although it at least managed to stay planted while I typed.

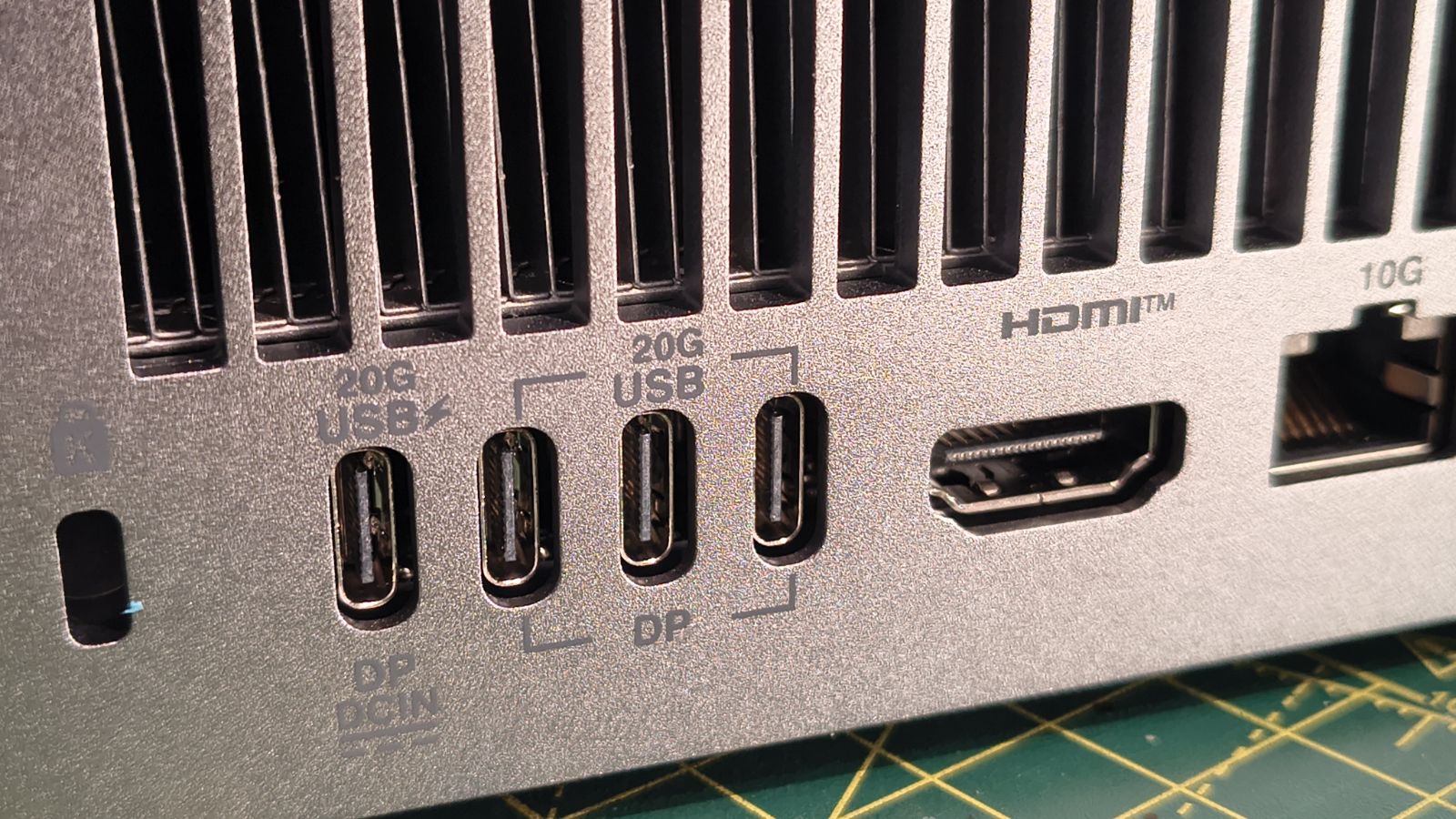

There are a good number of ports on the Aspire 16 AI, including two USB-C and two USB-A ports. However, the former are located closest to you, a choice I usually lament since it means the power adapter cable has to cross over any cable you have plugged into the neighboring USB-A port. It’s also a shame that the card reader is only fit for microSDs.

For day-to-day use, the Aspire 16 AI is very capable. It can handle light productivity and 4K streaming without missing a beat. However, the included AI features are disappointing: they’re either too basic in their functionality or fail to work altogether.

Gaming also proved to be a lackluster experience. Its shared memory GPU can just about handle AAA titles on the lowest settings, and even then you won’t exactly be treated to the smoothest frame rates.

Under these kinds of intensive workloads, the Aspire 16 AI can generate a fair amount of heat, but thankfully it’s concentrated underneath, towards the back. Coupled with the hushed fans, the Aspire 16 AI remains comfortable to use in such scenarios.

The display in my review unit, with its OLED technology and 2048 x 1280 resolution, provided a crystal-clear image, rendering colors vividly and delivering high brightness levels. This latter aspect is especially useful for combating reflections, which can be quite prominent.

Thanks to the spacing and satisfying feel of its keys, the keyboard on the Aspire 16 AI is easy to use. However, the number pad keys are too small for my liking, and I wish there were a right Control key, as I find this useful for productivity purposes.

The touchpad is smooth and large, which helps with navigation, but it can get in the way when typing. Also, the one in my review unit felt loose and rattled when clicking, making it awkward to use and suggesting poor quality control.

Battery life is somewhat disappointing, and isn’t a patch on that of the smaller 14 AI. In fact, many of its key rivals can outlast it. I only managed to get roughly nine hours from it when playing a movie on a continuous loop.

On the face of it, the Aspire 16 AI might look like good value, but it doesn’t deliver enough to justify its cost. Its slender form and mostly great display aren’t enough to make up for its drawbacks, while other laptops at this price point offer more complete packages.

Acer Aspire 16 AI review: Price & Availability

- $649.99 / £799.99 / AU$1,499

- Available now in various configurations

- Better value rivals exist

The Aspire 16 AI starts from $649.99 / £799.99 / AU$1,499 and is available now. It can be configured with various processors, including Intel, AMD, and Qualcomm (Arm) chips, with a couple of storage and RAM options to choose from.

Unfortunately, there are better value laptops out there with more power and performance, better suited to heavier workloads. The Apple MacBook Air 13-inch (M4) is one such example. Its starting price isn’t as low, but it’s similar to that of the higher-spec models of the Aspire 16 AI. It also has excellent build quality, making it a better value proposition all things considered.

If you want to stick with Windows, the Asus TUF Gaming A16 Advantage Edition is another alternative. Again, it’s similarly priced to the higher-spec variants of the Aspire 16 AI, but offers much better gaming performance, chiefly thanks to its AMD Radeon RX 7600S GPU. It’s no surprise we think it’s one of the best cheap gaming laptops around right now.

- Value: 3 / 5

Acer Aspire 16 AI review: Specs

|  | Acer Aspire 16 AI Base Config | Acer Aspire 16 AI Review Config |
|---|---|---|
| Price | $649.99 / £799.99 / AU$1,499 | £949 (about $1,280, AU$1,960) |
| CPU | Qualcomm Snapdragon X X1-26-100 (8 cores), 3GHz | AMD Ryzen AI 7 350, 2.0GHz (8 cores) |
| GPU | Qualcomm Adreno GPU (shared memory) | AMD Radeon 860M (shared memory) |
| RAM | 16GB LPDDR5X | 16GB LPDDR5X |
| Storage | 512GB PCI Express NVMe 4.0 (M.2) | 1TB PCI Express NVMe 4.0 (M.2) |
| Display | 16-inch WUXGA (1920 x 1200) 16:10 ComfyView (Matte) 120Hz, IPS | 16-inch WUXGA+ (2048 x 1280) OLED, 16:10, 120Hz |
| Ports and Connectivity | 2x USB-C (Thunderbolt 4), 2x USB-A, 1x HDMI 2.1, 1x headset jack, 1x microSD, Wi-Fi 7, Bluetooth 5.4 | 2x USB-C (Thunderbolt 4), 2x USB-A, 1x HDMI 2.1, 1x headset jack, 1x microSD, Wi-Fi 7, Bluetooth 5.4 |
| Battery | 65Wh | 65Wh |
| Dimensions | 14 x 9.8 x 0.6 inch / 355 x 250 x 16mm | 14 x 9.8 x 0.6 inch / 355 x 250 x 16mm |
| Weight | 3.4lbs / 1.55kg | 3.4lbs / 1.55kg |

Acer Aspire 16 AI review: Design

- Brilliantly thin and light

- Not the sturdiest

- Touchpad issues

Thanks to its minimal design, the Aspire 16 AI has sleek looks. The low-shine metallic lid also adds to its elegance, befitting its premium price tag.

It’s pleasingly light and slender, too, making it more portable than you might expect for a 16-inch laptop. The bezel for the display is minuscule as well, which helps to maximize its full potential.

There’s a satisfying click when you close the lid on the Aspire 16 AI, something I haven’t encountered on any other laptop before. The hinge also allows the screen to recline all the way back to 180 degrees, something I’m always happy to see.

However, lid stability isn’t the best, as it’s prone to wobbling, although, thankfully, it remains stable while typing on the keyboard. The overall construction of the Aspire 16 AI isn’t especially impressive, either, with the chassis having a fair amount of flex.

Worse still, the touchpad in my review unit had a horrible rattle, as if some part was loose at the bottom section. It’s possible this issue is confined to my review unit alone – perhaps it had been passed around several journalists before it got to me – but the issue still doesn’t speak highly of its build quality or Acer's quality control.

There’s a varied selection of ports on the Aspire 16 AI, spread evenly across both sides. On the left are two USB-C ports, one USB-A port, and an HDMI port. However, I found it inconvenient that the USB-C ports are placed nearest to you, since one has to be used for the power adapter; I much prefer the thick cable for this to trail from the back of the laptop, rather than from the middle, as it does with the Aspire 16 AI.

On the right you’ll find another USB-A port, followed by a combo audio jack and a microSD card reader. It’s a shame the latter can’t accommodate standard SD card sizes, but this is a small grievance.

- Design: 3.5 / 5

Acer Aspire 16 AI review: Performance

- Good productivity and streaming performance

- Poor for gaming

- Useless AI features

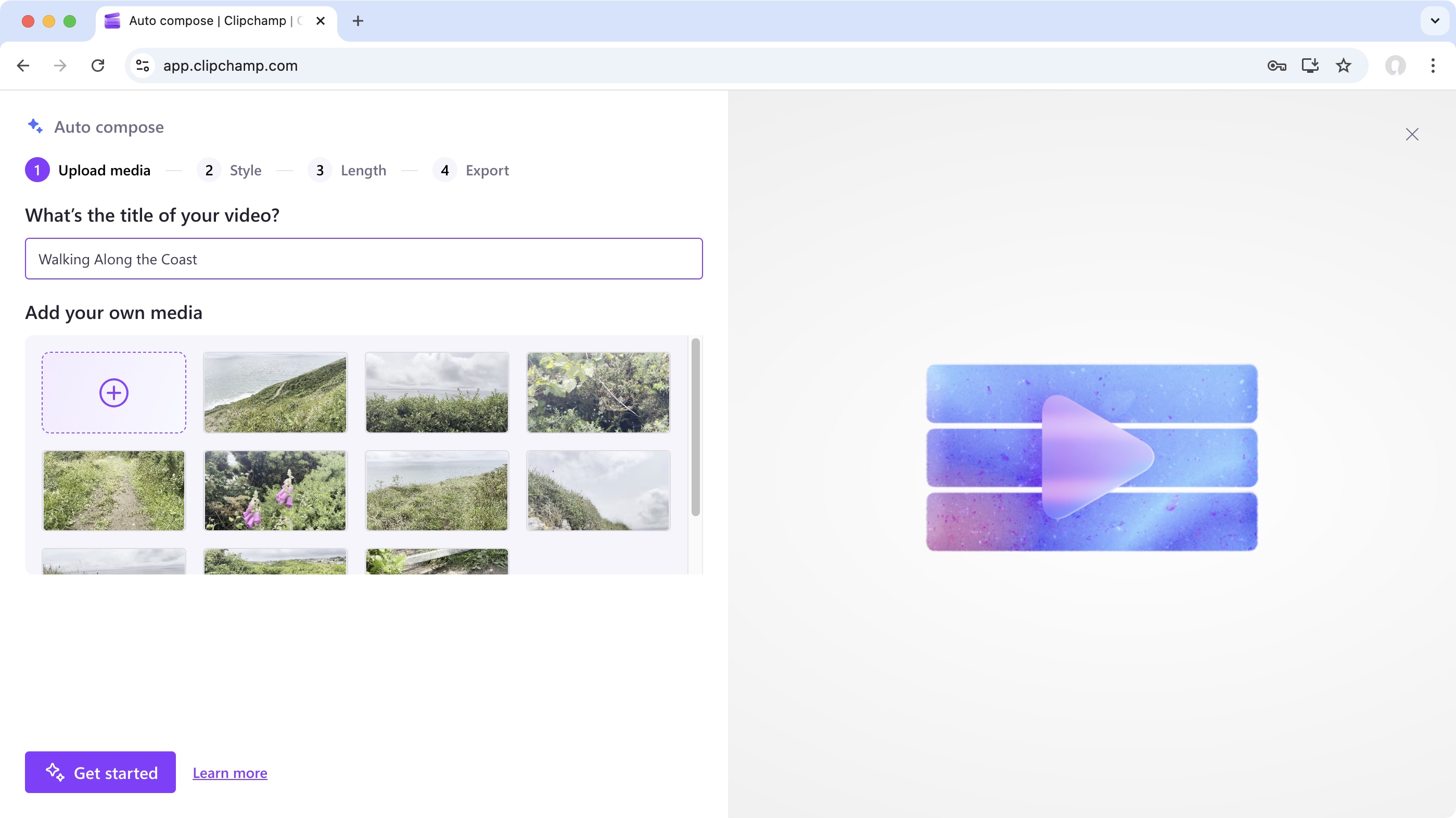

The Aspire 16 AI offers great general performance. It takes light productivity in its stride, from word processing to spreadsheet creation, and multiple browser tabs didn’t cause a problem for me, thanks to the 16GB of RAM in my review unit. Streaming 4K content is well within its grasp, too. I experienced little buffering or slowdown, which made for a largely seamless viewing experience.

However, despite what Acer claims, the gaming performance of the Aspire 16 AI is quite poor. With its shared memory, the AMD Radeon GPU didn’t handle AAA titles very well. When I played Cyberpunk 2077 with the default Ray Tracing: Low preset and resolution scaling set to Performance mode, I was getting 20fps on average – not what you’d call playable.

The best I could achieve with the game was about 38fps, but that was at the lowest possible graphics preset and the resolution dropped to 1080p. This at least made it playable, but if you’re expecting to get even moderately close to the performance of the best gaming laptops, you’ll be sorely disappointed.

During my playtime, the Aspire 16 AI generated a fair amount of heat. Fortunately, this was heavily concentrated underneath and at the back, thus steering clear of any parts you might actually touch. Fan noise is also pleasantly subdued.

As with the 14 AI, the AI features on the Aspire 16 AI are disappointing. The centerpiece appears to be Acer LiveSense, a photo editing and webcam suite with very basic functionality, not to mention a poor UI and frequent glitches.

For more AI features, you’ll have to download Acer Intelligence Space, the brand’s hub. Unlike when I tested the 14 AI, I managed to install it successfully. However, it didn’t get off to an auspicious start, as a dialog box warned me that I had insufficient memory resources, explaining that it needed 6.5GB free and a total of at least 16GB to run smoothly.

I proceeded anyway and was greeted with a clear user interface that revealed the various AI apps I could install. However, a large portion of them seemed to be incompatible with the Aspire 16 AI, and those that were compatible were once again very limited in their functionality.

On a more positive note, the 2K OLED display in my review unit was as clear and as vibrant as you might expect. The very shiny coating can cause prominent reflections, but these can be mitigated by the screen’s brightness values (especially if you disable the ‘change brightness based on content’ setting).

The keyboard feels premium, too, thanks to the subtle texture and tight fit of the keys themselves. They’re also light, tactile, and reasonably spaced, although perhaps not to the extent of other laptop keyboards. I didn’t find this aspect to be a problem when typing, but I did while gaming, as it made adopting the WASD position more uncomfortable for me.

At least the number pad doesn’t eat into the layout space. However, unlike many full-sized laptop keyboards I’ve experienced, it’s the number pad itself that feels cramped, with its keys being too narrow to be used easily. Another small but notable gripe I have with the keyboard is the absence of a right Control key, which can be frustrating when performing productivity tasks.

The touchpad performs well enough, with its large and smooth surface making for easy navigation. However, thanks to the aforementioned rattle in its bottom portion, clicks felt unpleasant. It can also get in the way while typing: on occasion, the base of my thumbs would move the cursor, although thankfully without registering clicks or taps.

- Performance: 3.5 / 5

Acer Aspire 16 AI review: Battery Life

- Average battery life

- 14 AI battery life much better

- Other rivals are better, too

The battery life of the Aspire 16 AI isn’t particularly impressive. It lasted just over nine hours in our movie playback test, which is a middling result. This is a far cry from the time achieved by the 14 AI, which lasted over twice as long, making the Aspire 16 AI even more disappointing by comparison.

What’s more, plenty of its rivals can beat this score, including the Microsoft Surface Laptop 13-inch, which managed over 17 hours, and the Asus TUF Gaming A16 Advantage Edition, which lasted 11 hours.

- Battery Life: 3.5 / 5

Should I buy the Acer Aspire 16 AI?

| Attributes | Notes | Rating |
|---|---|---|
| Value | Starting prices are low, but climb up the specs and the value starts to diminish. | 3 / 5 |
| Design | Build quality isn’t the best, but it’s impressively thin and light. It looks good, too. | 3.5 / 5 |
| Performance | Everyday tasks are dispatched without a hitch, but it can’t cope well with heavier demands, such as gaming. The display is very good, though. | 3.5 / 5 |
| Battery Life | Only average, and the smaller 14 AI absolutely obliterates it on this front. | 3 / 5 |
| Total | The Aspire 16 AI is a capable workhorse, but its poor GPU, underwhelming AI features, and suspect build quality result in a middling machine. | 3 / 5 |

Buy the Acer Aspire 16 AI if...

You want a large and bright display

The 16-inch OLED on my model looked great, and its high brightness can overcome its reflective nature.

You want something portable

Despite its large size, the Aspire 16 AI is impressively light and thin, making it easy to carry around.

Don't buy it if...

You’ll be running graphics-intensive apps

The Aspire 16 AI could barely handle AAA gaming at modest settings, saddled as it is with a shared memory GPU.

You want a super-sturdy machine

There’s plenty of flex in the body, and the seemingly broken touchpad on my particular unit was disconcerting.

Acer Aspire 16 AI review: Also Consider

Asus TUF Gaming A16 Advantage Edition

If you’re after more graphical power but don’t want to spend more for it, the TUF Gaming A16 Advantage Edition might be the solution. It comes equipped with an AMD Radeon RX 7600S GPU, which is capable of handling AAA titles smoothly, although you may have to forgo Ray Tracing. Read our full Asus TUF Gaming A16 Advantage Edition review.

Apple MacBook Air 13-inch (M4)

Unusually for an Apple product, this MacBook Air is actually a great budget pick if you’re after a powerful machine, which is why it ranks among the best laptops for video editing. Its sumptuous design and display are additional feathers in its creative cap. Read our full Apple MacBook Air 13-inch (M4) review.

How I tested the Acer Aspire 16 AI

- Tested for several days

- Used for various tasks

- Plentiful laptop reviewing experience

I tested the Aspire 16 AI for several days, during which time I used it for various tasks, from productivity and browsing to streaming and gaming.

I also ran our series of benchmark tests to assess its all-round performance more concretely, and played a movie on a continuous loop while unplugged to see how long its battery lasted.

I have been using laptops for decades, and have reviewed a large and varied selection of them too, ranging in their form factors, price points, and intended purposes.

- First reviewed: January 2026

- Read more about how we test