AMD Radeon RX 9070: Two-minute review

The AMD Radeon RX 9070 is a card that just might be saved by the economic chaos engulfing the GPU market right now.

With 'normal' price inflation rampant across every current-gen GPU, the RX 9070's price proposition might actually make it an appealing pick for gamers who are experiencing sticker shock while looking for the best graphics card for their next GPU upgrade.

That doesn't mean, unfortunately, that the AMD RX 9070 is going to be one of the best cheap graphics cards going, even by comparison with everything else that's launched since the end of 2024. With an MSRP of $549 / £529.99 / AU$1,229, the RX 9070 is still an expensive card, even if it's theoretically in line with your typical 'midrange' offering.

And, with the lack of an AMD reference card that might have helped anchor the RX 9070's price at Team Red's MSRP, you're going to pretty much be at the mercy of third-party manufacturers and retailers who can charge whatever they want for this card.

Comparatively speaking, though, even with price inflation, this is going to be one of the cheaper midrange GPUs of this generation. If you're comparing a bunch of different GPUs, this one is likely to be the cheapest graphics card made by either AMD or Nvidia right now (yes, that's even counting the RTX 5060 Ti, which is already selling for well above 150% of MSRP in many places).

Does that make this card worth the purchase? Well, that's going to depend on what you're being asked to pay for it. While it's possible to find RX 9070 cards at MSRP, they are rare, and so you're going to have to make a back-of-the-envelope calculation to see if this card is going to offer you the best value in your particular circumstance.

I'm fairly confident, however, that it will. Had I had the time to review this card when it first launched in March, I might have scored it lower based on its performance and price proximity to the beefier AMD Radeon RX 9070 XT.

Looking at both of those cards based on their MSRPs, there's no question that the RX 9070 XT is the much better graphics card, so I'd have recommended you spend the extra cash to get that card instead of this one.

Unfortunately, contrary to my hopes, the RX 9070 XT has been scalped almost as badly as the best Nvidia graphics cards of this generation, so that relatively small price difference on paper can be quite large in practice.

Given that reality, for most gamers, the RX 9070 is the best 1440p graphics card going, and it can even get you some solid 4K gaming performance for a lot less than you're likely to pay for the RX 9070 XT or a competing Nvidia card, even one from the last generation.

If you're looking at this card and the market has returned to sanity and MSRP pricing, then definitely consider going for the RX 9070 XT instead of this card. But barring that happy contingency, given where everything is right now with the GPU market, the RX 9070 is the best AMD graphics card for 1440p gaming, and offers some of the best bang for your (inflationary) buck you're likely to find today.

AMD Radeon RX 9070: Price & availability

- How much is it? MSRP is $549 / £529.99 / AU$1,229, but retail price will likely be higher

- When can you get it? The RX 9070 is available now

- Where is it available? The RX 9070 is available in the US, UK, and Australia

The AMD Radeon RX 9070 is available now in the US, UK, and Australia for an MSRP of $549 / £529.99 / AU$1,229, respectively, but the price you'll pay for this card from third-party partners and retailers will likely be higher.

Giving credit where it's due, the RX 9070 has the exact same MSRP as the AMD Radeon RX 7900 GRE, which you can argue the RX 9070 is replacing. It also matches the RTX 5070's MSRP, and as I'll get into in a bit, for gaming performance, the RX 9070 offers a better value at MSRP.

Given how the RTX 5070 can rarely be found at MSRP, the RX 9070 is in an even stronger position compared to its competition.

- Value: 4 / 5

AMD Radeon RX 9070: Specs

- PCIe 5.0

- 16GB VRAM

- Specs & features: 4 / 5

AMD Radeon RX 9070: Design & features

- No AMD reference card

- Will be good for SFF cases

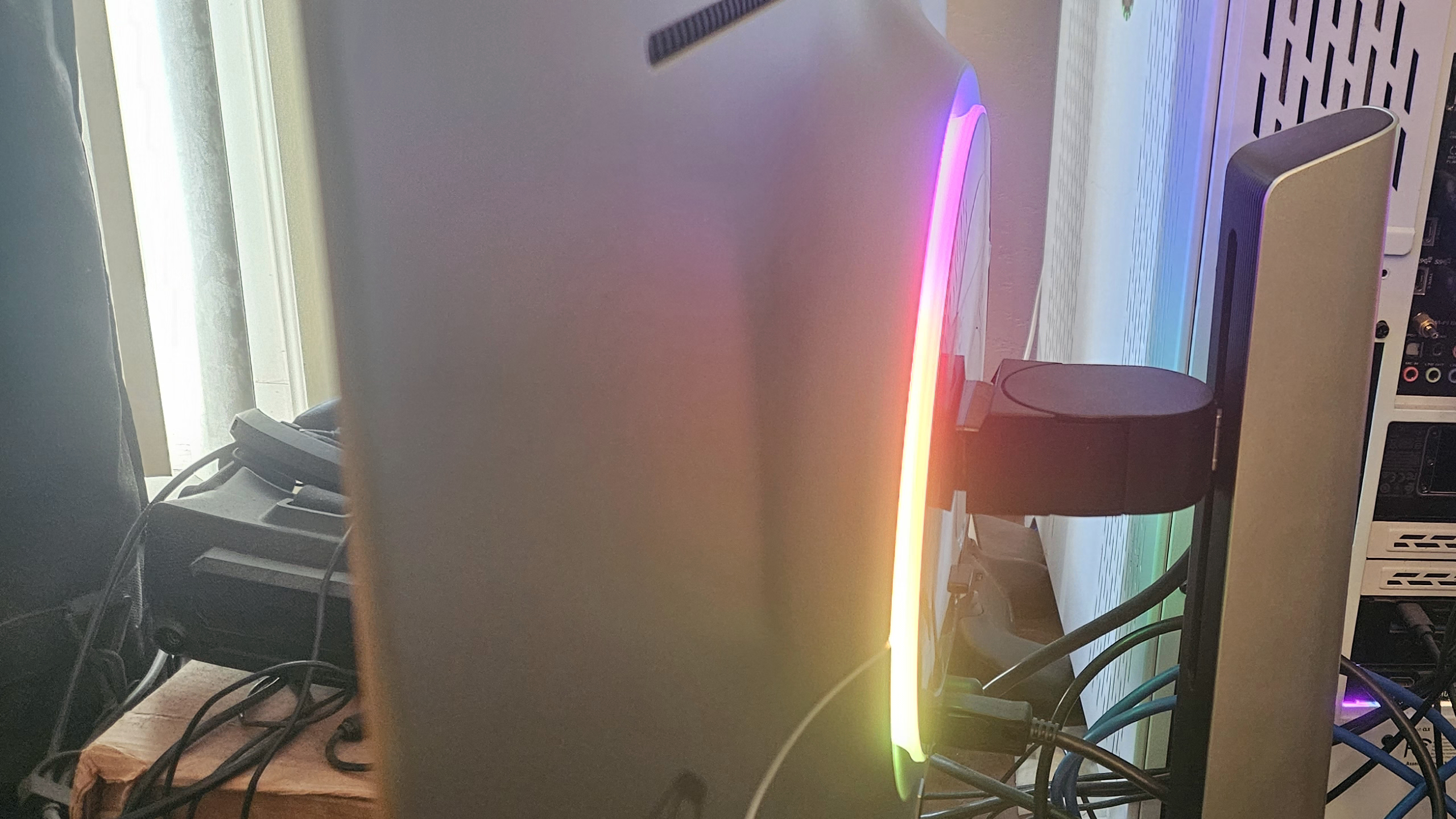

In terms of design, the RX 9070 doesn't have a reference card, so the card I reviewed is the Sapphire Pulse Radeon RX 9070.

This card, in particular, is fairly straightforward with few frills, but for those who don't want a whole lot of RGB lighting in their PC, this is more of a positive than a negative. RGB fans, however, will have to look at other AMD partner cards for their fix.

The card is a noticeably shorter dual-fan design compared to the longer triple-fan RX 9070 XT cards. That makes the RX 9070 a great option for small form factor PC cases.

- Design: 3.5 / 5

AMD Radeon RX 9070: Performance

- About 13% slower than RX 9070 XT

- Outstanding 1440p gaming performance

- Decent 4K performance

The charts shown below offer the most recent data I have for the cards tested for this review. They may change over time as more card results are added and cards are retested. The 'average of all cards tested' includes cards not shown in these charts for readability purposes.

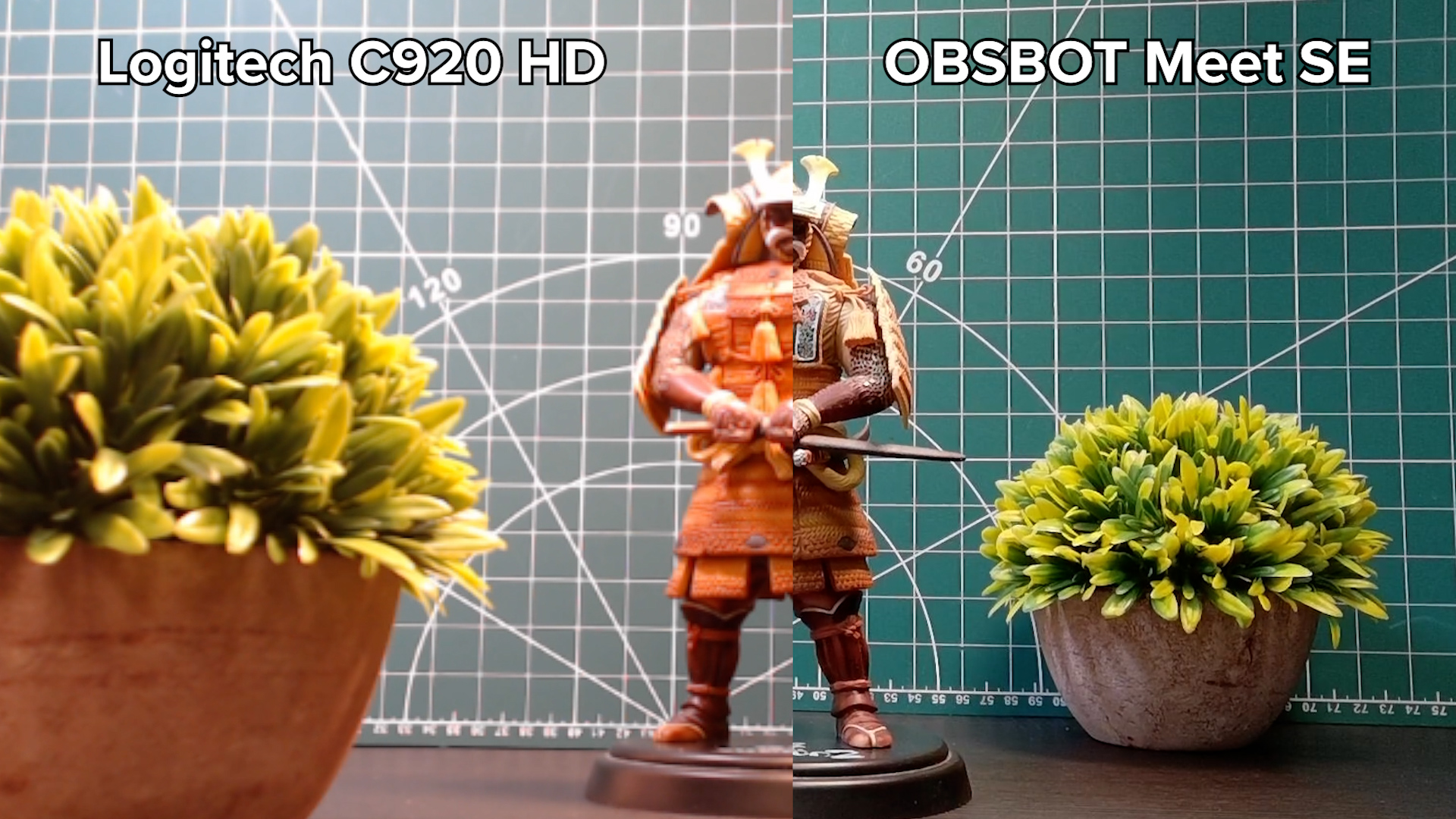

When it comes down to performance, the RX 9070 is a very strong graphics card that is somewhat overshadowed by its beefier 9070 XT sibling, but goes toe-to-toe against the RTX 5070 where it counts for most users, which is gaming.

On the synthetic side, the RX 9070 puts up some incredibly solid numbers, especially in pure rasterization workloads like 3DMark Steel Nomad, beating out the RTX 5070 by 13%. In ray tracing-heavy workloads like 3DMark Speed Way, meanwhile, the RX 9070 manages about 95% of the RTX 5070's performance.

As expected though, the RX 9070's creative performance isn't able to keep up with Nvidia's competing RTX 5070, especially in 3D modeling workloads like Blender. If you're looking for a cheap creative workstation GPU, you're going to want to go for the RTX 5070, no question.

But that's not really what this card is about. AMD cards are gaming cards through and through, and as you can see above, at 1440p, the RX 9070 goes blow for blow with Nvidia's midrange card so that the overall average FPS at 1440p is 114 against Nvidia's 115 FPS average (72 FPS to 76 FPS average minimums/1%, respectively).

Likewise, at 4K, the two cards are effectively tied, with the RX 9070 holding a slight 2 FPS edge over the RTX 5070, on average (50 FPS to 51 FPS minimum/1%, respectively).

Putting it all together, one thing in the Nvidia RTX 5070's favor is that it is able to tie things up with the RX 9070 while drawing about 26 fewer watts under load (the RX 9070's 284W maximum power draw to the RTX 5070's 258W).

That's not the biggest difference, but even 26W extra power can mean the difference between needing to replace your PSU or sticking with the one you have.
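To see how a 26W gap can tip a borderline build, here is a rough Python sketch of a PSU headroom check. The 284W and 258W figures are the maximum power draws quoted above; the 650W PSU, the 250W rest-of-system estimate, and the 20% safety margin are hypothetical inputs chosen purely for illustration, so swap in your own numbers.

```python
# Maximum GPU power draw under load, as measured in this review.
RX_9070_MAX_W = 284
RTX_5070_MAX_W = 258


def psu_headroom(psu_watts, rest_of_system_watts, gpu_watts, margin_pct=20):
    """Return the watts left over after reserving a safety margin.

    margin_pct is the share of PSU capacity kept in reserve (an assumed
    rule of thumb, not a manufacturer specification).
    A negative result means the build exceeds the comfortable budget.
    """
    usable_watts = psu_watts * (100 - margin_pct) / 100
    return usable_watts - rest_of_system_watts - gpu_watts


# Hypothetical build: 650W PSU, roughly 250W for CPU, drives, fans, etc.
rx_headroom = psu_headroom(650, 250, RX_9070_MAX_W)
rtx_headroom = psu_headroom(650, 250, RTX_5070_MAX_W)

print(f"RX 9070 headroom:  {rx_headroom:+.0f}W")
print(f"RTX 5070 headroom: {rtx_headroom:+.0f}W")
```

In this made-up scenario the RTX 5070 fits inside the reserved budget while the RX 9070 lands just outside it, which is the kind of edge case where the extra 26W matters. With a larger PSU, both cards clear the bar comfortably.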

Under normal conditions, I'd argue that this would swing things in favor of Nvidia's GPU, but the GPU market is hardly normal right now, and so what you really need to look at is how much you're being asked to pay for either of these cards. Chances are, you're going to be able to find an RX 9070 for a good bit cheaper than the RTX 5070, and so its value to you in the end is likely going to be higher.
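If you want to run that comparison yourself, a minimal Python sketch of a cost-per-frame calculation might look like the following. The 1440p average frame rates (114 FPS and 115 FPS) come from my testing above; the street prices are hypothetical placeholders, not real listings, so plug in whatever retailers are actually charging when you shop.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    avg_fps_1440p: float      # average FPS at 1440p from this review
    street_price_usd: float   # hypothetical price; replace with a real listing

    @property
    def cost_per_frame(self) -> float:
        """Dollars paid per average frame per second (lower is better)."""
        return self.street_price_usd / self.avg_fps_1440p


def break_even_price(card_fps: float, rival_fps: float, rival_price: float) -> float:
    """Highest price at which a card still matches its rival's cost per frame."""
    return rival_price * card_fps / rival_fps


# Street prices below are illustrative assumptions only.
rx_9070 = Card("RX 9070", avg_fps_1440p=114, street_price_usd=600)
rtx_5070 = Card("RTX 5070", avg_fps_1440p=115, street_price_usd=650)

for card in (rx_9070, rtx_5070):
    print(f"{card.name}: ${card.cost_per_frame:.2f} per frame")

limit = break_even_price(rx_9070.avg_fps_1440p,
                         rtx_5070.avg_fps_1440p,
                         rtx_5070.street_price_usd)
print(f"RX 9070 is the better value below ${limit:.2f}")
```

Because the two cards are within 1 FPS of each other at 1440p, the break-even price sits only a few dollars below whatever the RTX 5070 costs, so in practice the cheaper listing wins.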

- Performance: 4.5 / 5

Should you buy the AMD Radeon RX 9070?

Buy the AMD Radeon RX 9070 if...

You want a fantastic 1440p graphics card

The RX 9070 absolutely chews through 1440p gaming with frame rates that can fully saturate most 1440p gaming monitors' refresh rates.

You don't want to spend a fortune on a midrange GPU

While the RX 9070 isn't cheap, necessarily, it's among the cheapest midrange cards you can get, even after factoring in scalping and price inflation.

Don't buy it if...

You want great creative performance

While the RX 9070 is a fantastic gaming graphics card, its creative performance (especially for 3D modeling work) lags behind Nvidia midrange cards.

Also consider

AMD Radeon RX 9070 XT

The RX 9070 XT is an absolute barnburner of a gaming GPU, offering excellent 4K performance and even better 1440p performance, especially if you can get it close to MSRP.

Read the full AMD Radeon RX 9070 XT review

Nvidia GeForce RTX 5070

The RTX 5070 essentially ties the RX 9070 in gaming performance at 1440p and 4K, but has better power efficiency and creative performance.

Read the full Nvidia GeForce RTX 5070 review

How I tested the AMD Radeon RX 9070

- I spent about two weeks with the RX 9070

- I used my complete GPU testing suite to analyze the card's performance

- I tested the card in everyday, gaming, creative, and AI workload usage

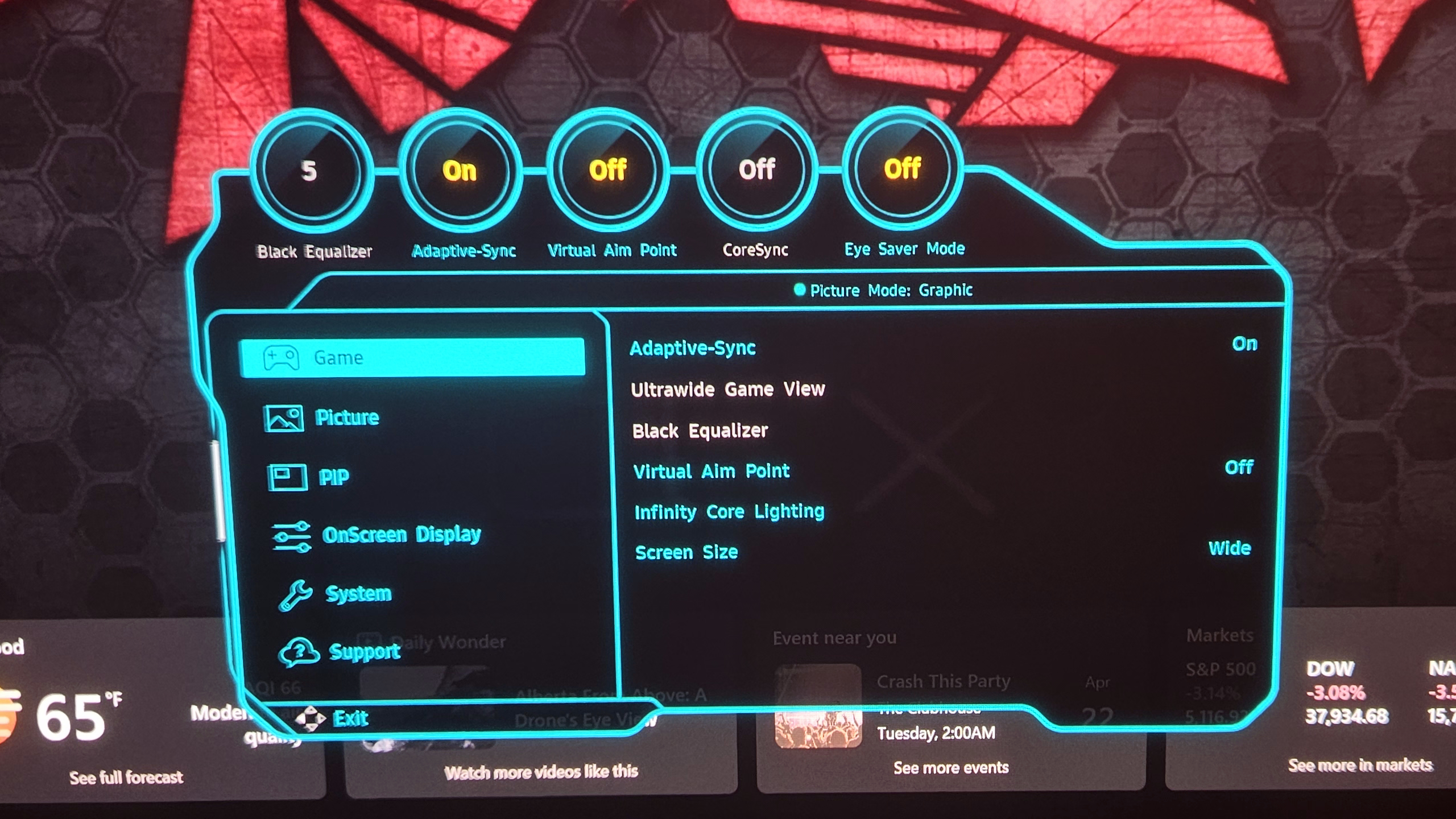

Here are the specs on the system I used for testing:

Motherboard: ASRock Z790i Lightning WiFi

CPU: Intel Core i9-14900K

CPU Cooler: Gigabyte Aorus Waterforce II 360 ICE

RAM: Corsair Dominator DDR5-6600 (2 x 16GB)

SSD: Samsung 9100 Pro 4TB SSD

PSU: Thermaltake Toughpower PF3 1050W Platinum

Case: Praxis Wetbench

I spent about two weeks with the AMD RX 9070, using it as my primary workstation GPU for creative work and gaming after hours.

I used my updated benchmarking process, which includes using built-in benchmarks on the latest PC games like Black Myth: Wukong, Cyberpunk 2077, and Civilization VII. I also used industry-standard benchmark tools like 3DMark for synthetic testing, while using tools like PugetBench for Creators and Blender Benchmark for creative workload testing.

I've reviewed more than three dozen graphics cards for TechRadar over the past three years, which has included hundreds of hours of dedicated GPU testing, so you can trust that I'm giving you the fullest picture of a graphics card's performance in my reviews.

- Originally reviewed May 2025