I won’t have the Acer Veriton GN100 for long, so this is more of a hands-on discussion than a full review.

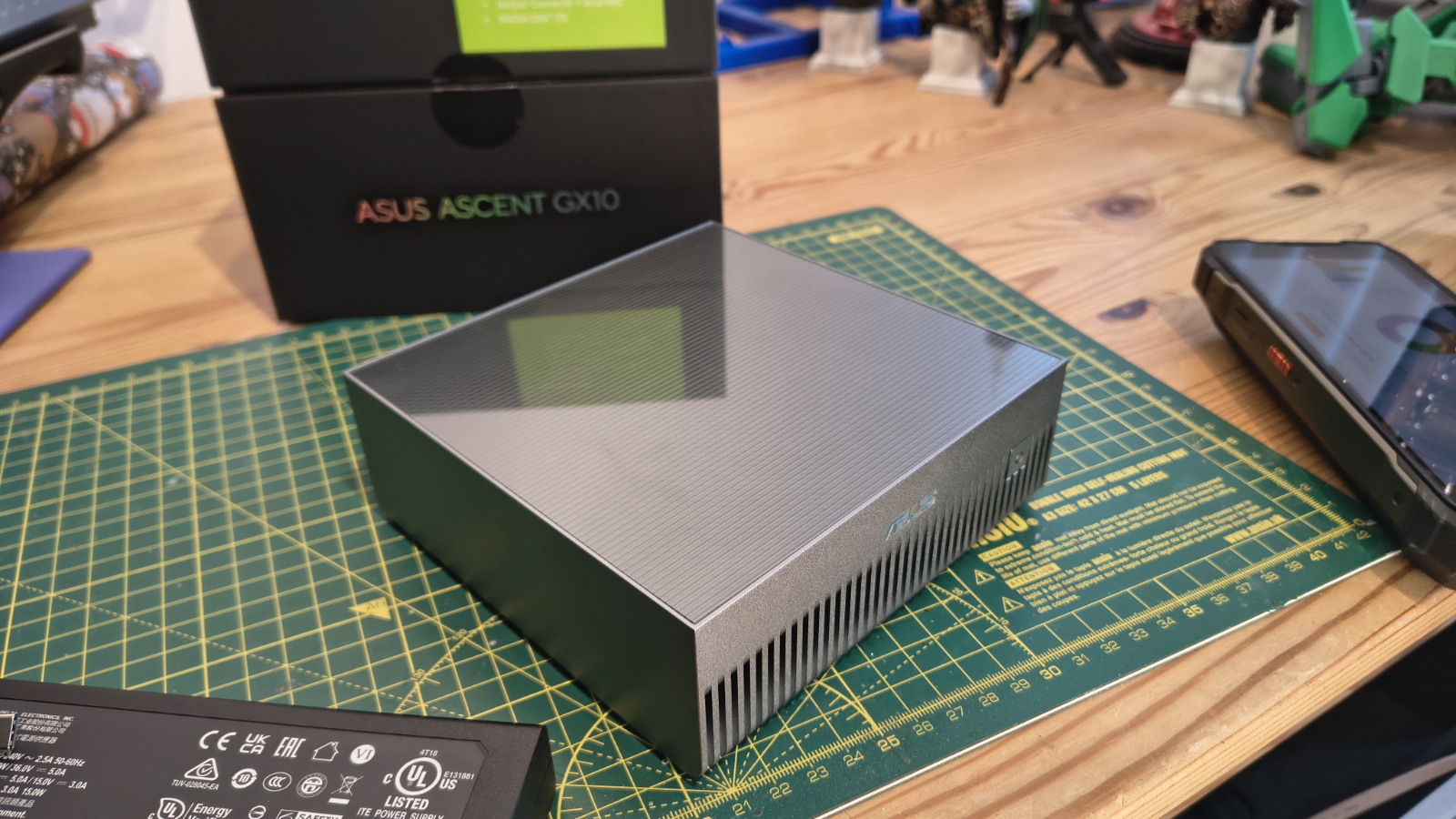

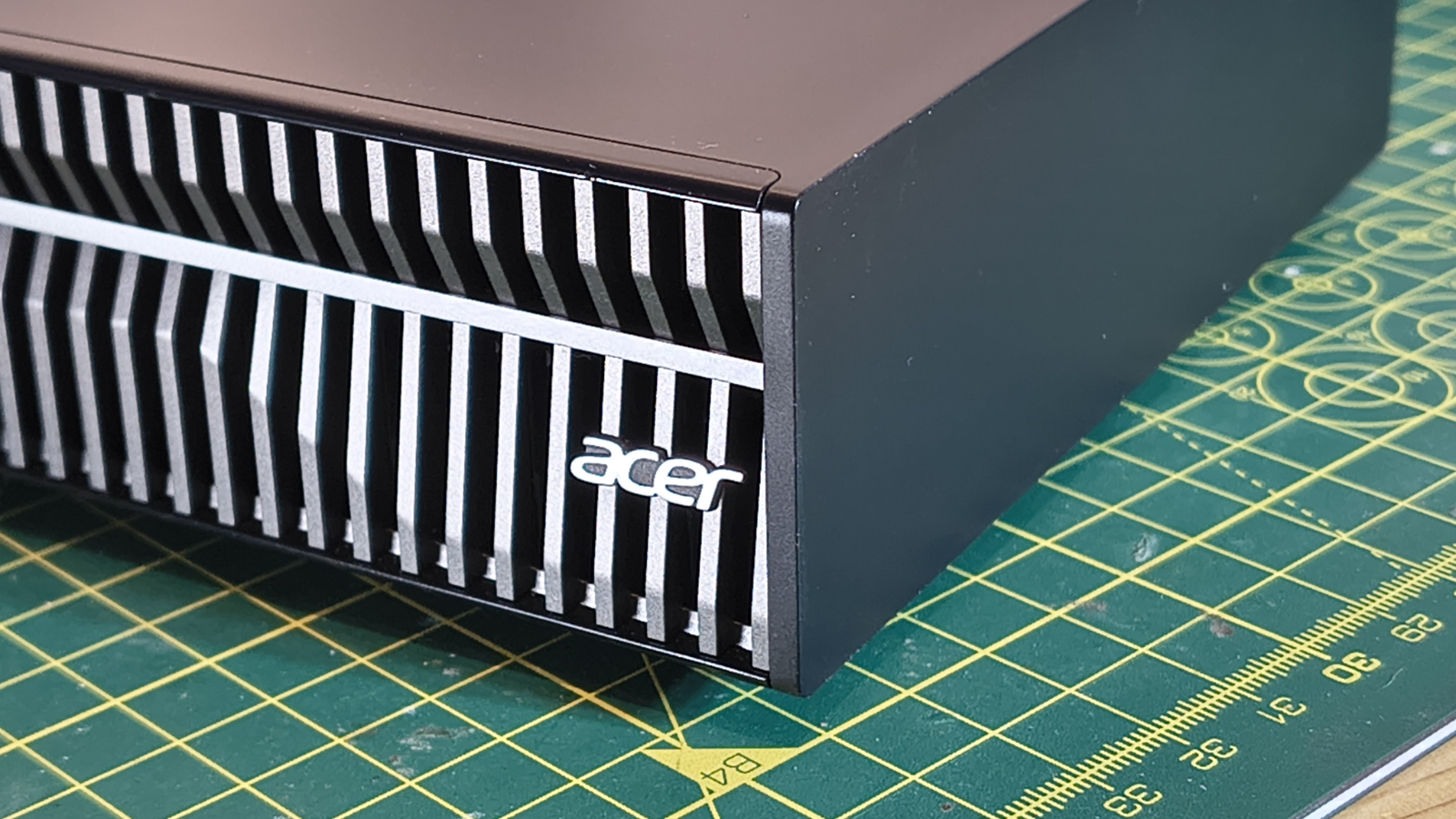

My first reaction to the Acer Veriton GN100, out of the box, is that it all seems remarkably familiar: an elegant mini-PC-style case with a car-grille aesthetic, and a selection of USB-C ports alongside a 10GbE LAN port and the mercurial NVIDIA ConnectX-7 SmartNIC.

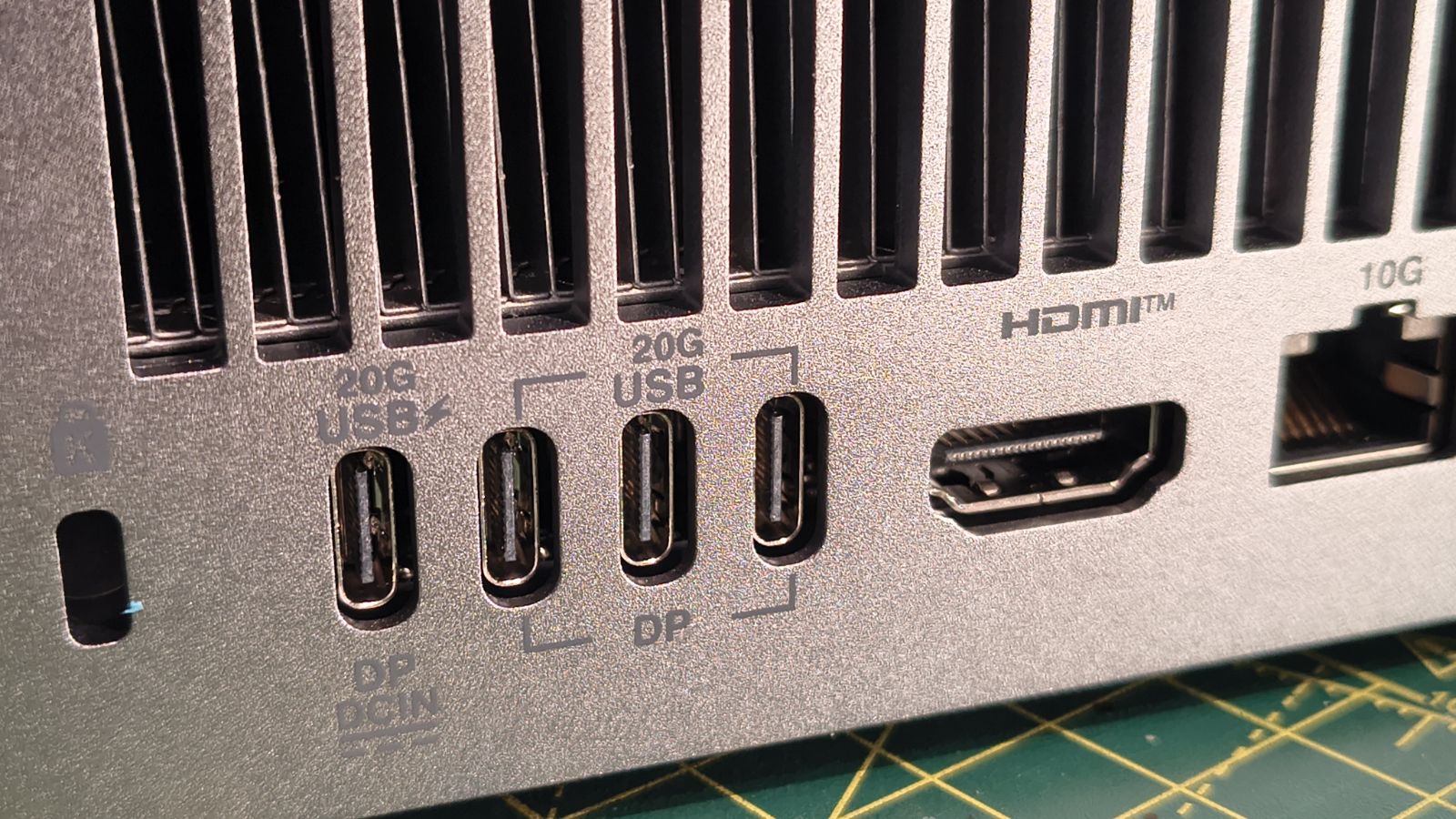

While it’s physically a little smaller, the ports on this machine are identical to those on the ASUS Ascent GX10, as both brands followed Nvidia’s Blackwell system plan exactly.

The only significant difference is that, whereas the ASUS provided access to its single installed M.2 NVMe drive, the Acer Veriton GN100 is an entirely sealed unit whose storage can’t be replaced or upgraded.

Inside is the same hardware as the Nvidia DGX Spark Personal AI Supercomputer, built around integrated silicon that pairs an ARM v9.2-A CPU with a Blackwell GPU. Combined with 128GB of LPDDR5x memory and 4TB of NVMe storage, this is collectively called the GB10 platform.

This is a remarkably powerful platform that has uses in data science, medical image analysis, robotics and AI model development. To be clear, this isn’t a Windows PC, and an understanding of Linux is required to use it.

As the specification suggests, this isn’t an inexpensive item, costing $2999 for the US-supplied hardware but £3999.99 in the UK. For those who want a highly compact and efficient development environment, especially for AI, the Acer Veriton GN100 is an option, but it isn’t the only machine available using the same platform.

Acer Veriton GN100: Price and availability

- How much does it cost? From $3000, £4000

- When is it out? Available now

- Where can you get it? Available from Acer and online retailers

To avoid any confusion about specifications, Acer decided there would be only one SKU of the Veriton GN100, with 128GB of LPDDR5 and 4TB of storage.

Inexplicably, it costs £3999.99 direct from Acer in the UK, but only $2999.99 from Acer in the US. Why do we pay 82% more for an identical product, Acer, when the UK doesn’t impose tariffs on Taiwanese goods?
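The size of that premium depends on the exchange rate used and on whether tax is included: UK retail prices include 20% VAT, whereas US list prices exclude state sales tax. The sketch below is a rough calculation, not Acer’s pricing logic, and the 1.35 GBP-to-USD rate is an assumption to replace with the current rate. At that rate it gives a headline premium of about 80%, falling to roughly 50% once VAT is stripped out.

```python
# Rough UK-vs-US price premium for the Veriton GN100.
# Assumption: 1 GBP = 1.35 USD; substitute the current exchange rate.
GBP_TO_USD = 1.35
UK_VAT_RATE = 0.20  # UK retail prices include 20% VAT

UK_PRICE_GBP = 3999.99  # Acer UK direct price
US_PRICE_USD = 2999.99  # Acer US direct price, before state sales tax


def uk_premium_percent(uk_gbp: float, us_usd: float, rate: float,
                       strip_vat: bool = False) -> float:
    """Percentage by which the UK price exceeds the US price, in USD terms."""
    gbp = uk_gbp / (1 + UK_VAT_RATE) if strip_vat else uk_gbp
    return (gbp * rate / us_usd - 1) * 100


headline = uk_premium_percent(UK_PRICE_GBP, US_PRICE_USD, GBP_TO_USD)
ex_vat = uk_premium_percent(UK_PRICE_GBP, US_PRICE_USD, GBP_TO_USD, strip_vat=True)
print(f"Headline premium: {headline:.0f}%")
print(f"Premium excluding VAT: {ex_vat:.0f}%")
```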

Also, this product doesn’t seem to be available elsewhere, so finding it cheaper on Amazon, for example, isn’t currently possible.

The alternatives built around the same platform are the ‘founders edition’ Nvidia DGX Spark Personal AI Supercomputer, ASUS Ascent GX10, Gigabyte AI TOP ATOM Desktop Supercomputer, and MSI EdgeXpert Desktop AI Supercomputer.

The Nvidia DGX Spark Personal AI Supercomputer, as the originator modestly calls it, undercuts the Veriton GN100 in the UK and costs £3699.98 for a system with 128GB of RAM and 4TB of storage. But it’s more expensive for US customers, costing $3999 on Amazon.com.

The ASUS Ascent GX10 price on Amazon.com is $3088.94 for the 1TB storage SKU (GX10-GG0015BN), and $4,149.99 for the 4TB storage model (GX10-GG0016BN).

Even with the current price of M.2 modules, that is a remarkable price hike for the extra storage capacity.
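To put a number on that hike, the extra cost per additional terabyte is the price difference between the two SKUs divided by the capacity difference. A minimal sketch using the ASUS Amazon.com prices quoted above, which works out at roughly $354 for each extra terabyte:

```python
def extra_cost_per_tb(small_price: float, small_tb: int,
                      large_price: float, large_tb: int) -> float:
    """Price difference between two SKUs divided by the extra terabytes."""
    if large_tb <= small_tb:
        raise ValueError("large_tb must exceed small_tb")
    return (large_price - small_price) / (large_tb - small_tb)


# ASUS Ascent GX10 on Amazon.com: 1TB (GX10-GG0015BN) vs 4TB (GX10-GG0016BN)
per_tb = extra_cost_per_tb(3088.94, 1, 4149.99, 4)
print(f"Extra cost per additional TB: ${per_tb:.2f}")
```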

For UK customers, the 1TB ASUS Ascent GX10 model price is £3713.02, but I found it via online retailer SCAN for a tempting £2799.98. SCAN also carries a 2TB option for £3199.99 and the 4TB model for £3638.99.

The Gigabyte AI TOP ATOM Desktop Supercomputer 4TB model sells for £3479.99 from SCAN in the UK, and can be found on Amazon.com for $3999.

And the final model with the same spec as most is the MSI EdgeXpert Desktop AI Supercomputer, selling for £3,598.99 from SCAN in the UK, and $3998.01 on Amazon.com for US customers.

The conclusion is that the US price is difficult to beat, whereas the European pricing is wildly out of line with what competitors are charging for this technology.
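That conclusion can be checked mechanically by ranking the 4TB prices quoted in this section for each market. The figures are copied from the text above and will date quickly, and the `rank` helper is purely illustrative. On these numbers the GN100 comes out cheapest of five in the US and dearest of five in the UK.

```python
# 4TB prices quoted in this section (GBP from UK sources, USD from US sources).
UK_4TB_GBP = {
    "Acer Veriton GN100": 3999.99,
    "Nvidia DGX Spark": 3699.98,
    "ASUS Ascent GX10": 3638.99,
    "Gigabyte AI TOP ATOM": 3479.99,
    "MSI EdgeXpert": 3598.99,
}
US_4TB_USD = {
    "Acer Veriton GN100": 2999.99,
    "Nvidia DGX Spark": 3999.00,
    "ASUS Ascent GX10": 4149.99,
    "Gigabyte AI TOP ATOM": 3999.00,
    "MSI EdgeXpert": 3998.01,
}


def rank(prices: dict[str, float], model: str) -> int:
    """1-based position of `model` when sorted from cheapest to dearest."""
    return sorted(prices, key=prices.get).index(model) + 1


for market, prices in (("UK", UK_4TB_GBP), ("US", US_4TB_USD)):
    position = rank(prices, "Acer Veriton GN100")
    print(f"{market}: GN100 is #{position} of {len(prices)} (1 = cheapest)")
```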

Acer Veriton GN100: Specs

| Item | Spec |
|---|---|
| CPU | ARM v9.2-A CPU (GB10) (20 ARM cores: 10 Cortex-X925, 10 Cortex-A725) |
| GPU | NVIDIA Blackwell GPU (GB10, integrated) |
| RAM | 128GB LPDDR5x, unified system memory |
| Storage | 4TB M.2 NVMe PCIe 4.0 SSD |
| Expansion | N/A |
| Ports | 3x USB 3.2 Gen 2x2 Type-C, 20Gbps, alternate mode (DisplayPort 2.1); 1x USB 3.2 Gen 2x2 Type-C with PD in (180W EPR, PD 3.1 spec); 1x HDMI 2.1; 1x NVIDIA ConnectX-7 SmartNIC |
| Networking | 10GbE LAN, AW-EM637 Wi-Fi 7 (Gig+), Bluetooth 5.4 |
| OS | Nvidia DGX OS (Ubuntu Linux) |
| PSU | 48V 5A 240W |
| Dimensions | 150 x 150 x 50.5 mm |
| Weight | 1.2kg |

Acer Veriton GN100: Design

- Oversized NUC

- ConnectX-7 scalability

- Zero internal access

While the GN100 looks like an oversized NUC mini PC, at 1.2kg, it's heavy, although it is lighter than the ASUS Ascent GX10 by over 200g.

In order to drive the monster silicon inside, Acer included a Delta-made PSU that’s rated to 240W over USB-C.

All the ports are on the back of this system; the front carries nothing but some visual styling and the Acer logo, not even the power button.

As on the ASUS Ascent GX10, these comprise four USB-C ports (one of which the PSU requires), a single 10GbE LAN port and a single HDMI 2.1 video output.

This arrangement supports one monitor over HDMI and additional monitors over the USB 3.2 Gen 2x2 ports in DP Alt mode, although one of those ports is needed exclusively to power the unit.

Nvidia’s choice of USB 3.2 rather than USB4 seems curious, since the models and data processed on this unit will eventually need to make it somewhere else, and the best conventional networking on offer is 10GbE, which equals roughly 900MB/s transfer speeds.
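To see why the link speed matters, here is a back-of-the-envelope transfer-time calculation. It assumes decimal units (1GB = 1,000MB), uses the 900MB/s real-world figure above for 10GbE, and uses theoretical line rates (bits divided by eight, ignoring encoding and protocol overhead) for the USB links. The 100GB model size is hypothetical.

```python
# Back-of-the-envelope transfer times, using decimal units (1GB = 1,000MB).
def line_rate_mb_s(gbps: float) -> float:
    """Theoretical MB/s for a link quoted in Gb/s (divide bits by eight)."""
    return gbps * 1000 / 8


def transfer_seconds(size_gb: float, rate_mb_s: float) -> float:
    """Seconds to move size_gb at a sustained rate_mb_s."""
    return size_gb * 1000 / rate_mb_s


MODEL_SIZE_GB = 100  # hypothetical model checkpoint size

links = {
    "10GbE (real-world figure)": 900,
    "USB 3.2 Gen 2x2 (20Gb/s, theoretical)": line_rate_mb_s(20),
    "USB4 (40Gb/s, theoretical)": line_rate_mb_s(40),
}
for name, rate in links.items():
    print(f"{name}: {transfer_seconds(MODEL_SIZE_GB, rate):.0f} s")
```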

And for those working on the hardware, the lack of any USB-A ports for mice or keyboards looks a bit silly.

However, this hardware is intended to be used ‘headless’ using a remote console, so perhaps that isn’t an issue in the greater scheme of things.

Where this design sheds any resemblance to PC hardware is with the inclusion of a ConnectX-7 Smart NIC, a technology acquired by Nvidia when it bought Mellanox Technologies Ltd, an Israeli-American multinational supplier of computer networking products based on InfiniBand and Ethernet.

In this context, ConnectX-7 is like the annoying SLI bridge cables that Nvidia used to make video cards work collectively, back when it cared about video cards. Except that the bandwidth ConnectX-7 can carry is substantially greater.

The port has two receptacles, each capable of 100Gb/s, allowing 200Gb/s to flow between the GN100 and a second unit. That doubles the size of model the platform can handle, from 200 billion parameters on a single machine to 400 billion when paired with another.
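Those parameter counts follow from memory arithmetic. At FP4, each parameter takes half a byte, so a 200-billion-parameter model needs about 100GB for its weights, which fits in one unit’s 128GB, while 400 billion parameters need about 200GB spread across two units. The sketch below counts weights only and ignores the KV cache, activations and operating-system overhead, so real headroom is smaller.

```python
import math

UNIT_MEMORY_GB = 128  # unified LPDDR5x per GN100


def weights_gb(params: float, bits_per_param: int) -> float:
    """Memory for the model weights alone, in decimal GB."""
    return params * bits_per_param / 8 / 1e9


def units_needed(params: float, bits_per_param: int,
                 memory_gb: float = UNIT_MEMORY_GB) -> int:
    """Minimum number of linked units whose memory could hold the weights.

    Simplification: ignores KV cache, activations and OS overhead.
    """
    return math.ceil(weights_gb(params, bits_per_param) / memory_gb)


for params in (200e9, 400e9):
    for bits in (16, 8, 4):
        print(f"{params / 1e9:.0f}B params @ {bits}-bit: "
              f"{weights_gb(params, bits):.0f} GB, "
              f"{units_needed(params, bits)} unit(s)")
```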

Acer Veriton GN100: Features

- ARM 20-core CPU

- Grace Blackwell GB10

- AI platforms compared

The Nvidia GB10 Grace Blackwell Superchip marks a notable advancement in AI hardware, developed by Nvidia in collaboration with MediaTek and built on ARM CPU cores. It arises from the growing need for specialised computing platforms to keep pace with the rapid development and deployment of artificial intelligence models. Unlike a typical PC, the GB10 is designed around the ARM v9.2-A architecture, incorporating 20 ARM cores (10 Cortex-X925 and 10 Cortex-A725). This reflects a wider industry move towards ARM-based options, which are more power-efficient than PC processors and potentially more scalable for AI tasks.
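On arm64 Linux, `/proc/cpuinfo` reports a `CPU part` identifier for each core, so one way to see the split between the two core types is to count how many cores report each identifier. This is a sketch: it doesn’t map the hex identifiers to core names, and on a GB10 the expectation from the spec sheet would be two identifiers with ten cores each.

```python
from collections import Counter
from pathlib import Path


def count_core_types(cpuinfo_text: str) -> Counter:
    """Count cores per 'CPU part' identifier in /proc/cpuinfo text."""
    parts: Counter = Counter()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "CPU part":
            parts[value.strip()] += 1
    return parts


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():  # only present on Linux
        for part, n in count_core_types(path.read_text()).items():
            print(f"CPU part {part}: {n} core(s)")
```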

The capabilities of the GB10 are impressive. It combines a robust Nvidia Blackwell GPU with the ARM CPU, achieving up to a petaFLOP of AI performance with FP4 precision. This level of power is especially suitable for training and inference of large language models and diffusion models, which are fundamental to much of today’s generative AI. The system is further supported by 128GB of unified LPDDR5x memory, enabling it to handle demanding AI tasks efficiently.

The caveat to all this power and memory is that PC architectures aren’t designed to exploit them effectively, and Microsoft Windows memory management has long been an issue.

Therefore, to be efficient and communicate effectively with other nodes, the GB10 needs NVIDIA’s DGX OS, a customised version of Ubuntu Linux, to harness the platform’s power and handle multi-node communications.

As I already mentioned, the GB10 delivers up to 1 petaFLOP at FP4 precision, ideal for quantised AI workloads. But that is still less than the multi-petaFLOP performance of NVIDIA’s flagship data centre chips, the Blackwell B200 or GB200.

However, where it goes toe-to-toe is power efficiency, since this node consumes only around 140W, far less than the Blackwell B200, which can draw between 1000W and 1200W per GPU. The GB200 combines two B200 chips and a Grace CPU, and its power demand can bloom to 2,700W. Those systems might offer up to 20 petaFLOPs of performance, but at around 19 times the power.
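Using the figures in this section, a rough performance-per-watt comparison shows why "toe-to-toe" is fair: about 7.1 TFLOPs per watt for the GB10 against about 7.4 for the GB200. These are peak FP4 numbers as quoted here, not measured results, and real-world efficiency will differ.

```python
# Peak FP4 throughput and power as quoted in this section (not measurements).
SYSTEMS = {
    "GB10 (GN100)": {"pflops": 1, "watts": 140},
    "GB200": {"pflops": 20, "watts": 2700},
}


def tflops_per_watt(pflops: float, watts: float) -> float:
    """Convert petaFLOPs to teraFLOPs and divide by power draw."""
    return pflops * 1000 / watts


for name, s in SYSTEMS.items():
    print(f"{name}: {tflops_per_watt(s['pflops'], s['watts']):.2f} TFLOPs/W")

power_ratio = SYSTEMS["GB200"]["watts"] / SYSTEMS["GB10 (GN100)"]["watts"]
print(f"GB200 draws about {power_ratio:.1f}x the power")
```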

The balance here is that the GN100 can sit on your desk without needing any special services or environment, whereas the datacentre hardware needs a specialist location and services to ensure it doesn’t overheat or cause the local electricity network to fail.

In this respect, the GN100 and its counterparts represent the more realistic end of the AI wedge, but how useful they can be is dependent on what you are attempting to do, and if this much power is enough for your purposes.

Acer Veriton GN100: AI Reality Check

In my prior Asus GX10 coverage, I talked at length about AI, and how there are lots of people making a bet that it is the next big thing, and others who are much more critical of the technology and how it's developing.

I’m not going to rehash the obvious flaws of AI, or the lack of a path to address them, but I would strongly recommend researching before starting any AI endeavour, to avoid creating expectations that can’t be met with current technology, or with the power in this physically small computer.

What I can say is that recent AI releases have substantially improved over previous generations, but access to these advanced models, like GPT-5.3-Codex and Claude 4.0, is ringfenced for paid subscribers using the cloud.

Obviously, the beauty of a device like the GN100 is that you can download open-weight models and run them on your own hardware, even if getting the most out of them requires an Internet connection to source information.

For those interested, running a coding model locally on this hardware means installing Tailscale and a local inference engine like Ollama, then pulling the model to the GN100 using the appropriate commands. GPT-5.3-Codex itself is cloud-only, so locally this means an open-weight alternative such as OpenAI’s gpt-oss. You can then open Open WebUI from another system, ideally, and use the model.

For anyone familiar with Linux, none of this is especially taxing. But to make it even easier, the ChatGPT team (or is that the AI?) has made a Codex App that does most of the legwork for you.
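Once Ollama is running on the GN100, other machines can also talk to it directly over its REST API rather than through Open WebUI. The sketch below posts a prompt to Ollama’s `/api/generate` endpoint on its default port, 11434, using only the Python standard library. The `gn100` Tailscale hostname and the `gpt-oss:20b` model tag are assumptions, so use whatever you have pulled. Ollama listens only on localhost by default, so reaching it from another machine typically requires setting `OLLAMA_HOST=0.0.0.0` on the GN100.

```python
import json
import urllib.request

# Assumptions: a hypothetical Tailscale hostname and an already-pulled model tag.
OLLAMA_URL = "http://gn100:11434/api/generate"
MODEL = "gpt-oss:20b"


def build_request(prompt: str, model: str = MODEL,
                  url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST to Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the prompt and return the model's full reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=600) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate("Explain unified memory in one sentence.")` from a machine on the same tailnet should return the reply as a string. Setting `"stream": False` makes Ollama return a single JSON object rather than a stream of partial responses, which keeps the client simple at the cost of waiting for the whole answer.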

If you want to try something else, some models come in pre-prepared Docker containers that can simply be installed and executed, making deployment remarkably straightforward.
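Launching one of those containers usually comes down to a single `docker run`. The helper below only assembles and runs that command. The image name and ports in the usage example are placeholders, and `--gpus all` assumes the NVIDIA Container Toolkit is installed.

```python
import shlex
import subprocess


def docker_run_cmd(image: str, host_port: int, container_port: int,
                   name: str) -> list[str]:
    """Build a `docker run` command for a GPU-enabled, detached container."""
    return [
        "docker", "run", "-d",
        "--gpus", "all",  # needs the NVIDIA Container Toolkit
        "--name", name,
        "-p", f"{host_port}:{container_port}",
        image,
    ]


def run_container(image: str, host_port: int, container_port: int,
                  name: str) -> None:
    """Print the command for reference, then run it (raises on failure)."""
    cmd = docker_run_cmd(image, host_port, container_port, name)
    print("Running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

For example, `run_container("example/model-server:latest", 8080, 8080, "demo")` would start a hypothetical image in the background and expose it on port 8080.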

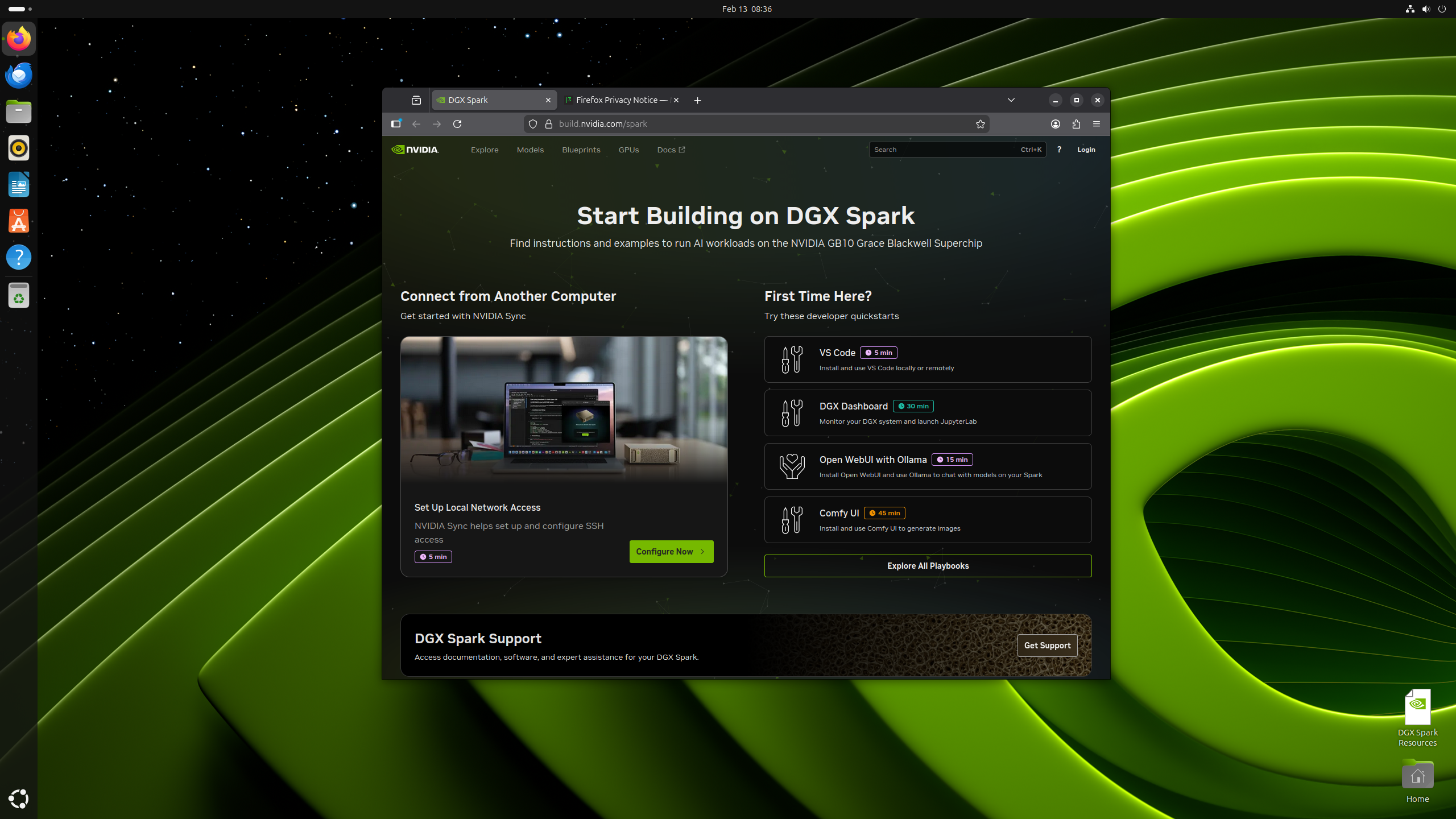

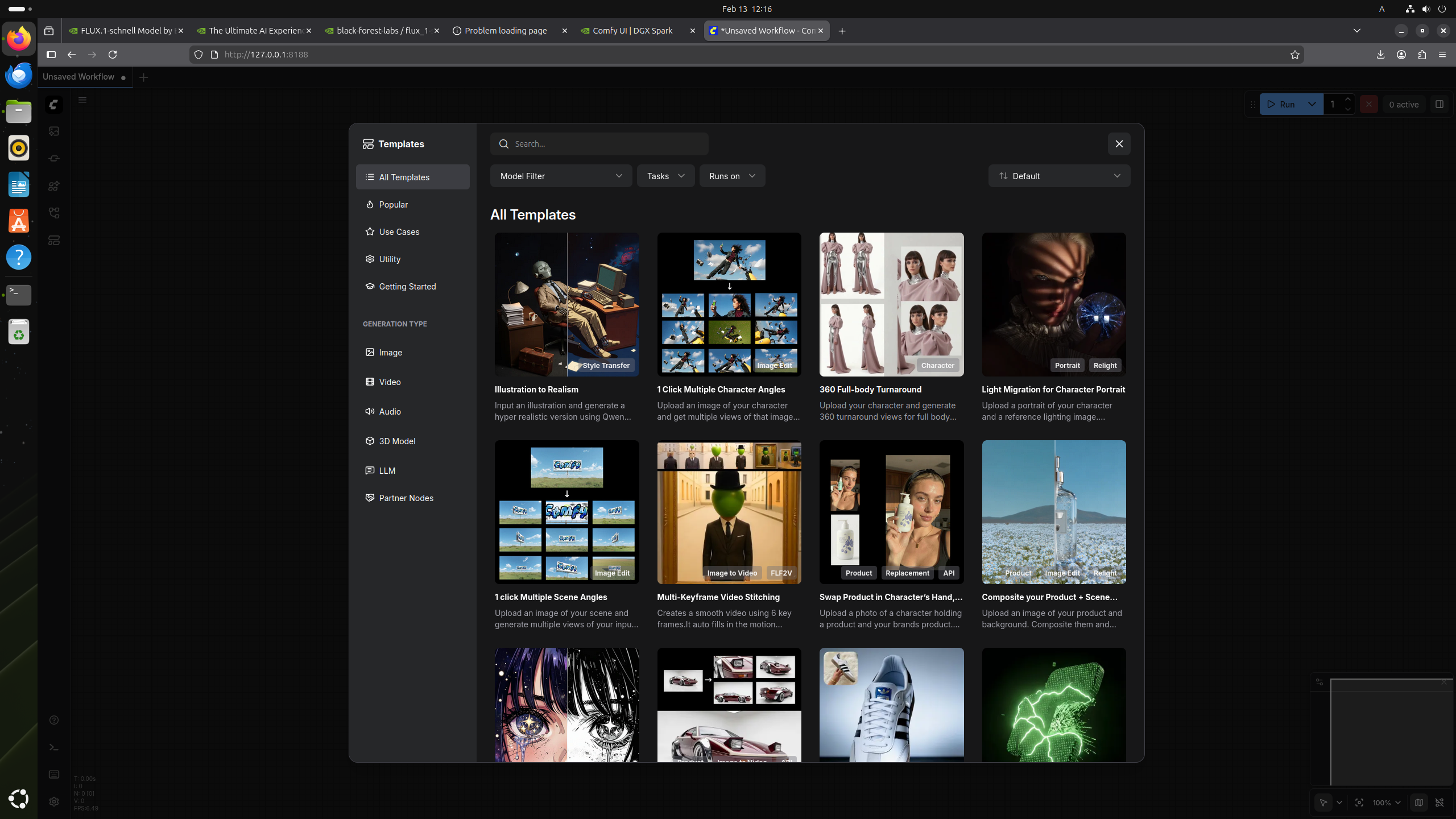

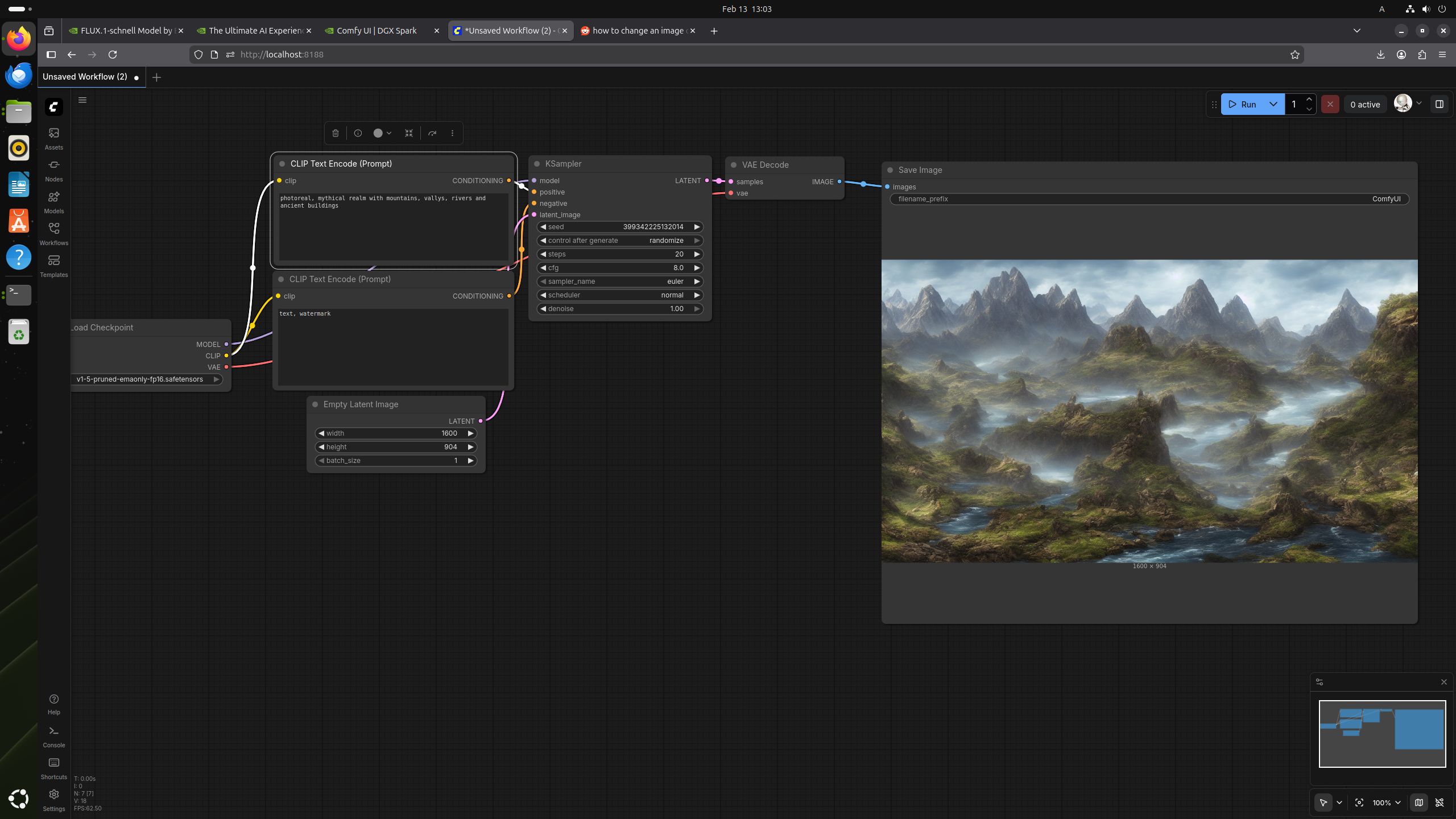

When you first power the system up, you are presented with a web interface created for the Nvidia DGX Spark, which guides you through installing VS Code, the DGX Dashboard with JupyterLab, Open WebUI with Ollama, and ComfyUI.

To be clear, this isn’t like a Windows application install. You are given instructions as to the commands you need to execute, which install the tools and libraries that are needed. Those who don’t use Linux every day will find it a challenge, but eventually, even I managed to get almost everything working, at least enough to load models and create some output. Image generation was especially impressive, although some of the deep thinking models aren’t that responsive if you ask them something genuinely challenging.
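Because those instructions are a series of commands rather than an installer, it helps to confirm afterwards what actually landed on the system. A small sketch that checks whether some of the expected command-line tools are on the `PATH`. The tool names are assumptions (for example, `code` is the VS Code launcher), and components that run inside containers, as Open WebUI often does, won’t show up this way.

```python
import shutil
from collections.abc import Iterable

# Command-line tools the setup steps are expected to provide (assumed names).
TOOLS = ("nvidia-smi", "docker", "ollama", "code", "jupyter")


def check_tools(tools: Iterable[str] = TOOLS) -> dict[str, bool]:
    """Map each tool name to whether it is found on PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


if __name__ == "__main__":
    for tool, found in check_tools().items():
        print(f"{tool:<12} {'found' if found else 'missing'}")
```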

Some people might assume that because these models run locally on the GN100, the subscription for them should be cheaper. I’ve not noticed that so far: you just get better performance and save the creators’ electricity bill. What running a model on this hardware does give you is ownership: the model can’t be taken away from you, and there is the potential for you to control it, customising it in a specific and personal way.

For those exploring AI in a serious way, it’s necessary to use the latest models, and that often has a cost implication, even if you own the hardware platform.

What you certainly don’t want to do is install some free model from a couple of years ago, and then be disappointed with the results. The steepness of the curve of development on models is extraordinary, and even versions of the best ones from six months ago have been overtaken by the latest releases.

For those working in this area, using modern AI is like trying to get onto a bus when they don’t stop or even slow down to allow passengers on or off. Being aware of where the model of interest is, and when it's been overtaken, is critical to not being completely out of date before the project is completed.

Acer Veriton GN100: Early verdict

NVIDIA decided to ship its Grace Blackwell technology in an entry-level form and created a blueprint for it in the NVIDIA DGX Spark Personal AI Supercomputer; its partners are now delivering their own versions, like the Acer Veriton GN100.

Other than the outer case and a few other small choices, it's debatable how much variation we’re likely to see between these machines. It’s not like the GPUs, where the partners get to design variations and even tweak the founders' editions for better performance.

Maybe if these become massively popular, then we might see more variation, like combining two systems in a single box, or blending the technology with DAS storage. But for now, this is where we are.

That said, the Acer version is perfectly serviceable, but when the specifications are this close, it’s mostly about price. For Americans paying $2999 for the GN100, it’s probably one of the cheapest options, and for those in Europe, oddly, it’s one of the more expensive. Perhaps Acer can fix that for Europeans, but given the rising cost of RAM, it’s more likely the USA will have to pay more.

The one weakness of this design is the lack of access to the SSD, and if that’s a deal breaker for you, some other machines do have that capability.

As ever, Acer has delivered a workable solution for demanding computing tasks, but what this brand can’t guarantee is the skills needed to make the most from their platform. Buyers need to appreciate that while the hardware offers more than ten times the AI processing of a high-end PC, making the most of what it can do requires a particular skill set.

For more compact computing, see our guide to the best mini PCs you can buy