Gigabyte Gaming A16 Pro: Two-minute review

Powered by an RTX 5080 and featuring a large 16-inch, 165 Hz display, the A16 Pro delivers excellent gaming performance while doubling as a capable workstation. At the time of writing, there are two A16 Pro variants for sale – both equipped with the Intel Core 7 240H CPU, 32GB of (soldered) 5600MHz LPDDR5x RAM, a 1TB SSD and either an RTX 5070 Ti or, as tested, the RTX 5080.

The large screen folds back through 180 degrees to lie flat, has a 2560 x 1600 resolution, a 3 ms response time, a decent 400 nits of brightness and displays an excellent 100% of the sRGB color gamut. Ports include HDMI 2.1, a 5 Gbps USB-C port with power delivery and DisplayPort 1.4, 2x USB-A 5 Gbps (plus a USB-A 2.0 port), Gigabit Ethernet and a 3.5mm headset jack.

For the Gaming A16 Pro, Gigabyte includes a MUX switch but caps the GPU TGP for the 5080 at 115W. The laptop RTX 5080 can run at up to 150W, so a 115W limit means raw performance sits about halfway between that of an unfettered 5080 and a 5070, and is similar to a 5070 Ti.

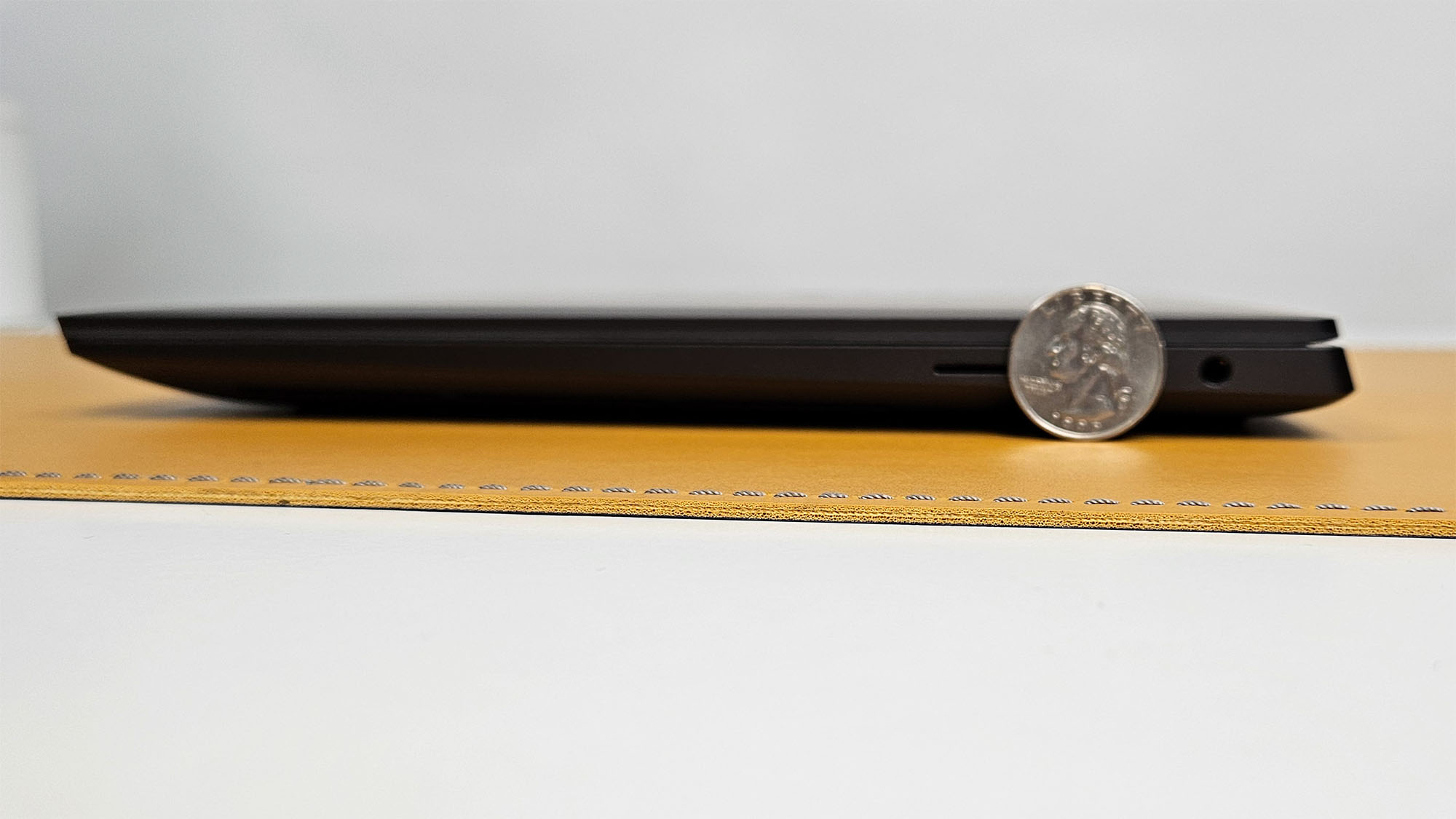

While this seems like a major downside, what matters is performance for your dollar, and the Gaming A16 Pro is cheaper than a lot of higher TGP 5080 machines. At 36 x 26 x 2.3 cm, and weighing 2.3 kg, it’s also slimmer and lighter, making it a better choice for those who want to carry it every day. In fact, most thin and light laptops that feature powerful GPUs limit the TGP to keep heat under control.

For demanding games at the screen's native resolution, you will need to scale back the quality settings to maintain over 60 fps, while older ones will happily run at over 100 fps. To push towards the 165 Hz the screen is capable of, you will need to drop back to 1080p or use frame generation.

It depends on your game (or app) of choice, but in less demanding titles the 115W TGP 5080 is about 25% slower than one at 150W, and up to 22% faster than a 5070. In more intensive games, I saw the CPU create a bottleneck, bringing frame rates closer to that of the 5070. At full tilt, the Gaming A16 Pro emits a fairly loud roar from the cooling fans, but despite this, for sustained loads, performance is limited by the cooling capacity.

The A16 Pro is also a very capable workstation and we measured up to 90W charging via USB-C, so it can provide decent productivity performance without having to lug the larger power brick around. We wish it had a larger battery than the 76Wh unit used, as while the 10 hours, 37 minutes of video playback is a decent result, we were disappointed by under five hours of light-duty work.

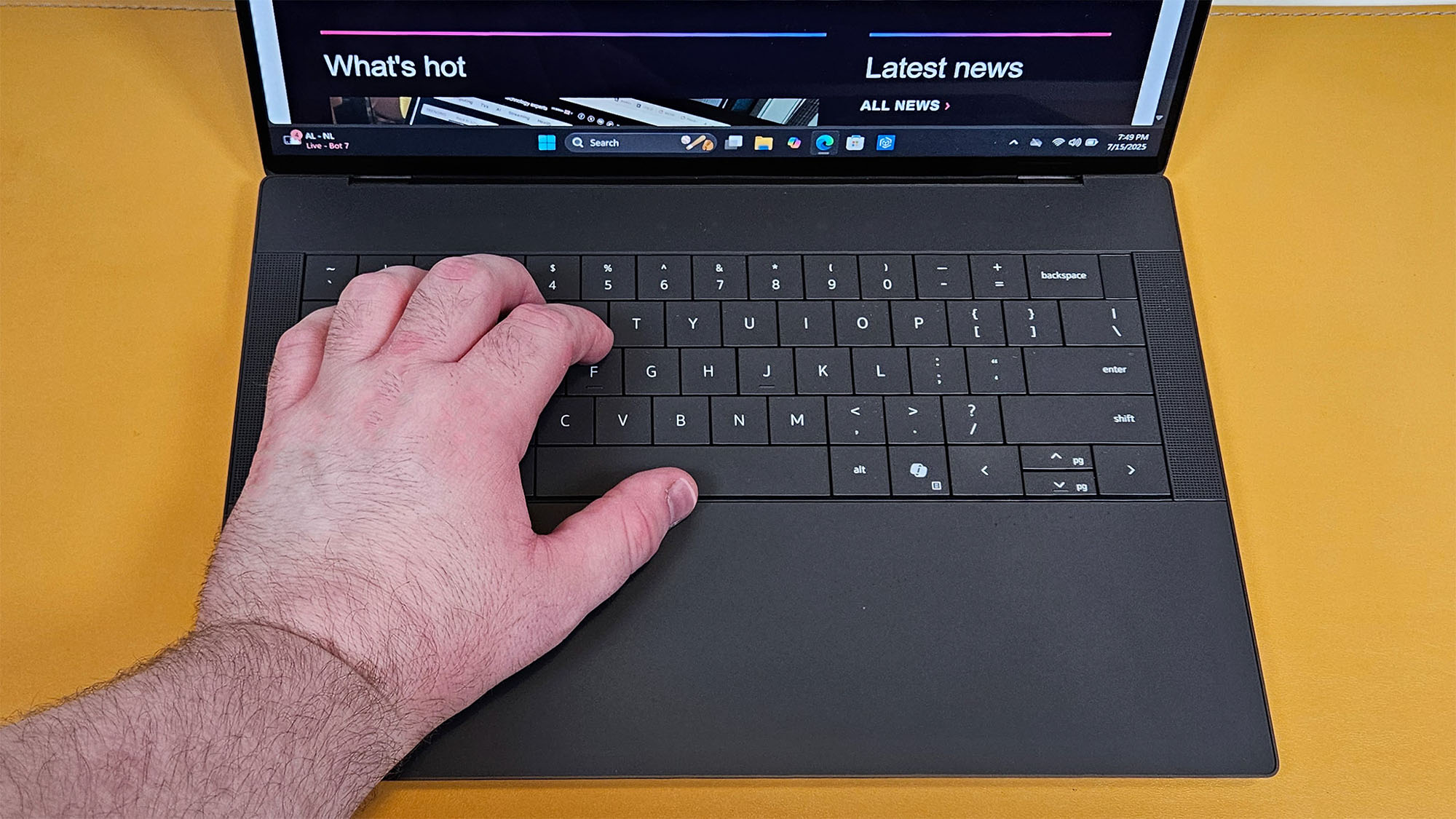

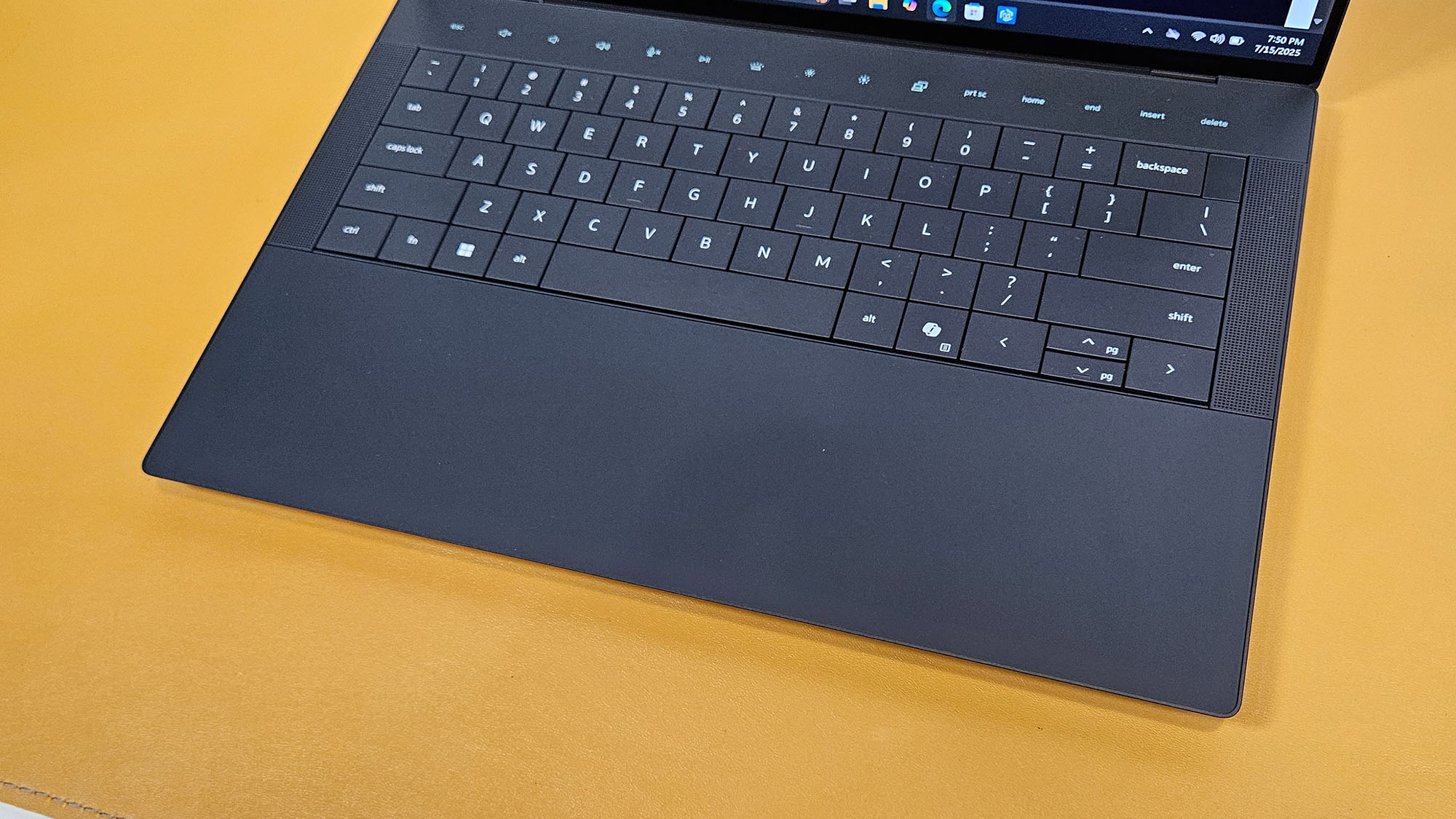

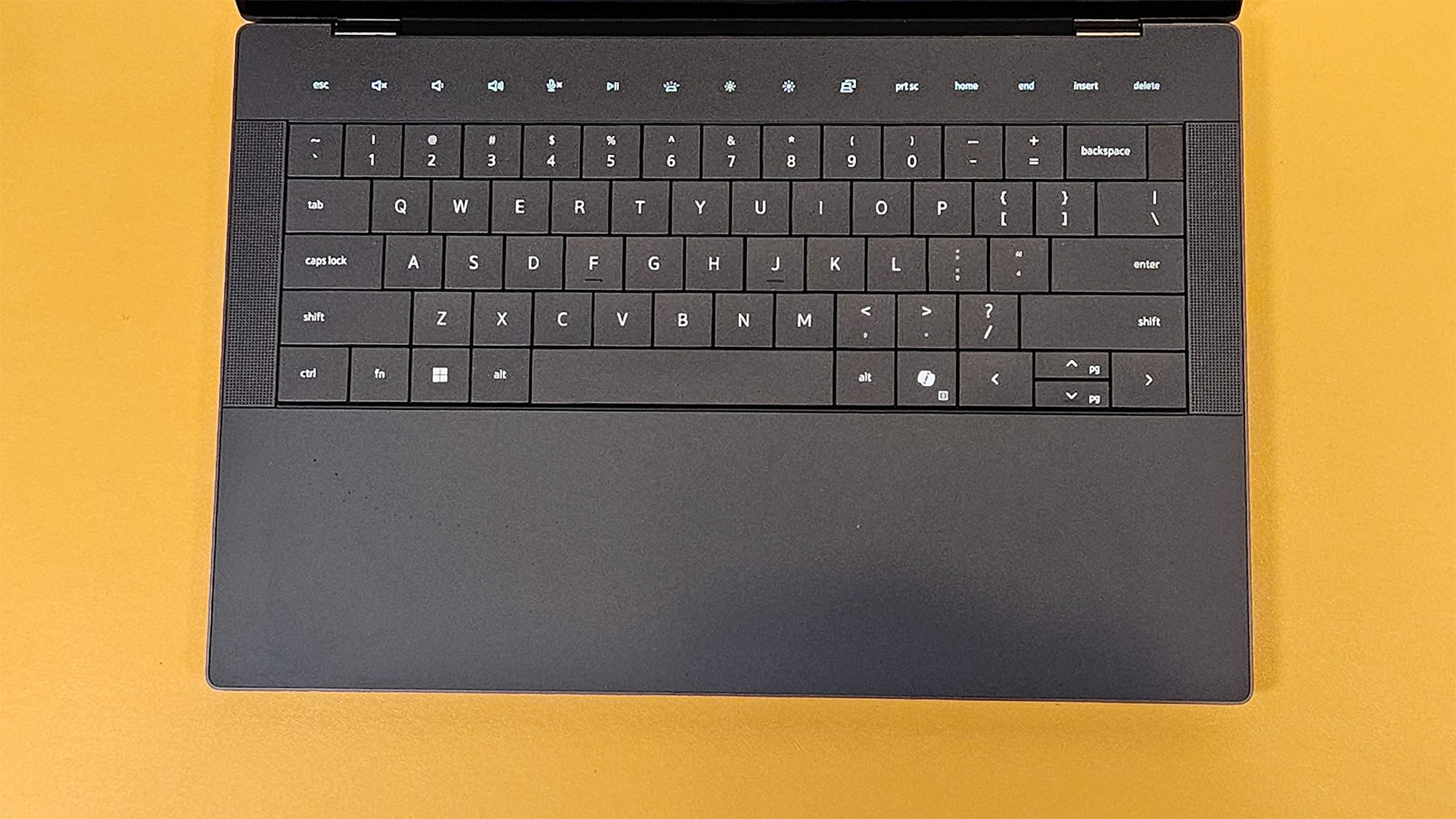

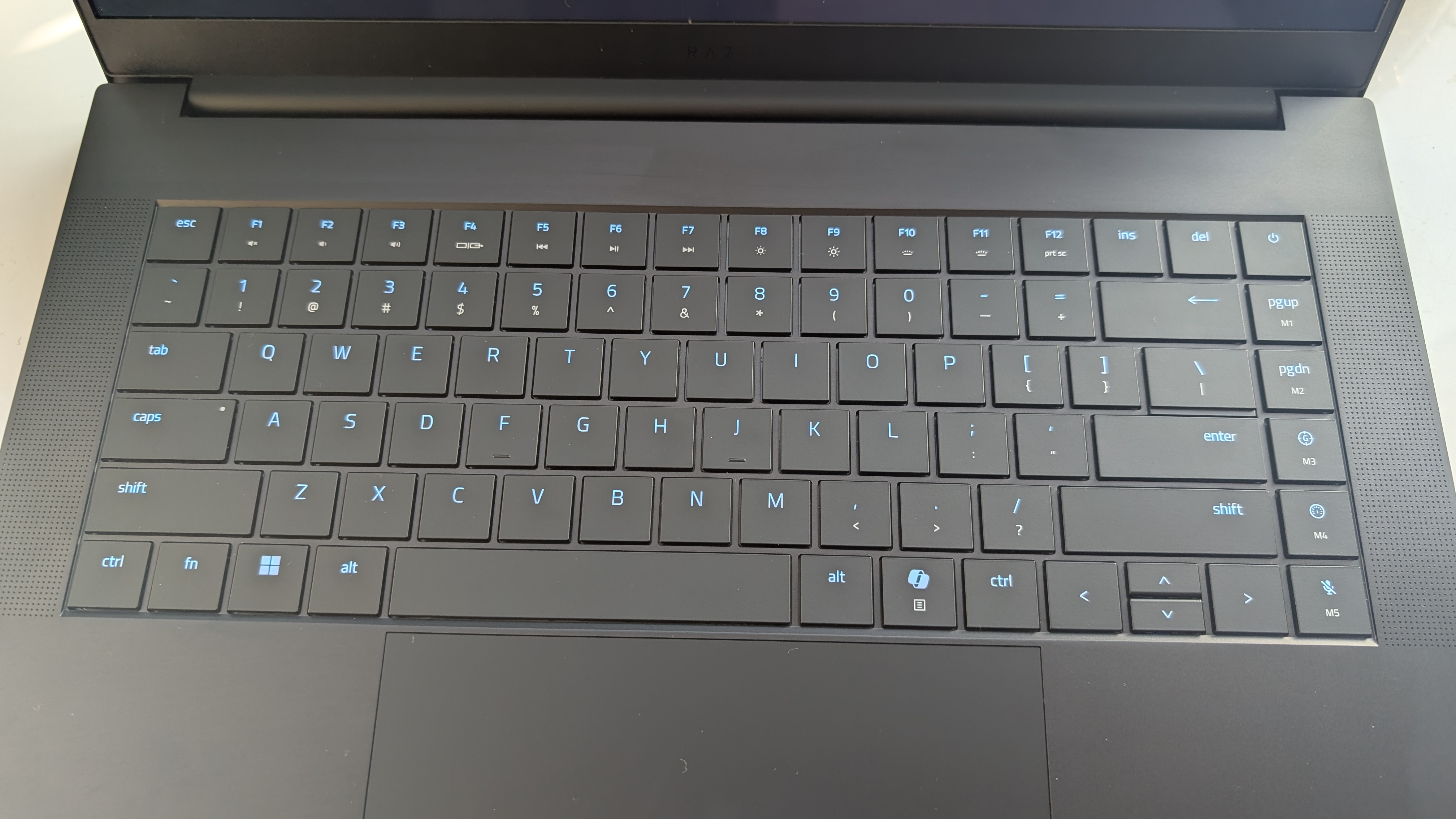

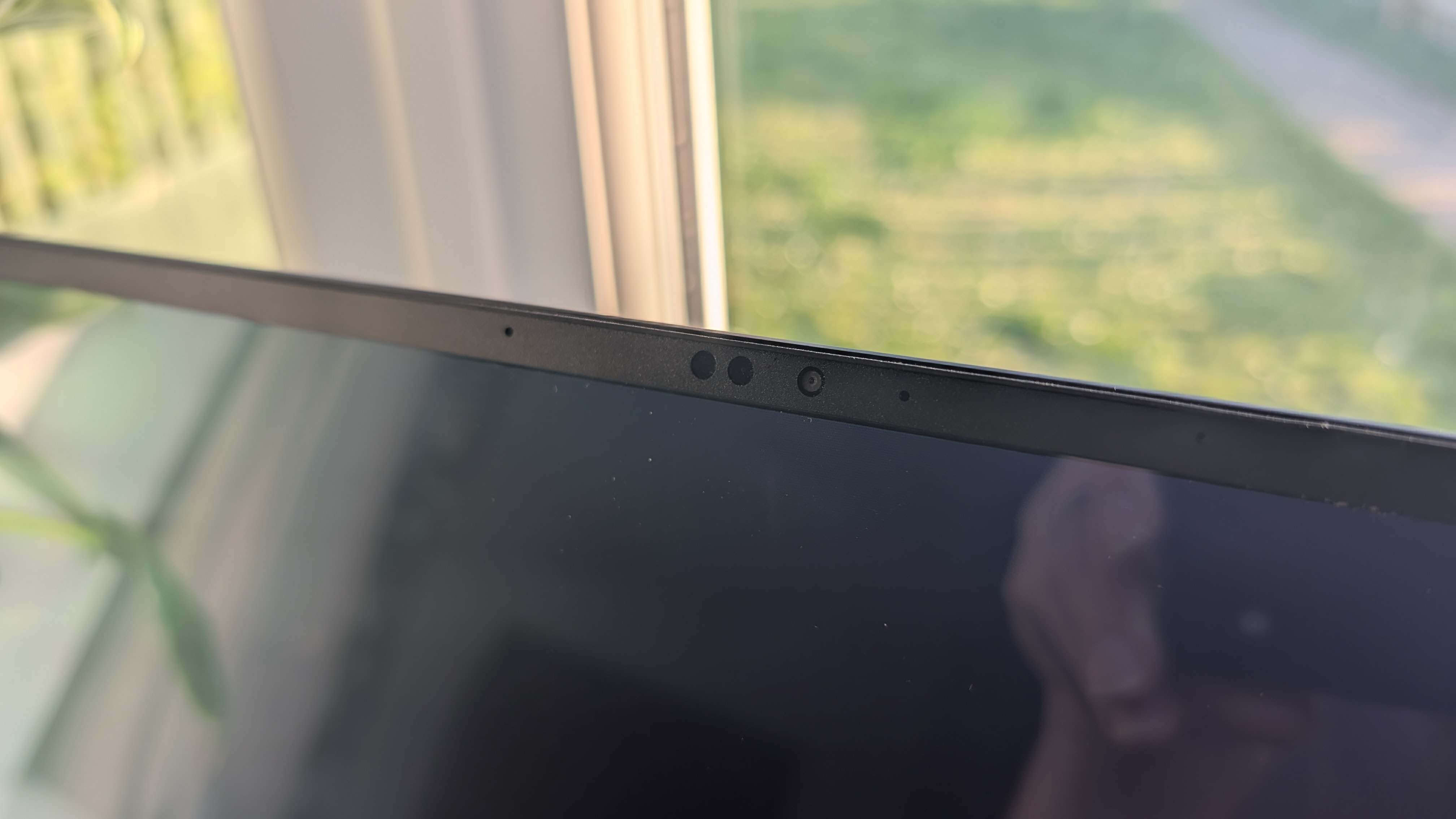

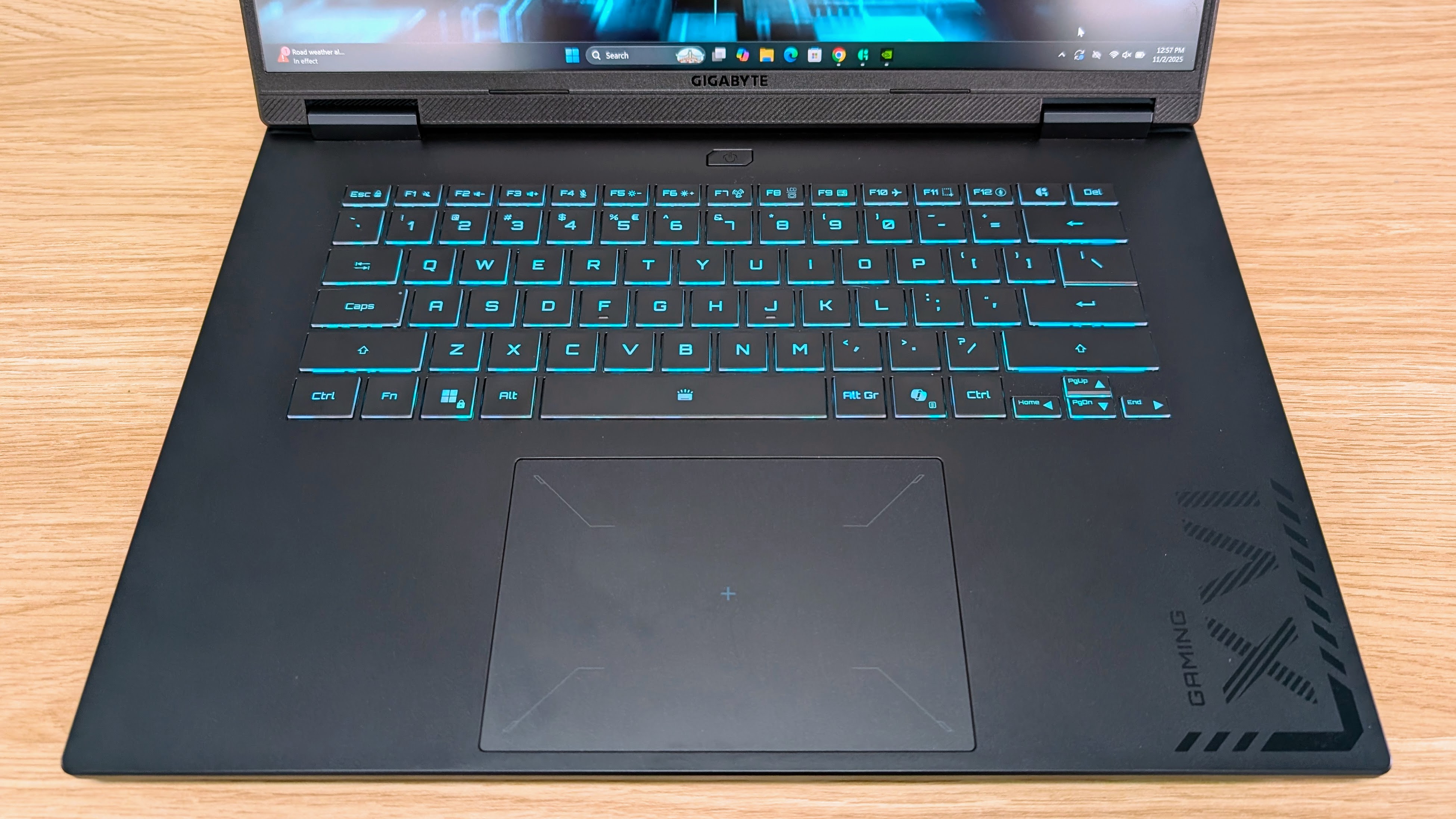

The keyboard and trackpad are both excellent, and the customizable RGB lighting modes are fun but also easily toned down to white if you want to blend in at the office. The 1080p webcam is nothing special in terms of image quality, but it does facial recognition for fast logins.

While the A16 Pro is an excellent machine overall despite a few foibles, the purchase decision comes back to price. If you can buy it for 30% less than a comparable full TGP 5080 machine (or on par with or less than a full TGP 5070 Ti laptop) then it’s a solid buy, but otherwise wait for a sale.

Gigabyte Gaming A16 Pro: Price & availability

- How much does it cost? Starting from $1,899 / £1,699 / AU$3,299

- When is it available? It's available now

- Where can you get it? You can get it in the US, UK and Australia

At the full list price, the Gigabyte Gaming A16 Pro doesn’t offer standout value, but third-party retailer prices can be considerably lower, making it a good buy.

Gigabyte does not list a recommended retail price for the A16 Pro in every market, but below is a table of typical non-discounted pricing for the RTX 5080 and 5070 Ti variants at the time of writing.

When on sale, we have seen it at up to 25% less than these prices.

| | RTX 5080 | RTX 5070 Ti |
|---|---|---|
| US List Price | $2,199 | $1,899 |
| UK List Price | £2,099 | £1,799 |
| AU List Price | AU$4,299 | AU$3,299 |
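
To put the "up to 25% less" sale figure in concrete terms, the short sketch below applies that discount to the list prices in the table above. The 25% value is the largest discount mentioned in this review rather than a guaranteed price, and the `sale_price` helper is a hypothetical name used only for illustration.

```python
# List prices copied from the table above.
LIST_PRICES = {
    "US": ("$", {"RTX 5080": 2199, "RTX 5070 Ti": 1899}),
    "UK": ("£", {"RTX 5080": 2099, "RTX 5070 Ti": 1799}),
    "AU": ("AU$", {"RTX 5080": 4299, "RTX 5070 Ti": 3299}),
}

# Largest sale discount mentioned in the review ("up to 25% less").
MAX_DISCOUNT = 0.25


def sale_price(list_price, discount=MAX_DISCOUNT):
    """Return the price after taking a fractional discount off list."""
    return list_price * (1 - discount)


# Print the list price next to the best-case sale price for each model.
for region, (symbol, models) in LIST_PRICES.items():
    for model, price in models.items():
        discounted = sale_price(price)
        print(f"{region} {model}: {symbol}{price:,} -> {symbol}{discounted:,.0f}")
```

Real-world sale prices vary by retailer and over time, so treat the output as a rough floor for what a good deal looks like.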

- Value score: 4 / 5

Gigabyte Gaming A16 Pro: Specs

Availability is the same in the US, UK and Australia, with the main difference between models being the choice of an RTX 5070 Ti or RTX 5080 GPU. If comparing models, be aware there is also a non-Pro Gigabyte Gaming A16 that has lower specs and a lower TGP.

Below is the specs list for the A16 Pro models available.

| | RTX 5070 Ti variant | RTX 5080 variant |
|---|---|---|
| US Price | $1,899 | $2,199 |
| UK Price | £1,799 | £2,099 |
| AU Price | AU$3,299 | AU$4,299 |
| CPU | Intel Core 7 240H | Intel Core 7 240H |
| GPU | RTX 5070 Ti | RTX 5080 |
| RAM | 32GB LPDDR5x 5600 MHz | 32GB LPDDR5x 5600 MHz |
| Storage | 1TB | 1TB |
| Display | 2560 x 1600 IPS, 100% sRGB, 400 nits, 165 Hz | 2560 x 1600 IPS, 100% sRGB, 400 nits, 165 Hz |
| Ports | 1x USB-C 5 Gbps, DisplayPort 1.4, PD charging, 2x USB-A 5 Gbps, HDMI 2.1, 1 Gb Ethernet, 3.5mm headset jack | 1x USB-C 5 Gbps, DisplayPort 1.4, PD charging, 2x USB-A 5 Gbps, HDMI 2.1, 1 Gb Ethernet, 3.5mm headset jack |
| Connectivity | Wi-Fi 6E, 802.11ax 2x2 + BT5.2 | Wi-Fi 6E, 802.11ax 2x2 + BT5.2 |
| Battery | 76Wh | 76Wh |
| Dimensions | 358.3 x 262.5 x 19.45 - 22.99 mm (14.11 x 10.33 x 0.77 - 0.91 inches) | 358.3 x 262.5 x 19.45 - 22.99 mm (14.11 x 10.33 x 0.77 - 0.91 inches) |
| Weight | 2.3 kg (5.1 lbs) | 2.3 kg (5.1 lbs) |

- Specs score: 4 / 5

Gigabyte Gaming A16 Pro: Design

- Conservative power ratings

- Customizable lighting

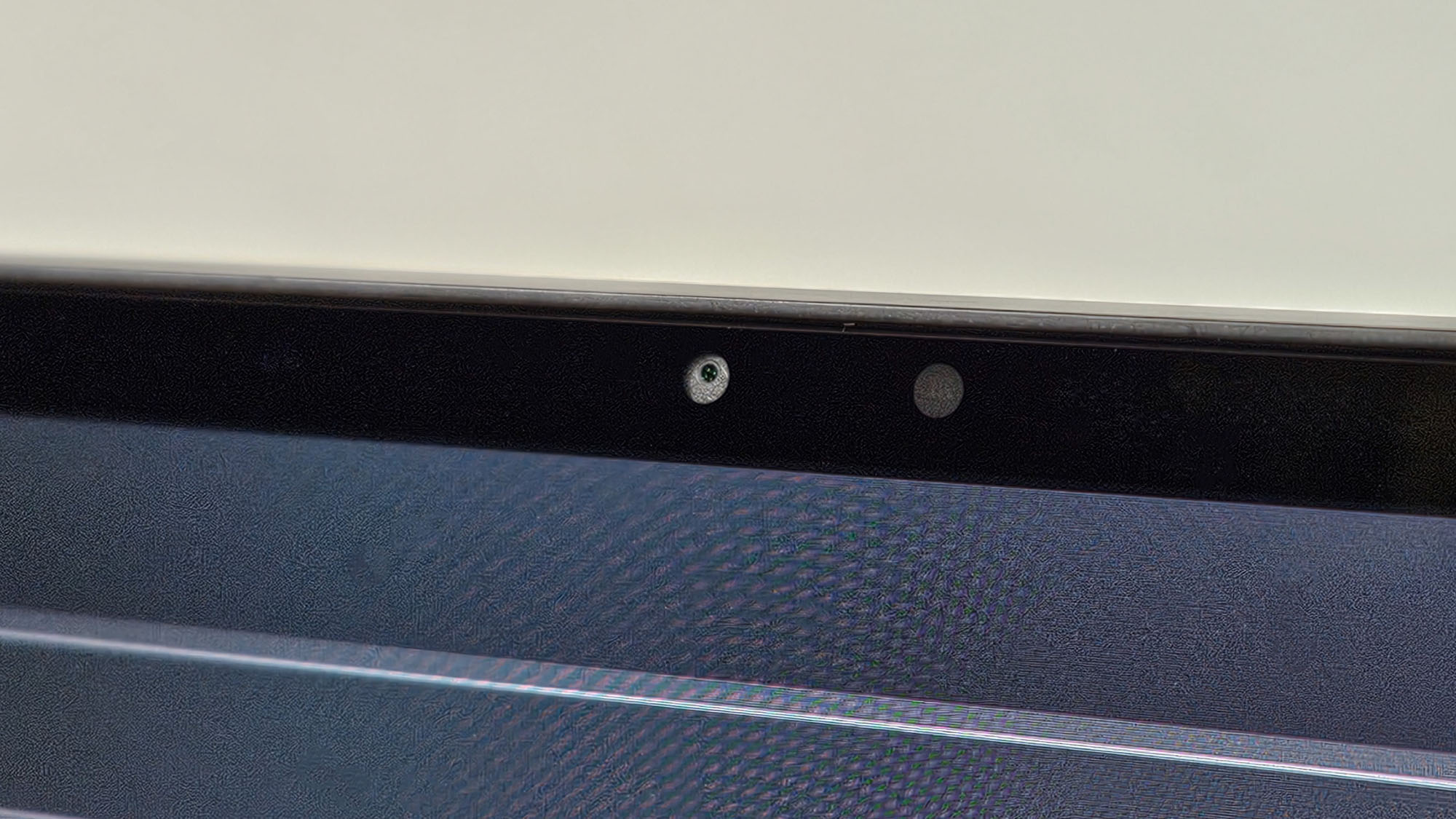

- Facial recognition webcam

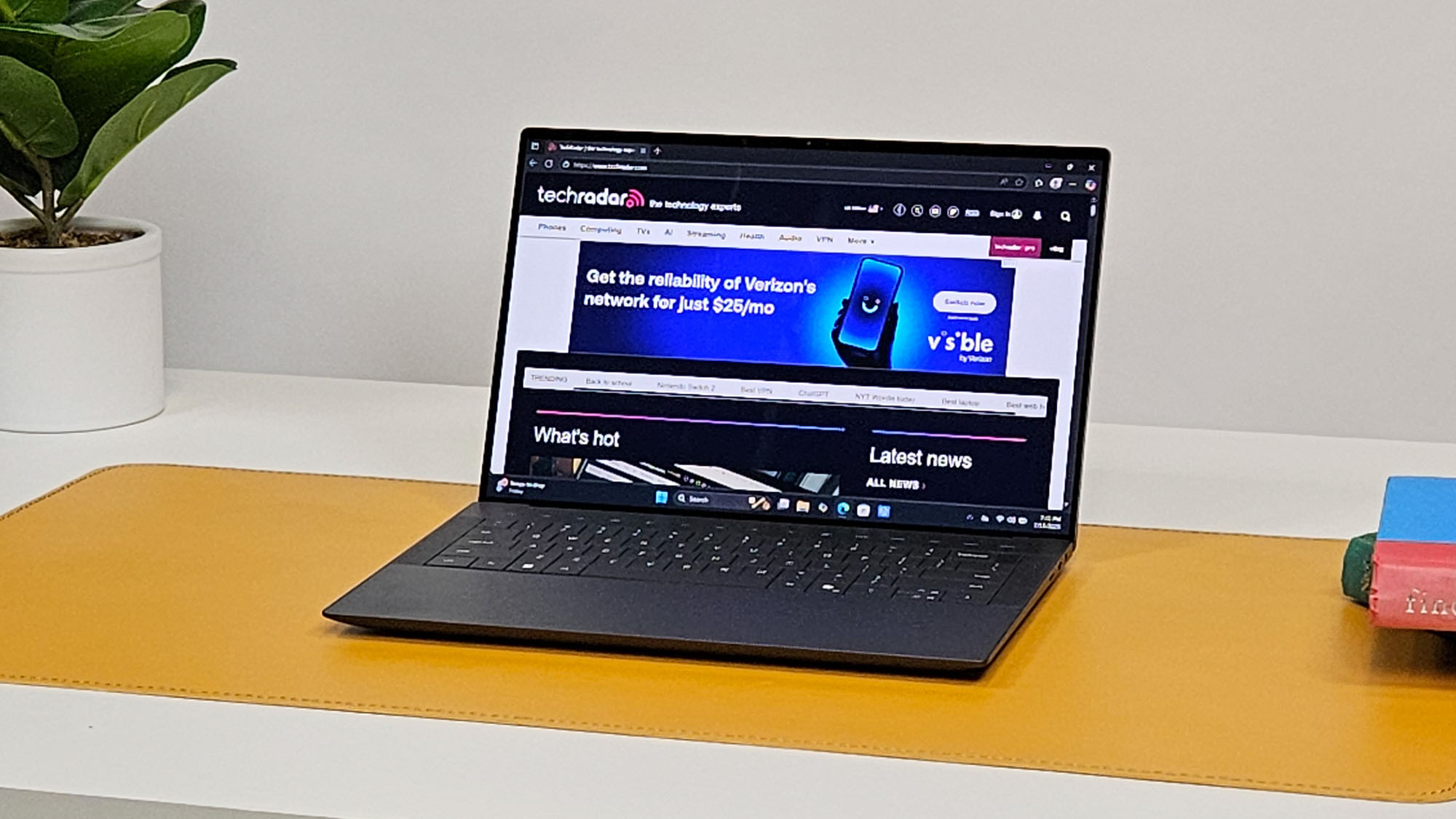

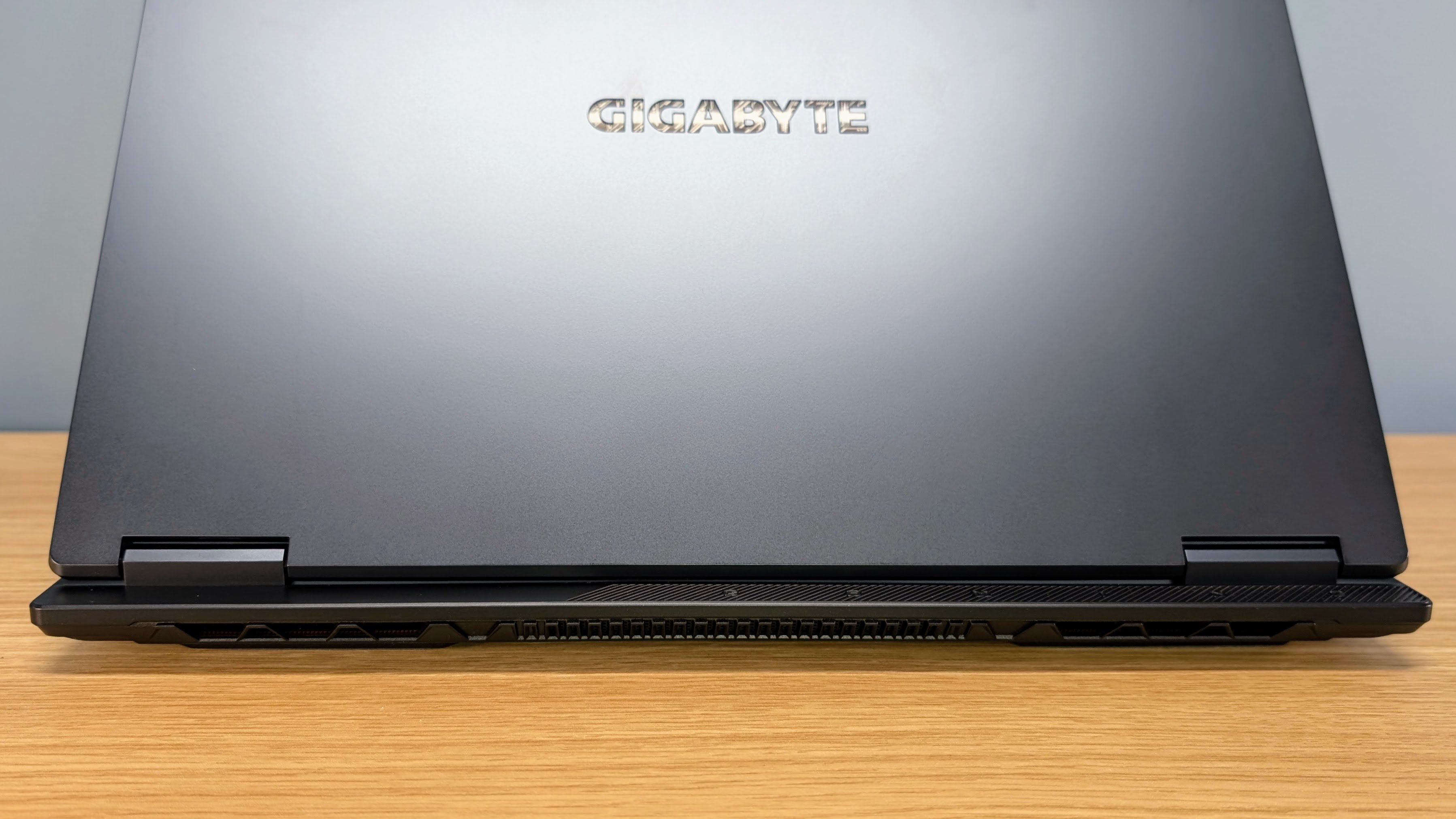

The A16 Pro is a large 16-inch laptop, but the display has fairly slim bezels and overall it fits into a footprint small enough that I think it’s reasonable to carry it on the go every day. This is helped by Gigabyte’s inclusion of 100W USB-C PD charging, so you can leave the big power brick at home if you’re not going to be gaming.

The laptop measures in at 358.3 x 262.5 x 19.45 - 22.99 mm, but this is at the most optimistic points. At the front, I get about 20 mm, and 25 mm at the rear, and 28 mm if you include the feet. On the scale, it weighs 2.36 kg (not far off the 2.3 kg from Gigabyte) and the power brick is another 0.54 kg.

The A16 Pro stands out with a display that can fold through 180 degrees to lie flat. I love this design for a couple of reasons. Firstly, it means you can flip the display upside down and share it with someone sitting across from you. Secondly, it makes it easy to use the laptop in your own unique way.

For example, with the A16 Pro plugged into a second screen or dock, and using a keyboard and mouse, I liked to open the screen fully, and place the laptop in a vertical stand. That way the laptop screen is raised to the right level to be placed next to a second screen, plus it leaves the vents unobstructed and takes up very little desk space.

The A16 Pro includes a MUX switch for Advanced Optimus graphics switching. This means the laptop can optimize graphics performance and power use automatically based on need, such as shutting down the discrete GPU, without needing to restart when switching modes.

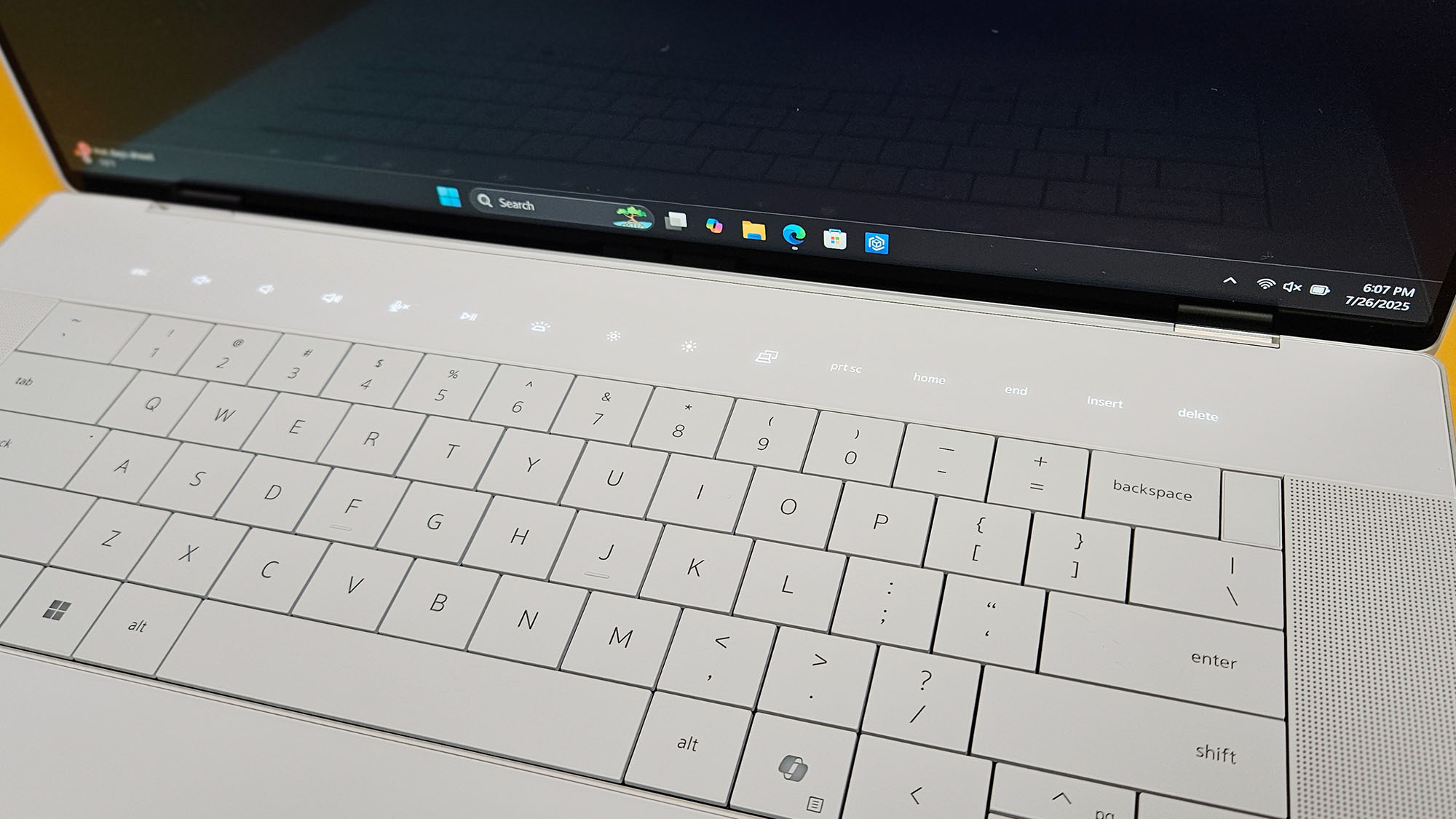

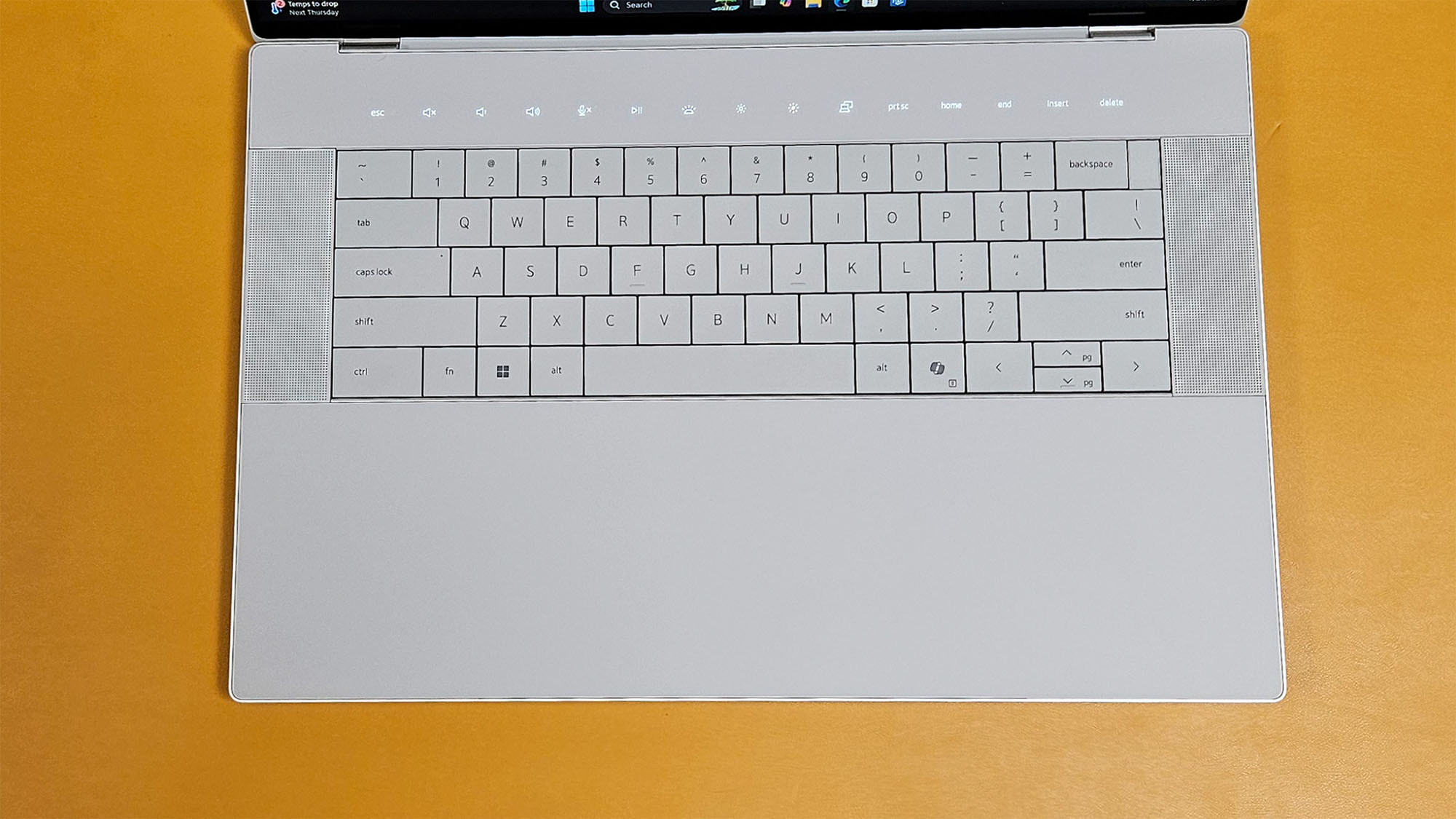

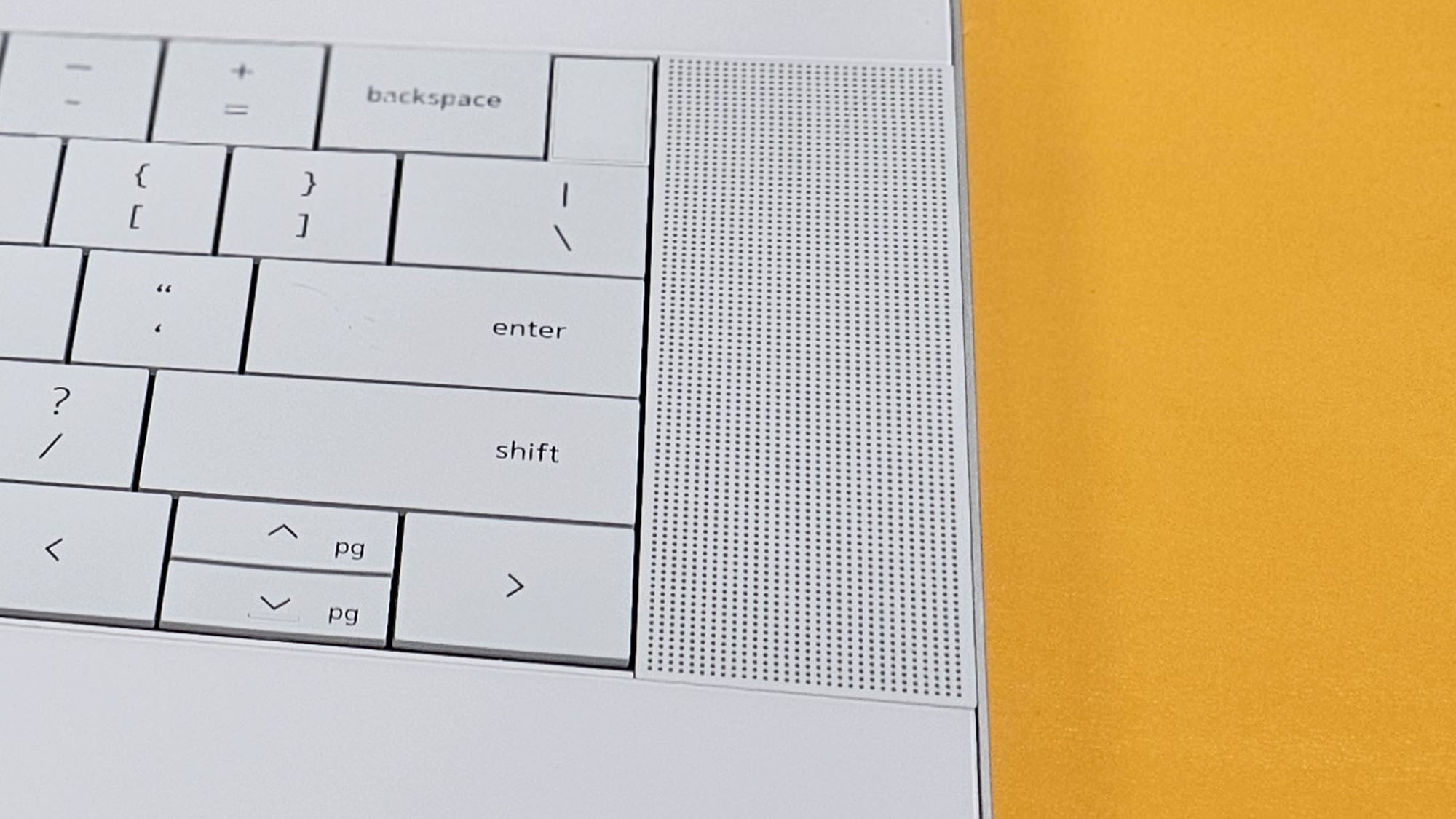

The A16 Pro opts for plastic on the main chassis and display to save weight. While I like the strength of metal, the stiff plastic used is still pretty good and the laptop feels capable of handling any bumps or drops. The laptop is equipped with a large keyboard with 1.7 mm key travel, but there’s no numpad. Still, it’s pleasant enough to type on despite a little bounce. The keyboard has customizable RGB 1-zone backlighting that can be used to add some bling, or toned down to muted colors (or white) if trying to blend in at the office or university.

Port selection is reasonable but not outstanding, with a single USB-C port that includes DisplayPort output and PD charging. At 5 Gbps, the data rate is lower than I would like and I’d ideally want to see at least one 40 Gbps USB4 port on a laptop in this class, or at the very minimum 20 Gbps USB-C. You also get two 5 Gbps USB-A ports, plus a standard USB 2.0 port.

It also has HDMI 2.1, plus Ethernet and a 3.5mm headset jack – though no card reader. The ports are well located on the sides of the laptop, with plenty of spacing between them ensuring easy access.

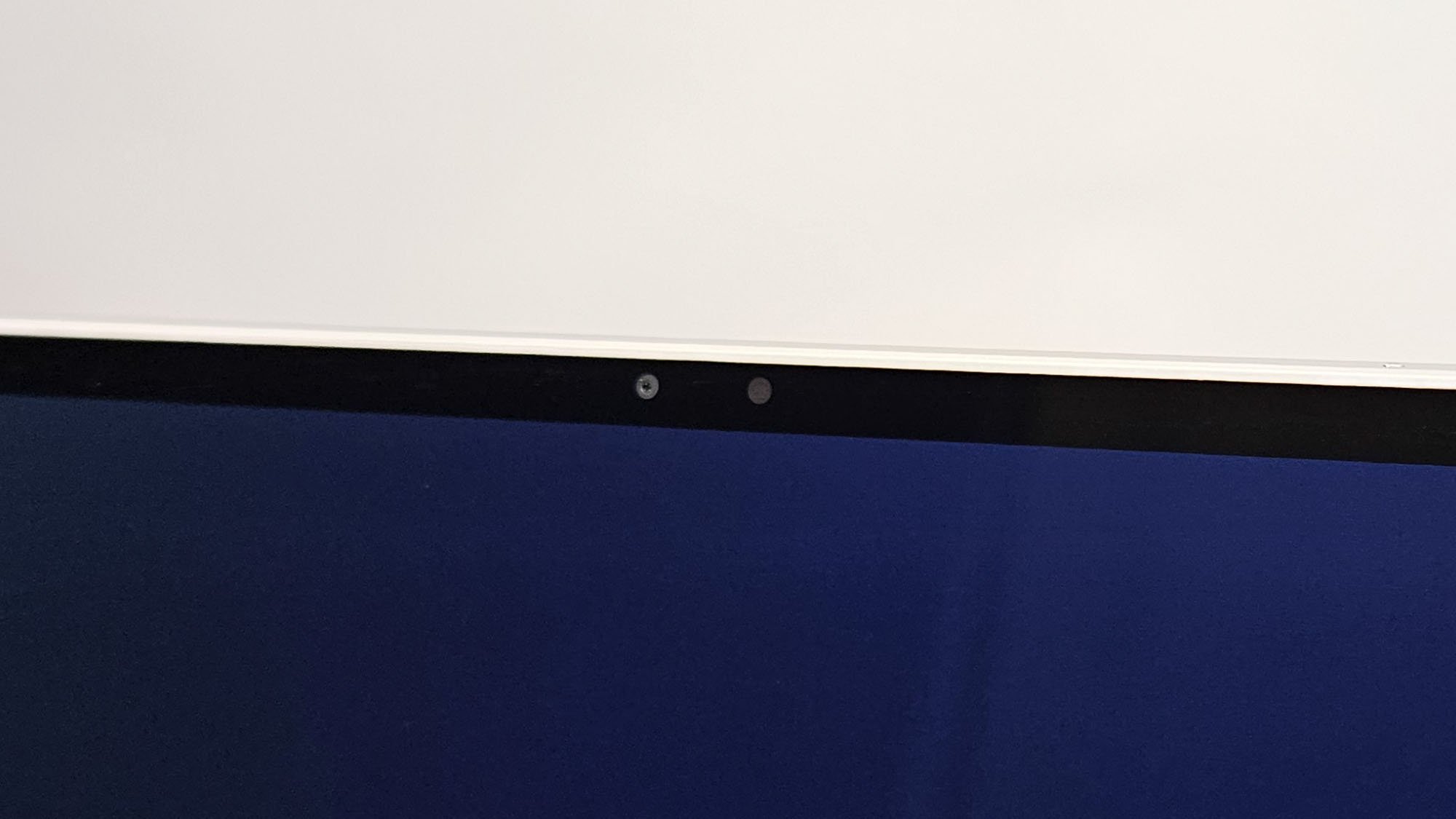

The choice to include Wi-Fi 6E (802.11ax 2x2) means the A16 Pro doesn’t have the absolute fastest networking, but is still relatively future-proof in terms of high-speed connectivity. The A16 Pro doesn’t include a privacy e-shutter on the webcam, but on the plus side it’s capable of facial recognition for fast Windows login.

The 76Wh battery is decently sized but I’d have much preferred to have seen a larger 99Wh battery – as is featured in some competitors – to help eke out a little extra time unplugged.

- Design score: 4 / 5

Gigabyte Gaming A16 Pro: Performance

- Solid gaming results

- Somewhat noisy under load

- Decent CPU performance for workstation use

I’ve tested a range of similarly priced laptops that use the RTX 5080 GPU and, generally speaking, for the same GPU total graphics power (TGP) and similar CPU / RAM spec, gaming results don’t vary a huge amount if the cooling is up to the task. But subtle differences in how manufacturers configure their CPU and GPU power profiles, as well as other design choices, can lead to consistent differences overall.

For the A16 Pro, Gigabyte caps the GPU TGP for the 5080 at 115W. The 5080 can run at up to 150W (plus dynamic boost), so the 115W limit in the A16 Pro means performance sits about halfway between that of an unfettered 5080 and a 5070, and is similar to a 5070 Ti. While this seems like a major downside, what matters is performance for your dollar, and the Gaming A16 Pro is cheaper than a lot of higher TGP 5080 machines. Gigabyte does not confirm the TGP of the RTX 5070 Ti, though it can likely run at the full 115W the GPU is rated for, but without higher dynamic boost power levels.

Now, it’s important to mention that a capped 115W RTX 5080 still has major benefits over a full power 5070 Ti or 5070. You get 16GB of VRAM instead of 12GB or 8GB, which means you can run higher quality textures at 2560 x 1600 and keep ray tracing on in games like Cyberpunk 2077. The 5080 also has a wider 256-bit memory bus, so busy scenes with path-traced lighting or dense city areas will drop the frame rate less than with the 128-bit 5070 or 192-bit 5070 Ti.

The 5080 has more ray-tracing and Tensor hardware too, so DLSS 4 can run at a higher preset without impacting playability. Plus, it’s more powerful for creator work. The GPU will stay relevant longer too, as more new titles call for loads of VRAM, so the 16GB 5080 will handle them better than a 12GB 5070 Ti or an 8GB 5070.

Overall, the A16 Pro manages decent gaming performance but does run at the limits of its cooling. In most scenarios, we found that the CPU hit its thermal throttling point before the GPU, limiting performance. Older or less intense games still tended to have the CPU thermally throttled, but the GPU could still run flat out. On more demanding games like Cyberpunk 2077, the performance was bottlenecked by the CPU and the 5080 was often running up to 20% or so behind its full potential.

The Core 7 240H isn’t a bad CPU, but as a refreshed Raptor Lake-H part launched in late 2024, it’s not the most efficient option. This isn’t a problem normally, but with the limited thermal headroom of the laptop, heavy load on the CPU and GPU pushes heat levels to the point where the CPU has to throttle itself.

This can be helped somewhat by scaling back settings that put more load on the CPU, like reducing crowd depth, but ultimately the 5080 is still often limited by the CPU. This meant that for games like Cyberpunk 2077, we struggled to push frame rates to the 165 Hz the display is capable of (with frame generation), even when dropping detail or resolution back.

To give some context, I have compared the A16 Pro benchmark results to the Alienware 16X Aurora with a 5070 and the Alienware 16 Area-51 with a 150W TGP 5080. In synthetic benchmarks (which don’t get CPU bottlenecked), the A16 Pro sits right between the two, as expected. But for gaming benchmarks, the A16 Pro has a smaller lead over the 5070.

Now, this is not necessarily a problem, as, for example, the A16 Pro is significantly cheaper than the RTX 5070 equipped Alienware 16X Aurora, making it a much better bang for buck machine. While we wish Gigabyte had used a more efficient CPU, ultimately the design trade-offs here are fine overall.

Still, it does mean you need to be slightly more careful when comparing pricing, and make sure the A16 Pro is on par with or slightly less than a 5070 Ti-based machine with a more powerful cooling system, and significantly less than a full TGP 5080 laptop.

One potential wildcard is the performance of the A16 Pro with the 5070 Ti. Because the 5070 Ti will likely hit the same CPU bottleneck as the 5080, the overall performance difference between the two variants may be small. We will update this review once we can test the 5070 Ti variant or confirm third-party benchmark results.

| | Gigabyte Gaming A16 Pro | Alienware 16X Aurora | Alienware 16 Area-51 |
|---|---|---|---|
| CPU | Intel Core 7 240H | Intel Core Ultra 9 275HX | Intel Core Ultra 9 275HX |
| GPU | RTX 5080 (115W TGP) | RTX 5070 (115W TGP) | RTX 5080 (150W TGP) |
| RAM | 32GB | 32GB | 32GB |
| Battery | 76 Wh | 96 Wh | 96 Wh |
| General performance | | | |
| PCMark 10 - Overall (score) | 7,523 | 8,437 | 8,639 |
| Geekbench 6 - Multi-core | 13,503 | 19,615 | 20,244 |
| Geekbench 6 - Single-core | 2,744 | 3,068 | 3,149 |
| Geekbench 6 - GPU | 177,521 | 136,686 | 213,178 |
| Cinebench R24 - CPU Single Core | 117 | 133 | 133 |
| Cinebench R24 - CPU Multi Core | 832 | 1,964 | 2,106 |
| Battery | | | |
| PCMark 10 - Battery Work (HH:MM) | 3:19 | 6:01 | 3:09 |
| TechRadar video test (HH:MM) | 10:37 | 6:16 | 4:27 |
| Graphics performance | | | |
| 3DMark SpeedWay | 4,247 | 3,664 | 5,610 |
| 3DMark Port Royal | 10,744 | 9,031 | 11,999 |
| Steel Nomad | 3,967 | 2,846 | 5,109 |
| Cyberpunk 2077 - 1600p RT Low (DLSS) (fps) | 84 | 76 | 114 |
| Cyberpunk 2077 - 1600p RT Low (DLSS off) (fps) | 52 | 50 | 79 |
| Cyberpunk 2077 - 1600p RT Ultra (DLSS) (fps) | 55 | 54 | 72 |
| Cyberpunk 2077 - 1600p RT Ultra (DLSS off) (fps) | 25 | 16 | 37 |
| Black Myth: Wukong - 1600p Cinematic (DLSS) (fps) | 77 | 76 | 104 |
| Shadow of the Tomb Raider - 1600p (DLSS off) (fps) | 137 | 131 | 175 |
| Storage | | | |
| CrystalDiskMark Read/Write (MB/s) | 6,982 / 6,481 | 6,939 / 6,740 | 6,575 / 5,890 |
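
For readers who want to see how the percentage gaps fall out of the raw numbers, here is a minimal sketch that computes how far the A16 Pro sits ahead of the 5070 machine and behind the 150W 5080 machine for a selection of rows from the table above. The function names `pct_diff` and `gaps` are hypothetical helpers, not part of any benchmark tool, and the selection of rows is my own.

```python
# Scores copied from the table above, in the order:
# (A16 Pro 115W RTX 5080, 16X Aurora RTX 5070, Area-51 150W RTX 5080).
RESULTS = {
    "3DMark SpeedWay": (4247, 3664, 5610),
    "3DMark Port Royal": (10744, 9031, 11999),
    "Steel Nomad": (3967, 2846, 5109),
    "Cyberpunk 2077 RT Low (DLSS)": (84, 76, 114),
    "Cyberpunk 2077 RT Ultra (DLSS)": (55, 54, 72),
    "Black Myth: Wukong Cinematic (DLSS)": (77, 76, 104),
    "Shadow of the Tomb Raider (DLSS off)": (137, 131, 175),
}


def pct_diff(value, baseline):
    """Percentage by which value is above (+) or below (-) baseline."""
    return (value / baseline - 1) * 100


def gaps(results):
    """Map each test to (A16 Pro vs 5070, A16 Pro vs 150W 5080) in percent."""
    return {
        name: (pct_diff(a16, rtx5070), pct_diff(a16, rtx5080_150w))
        for name, (a16, rtx5070, rtx5080_150w) in results.items()
    }


for name, (vs_5070, vs_5080) in gaps(RESULTS).items():
    print(f"{name:40s} vs 5070: {vs_5070:+6.1f}%   vs 150W 5080: {vs_5080:+6.1f}%")
```

Bear in mind the three laptops also differ in CPU and cooling, so these gaps reflect whole-system differences rather than the GPU power limit alone.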

Overall, even at 115W the RTX 5080 is a solid choice for 2560 x 1600 gaming, and can run most games at very playable frame rates without dropping back the details. For especially demanding games like Cyberpunk 2077 set to ultra ray tracing and high texture detail, the A16 Pro manages 55 fps with DLSS, or 84 fps if the ray tracing is on low. Turn on frame generation and less demanding games can run at the display's 165 Hz limit, though CPU-bound titles like Cyberpunk 2077 struggle to get there.

While the fan profiles keep the A16 Pro quiet in non-gaming use, at full tilt they are quite loud and if gaming, we highly recommend using a headset. Heat is directed out of both the sides and rear of the laptop, and after an intense gaming session, the trackpad reached a low 24.6°C / 76.3°F, the keyboard hit a toasty 41.3°C / 106.3°F and the underside was the hottest part at 42.4°C / 108.3°F. The keyboard temp here is significantly hotter than many competing gaming laptops, though not at the point it is uncomfortable to use.

The A16 Pro uses the GiMate control software and includes five modes – Balanced, Game, Creator, Power Saving and Online meeting – which vary the performance levels, as well as the amount of noise and heat created. For each mode, you can also adjust the individual settings, like fan control profile, display brightness and more. Stability is also good and I had no glitches or strange behavior from the Gigabyte software, or problems running any games, benchmarks or applications.

The A16 Pro cooling system may struggle when the CPU and GPU are both under heavy load, but is good enough to let the grunty Intel Core 7 240H CPU use up to 85W in our testing. The CPU is not as powerful as the Core Ultra series chips, or the new AMD Ryzen AI processors, but it still makes for a competent workstation when not gaming, and it will happily handle heavy workloads such as video editing.

If you leave the software in charge of profile selection, performance on battery takes a hit compared to being plugged in, and is about 60% slower in CPU workloads.

The A16 Pro can also be run on or charged from USB-C using PD spec 100W (20V/5A) and we saw a max of 90W in use. Unlike the questionable USB-C power profiles on the otherwise excellent Gigabyte 16X, the A16 Pro gives solid performance on USB-C, and is about 50% faster than on battery for CPU workloads and about 35% behind full performance. Gaming on USB-C is about half the performance compared to using the main PSU.

In other words, workstation use feels snappy when running on USB-C (such as when plugged into a dock) or if charging from a power bank, but don’t expect to do much more than casual gaming without the larger PSU.
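
The relative CPU performance figures for the three power sources can be lined up with a little arithmetic. The sketch below is only illustrative: it assumes "X% slower" means throughput drops to (100 - X)% of the plugged-in result, and it treats the rounded "about" percentages quoted above as exact inputs. All variable names are my own.

```python
# Plugged-in CPU throughput on the main PSU is the reference point.
FULL = 1.0

# Figures quoted in the review, all stated as approximate.
BATTERY_SLOWDOWN = 0.60        # battery is about 60% slower than plugged in
USB_C_GAIN_VS_BATTERY = 0.50   # USB-C is about 50% faster than battery
USB_C_DEFICIT_VS_FULL = 0.35   # USB-C is about 35% behind full performance

# Throughput on battery as a fraction of the plugged-in result.
battery = FULL * (1 - BATTERY_SLOWDOWN)

# Two independent routes to the USB-C figure.
usb_c_via_battery = battery * (1 + USB_C_GAIN_VS_BATTERY)
usb_c_via_full = FULL * (1 - USB_C_DEFICIT_VS_FULL)

print(f"Battery:                     {battery:.0%} of full")
print(f"USB-C (from battery figure): {usb_c_via_battery:.0%} of full")
print(f"USB-C (from full figure):    {usb_c_via_full:.0%} of full")
```

The two routes to the USB-C figure land within a few percentage points of each other, which is what you would expect from rounded numbers. Either way, USB-C power sits roughly midway between battery and the main PSU for CPU work.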

- Performance score: 4 / 5

Gigabyte Gaming A16 Pro: Battery life and Charging

- 4 hours and 49 minutes of regular use when unplugged

- 10 hours and 37 minutes of video playback

- 58 minutes of gaming

The Gigabyte Gaming A16 Pro uses a 76Wh battery, which is a decent size but not quite as good as the 99Wh featured in some competing models.

Five hours or so of work unplugged (and around an hour longer if just browsing the internet or watching YouTube videos) is enough to be useful, but it's not a great result overall. In contrast, other gaming laptops I've tested with similar spec hardware (including more powerful CPUs) use a 96Wh battery and can last up to 7 hours unplugged. The A16 Pro doesn’t handle medium level loads too well on battery (like video editing), and I saw run times at under three hours. On the plus side, the A16 Pro offers relatively quick charging and it gets back to full charge in under an hour.

The PSU is medium sized (and not as chunky as many gaming laptops) and weighs 537 grams (including the cable), so it has a measurable impact if carried around all day. Fortunately USB-C charging is decent and it could top up in just over an hour. The A16 Pro also charges well from a power bank and a large, but flight safe, 27,000mAh (99Wh) model will just give the laptop a full charge.
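
As a rough sanity check on the power bank claim, the sketch below converts a bank's mAh rating to watt-hours and estimates how many full laptop charges it could deliver. The 3.7V nominal cell voltage and the 80% conversion efficiency are assumptions for illustration only, not measured values, and both function names are hypothetical.

```python
LAPTOP_BATTERY_WH = 76   # A16 Pro battery capacity, from the review
BANK_RATED_WH = 99       # power bank rating quoted in the review
BANK_CAPACITY_MAH = 27_000


def bank_energy_wh(capacity_mah, nominal_cell_voltage=3.7):
    """Convert a mAh rating to Wh, assuming a nominal cell voltage.

    3.7V is a common nominal figure for lithium-ion cells and is an
    assumption here; it is not stated in the review.
    """
    return capacity_mah / 1000 * nominal_cell_voltage


def full_charges(bank_wh, laptop_wh, efficiency):
    """Estimate full laptop charges, given a conversion efficiency (0-1)."""
    return bank_wh * efficiency / laptop_wh


# Hypothetical end-to-end efficiency (voltage conversion, heat, cable loss).
ASSUMED_EFFICIENCY = 0.80

print(f"Energy at 3.7V nominal: {bank_energy_wh(BANK_CAPACITY_MAH):.1f} Wh")
print(f"Estimated full charges: "
      f"{full_charges(BANK_RATED_WH, LAPTOP_BATTERY_WH, ASSUMED_EFFICIENCY):.2f}")
print(f"Break-even efficiency:  {LAPTOP_BATTERY_WH / BANK_RATED_WH:.0%}")
```

Under these assumptions the bank has only a small margin over one full charge, which lines up with it "just" filling the laptop. A less efficient bank, or using the laptop while it charges, would leave it short.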

- Battery life and charging score: 3.5 / 5

Should you buy the Gigabyte Gaming A16 Pro?

| Attributes | Notes | Rating |
|---|---|---|
| Value | Expensive at list price, but great value when discounted | 4 / 5 |
| Specs | Reasonable but not standout | 3.5 / 5 |
| Design | Decent features but some compromises like noisy fans | 4 / 5 |
| Performance | Solid gaming and workstation performance | 4 / 5 |
| Battery | Short battery life for work but decent video playback | 3.5 / 5 |
| Overall | A gaming laptop that is also very well equipped for workstation or creator use – but don’t pay full list price | 4 / 5 |

Buy it if…

You want decent gaming performance in a relatively portable package

The A16 Pro isn’t exactly tiny, but considering the large 16-inch screen and powerful GPU, it’s still pretty good for carrying every day.

You want CPU performance

The Intel Core 7 240H CPU is grunty enough for demanding university students, or for workstation and creator use.

You want to use it for more than just gaming

Options like the fold-flat screen mean the Gigabyte is also a great option for plugging in alongside a second monitor.

Don’t buy it if...

You want a very portable gaming option

The A16 Pro isn’t too heavy or thick, but if portability is a prime concern, then consider a slimmer model, or a 14-inch gaming machine.

You want a more powerful GPU

The A16 Pro caps the 5080 to 115W, and you will need to look at models like the Aorus Master 16 or 18 for better gaming frame rates.

You want a very affordable laptop

The A16 Pro is a great-value machine (when discounted), but it’s still a pricey laptop. If you crave affordable RTX 5050 and 5060 focused gaming, check out the non-Pro Gigabyte Gaming A16.

Gigabyte Gaming A16 Pro: Also consider

If my Gigabyte Gaming A16 Pro review has you considering other options, here are some more gaming laptops to consider:

Razer Blade 14 (2025)

Smaller and lighter than the 16-inch Gigabyte Gaming A16 Pro, the Razer Blade 14 offers pretty good performance in a small package.

Check out the full Razer Blade 14 (2025) review

Alienware 16 Area-51

A powerful 16-inch gaming laptop that’s sold with an RTX 5060, 5080 or 5090, this is a chunky machine that’s all about performance.

Take a look at the full Alienware 16 Area-51 review

How I tested the Gigabyte Gaming A16 Pro

- I tested the Gigabyte Gaming A16 Pro for two weeks

- I used it both on a desk and carried it in a backpack for travel

- I used it for gaming, as well as office productivity work and video editing

I ran the Gigabyte Gaming A16 Pro through the usual comprehensive array of TechRadar benchmarks, as well as using it for actual day-to-day work at a desk and on the go. I used the TechRadar movie test for assessing battery life during video playback, and a range of productivity battery benchmarks to further gauge battery life. I also logged power use in a variety of scenarios, including when charging from USB-C, and tested the laptop with a variety of USB-C chargers and power banks.

Read more about how we test.

- First reviewed in November 2025