Getac ZX10 G2: 30-second review

Getac is one of those companies that likes to keep the names of products the same while changing the underlying hardware. Thankfully, with the new ZX10 release, someone decided to add “G2” to differentiate it from the prior version, even if they are remarkably similar in many respects.

On one level, this is a standard 10.1-inch Android tablet designed for business users who handle stock control or run a shop floor with mobile computing needs.

What separates this from a typical Android tablet is that it is designed to handle a high level of abuse or a challenging environment without issue, and it features hot-swappable batteries to ensure it is always ready for the next shift.

Like the original ZX10, the focus of the hardware is to provide a powerful SoC, while being less interested in peripheral features, such as the cameras.

What it offers over the prior G1 is a more powerful platform with more memory and storage, a brighter display, and WiFi 6E communications.

Oddly, it’s running Android 13, not a cutting-edge release, but an improvement over the Android 12 that its predecessor used.

The build quality and accessory selection are second to none, so it’s no surprise that the device’s cost is relatively high. However, even at this price, it might make it into our selection of the best rugged tablets.

A question that business users might reasonably ask about this hardware is: how many cheap tablets could we go through for the price of one of these?

Getac ZX10 G2: price and availability

- How much does it cost? From $1200 / £1175 (plus tax)

- When is it out? Available now

- Where can you get it? From a Getac reseller

Unlike many of the tablets we typically discuss, the Getac ZX10 G2 doesn’t come with a standard price tag, as each device is tailored to meet the specific needs of its owner. The review unit we assessed is likely to start at over $1200 in the USA, not factoring in any accessories, service agreements, or upgrades.

The UK price is a whopping £1175.00 plus VAT, making it one of the most expensive 10-inch tablets around.

Options such as different sensors, cradles, additional batteries, and external chargers can substantially raise the total cost. If your finance department is already wary of Apple equipment pricing, they might need to brace themselves for the investment in this equipment.

That said, the durability of this design, combined with a three-year bumper-to-bumper warranty, implies that most customers should expect good service from this device.

- Value score: 3/5

Getac ZX10 G2: Specs

| Item | Spec |
|---|---|
| Hardware | Getac ZX10 G2 |
| CPU | Qualcomm Dragonwing QCS6490 |
| GPU | Adreno 643 |
| NPU | Qualcomm Hexagon Processor |
| RAM | 8GB |
| Storage | 128GB |
| Screen | 10.1-inch TFT LCD, 1000 nits |
| Resolution | 1200 x 1920 WUXGA |
| SIM | Dual Nano SIM 5G + MicroSD option |
| Weight | 906g (1.99lbs) |
| Dimensions | 275 x 192 x 17.9mm (10.8" x 7.56" x 0.7") |
| Rugged Spec | IP67 and MIL-STD-810H |
| Rear cameras | 16.3MP Samsung GN1 Sensor |
| Front camera | 8MP Samsung GD1 |
| Networking | WiFi 6E, Bluetooth 5.2 |
| OS | Android 13 |
| Battery | 4870mAh (extra slot for 2nd battery) |

Getac ZX10 G2: Design

- Sturdy construction

- Unusual layout

- High brightness screen

Getac has extensive experience in making rugged equipment, and the ZX10 G2 is a prime example of how the lessons its engineers have learned are implemented in their recent designs.

The tablet is constructed with a metal chassis encased in a nearly impenetrable reinforced polycarbonate outer shell that has a subtle texture, making it easy to handle.

On paper, the new design is slightly lighter than its G1 predecessor, but that difference is likely due to the battery design, as the screen remains the same 10.1-inch size as before. This panel is slightly brighter, at 1000 nits versus the 800 nits of the first ZX10.

Getac engineers prefer a form factor that is decidedly skewed towards right-handed users, with the five buttons, including power and volume controls, located on the right front face of the tablet.

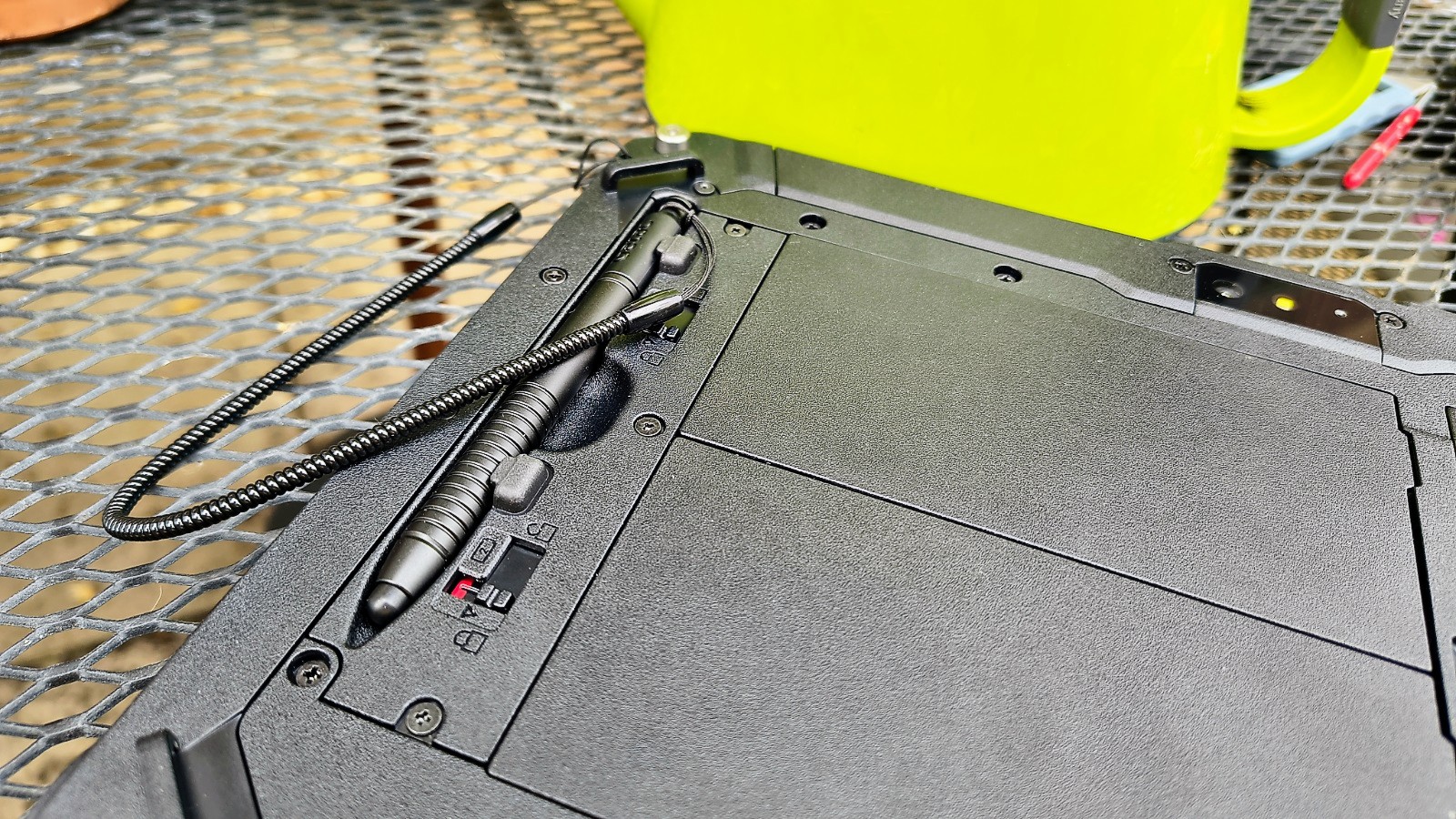

The lanyard-connected stylus is also on the right, although you could rotate the tablet to bring that and the buttons to the left.

But if you do that, then you can’t use the harness accessory, as it uses two metal studs that project proud of the top left and right corners.

The bottom edge of the tablet features an edge connector for docking the unit when it is not in use, and pass-through antenna connections.

The physical connection points along this axis are significant enough that they will lock a hinged keyboard accessory to the ZX10, turning it into an Android laptop.

Another feature of Getac hardware that I appreciate is that the ports that could potentially be impacted by moisture are hidden behind a sealed door that clicks into position when shut.

I’ve seen way too many rugged tablets that use rubber plugs for water/dust proofing, and they will ultimately perish. These Getac covers will last much longer, if not for the working life of the machine.

On our review hardware, the top edge featured a 1D/2D imager barcode reader, which was linked by default to one of the two custom buttons on the left. The other button takes a picture with the camera, but these can be altered to fit the specific use case.

Getac offers smartcard readers and NFC if you specifically need those technologies.

Like the ZX80 I previously reviewed, the screen has an anti-glare coating that makes it relatively easy to see the display even in bright sunlight. However, the filter that applies to the image softens it in a way that won’t attract drone pilots, as it tends to blur the finer details in the image, such as thin branches or wires.

This is a shame, because the 1000 nits of brightness this LumiBond display outputs ticks a lot of boxes for outdoor use in other respects.

On the rear is a slot for the stylus, two slots for batteries, and an access panel for mounting a smartcard reader. The SIM slot is inside the top battery slot, and the MicroSD card slot is in the lower battery bay. I’ll talk more about the batteries later, but the ability to charge them using an external charger and then swap over without rebooting the machine is extremely useful.

Overall, the ZX10 G2 has many positive aspects for industrial and military users who may be looking for a dedicated data capture device or a service support system.

- Design score: 4/5

Getac ZX10 G2: Hardware

- Powerhouse SoC

- Limited storage

- Dual battery options

When I reviewed the ZX80, it used a Qualcomm SM7325, aka the Snapdragon 778G 5G Mobile Platform, and again, with this machine, Getac engineers have gone with a Qualcomm SoC.

The Qualcomm Dragonwing QCS6490 is specifically designed for high-performance edge computing. It features up to 8-core Qualcomm Kryo CPUs, an integrated Qualcomm Adreno 643 GPU, and a robust AI engine (NPU + DSP), capable of achieving up to 12 TOPS.

In use, this makes the ZX10 remarkably responsive and reactive to user input, and the machine is capable of local data processing should the mission require it.

The review machine came with 8GB of LPDDR5 memory and 128GB of storage, of which only about 80GB was available after a handful of test apps were loaded.

The amount of storage does seem low, and the Getac specifications do hint that a 256GB model is available for those who don’t want to expand storage using the MicroSD card slot.

Our review machine only had a single 4870mAh battery installed, enabling the total capacity to be doubled with the addition of a second. Getac also offers an enhanced high-capacity battery that can be installed in either slot, delivering a minimum of 9740mAh. While switching to those will offer considerably longer running times, it will also make the tablet more cumbersome to carry.

In the accessories, there is an external battery charger that can keep extra batteries ready for use. Having a policy where, at the start of each shift, the batteries are swapped and placed in the charger should help avoid dead tablets.

I prefer the dual battery arrangement over the external and internal battery model used in the ZX80, because, in theory, this machine never needs to be recharged directly if it isn’t convenient. And, because each battery can be changed independently, it makes it much easier to enhance the running time with either a single extended battery or two.

If the purchaser makes the right accessory purchases, the ZX10 should be able to operate almost indefinitely, and even if away from mains power, a small collection of extra batteries should keep it operating for days at a time.

- Hardware score: 4/5

Getac ZX10 G2: Cameras

- 16.3MP sensor on the rear

- 8MP on the front

- Two cameras in total

The Getac ZX10 G2 has two cameras:

- Rear camera: 16.3MP

- Front camera: 8MP

As with other Getac hardware, the specification doesn’t detail what the sensors are for the front and rear cameras. However, even without that input, I can say with some certainty that these aren’t the best sensors I’ve seen on a tablet, and they’re a notch below what an entry-level phone was delivering in 2020.

While the Android distribution was compiled for several 16MP sensors, my prior experience suggests that the rear sensor is the Omnivision OV16A10, and the front sensor is the Omnivision OV8856.

Those assertions are based on the ZX80 cameras, as these seem identical.

The one positive feature of the rear Omnivision OV16A10 is that it records 4K video at 30 fps, although there are no frame rate controls available at this resolution. In fact, the camera application has relatively few controls, and it lacks special shooting modes.

Being simple isn’t a bad thing if the system takes care of things like exposure and focus, but the camera app here does practically nothing, even though it has an AI processor sitting idle that could easily identify the subject of an image and how best to capture it.

With still image control, you have a resolution selection and digital zoom, as well as the ability to turn the flash on or off.

To be direct, nobody using this equipment is likely to be distracted from work by the temptation to enhance their photography skills.

Like the ZX80 and its sensors, the images from these cameras are workable, but only if any image is acceptable. However, the camera doesn’t balance light or colour well, and the digital zoom is an abyss of graininess.

Evidently, no special attention was paid to the cameras on this hardware or the capture application, as it was lifted directly from a prior product without any changes.


- Camera score: 2/5

Getac ZX10 G2: Performance

- Decent SoC

- GPU is game-friendly

- Slow charging battery

| Tablet | | Getac ZX10 G2 | Getac ZX80 |
|---|---|---|---|
| SoC | | Qualcomm Dragonwing QCS6490 | Snapdragon 778G 5G |
| GPU | | Adreno 643 | Adreno 643 |
| Mem/Storage | | 8GB/128GB | 8GB/180GB |
| Battery Capacity | mAh | 4870 | 4060 + 4870 |
| Geekbench | Single | 1142 | 1137 |
| | Multi | 3044 | 3056 |
| | OpenCL | 2877 | 2891 |
| | Vulkan | 3159 | 3159 |
| GFX | Aztec Open Normal | 47 | 44 |
| | Aztec Vulkan Normal | 51 | 49 |
| | Car Chase | 44 | 41 |
| | Manhattan 3.1 | 76 | 59 |
| PCMark | 3.0 Score | 9360 | 9521 |
| | Battery Life | 8h 27m | 15h 24m |
| Charge 30 | Battery 30 mins | 31% | 18% |
| Passmark | Score | 14639 | 15029 |
| | CPU | 6902 | 7097 |
| 3DMark | Slingshot OGL | 7777 | 7781 |
| | Slingshot Ex. OGL | 6761 | Maxed |
| | Slingshot Ex. Vulkan | Maxed | Maxed |
| | Wildlife | 3387 | 3411 |
| | Steel Nomad.L | 312 | 310 |

The obvious comparison for me was to the smaller ZX80 model, which uses a similar platform and delivers nearly identical performance.

Even if you are uninterested in either of these two machines, these results demonstrate that the Dragonwing QCS6490 performs at the same level as the Snapdragon 778G 5G, also by Qualcomm. As they both feature the same memory architecture, core counts and GPU, this isn’t hugely surprising.

Where things get interesting is when we explore battery life, since the ZX10 had only a single 4870mAh battery, whereas the ZX80 had an internally integrated 4060mAh battery plus an external 4870mAh battery.

That extra internal capacity nearly doubles the operating time of the ZX80, although its internal battery can’t be swapped out and must be recharged in situ. Had Getac provided a second battery for the ZX10, I’d expect its run time to exceed that of the ZX80 by at least an hour, and probably longer.
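
As a rough check on that estimate, the sketch below scales the ZX10's measured run time by battery capacity. It assumes run time grows linearly with capacity, which real devices only approximate, and the function names are purely illustrative. The figures come from the benchmark table above.

```python
# Figures taken from the benchmark table in this review.
ZX10_SINGLE_BATTERY_MAH = 4870
ZX10_RUNTIME_MIN = 8 * 60 + 27    # 8h 27m with one battery
ZX80_RUNTIME_MIN = 15 * 60 + 24   # 15h 24m with internal + external battery


def projected_runtime_min(measured_min, measured_mah, target_mah):
    """Scale a measured run time linearly with capacity (an assumption)."""
    return measured_min * target_mah / measured_mah


def fmt(minutes):
    """Format a duration in minutes as 'Xh YYm'."""
    hours, mins = divmod(round(minutes), 60)
    return f"{hours}h {mins:02d}m"


# Hypothetical ZX10 G2 fitted with two standard 4870mAh batteries.
dual_min = projected_runtime_min(
    ZX10_RUNTIME_MIN, ZX10_SINGLE_BATTERY_MAH, 2 * ZX10_SINGLE_BATTERY_MAH
)
margin_min = dual_min - ZX80_RUNTIME_MIN

print("Projected dual-battery run time:", fmt(dual_min))
print("Margin over the ZX80:", fmt(margin_min))
```

Under that linear assumption, two standard batteries would give roughly 16h 54m, about 90 minutes more than the ZX80 managed.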

One result here is highly misleading, and that’s the recharge percentage after 30 minutes. The ZX80 recovered approximately 18% of its total 8930mAh, or about 1607mAh. The ZX10 recovered 31% of its 4870mAh, or about 1510mAh. Since the ZX80 was charging two batteries rather than one, it’s safe to conclude that the ZX10 offers no charging improvement over the ZX80.
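
The arithmetic behind that comparison is simple enough to sketch. The percentages are the rounded values from the benchmark table, so the results are approximate, and the helper name is just for illustration.

```python
def recovered_mah(total_mah, percent_recovered):
    """Charge regained, in mAh, given total capacity and percent recovered."""
    return total_mah * percent_recovered / 100


# ZX80: internal 4060mAh plus external 4870mAh, 18% recovered in 30 minutes.
zx80_mah = recovered_mah(4060 + 4870, 18)

# ZX10 G2: single 4870mAh battery, 31% recovered in 30 minutes.
zx10_mah = recovered_mah(4870, 31)

print(f"ZX80 recovered about {zx80_mah:.0f}mAh")
print(f"ZX10 G2 recovered about {zx10_mah:.0f}mAh")
```

In absolute terms the two tablets regain a similar amount of charge in half an hour, which is why the higher percentage on the ZX10 G2 flatters it.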

This is a weakness of this design, since it takes more than 90 minutes to fully recharge a 4870mAh battery, and it would be safe to assume double that if you have the second battery. The draw on the power supply is only 20W, which is why it isn’t faster.

I’ve seen phones and tablets with 25000mAh batteries that can recharge much faster than this using 66W power supplies. Getac may have taken the view that slower charging will extend battery life, and therefore, is in the customer’s interest. However, I found it curious that the Chicony-branded PSU included with the machine is rated for 20V at 65W, even though the tablet can only take about a third of that power when recharging.

Overall, this is a powerful tablet that offers performance beyond what most tablet makers are currently providing, with the possible exception of the Unihertz Tank Pad 8849 and its Dimensity 8200 platform.

- Performance score: 4/5

Getac ZX10 G2: Final verdict

I liked this design substantially more than the Getac ZX80, as I think it better balances the user experience with the capabilities. However, it’s not without some issues, most noticeably that it’s launched with a three-year-old version of Android.

Also, Getac doesn’t see camera sensors as a selling point, as the ones in this tablet are below what you might expect in a budget phone.

The strengths here include a solid computing platform, interchangeable hot-swap batteries, and a fantastic selection of accessories for docking and carrying the tablet throughout the day. It also comes with a warranty where Getac won’t argue with you about accidental damage for three years.

However, the cost of well-made and engineered equipment, which can withstand being in a warehouse or garden centre, is disturbingly high.

It’s a matter of convincing those senior people who control budgets that devices like the Getac ZX10 G2 ultimately save money with fewer issues and downtime, since the investment is likely to be substantial when deploying these into any decent-sized business.

Should I buy a Getac ZX10 G2?

| Attributes | Notes | Rating |
|---|---|---|
| Value | Expensive for an Android tablet | 3/5 |
| Design | Built to take knocks and keep working | 4/5 |
| Hardware | Powerful SoC, dual hot-swap batteries, tons of accessories | 4/5 |
| Camera | Poor sensors and grainy results | 2/5 |
| Performance | Powerful platform but slow charging | 4/5 |
| Overall | Highly durable, but you pay for the privilege | 4/5 |

Buy it if...

Your environment is harsh

Most brands claim IP68, IP69K dust/water resistance, and MIL-STD-810H Certification, but this equipment is built to withstand much more than these dubious endorsements.

It comes with a three-year warranty that includes coverage for accidental damage, which is a testament to the abuse these devices can withstand.

You need a powerful platform

The processor in this Android tablet is at the top end of what is available and delivers a stellar user experience. With this much power available, it’s possible to locally process data before sending it to the Cloud.

Don't buy it if...

You are working on a budget

The price of the tablet is high, and once you’ve included a keyboard, extra batteries, an off-line battery charger and other accessories, the total package might run to $2000 or more. There are more affordable options that offer you more for less.

You need decent photography

The camera sensors in this device are like going back to the past for most Android phone and even tablet users. The results aren’t good, and it’s a weakness in the Getac tablets that I’ve observed so far.

Also Consider

Unihertz Tank Pad 8849

Larger and slightly heavier than the Getac ZX10 G2, this is a powerful Android tablet featuring an impressive 21000 mAh battery and the latest 50MP Sony IMX766 camera sensor. Although it may not offer the accessory selection of the ZX10 G2, at around $600, it’s nearly half the price and a better all-around performer.

Read our full Unihertz Tank Pad 8849 review

Getac ZX80 Rugged Android Tablet

Another super-robust design from Getac aimed at tough environment use. It uses a different Qualcomm SoC, and has a wide selection of accessories, including replaceable batteries.

However, like its ZX10 G2 brother, it’s on the expensive side, so it’s not an impulse purchase.

For more durable devices, we've reviewed all the best rugged phones, the best rugged laptops, and the best rugged hard drives.