Nvidia GeForce RTX 5070 Ti: Two-minute review

The Nvidia GeForce RTX 5070 Ti had a high bar to clear after the mixed reception of the Nvidia GeForce RTX 5080 last month, especially among enthusiasts.

And while there are things I fault the RTX 5070 Ti for, there's no doubt that it has taken the lead as the best graphics card most people can buy right now, assuming that scalpers don't get there first.

The RTX 5070 Ti starts at $749 / £729 (about AU$1,050), making its MSRP a good bit cheaper than the launch price of its predecessor, the Nvidia GeForce RTX 4070 Ti, as well as that of the buffed-up Nvidia GeForce RTX 4070 Ti Super.

The fact that the RTX 5070 Ti beats both of those cards handily in terms of performance would normally be enough to get it high marks, but this card even ekes out a win over the Nvidia GeForce RTX 4080 Super, shooting it nearly to the top of the best Nvidia graphics card lists.

As one of the best 4K graphics cards I've ever tested, it isn't without faults, but we're really only talking about the fact that Nvidia isn't releasing a Founders Edition card for this one, and that's unfortunate for a couple of reasons.

For one, and probably most importantly, without a Founders Edition card guaranteed to sell for MSRP directly from Nvidia's website, the MSRP for this card is just a suggestion. And without an MSRP card from Nvidia keeping AIB partners onside, it'll be hard to find a card at Nvidia's $749 price tag, reducing its value proposition.

Also, because there's no Founders Edition, Nvidia's dual pass-through design to keep the card cool will pass the 5070 Ti by. If you were hoping that the RTX 5070 Ti might be SFF-friendly, I simply don't see how the RTX 5070 Ti fits into that unless you stretch the meaning of small form factor until it hurts.

Those aren't small quibbles, but given everything else the RTX 5070 Ti brings to the table, it does feel like I'm stretching a bit to find something bad to say about this card for balance.

For the vast majority of buyers out there looking for outstanding 4K performance at a relatively approachable MSRP, the Nvidia GeForce RTX 5070 Ti is the card you're going to want to buy.

Nvidia GeForce RTX 5070 Ti: Price & availability

- How much is it? MSRP is $749/£729 (about AU$1,050), but with no Founders Edition, third-party cards will likely be higher

- When can you get it? The RTX 5070 Ti goes on sale February 20, 2025

- Where is it available? The RTX 5070 Ti will be available in the US, UK, and Australia at launch

The Nvidia GeForce RTX 5070 Ti goes on sale on February 20, 2025, starting at $749/£729 (about AU$1,050) in the US, UK, and Australia, respectively.

Unlike the RTX 5090 and RTX 5080, there is no Founders Edition card for the RTX 5070 Ti, so no version of this card is guaranteed to sell at MSRP, which does complicate things given the scalping frenzy we've seen for the previous RTX 50 series cards.

While stock of the Founders Edition RTX 5090 and RTX 5080 might be hard to find even from Nvidia, there is a place, at least, where you could theoretically buy those cards at MSRP. No such luck with the RTX 5070 Ti, which is a shame.

The 5070 Ti's MSRP does at least come in under the launch MSRPs of both the RTX 4070 Ti and RTX 4070 Ti Super, neither of which had Founders Edition cards, so stock and pricing will hopefully stay within the range those cards have been selling in.

The 5070 Ti's MSRP puts it at the lower end of the enthusiast class. While we haven't seen the price for the AMD Radeon RX 9070 XT yet, it's unlikely that AMD's competing RDNA 4 GPU will sell for much less than the RTX 5070 Ti. Still, if you're not in a hurry, it might be worth waiting a month or two to see what AMD has to offer in this range before deciding which is the better buy.

- Value: 4 / 5

Nvidia GeForce RTX 5070 Ti: Specs

- GDDR7 VRAM and PCIe 5.0

- Slight bump in power consumption

- More memory than its direct predecessor

Like the rest of the Nvidia Blackwell GPU lineup, there are some notable advances with the RTX 5070 Ti over its predecessors.

First, the RTX 5070 Ti features faster GDDR7 memory and 4GB more VRAM than the RTX 4070 Ti's 12GB. That larger, faster memory pool can process high-resolution texture files more quickly, making the RTX 5070 Ti far more capable at 4K.

Also of note is its 256-bit memory interface, which is 33.3% wider than the RTX 4070 Ti's 192-bit interface and equal to that of the RTX 4070 Ti Super. 64 extra bits might not seem like a lot, but just like trying to fit a couch through a door, even an extra inch or two of space can be the difference between moving the whole thing through at once or having to do it in parts, which translates into additional work on both ends.
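To put some rough numbers on the couch-and-door analogy, here's a minimal sketch of the arithmetic. The bus widths follow from the figures above (256-bit, and 64 bits fewer for the RTX 4070 Ti). The per-pin data rates are an assumption on my part, taken from commonly published spec sheets rather than from anything I measured, and the function names are just illustrative.

```python
def bus_width_increase(new_bits: int, old_bits: int) -> float:
    """Relative increase in memory bus width, as a percentage."""
    return (new_bits - old_bits) / old_bits * 100


def peak_bandwidth_gb_s(bus_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: bus width times per-pin rate, bits to bytes."""
    return bus_bits * gbps_per_pin / 8


# Bus widths: 256-bit (RTX 5070 Ti) vs. 192-bit (RTX 4070 Ti).
print(round(bus_width_increase(256, 192), 1))  # 33.3

# ASSUMPTION: per-pin data rates are published spec values, not review data.
print(peak_bandwidth_gb_s(256, 28.0))  # RTX 5070 Ti, GDDR7 at 28 Gbps -> 896.0
print(peak_bandwidth_gb_s(192, 21.0))  # RTX 4070 Ti, GDDR6X at 21 Gbps -> 504.0
```

These are theoretical peaks, so real-world throughput will be lower, but they show how a wider bus and faster memory compound each other.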

There's also the new PCIe 5.0 x16 interface, which speeds up communication between the graphics card, your processor, and your SSD. If you have a PCIe 5.0 capable motherboard, processor, and SSD, just make note of how many PCIe 5.0 lanes you have available.

The RTX 5070 Ti will take up 16 of them, so if you only have 16 lanes available and you have a PCIe 5.0 SSD, the RTX 5070 Ti is going to get those lanes by default, throttling your SSD to PCIe 4.0 speeds. Some motherboards will let you set PCIe 5.0 priority, if you have to make a choice.

The RTX 5070 Ti uses slightly more power than its predecessors, but in my testing its maximum power draw came in at just under the card's 300W TDP.

As for the GPU inside the RTX 5070 Ti, it's built using TSMC's N4P process node, which is a refinement of the TSMC N4 node used by its predecessors. While not a full generational jump in process tech, the N4P process does offer better efficiency and a slight increase in transistor density.

- Specs & features: 5 / 5

Nvidia GeForce RTX 5070 Ti: Design & features

- No Nvidia Founders Edition card

- No dual-pass-through cooling (at least for now)

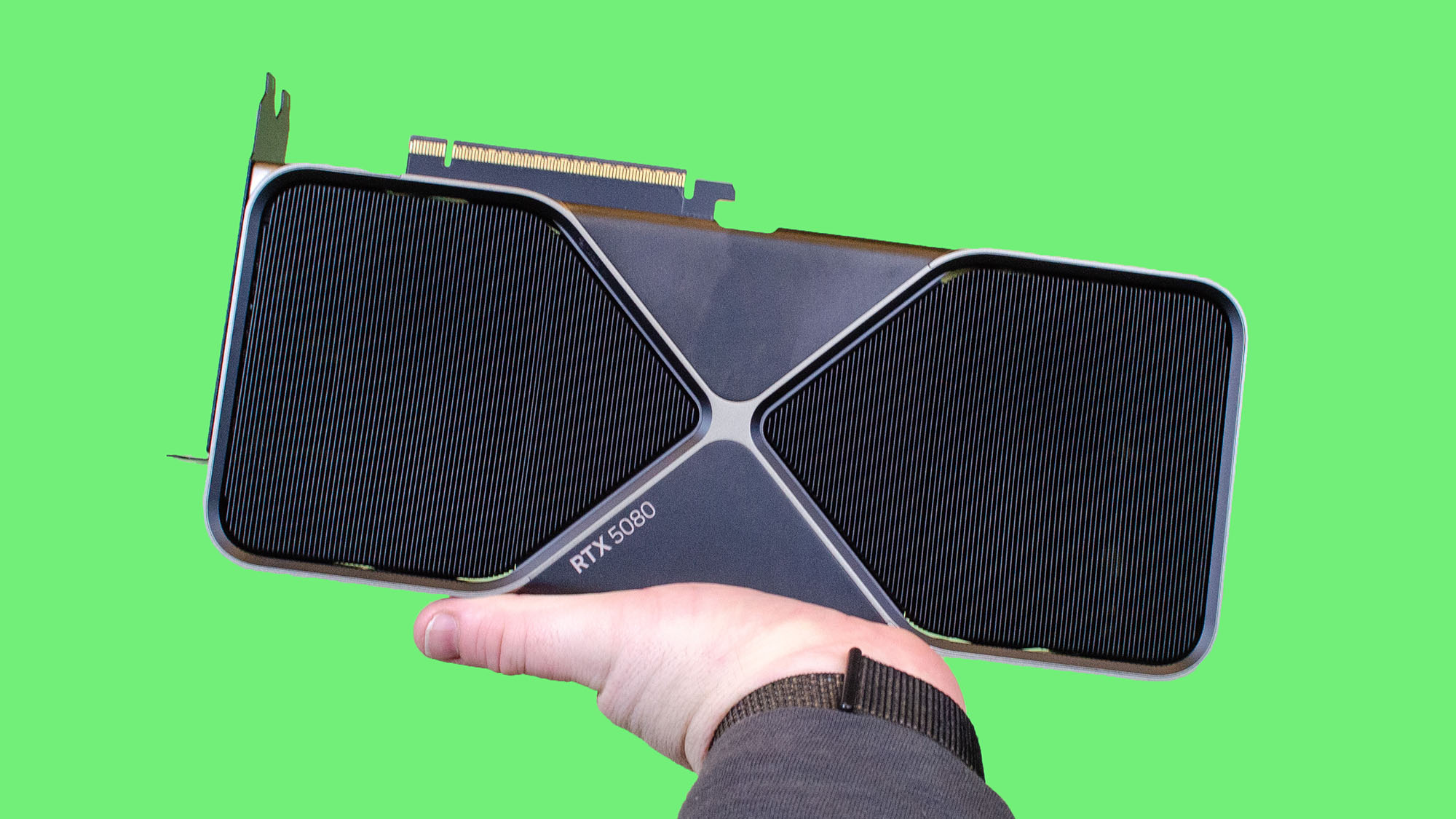

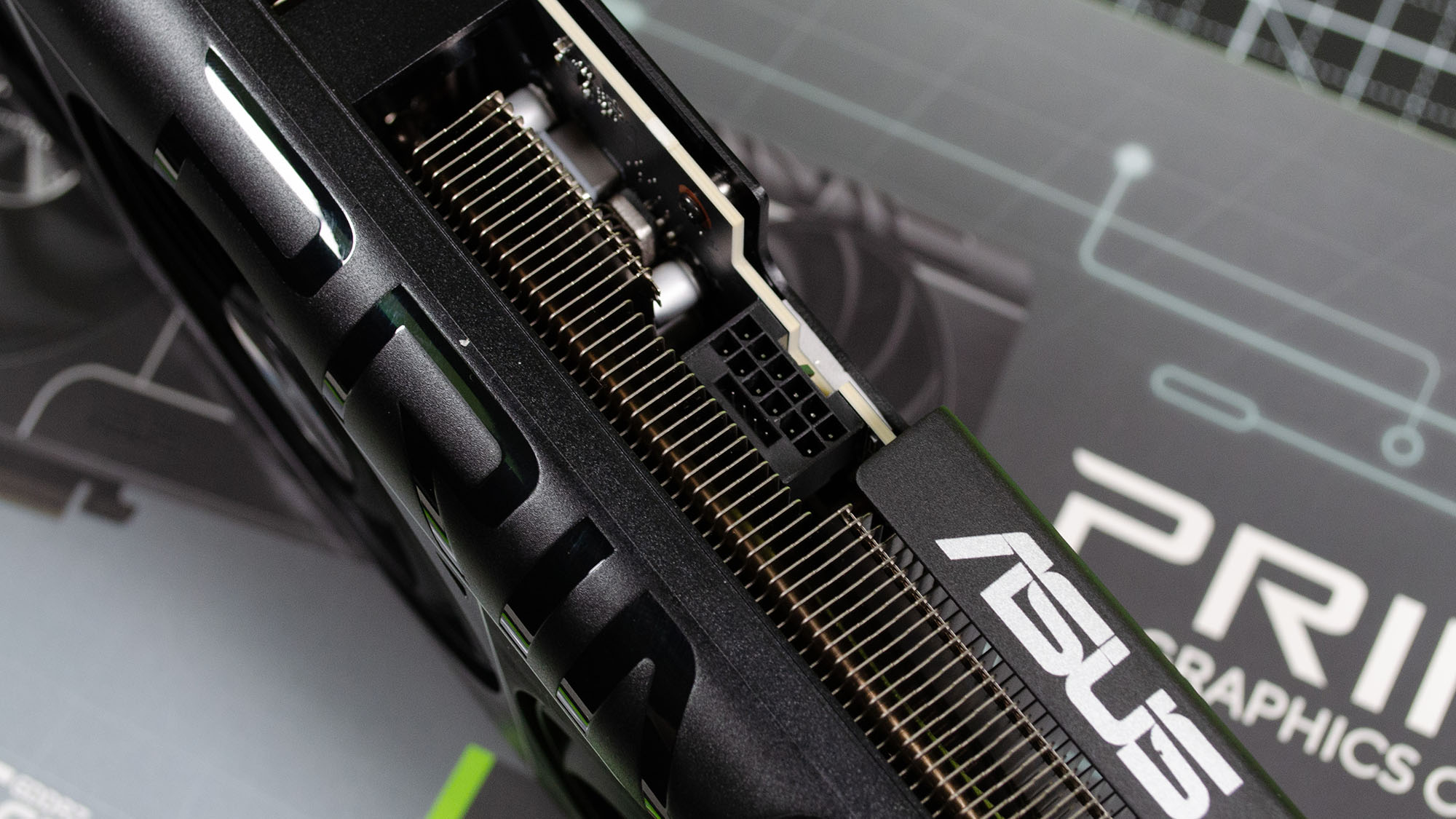

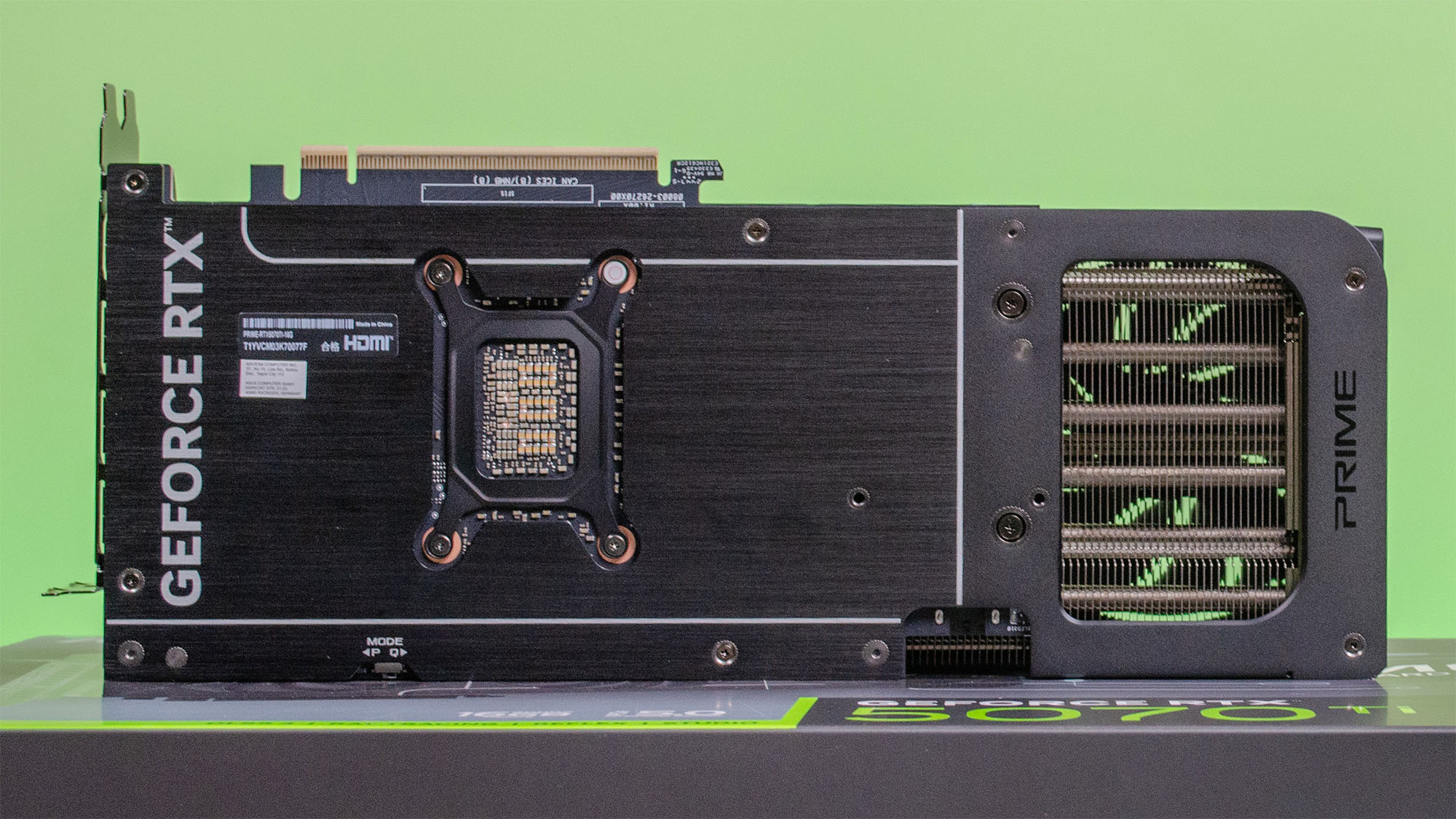

There is no Founders Edition card for the RTX 5070 Ti, so the RTX 5070 Ti you end up with may look radically different than the one I tested for this review, the Asus Prime GeForce RTX 5070 Ti.

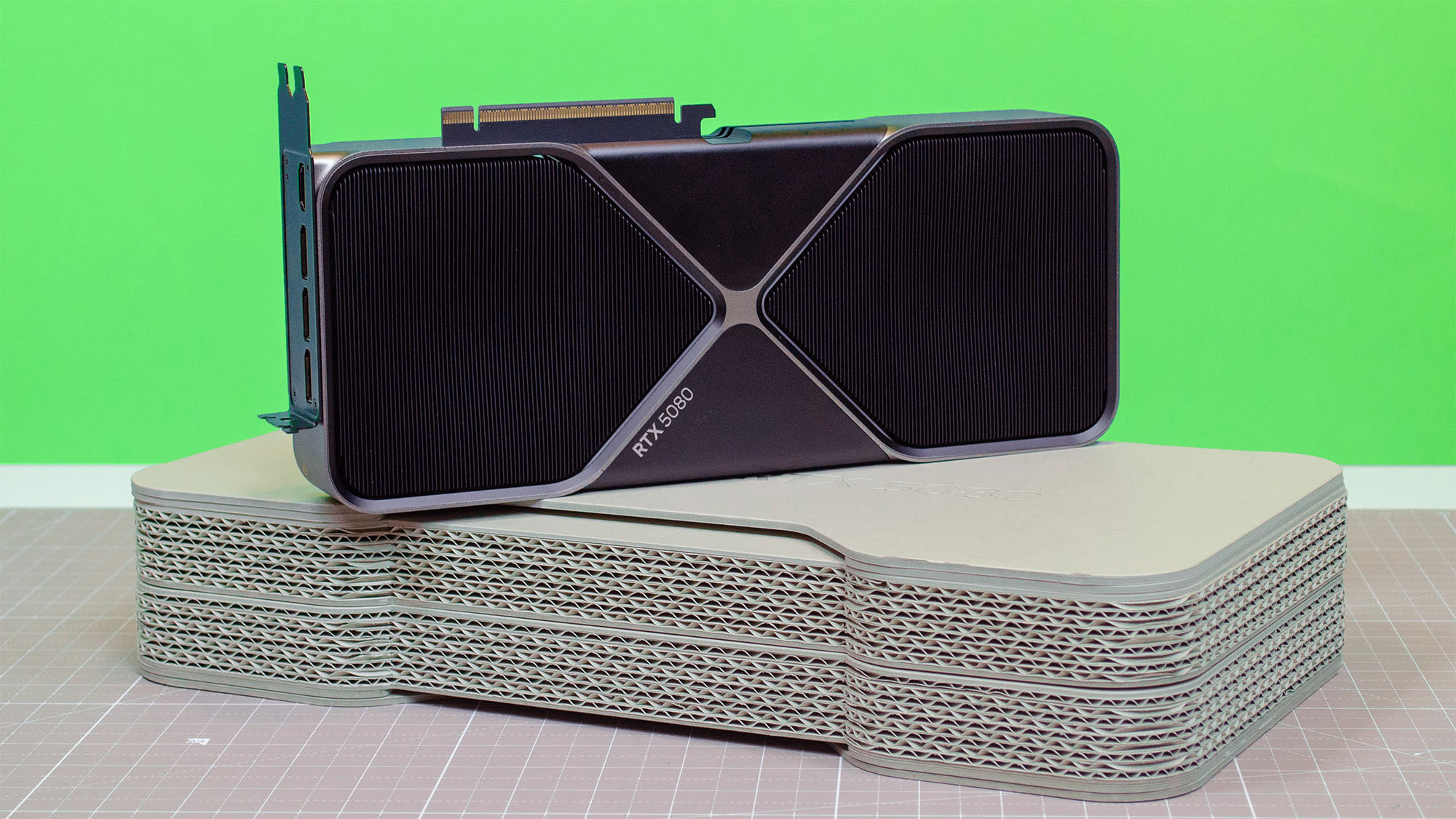

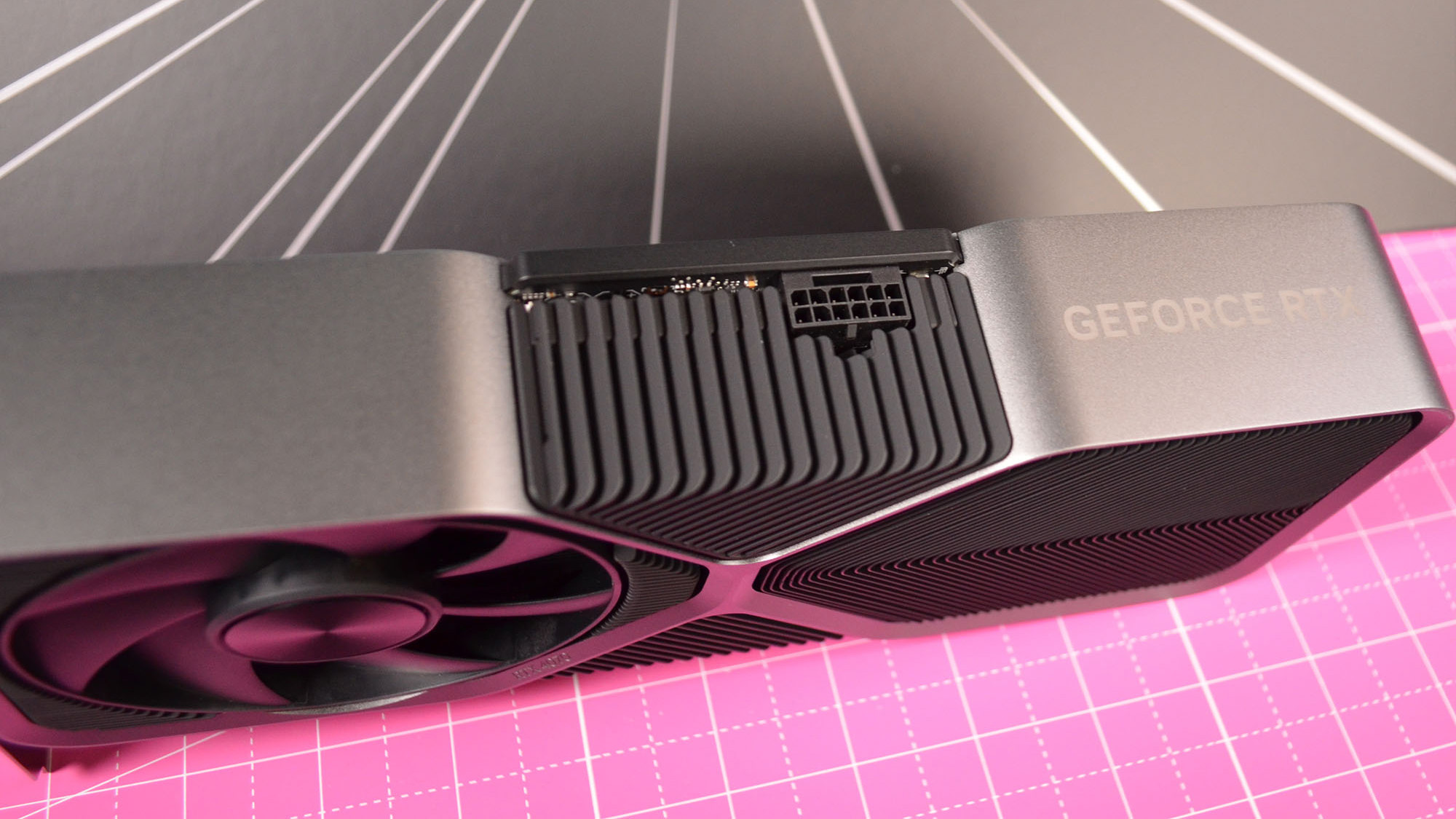

Whatever partner card you choose though, it's likely to be a chonky card given the card's TDP, since 300W of heat needs a lot of cooling. While the RTX 5090 and RTX 5080 Founders Edition cards featured the innovative dual pass-through design (which dramatically shrank the card's width), it's unlikely you'll find any RTX 5070 Ti cards in the near future that feature this kind of cooling setup, if ever.

With that groundwork laid, you're going to have a lot of choices when it comes to cooling setups, shroud designs, and lighting, though more feature-rich cards will likely be more expensive, so make sure you consider the added cost when weighing your options.

As for the Asus Prime GeForce RTX 5070 Ti, the sleek shroud of the card lacks the RGB that a lot of gamers like for their builds, but for those of us who are kind of over RGB, the Prime's design is fantastic and easily worked into any typical mid-tower case.

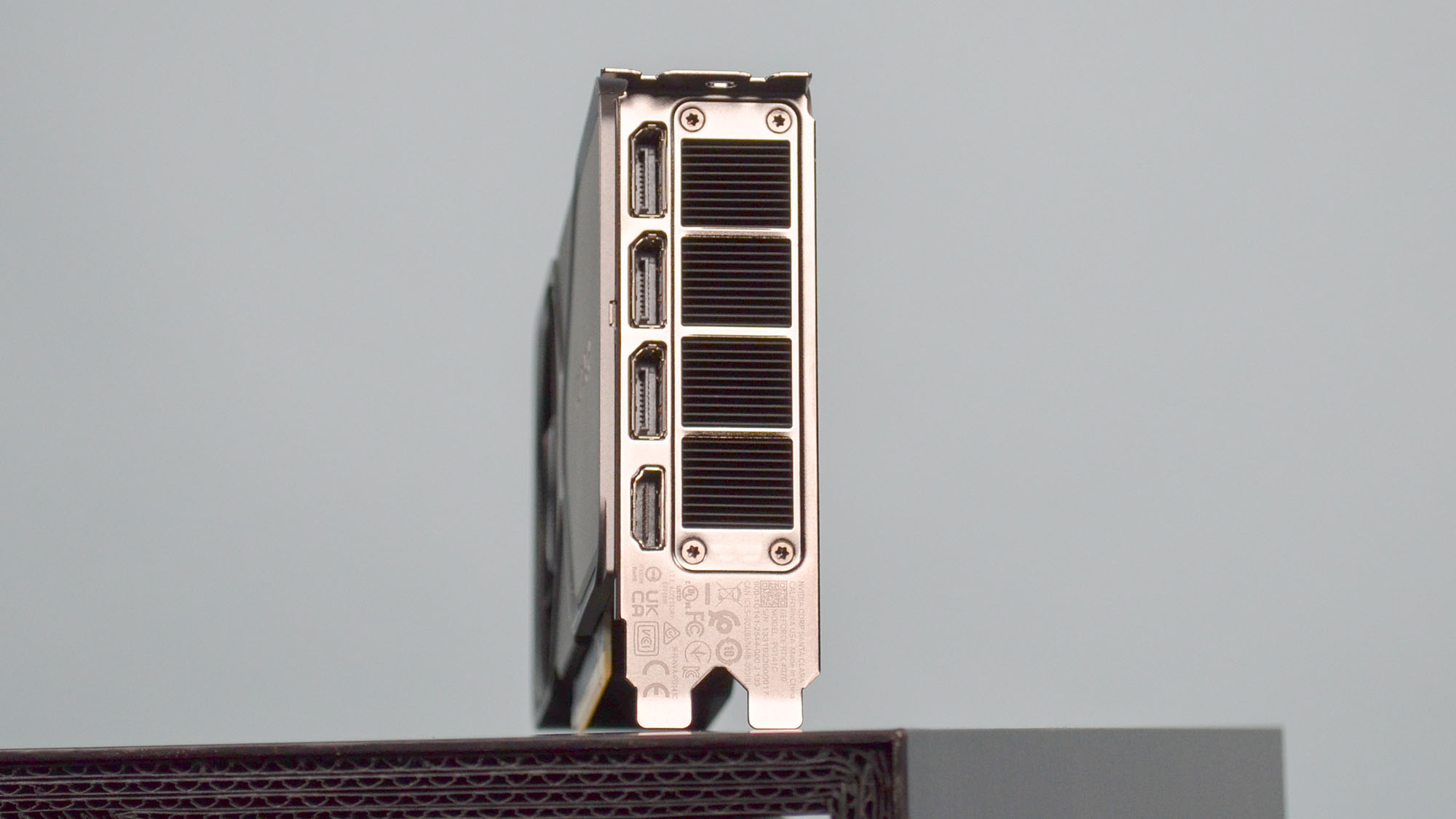

The Prime RTX 5070 Ti features a triple-fan cooling setup, with one of those fans having complete passthrough over the heatsink fins. There's a protective backplate and stainless bracket over the output ports.

The 16-pin power connector rests along the card's backplate, so even if you invested in a 90-degree angled power cable, you'll still be able to use it, assuming your power supply meets the recommended 750W listed on Asus's website. There's a 3-to-1 adapter included with the card, as well, for those who haven't upgraded to an ATX 3.0 PSU yet.

- Design: 4 / 5

Nvidia GeForce RTX 5070 Ti: Performance

- RTX 4080 Super-level performance

- Massive improvement over the RTX 4070 Ti Super

- Added features like DLSS 4 with Multi-Frame Generation

The charts shown below offer the most recent data I have for the cards tested for this review. They may change over time as more card results are added and cards are retested. The 'average of all cards tested' includes cards not shown in these charts for readability purposes.

And so we come to the reason we're all here, which is this card's performance.

Given the...passionate...debate over the RTX 5080's underwhelming gen-on-gen uplift, enthusiasts will be very happy with the performance of the RTX 5070 Ti, at least as far as it relates to the last-gen RTX 4070 Ti and RTX 4070 Ti Super.

Starting with synthetic scores, at 1080p, both the RTX 4070 Ti and RTX 5070 Ti are so overpowered that they get close to CPU-locking on 3DMark's 1080p tests, Night Raid and Fire Strike, though the RTX 5070 Ti does come out about 14% ahead. The RTX 5070 Ti begins to pull away at higher resolutions and once you introduce ray tracing into the mix, with roughly 30% better performance in higher-level tests like Solar Bay, Steel Nomad, and Port Royal.

In terms of raw compute performance, the RTX 5070 Ti scores about 25% better in Geekbench 6 than the RTX 4070 Ti and about 20% better than the RTX 4070 Ti Super.

In creative workloads like Blender Benchmark 4.30, the RTX 5070 Ti pulls way ahead of its predecessors, though the 5070 Ti, 4070 Ti Super, and 4070 Ti all pretty much max out what a GPU can add to my Handbrake 1.9 4K to 1080p encoding test, with all three cards cranking out about 220 FPS encoded on average.

Starting with 1440p gaming, the gen-on-gen improvement of the RTX 5070 Ti over the RTX 4070 Ti is a respectable 20%, even without factoring in DLSS 4 with Multi-Frame Generation.

The biggest complaint that some have about MFG is that if the base frame rate isn't high enough, you'll end up with controls that can feel slightly sluggish, even though the visuals you're seeing are much more fluid.

Fortunately, outside of turning ray tracing to its max settings and leaving Nvidia Reflex off, you're not really going to need to worry about that. In all but one of the games I tested at native 1440p with ray tracing, the RTX 5070 Ti's minimum FPS pretty much hit or exceeded 60 FPS, often by a lot.

Only F1 2024 had a lower-than-60 minimum FPS at native 1440p with max ray tracing, and even then, it still managed to stay above 45 FPS, which is fast enough that most players won't notice any added input latency in practice. For 1440p gaming, then, there's absolutely no reason not to turn on MFG whenever it is available, since it can substantially increase frame rates, in some cases doubling or even tripling them without issue.
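If it helps to see why the base frame rate matters so much for MFG, here's a simplified sketch. It assumes an idealized model where generated frames multiply what you see on screen while responsiveness stays tied to the natively rendered frames. That's my simplification, not how Nvidia describes the pipeline, and it ignores frame generation overhead and Reflex entirely.

```python
def frame_time_ms(fps: float) -> float:
    """Time between frames, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def displayed_fps(base_fps: float, multiplier: int) -> float:
    """Idealized on-screen frame rate with frame generation (no overhead modeled)."""
    return base_fps * multiplier


# 45 FPS is the lowest minimum I saw at native 1440p; 60 FPS is the usual target.
for base in (45.0, 60.0):
    for mult in (2, 3):
        shown = displayed_fps(base, mult)
        print(
            f"base {base:.0f} FPS x{mult}: ~{shown:.0f} FPS shown, "
            f"rendered frames still ~{frame_time_ms(base):.1f} ms apart"
        )
```

The takeaway is that the on-screen number climbs quickly, but the gap between rendered frames doesn't shrink, which is why a healthy base frame rate matters before you turn MFG on.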

For 4K gaming, the RTX 5070 Ti's native performance is spectacular, with nearly every title tested hitting 60 FPS or greater on average, and those that fell short only doing so by 4-5 frames.

Compared to the RTX 4070 Ti and RTX 4070 Ti Super, the faster memory and expanded 16GB VRAM pool definitely turn up for the RTX 5070 Ti at 4K, delivering about 31% better overall average FPS than the RTX 4070 Ti and about 23% better average FPS than the RTX 4070 Ti Super.
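One thing worth noting about percentages like these is that they don't subtract cleanly. Here's a quick sketch of how the two uplift figures relate to each other, assuming both are measured against the same RTX 5070 Ti average. The function name is just for illustration.

```python
def implied_uplift(uplift_over_a: float, uplift_over_b: float) -> float:
    """If card X is uplift_over_a % faster than A and uplift_over_b % faster
    than B, return how much faster B is than A, in percent."""
    ratio = (1 + uplift_over_a / 100) / (1 + uplift_over_b / 100)
    return (ratio - 1) * 100


# 5070 Ti is ~31% ahead of the 4070 Ti and ~23% ahead of the 4070 Ti Super at 4K.
print(round(implied_uplift(31, 23), 1))  # 6.5
```

So the RTX 4070 Ti Super works out to roughly 6.5% faster than the RTX 4070 Ti at 4K by these numbers, not the 8% you'd get from simply subtracting one figure from the other.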

In fact, the average 4K performance for the RTX 5070 Ti pulls up pretty much dead even with the RTX 4080 Super's performance, and about 12% better than the AMD Radeon RX 7900 XTX at 4K, despite the latter having 8GB more VRAM.

Like every other graphics card besides the RTX 4090, RTX 5080, and RTX 5090, playing at native 4K with ray tracing maxed out is going to kill your FPS. To the 5070 Ti's credit, though, minimum FPS never dropped so low as to turn things into a slideshow, even if the 5070 Ti's 25 FPS minimum in Cyberpunk 2077 was noticeable.

Turning on DLSS in these cases is a must, even if you skip turning on MFG, but the RTX 5070 Ti's balanced upscaled performance is a fantastic experience.

Leave ray tracing turned off (or set to a lower setting), however, and MFG definitely becomes a viable way to max out your 4K monitor's refresh rate for seriously fluid gaming.

Overall then, the RTX 5070 Ti delivers substantial high-resolution gains gen-on-gen, which should make enthusiasts happy, without having to increase its power consumption all that much.

Of all the graphics cards I've tested over the years, and especially over the past six months, the RTX 5070 Ti is pretty much the perfect balance for whatever you need it for, and if you can get it at MSRP or reasonably close to MSRP, it's without a doubt the best value for your money of any of the current crop of enthusiast graphics cards.

- Performance: 5 / 5

Should you buy the Nvidia GeForce RTX 5070 Ti?

Buy the Nvidia GeForce RTX 5070 Ti if...

You want the perfect balance of 4K performance and price

Assuming you can find it at or close to MSRP, the 4K value proposition on this card is the best you'll find for an enthusiast graphics card.

You want a fantastic creative graphics card on the cheap

While the RTX 5070 Ti doesn't have the RTX 5090's creative chops, it's a fantastic pick for 3D modelers and video professionals looking for a (relatively) cheap GPU.

You want Nvidia's latest DLSS features without spending a fortune

While this isn't the first Nvidia graphics card to feature DLSS 4 with Multi Frame Generation, it is the cheapest, at least until the RTX 5070 launches in a month or so.

Don't buy it if...

You want the absolute best performance possible

The RTX 5070 Ti is a fantastic performer, but the RTX 5080, RTX 4090, and RTX 5090 all offer better raw performance if you're willing to pay more for it.

You're looking for something more affordable

While the RTX 5070 Ti has a fantastic price for an enthusiast-grade card, it's still very expensive, especially once scalpers get involved.

You only plan on playing at 1440p

If you never plan on playing at 4K this generation, you might want to see if the RTX 5070 or AMD Radeon RX 9070 XT and RX 9070 cards are a better fit.

Also consider

Nvidia GeForce RTX 5080

While more expensive, the RTX 5080 features fantastic performance and value for under a grand at MSRP.

Read the full Nvidia GeForce RTX 5080 review

Nvidia GeForce RTX 4080 Super

While this card might not be on the store shelves for much longer, the RTX 5070 Ti matches the RTX 4080 Super's performance, so if you can find the RTX 4080 Super at a solid discount, it might be the better pick.

Read the full Nvidia GeForce RTX 4080 Super review

How I tested the Nvidia GeForce RTX 5070 Ti

- I spent about a week with the RTX 5070 Ti

- I used my complete GPU testing suite to analyze the card's performance

- I tested the card in everyday, gaming, creative, and AI workload usage

Here are the specs on the system I used for testing:

Motherboard: ASRock Z790i Lightning WiFi

CPU: Intel Core i9-14900K

CPU Cooler: Gigabyte Aorus Waterforce II 360 ICE

RAM: Corsair Dominator DDR5-6600 (2 x 16GB)

SSD: Crucial T705

PSU: Thermaltake Toughpower PF3 1050W Platinum

Case: Praxis Wetbench

I spent about a week testing the Nvidia GeForce RTX 5070 Ti, using it mostly for creative work and gaming, including titles like Indiana Jones and the Great Circle and Avowed.

I also used my updated suite of benchmarks including industry standards like 3DMark and Geekbench, as well as built-in gaming benchmarks like Cyberpunk 2077 and Dying Light 2.

I also test all of the competing cards in a given card's market class using the same test bench setup throughout so I can fully isolate GPU performance across various, repeatable tests. I then take geometric averages of the various test results (which better insulates the average from being skewed by tests with very large test results) to come to comparable scores for different aspects of the card's performance. I give more weight to gaming performance than creative or AI performance, and performance is given the most weight in how final scores are determined, followed closely by value.
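For anyone curious why I use geometric rather than arithmetic averages, here's a small sketch. The scores below are made up purely for illustration and aren't results from this review. It uses Python's standard `statistics` module.

```python
from statistics import geometric_mean, mean

# HYPOTHETICAL scores for two cards across three tests. The third test
# reports much larger numbers than the other two (think a synthetic score
# sitting next to two FPS results).
card_a = [60.0, 120.0, 9000.0]
card_b = [66.0, 132.0, 8000.0]

# Arithmetic mean: the big-number test dominates, so card A looks better
# even though card B wins two of the three tests by 10%.
print("arithmetic:", mean(card_a), mean(card_b))

# Geometric mean: each test contributes proportionally, so card B's two
# 10% wins outweigh its single ~11% loss.
print("geometric:", geometric_mean(card_a), geometric_mean(card_b))
```

With the arithmetic mean, card A comes out on top purely because of the one test with large numbers. With the geometric mean, card B edges ahead, which better reflects how the cards compare across the whole suite.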

I've been testing GPUs, PCs, and laptops for TechRadar for nearly five years now, with more than two dozen graphics card reviews under my belt in the past three years alone. On top of that, I have a master's degree in Computer Science and have been building PCs and gaming on PCs for most of my life, so I am well qualified to assess the value of a graphics card and whether it's worth your time and money.

- Originally reviewed February 2025