Acer Predator Triton 17 X: Two-minute review

There's an argument to be made for packing in as much power as possible when it comes to the best gaming laptops, and that's the space the Acer Predator Triton 17 X occupies. For the most part, it forgoes being the sleekest and smallest of its kind to go all-in on pushing boundaries for those with deep enough pockets to take the plunge.

Priced at $3,599.99 / £3,299.99 / AU$7,999, the Acer Predator Triton 17 X isn't a budget pick by any means, but that's the cost of packing in enough horsepower to give even the best gaming PCs a run for their money. The mobile RTX 4090 doesn't match what its desktop counterpart can do, but the performance gap is acceptable; you can think of it as roughly similar to a desktop RTX 4080.

Where this rig stands out from competitors is its display. The Triton 17 X features a staggering 250Hz refresh rate on a 1600p screen. Its 16:10 aspect ratio means you get more screen real estate for gaming, and the results are impressive. Fortunately, the components inside this Predator laptop mean you'll be able to push even the latest and most demanding games to superfast frame rates.

No corners have been cut with the quality-of-life features here, either. This laptop is armed with a six-speaker setup, an excellent keyboard, and a healthy port selection, so even when you're not gaming, you'll have a good experience. Just keep in mind that the Triton 17 X is not the most practical notebook with its 3kg / 6.6lbs heft, so it's unlikely to be the machine you carry to work or school every day.

Compounding this is the majorly disappointing battery life. The Acer Predator Triton 17 X lasts around two hours at best when enjoying media playback or browsing the web, and about an hour when getting stuck into one of the latest games. You'll want the charger nearby, but if you can overlook these issues then there's a stellar machine underneath it all.

Acer Predator Triton 17 X: Price and availability

- How much does it cost? $3,599.99 / £3,299.99 / AU$7,999

- When is it available? It's out now

- Where can you get it? In the US, UK and Australia

The Acer Predator Triton 17 X is one of the pricier gaming laptops on the market, coming in above the $3,000 / £3,000 mark (and at AU$8,000). Considering the hardware inside, that shouldn't come as a huge surprise, though. Acer isn't pulling any punches from the choice of CPU and GPU, through to the display, RAM, and storage. Simply put, it's far from a cheap gaming laptop, but if you want to be on the bleeding edge and have the cash to splash then it could be worthwhile.

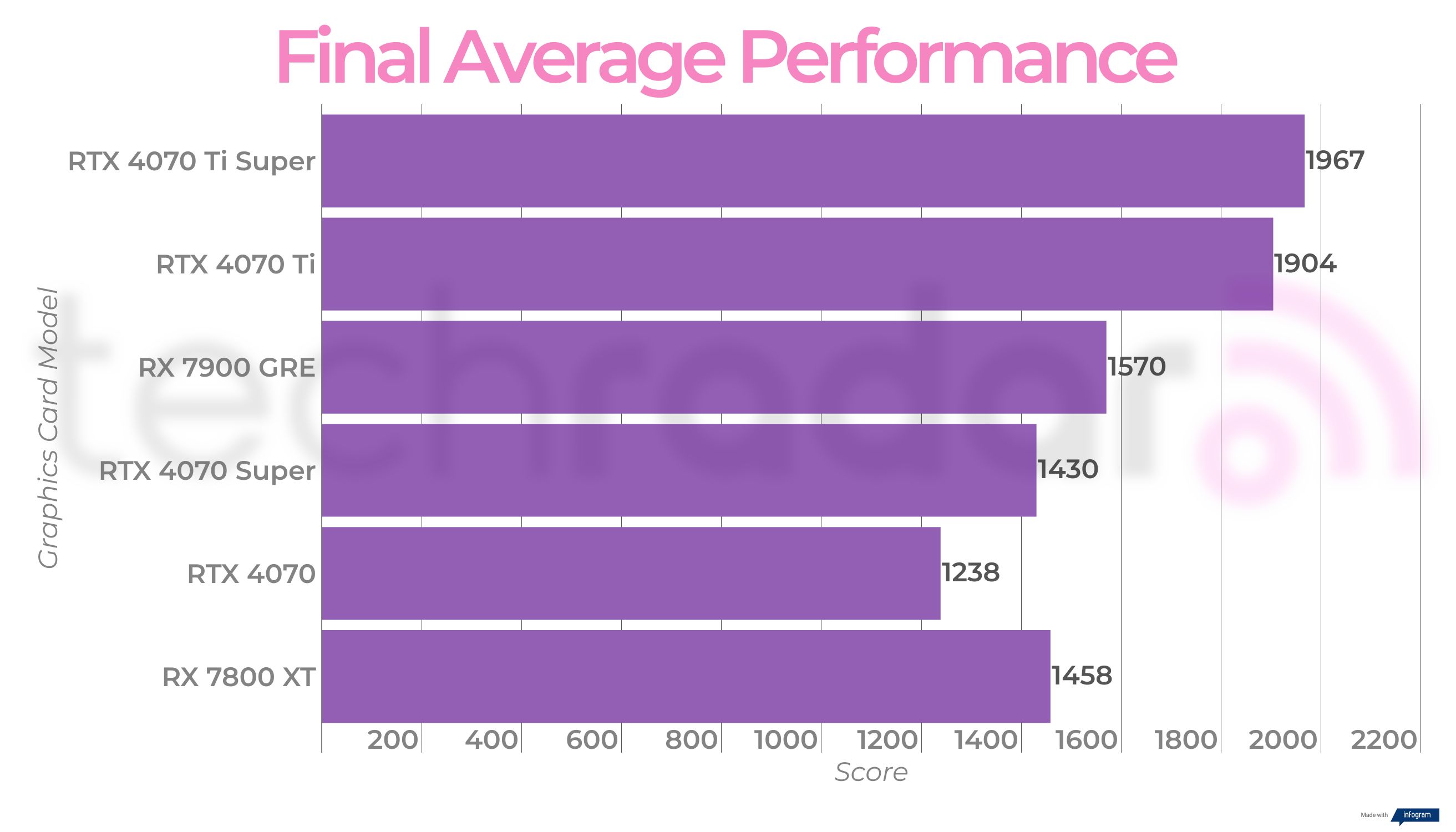

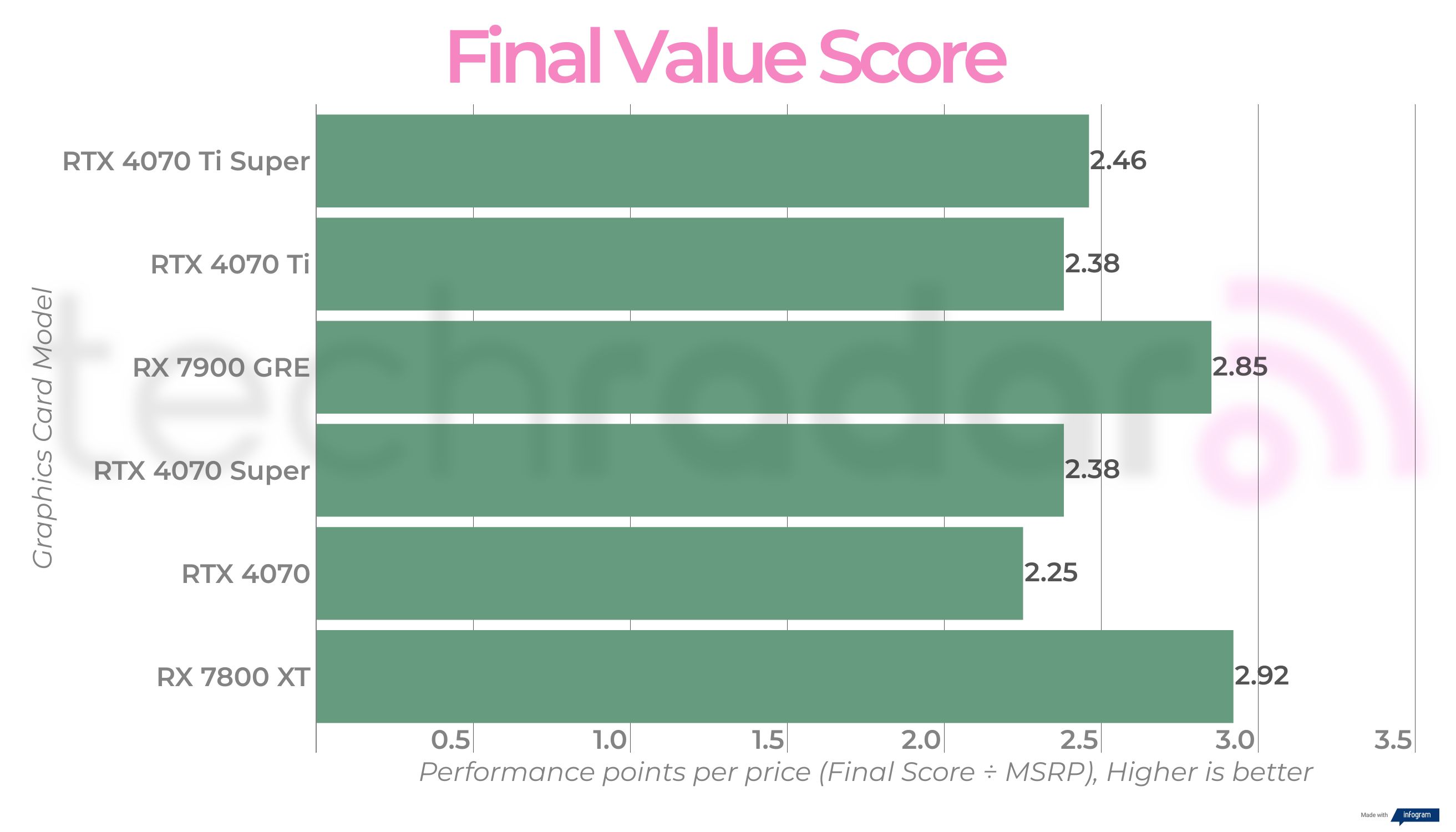

As a frame of reference, the price of entry for the Predator Triton 17 X puts it in the same league as other top-end offerings such as the Origin EON 16SL when fully specced out, or the Alienware M16 and Razer Blade 16 (2023) in higher configurations. You aren't getting the best value for money on the market, nor the strongest price-to-performance ratio, but in terms of sheer raw power, the Triton 17 X has it in spades.

- Price: 3 / 5

Acer Predator Triton 17 X: Design

- Stunning 250Hz mini-LED display

- Packed with ports

- A bit heavy at 3kg / 6.6lbs

Here's what's inside the Acer Predator Triton 17 X supplied to TechRadar.

CPU: Intel Core i9-13900HX

GPU: Nvidia RTX 4090

RAM: 64GB LPDDR5

Storage: 2TB NVMe Gen 4.0

Display: WQXGA (2560 x 1600) 16:10 IPS 250Hz

Ports: 2x USB 3.2, 2x USB-C, 2.5Gb Ethernet, 3.5mm audio jack, microSD card slot

Wireless: Wi-Fi 6E; Bluetooth 5.1

Weight: 3kg / 6.6lbs

Dimensions: 28 x 38.04 x 2.19cm (LxWxH)

The most notable thing about the Acer Predator Triton 17 X at first glance is the display, which is certainly among the leaders as far as gaming laptops go. This portable powerhouse packs in a 16:10 WQXGA (2,560 x 1,600 resolution) screen, meaning more screen real estate is available for gaming than 16:9 can offer. It's bolstered by a 250Hz refresh rate and is Nvidia G-Sync compatible, which helps to prevent screen tearing.
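To put the panel figures in context, here is a minimal sketch of the arithmetic behind them. It compares the pixel count of the 2,560 x 1,600 screen with a 16:9 panel of the same width (2,560 x 1,440, chosen here as an assumed comparison point rather than one taken from the review) and works out how long the GPU has to render each frame at 250Hz. The function names are illustrative only.

```python
def pixels(width: int, height: int) -> int:
    """Total number of pixels on a panel."""
    return width * height


def frame_time_ms(refresh_hz: float) -> float:
    """Time available to render one frame at a given refresh rate."""
    return 1000.0 / refresh_hz


wqxga = pixels(2560, 1600)  # 16:10 panel from the spec list
qhd = pixels(2560, 1440)    # assumed 16:9 comparison panel

# Extra vertical space on the 16:10 panel, as a percentage of the 16:9 one.
extra_percent = (wqxga - qhd) / qhd * 100

print(f"{wqxga:,} vs {qhd:,} pixels (+{extra_percent:.1f}%)")
print(f"Frame budget at 250Hz: {frame_time_ms(250):.1f}ms")
```

In other words, the 16:10 panel shows roughly 11% more pixels than a 16:9 screen of the same width, and a game needs to finish each frame in about 4ms to make full use of the 250Hz refresh rate.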

It's not the first laptop to feature a mini-LED display, but it is an excellent example of the panel tech in action. While not quite as vivid as OLED, it is considerably brighter, and the 1,000 local dimming zones do a solid job of delivering similar black levels. Considering the hardware inside, an RTX 4090 mobile GPU backed up by an Intel 13th-gen Core i9 processor, you'll be able to take advantage of that high refresh rate, too.

Acer's design philosophy for this machine is "excellent in excess" and that's clearly demonstrated with the hardware packed into a portable form factor. Mind you, this rig weighs in at 3kg / 6.6lbs making it one of the heavier models on the market. With a 17-inch screen, it's fairly large as well, and while technically portable, the 17 X is unlikely to be something you'll commonly be slinging into a bag. It's more of an out-and-out desktop replacement.

While you're likely to plug in one of the best gaming keyboards and best gaming mice, the Acer Predator Triton 17 X features a solid keyboard and trackpad for casual web browsing and typing. It offers pleasant multi-zone RGB lighting which looks the part when playing in darker environments. The trackpad isn't as nice as some of the glass ones you'll find on a similarly priced Razer Blade, but it gets the job done. Again, a dedicated mouse will do the trick better.

No expense was spared on the connectivity front here, either. There are two USB-C ports, two USB 3.2 ports, an HDMI 2.1 port, 2.5Gb Ethernet, a microSD card reader, and a 3.5mm audio jack. You'll have no shortage of options for either work or play, and it's good that the manufacturer chose function over form in this respect, as some thinner laptops sacrifice port selection to achieve their svelte nature.

- Design: 4 / 5

Acer Predator Triton 17 X: Performance

- Unparalleled 1080p and 1440p gaming performance

- Silky smooth refresh rate

- Gets very hot and loud

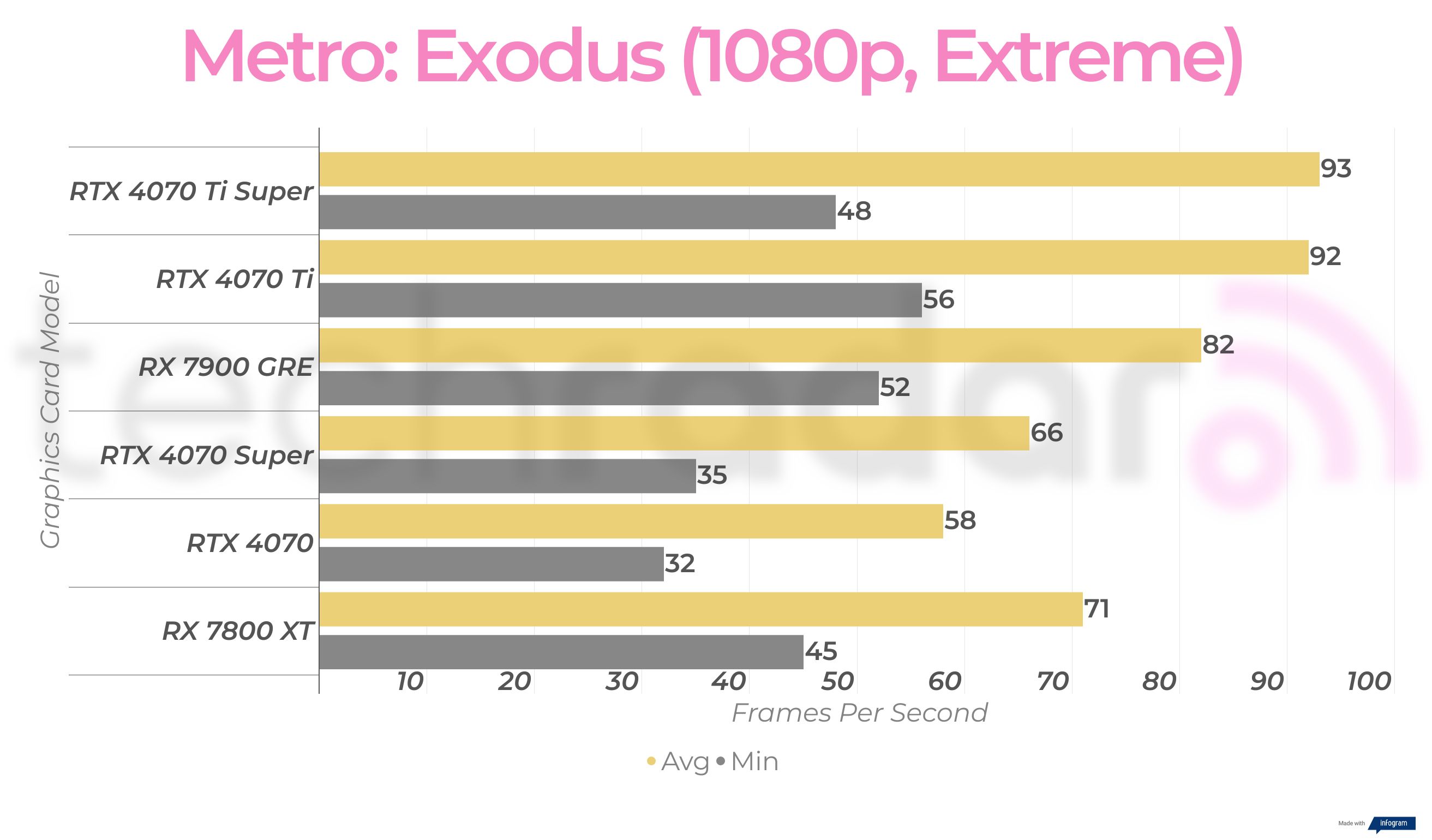

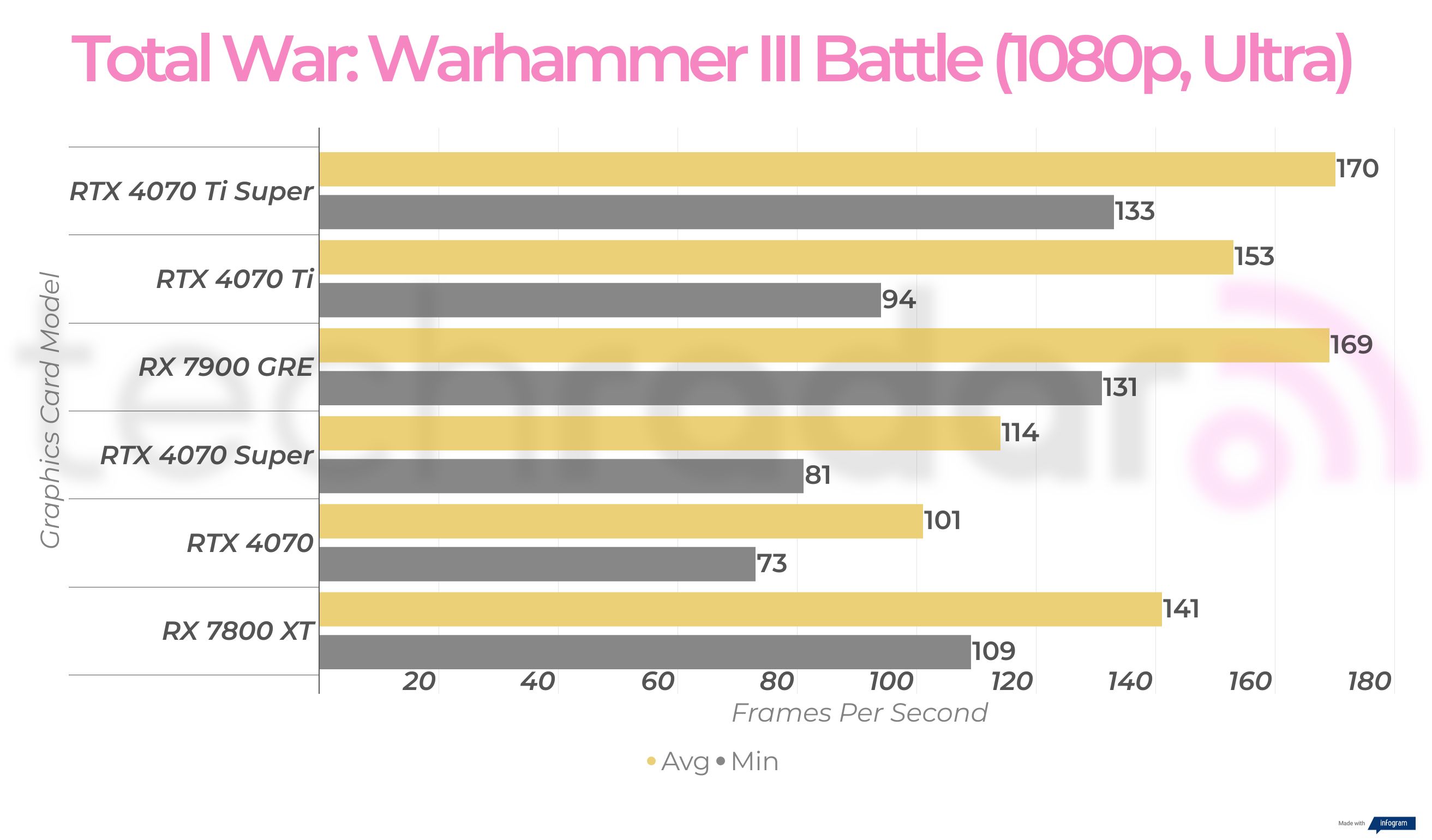

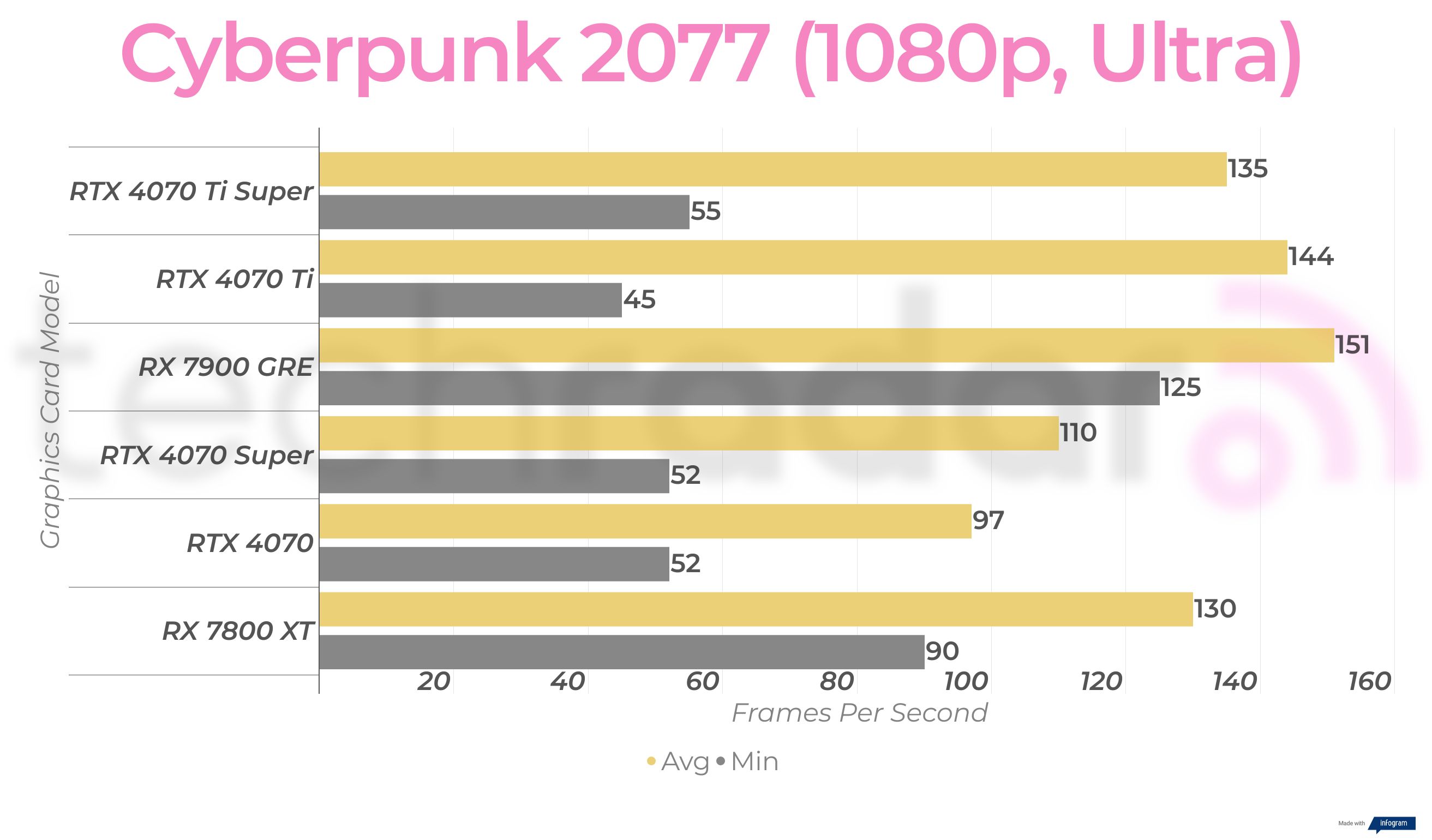

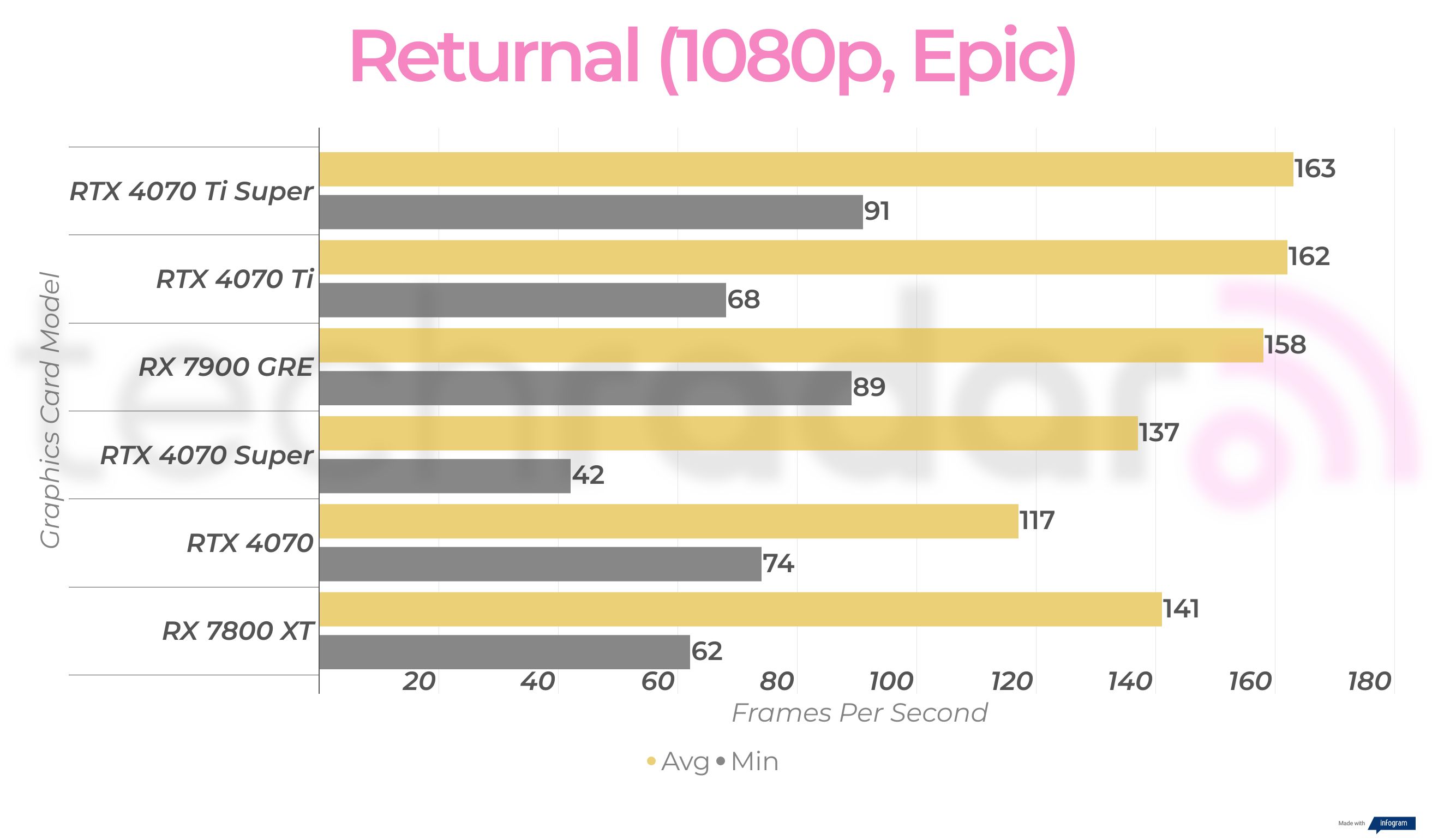

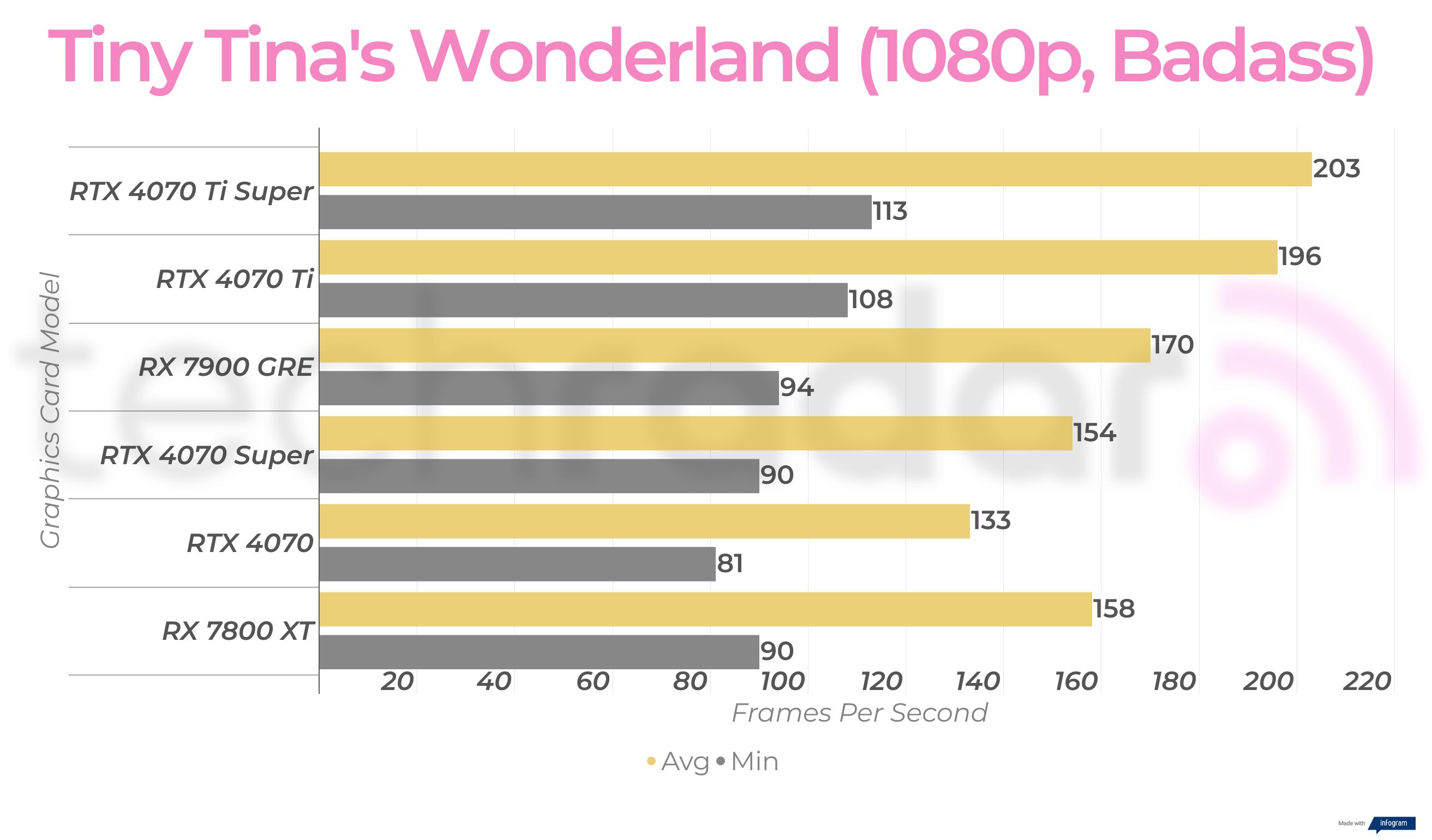

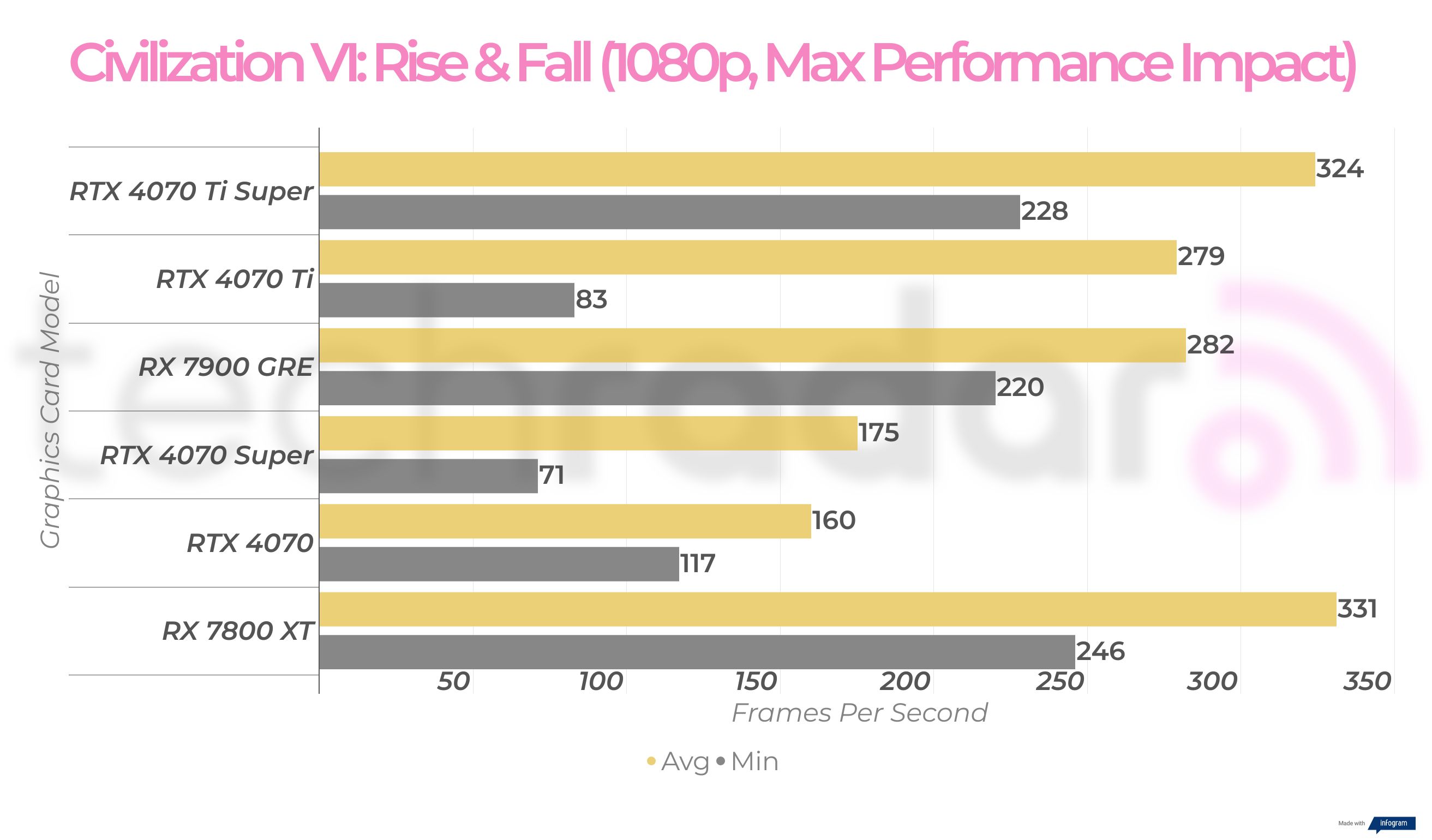

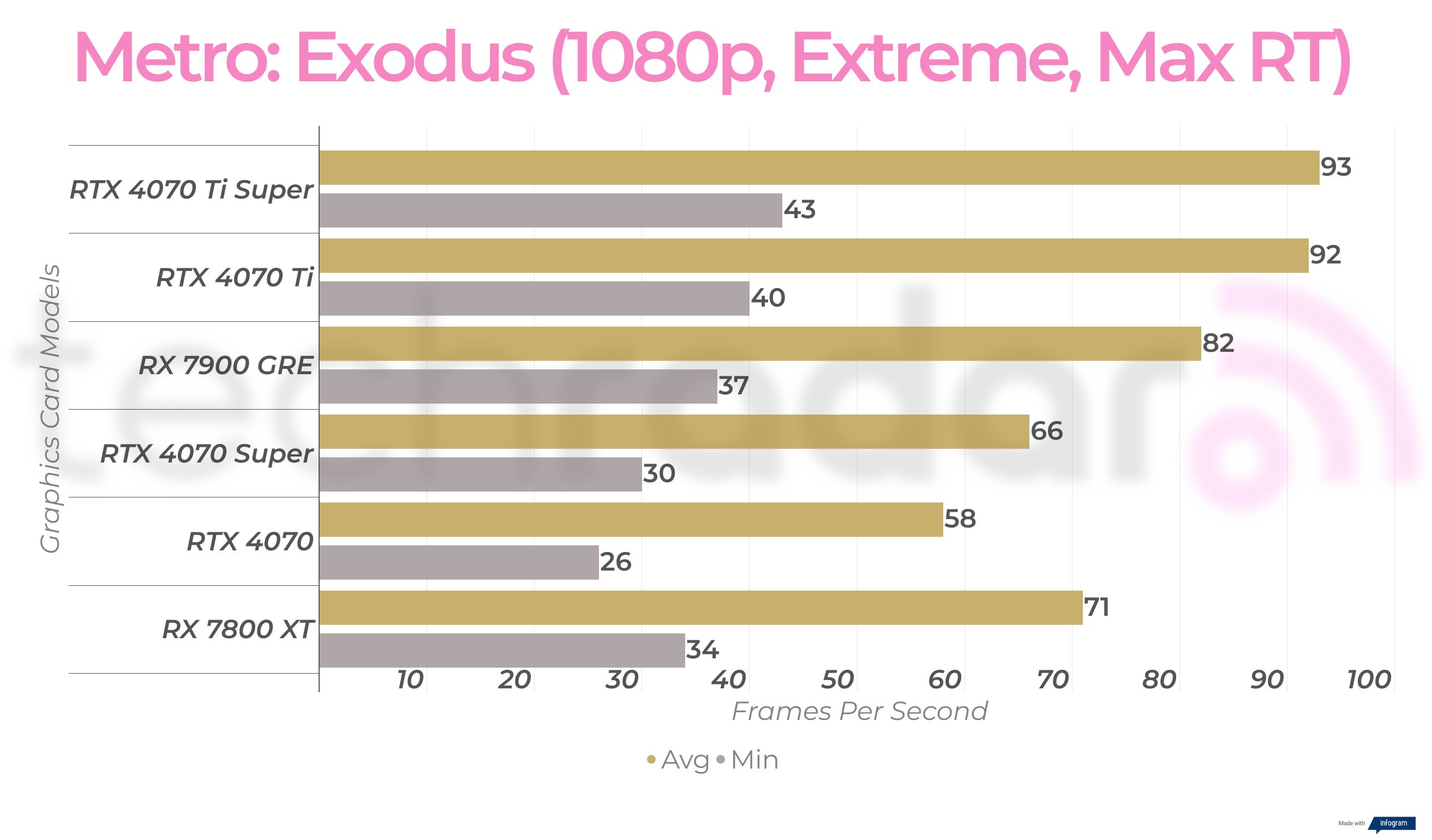

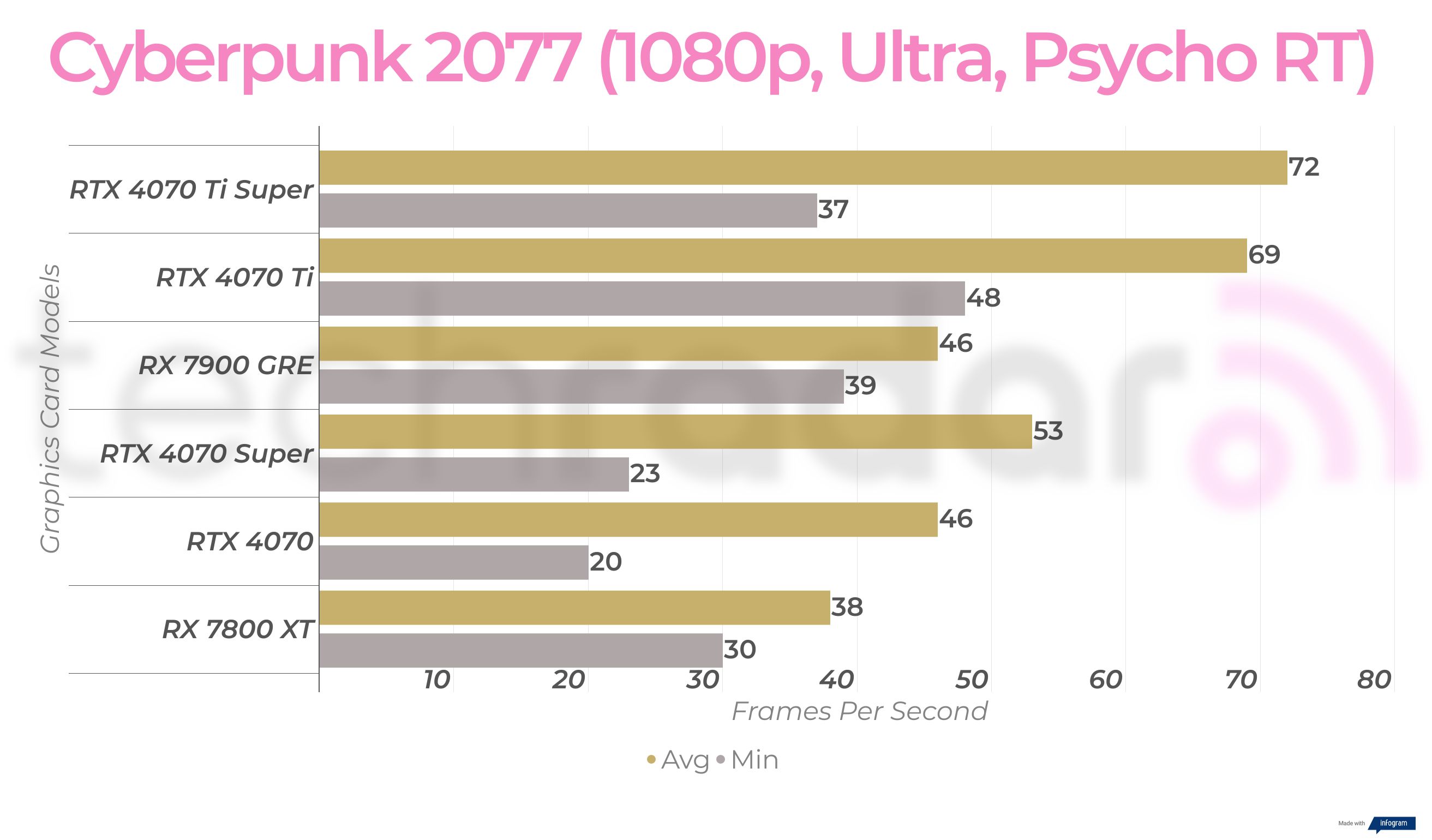

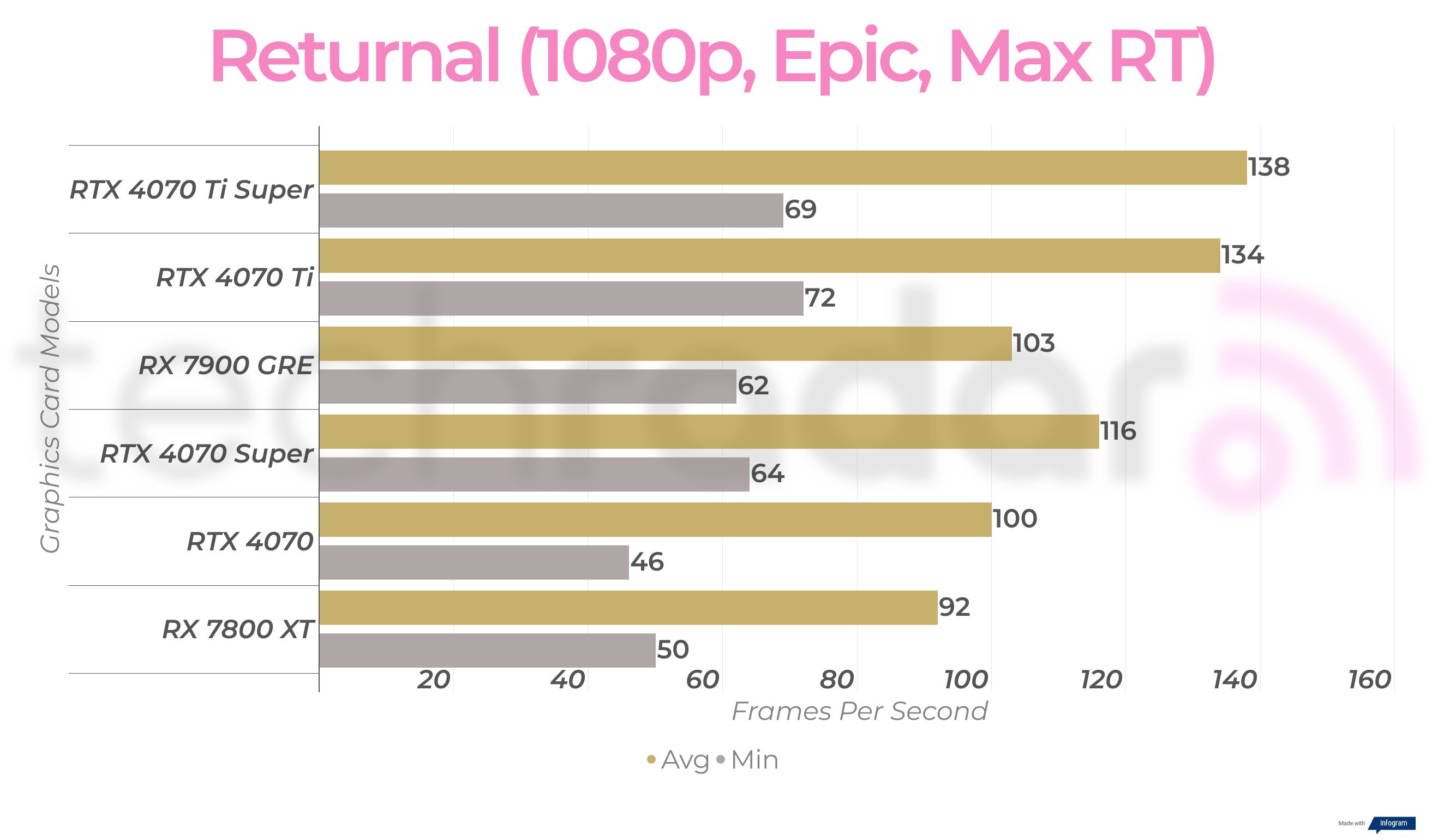

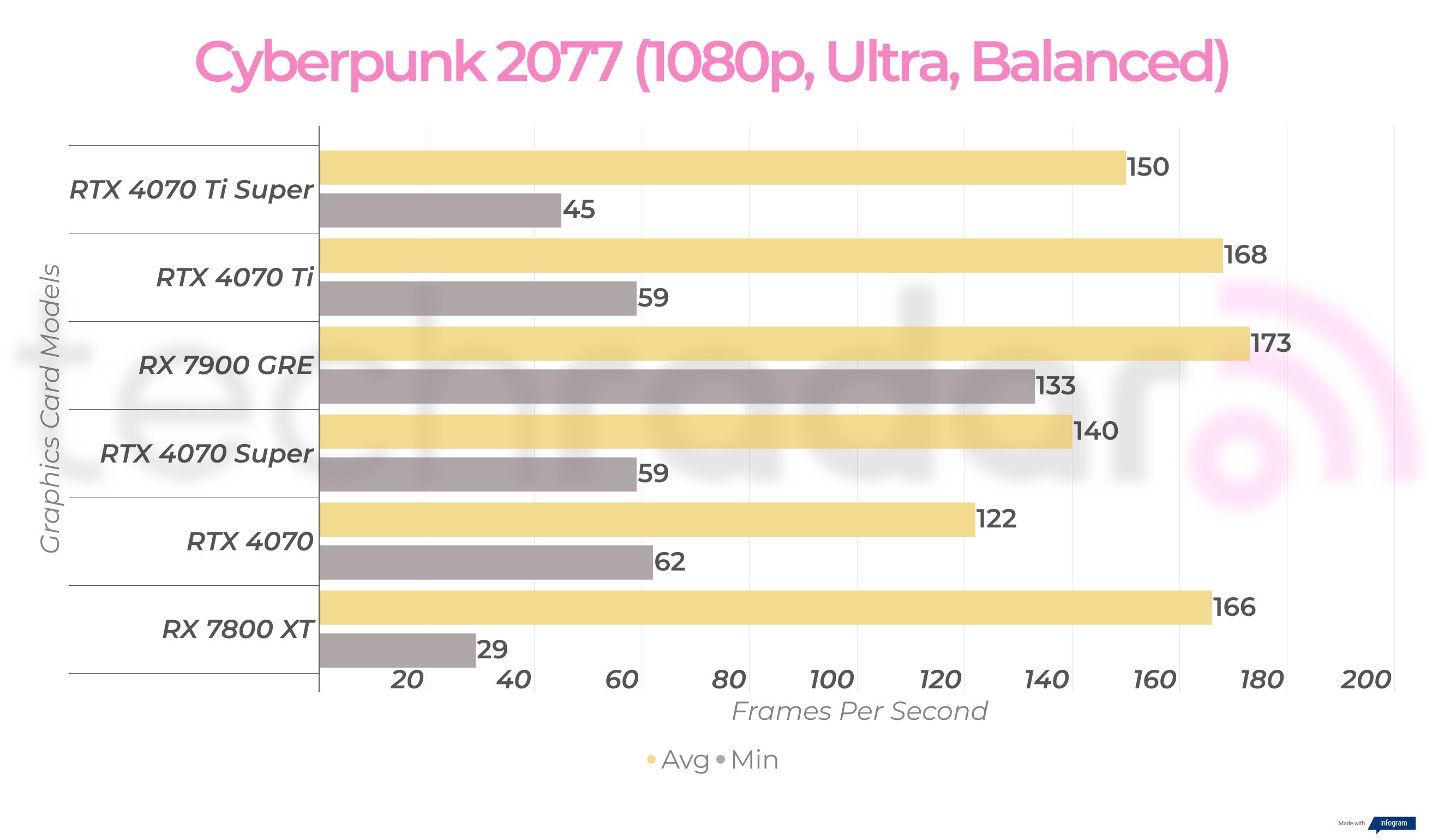

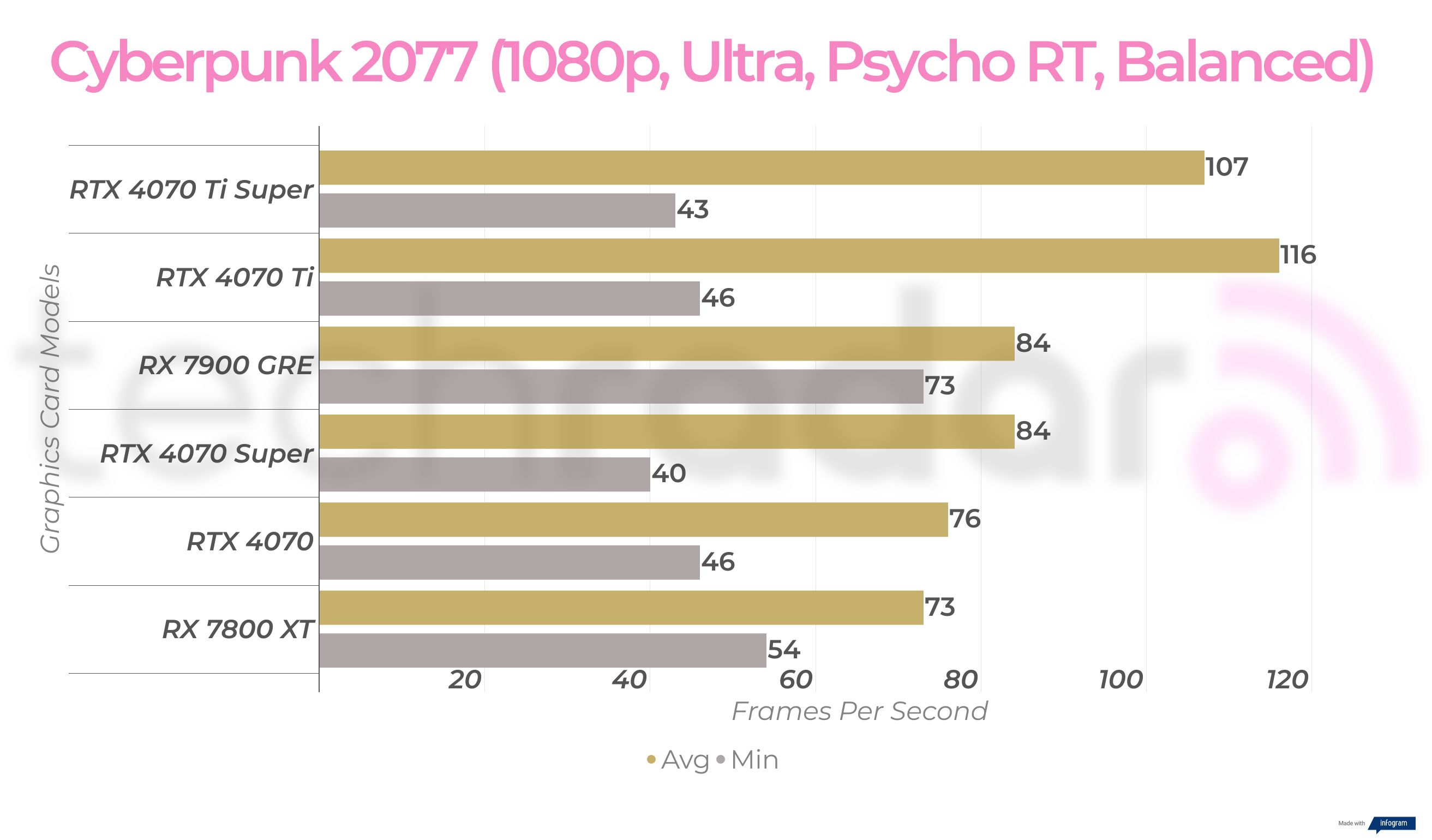

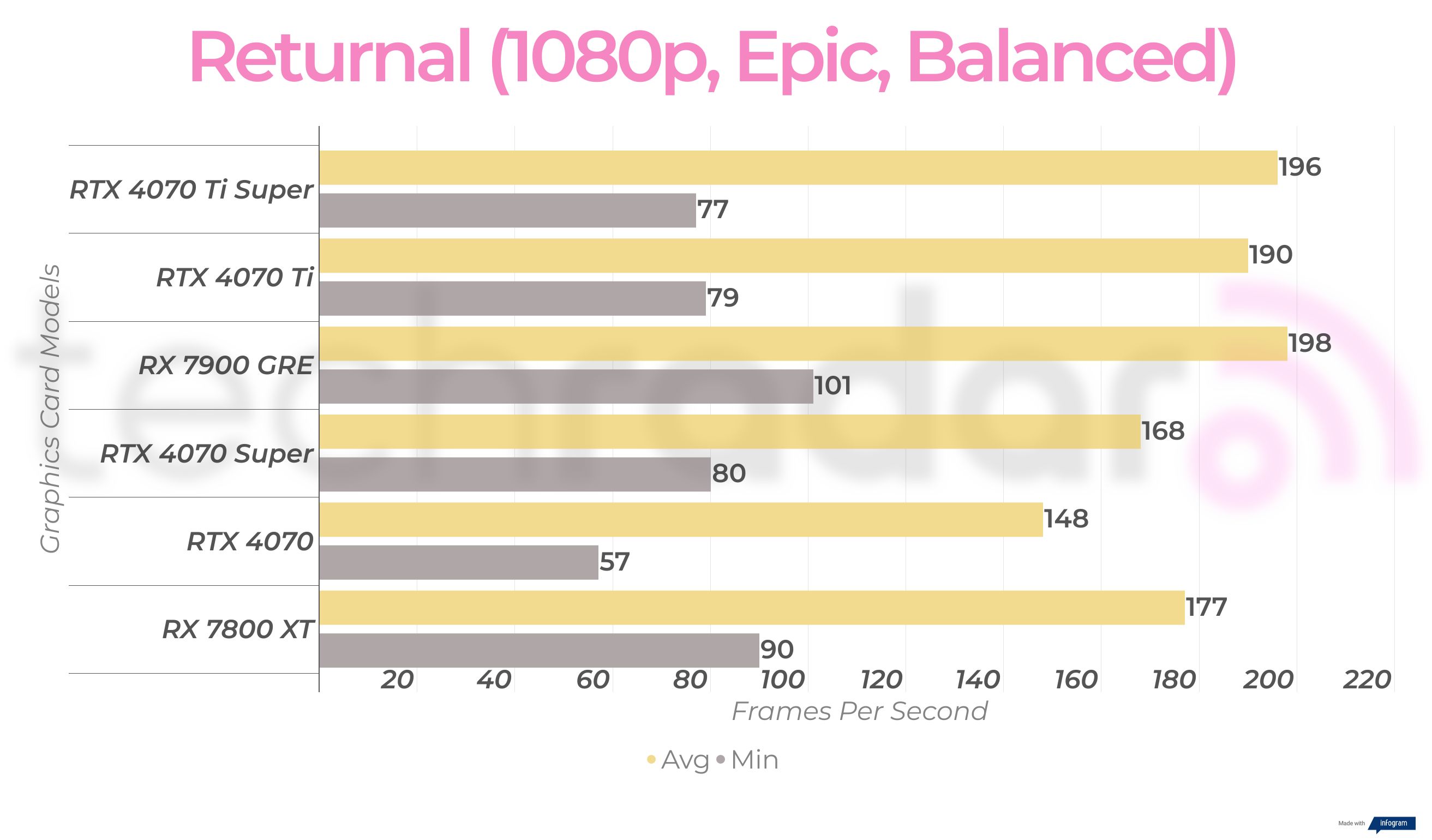

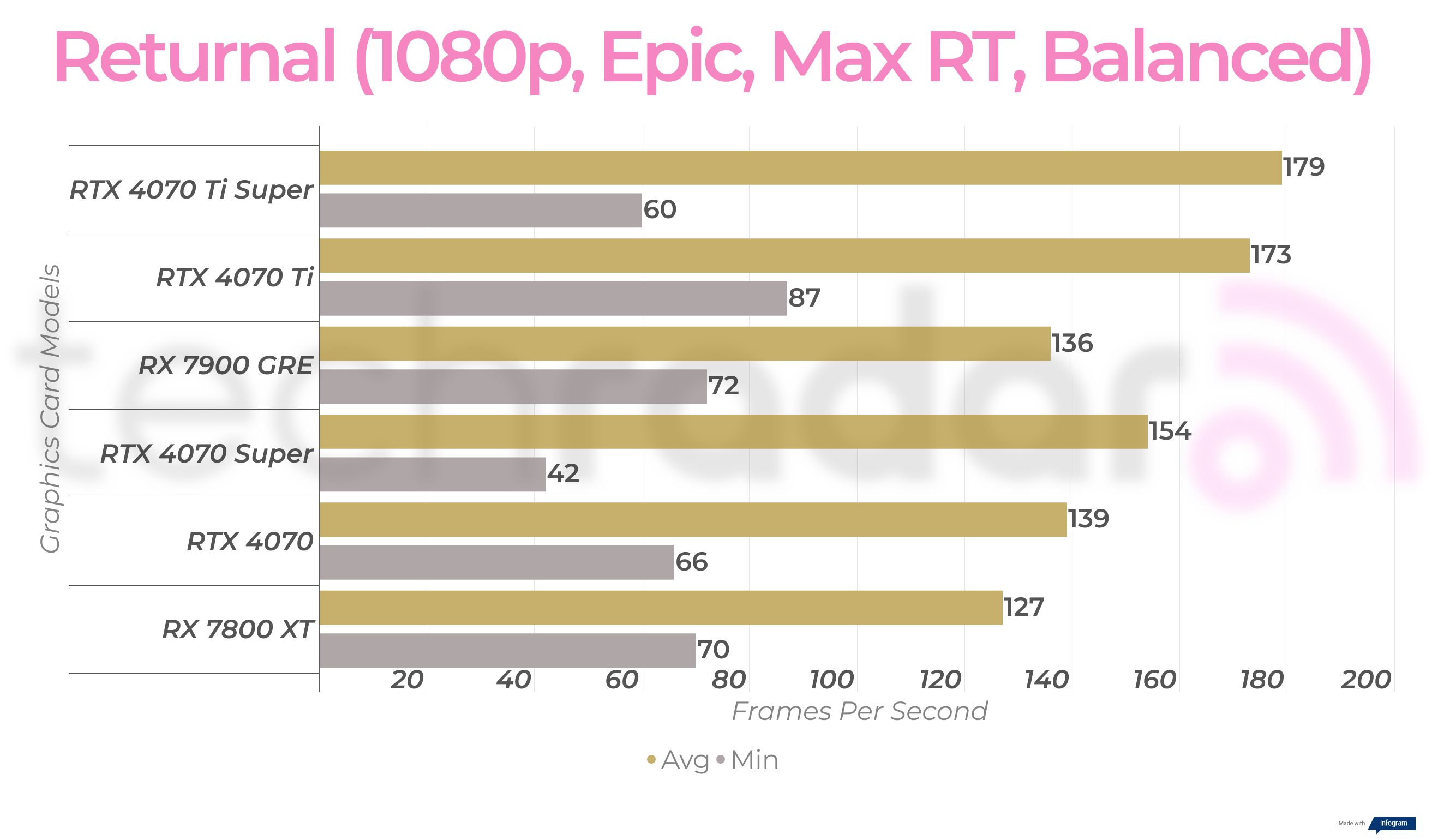

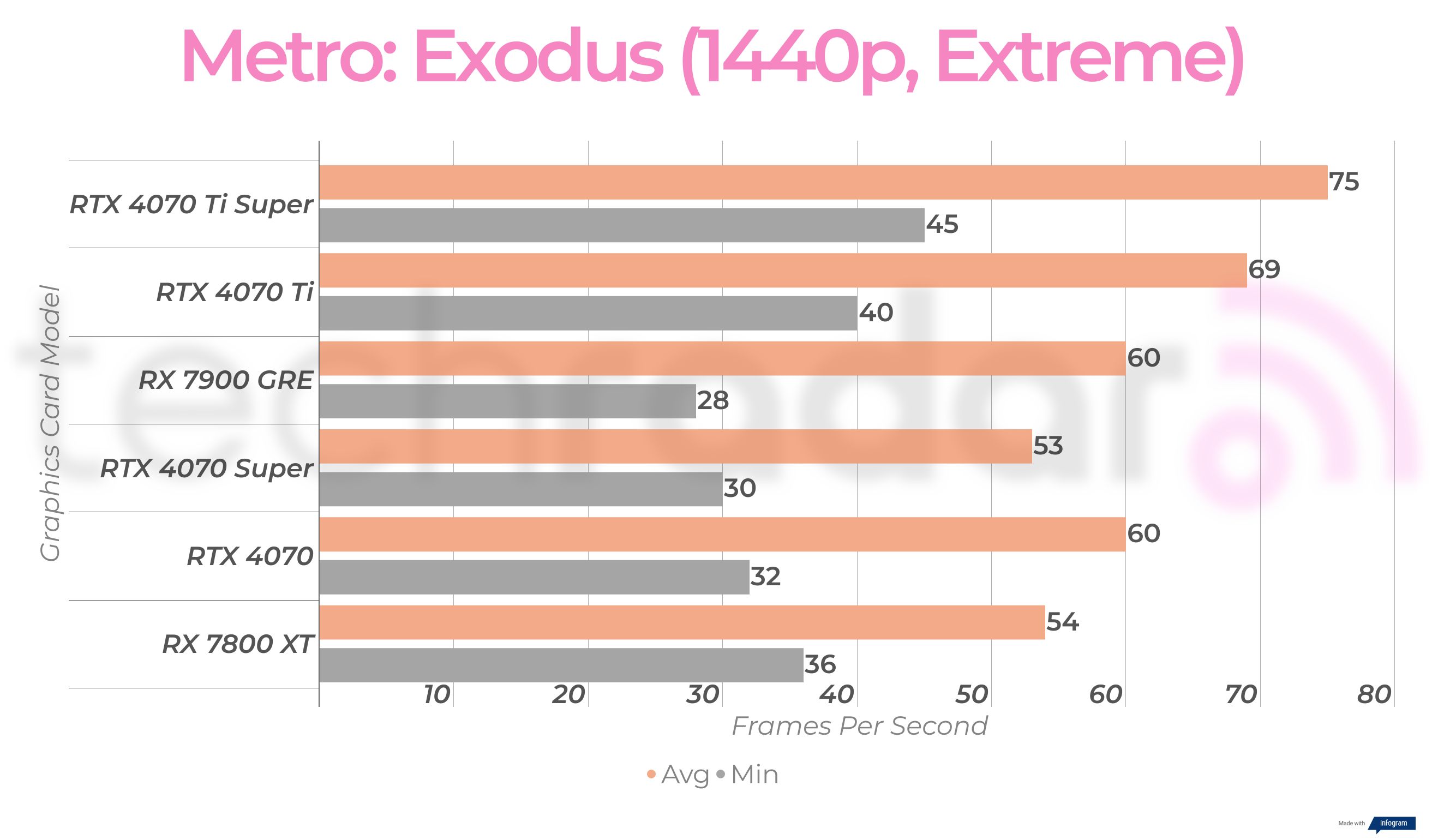

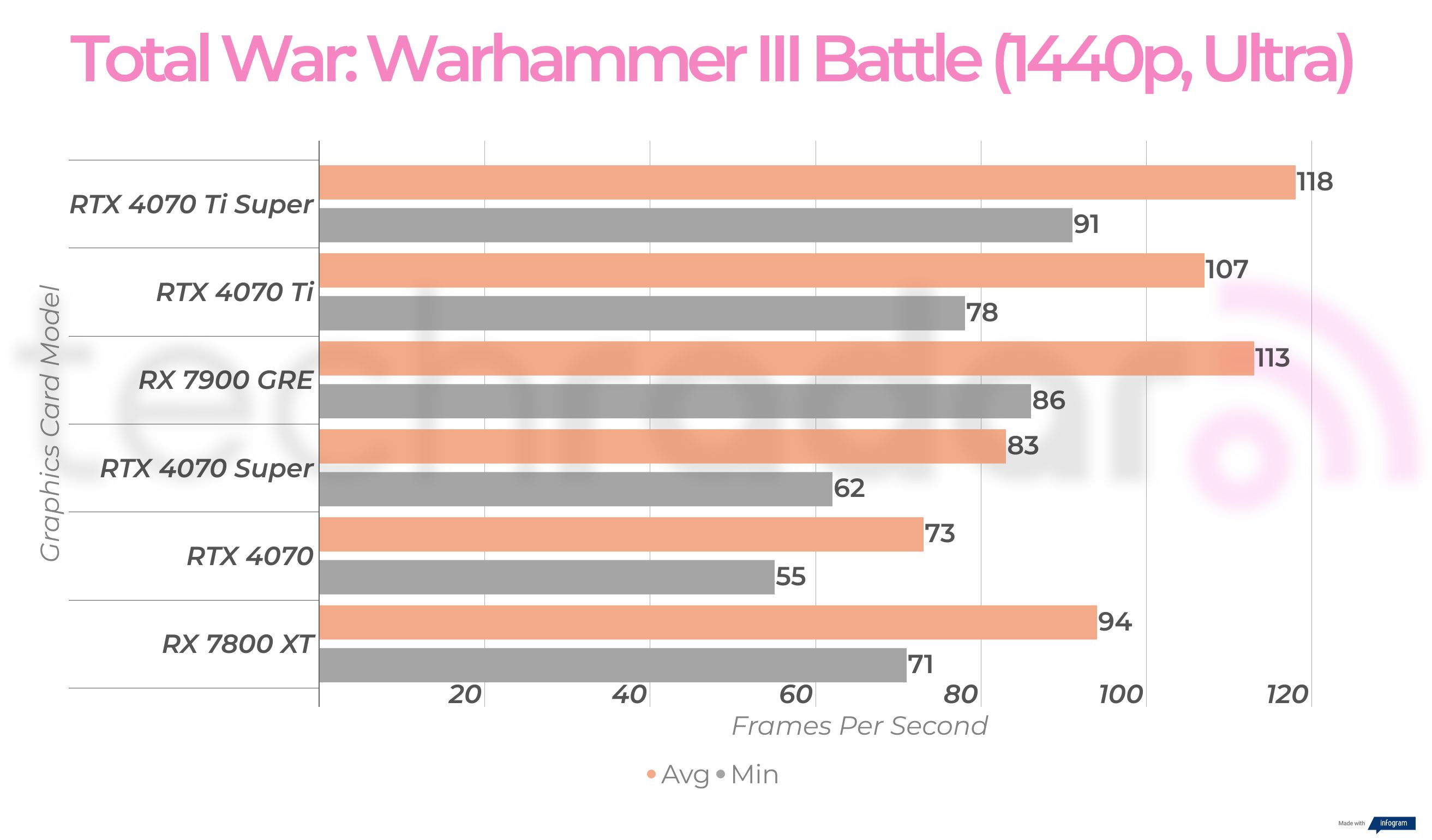

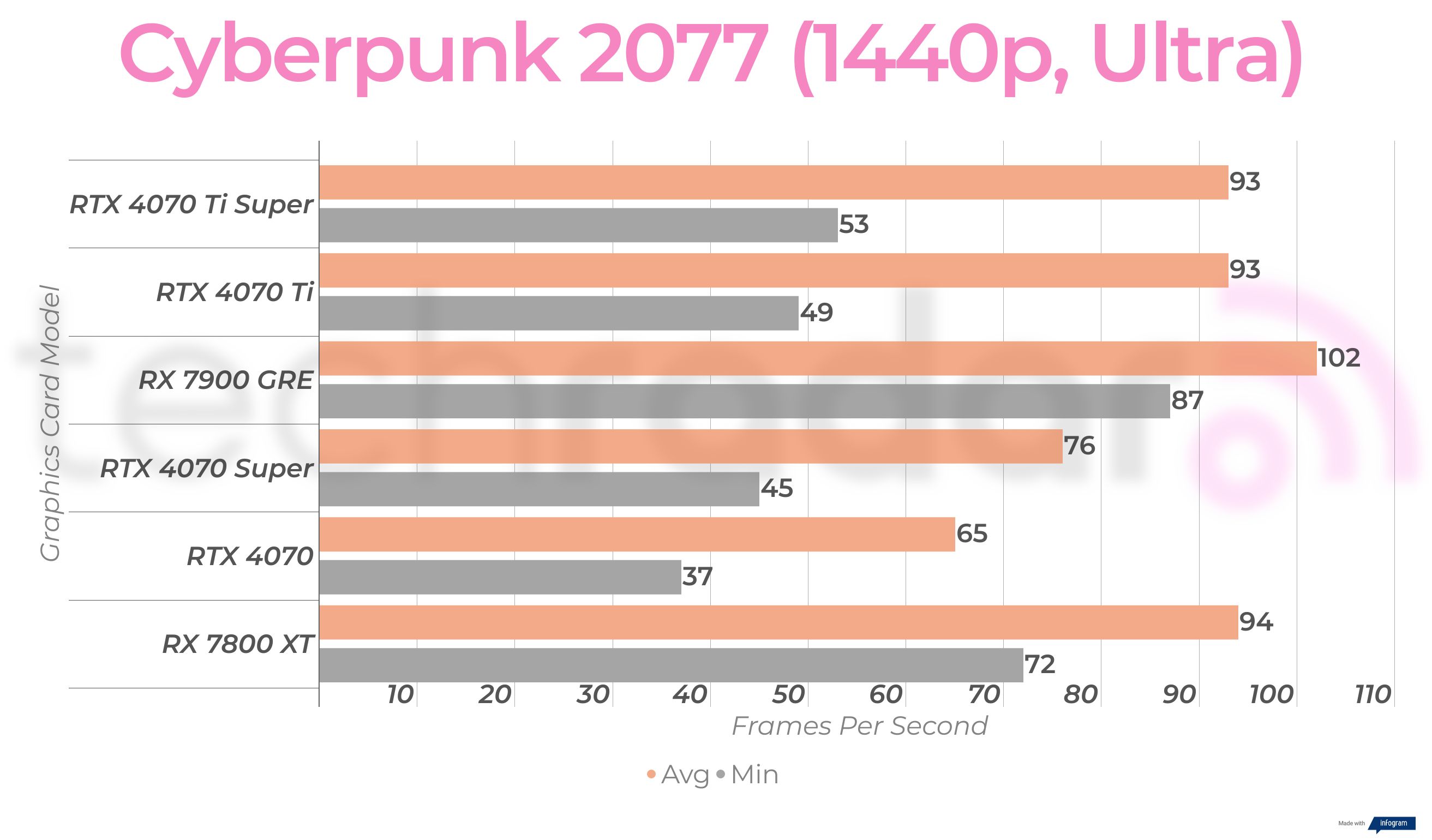

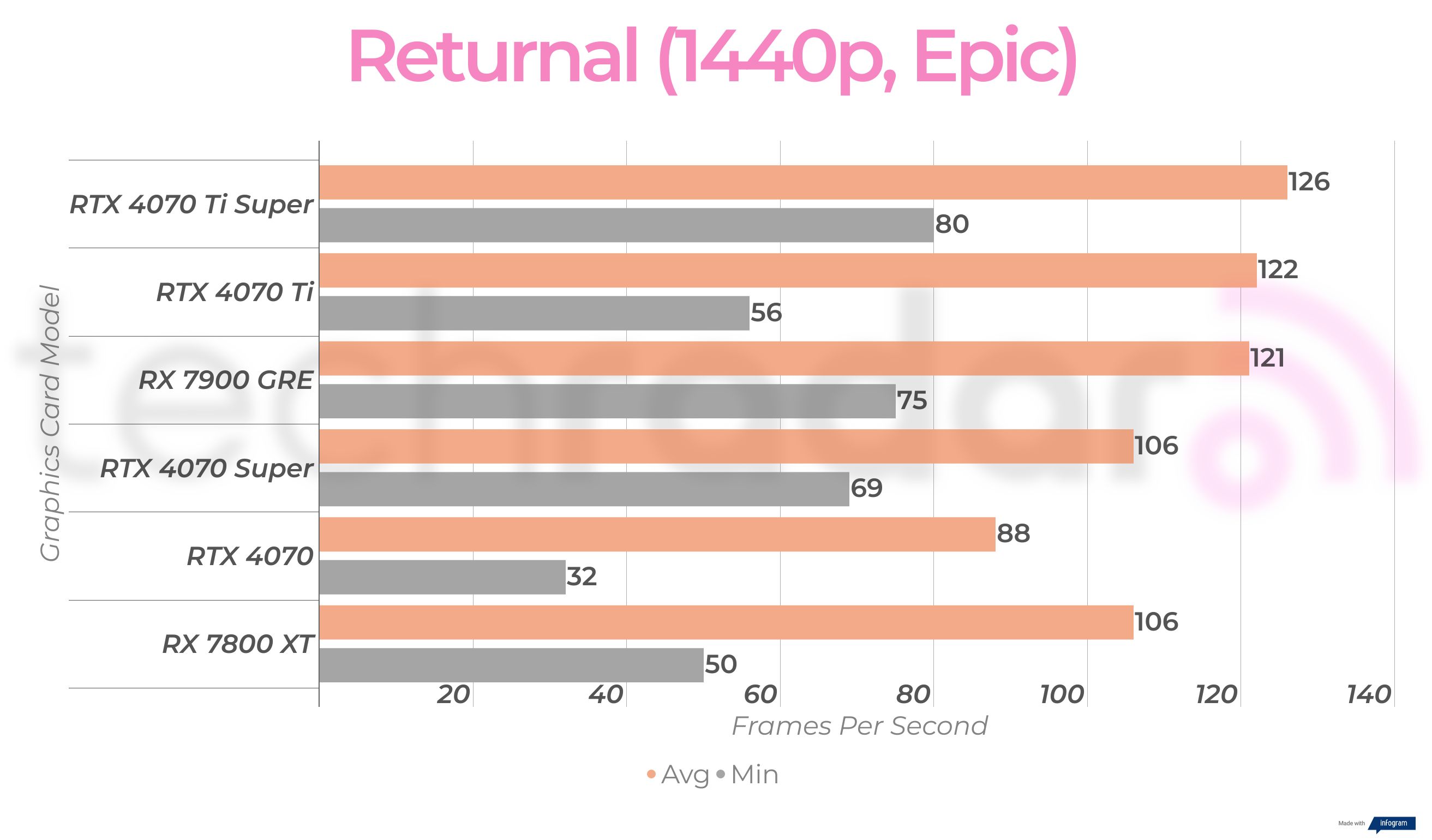

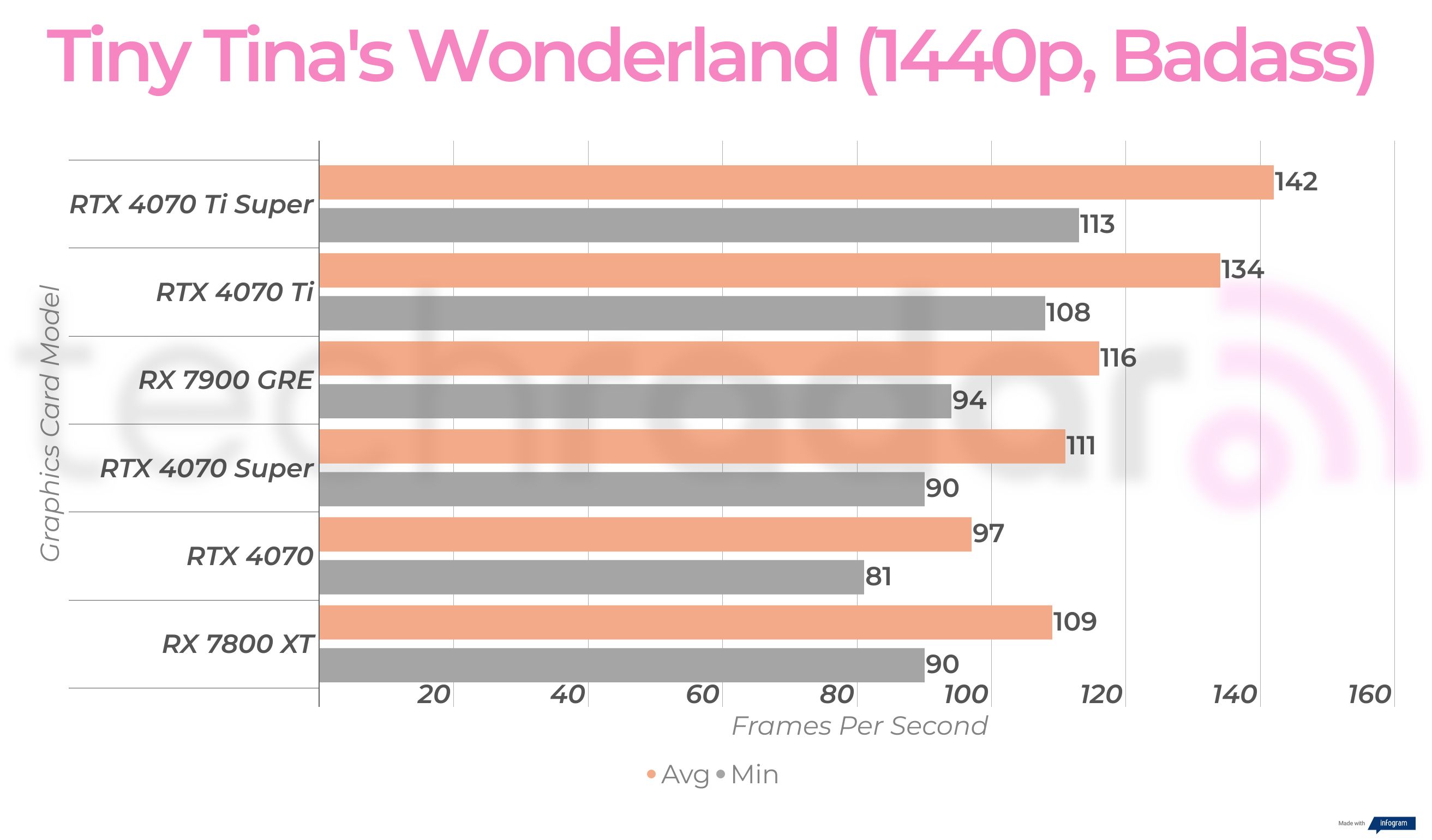

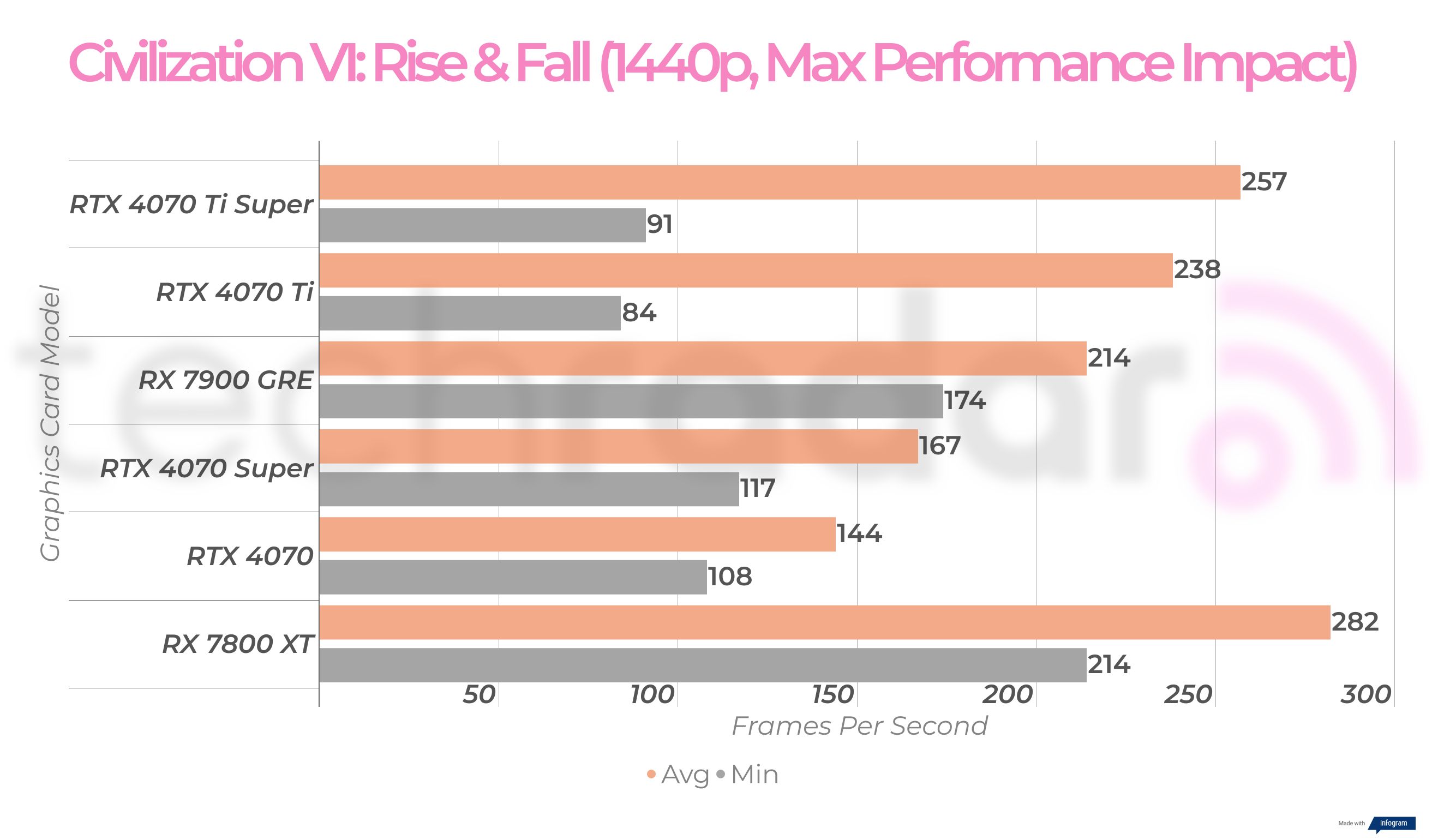

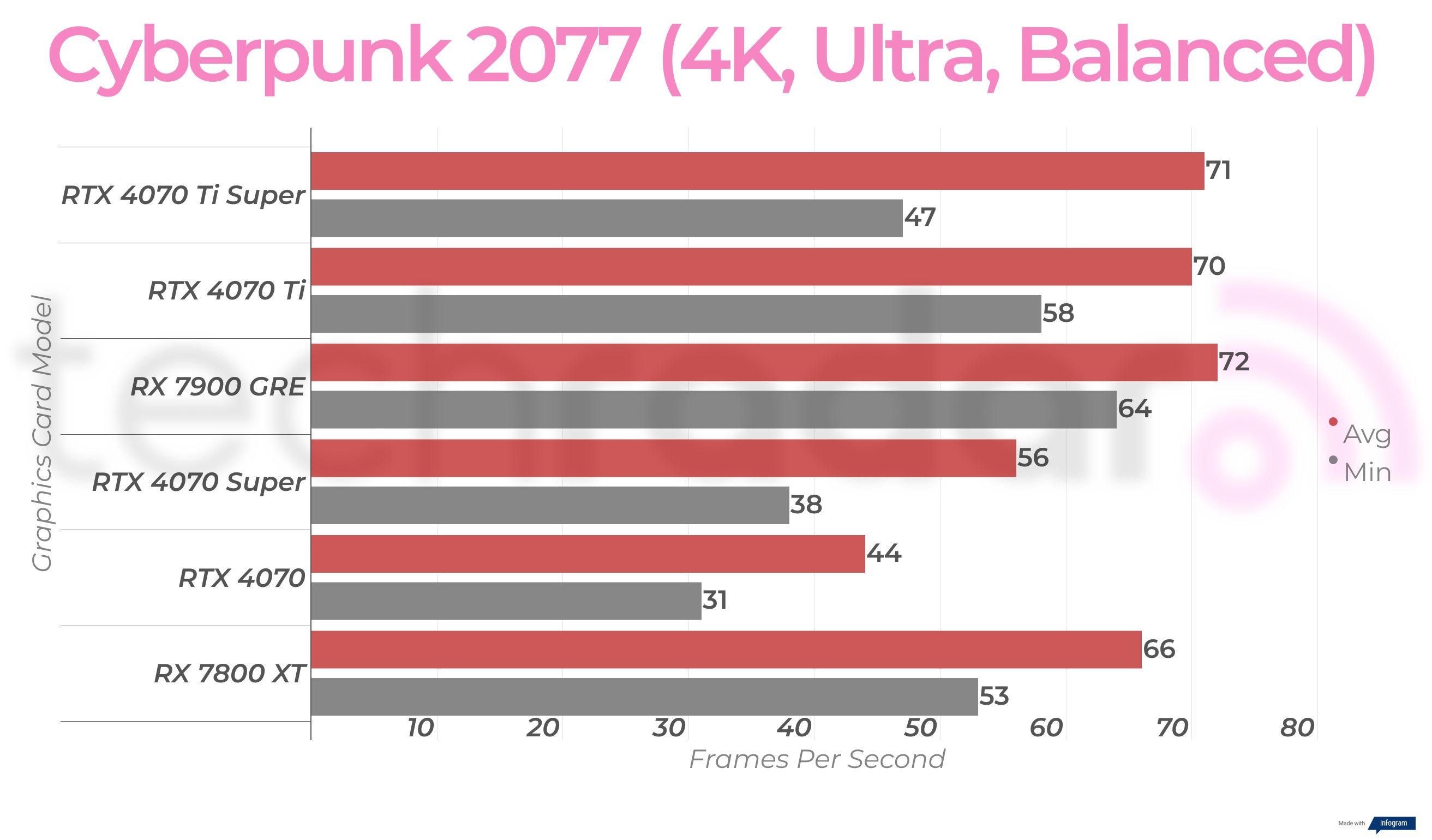

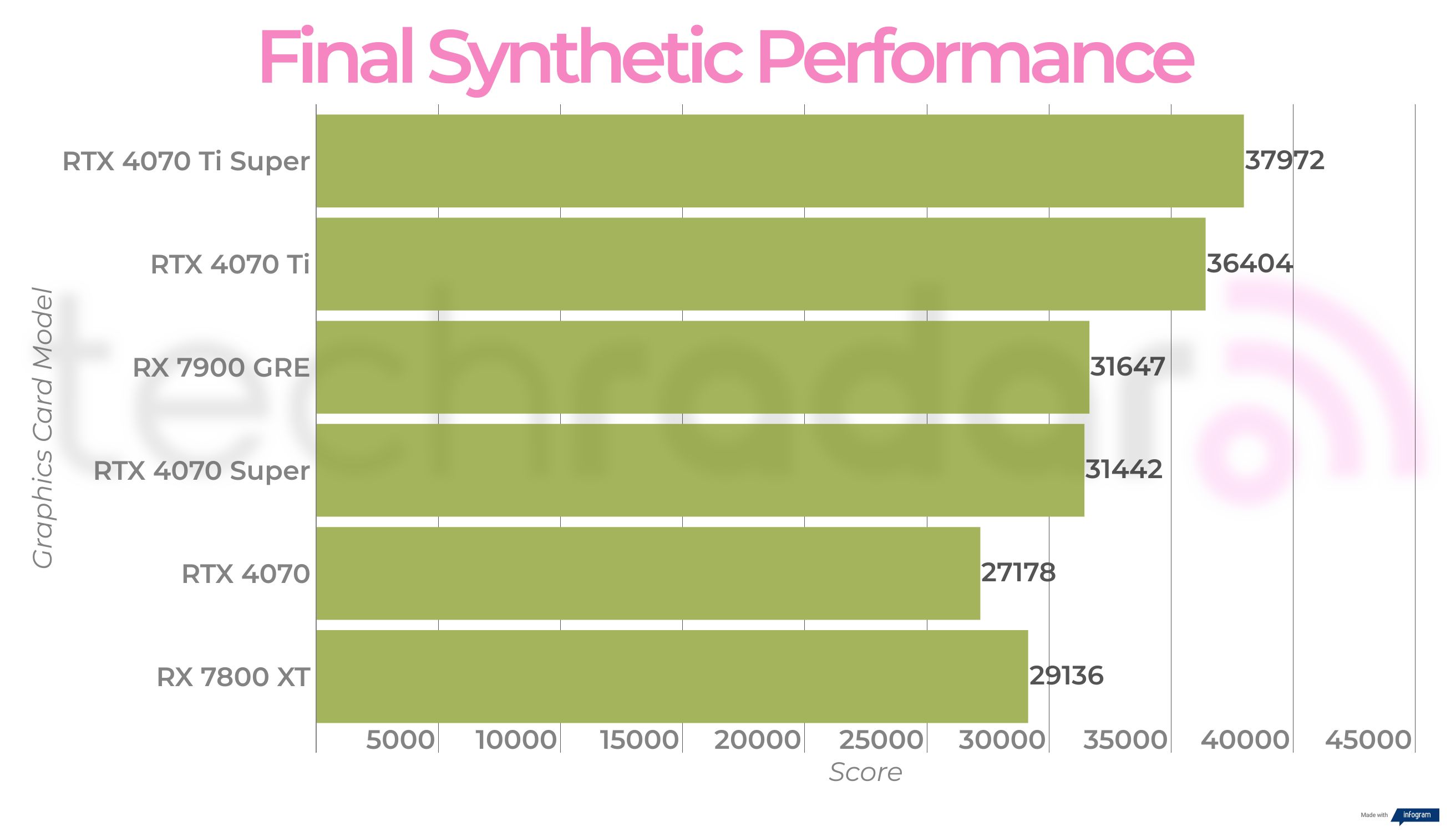

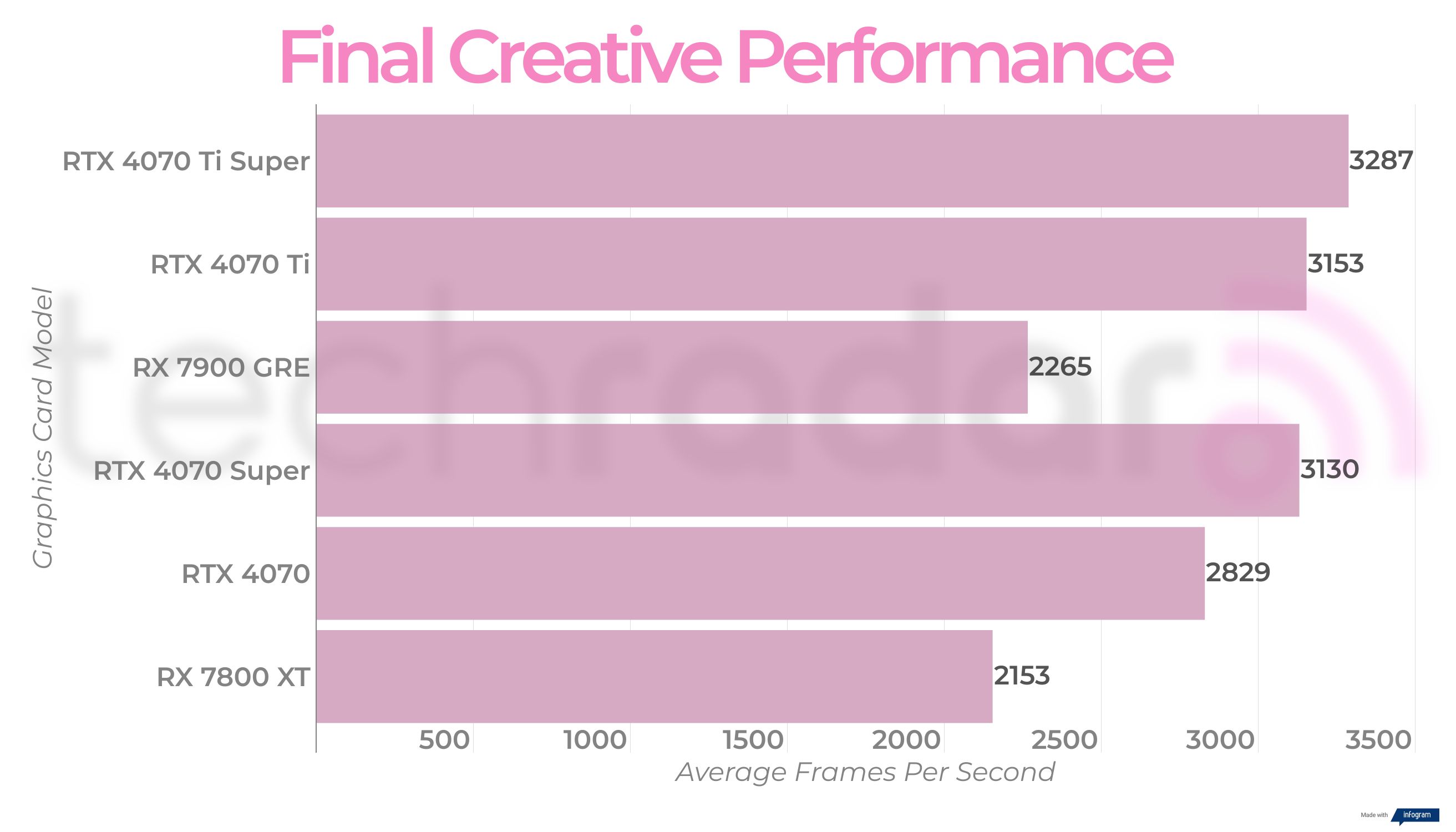

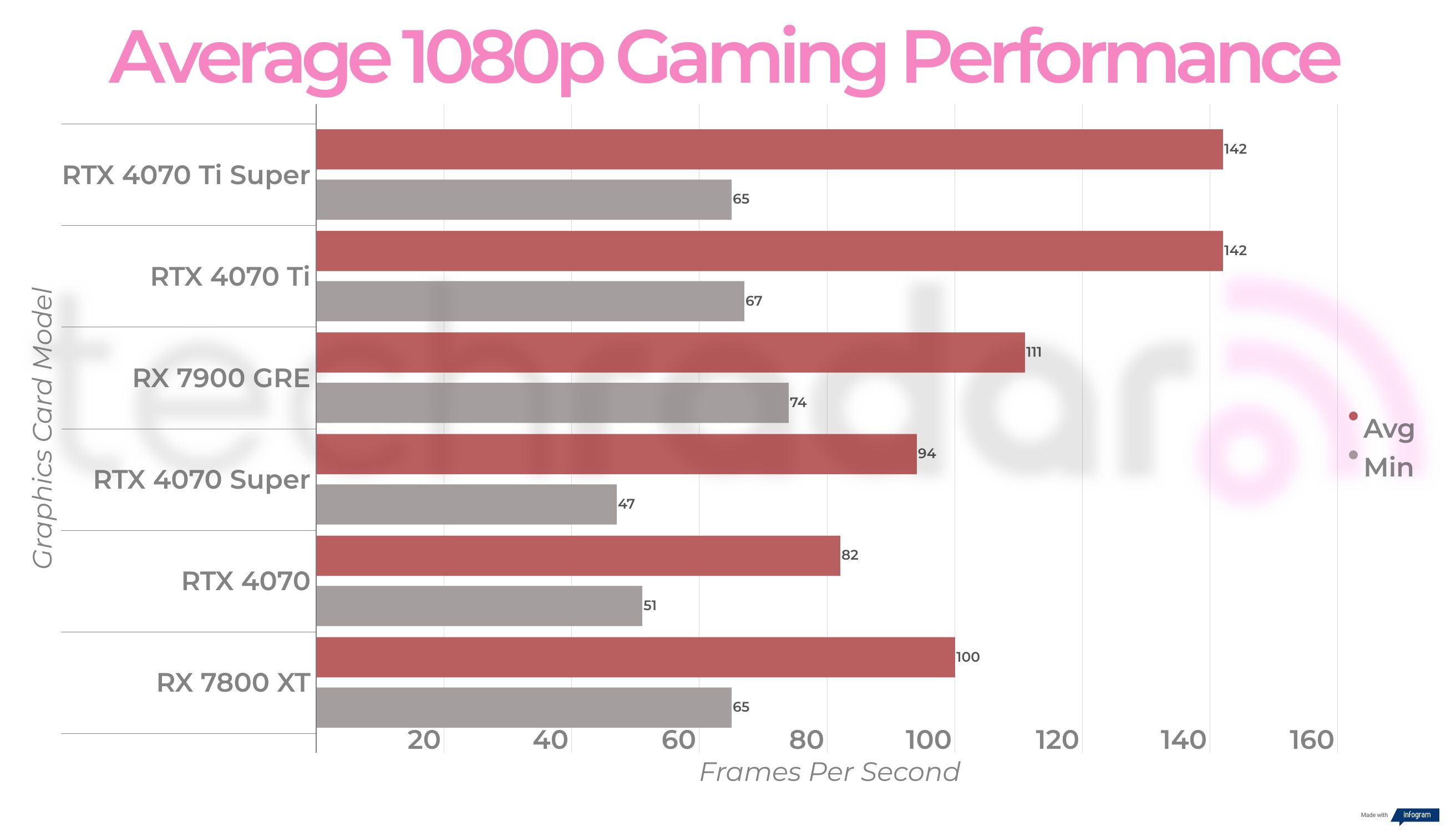

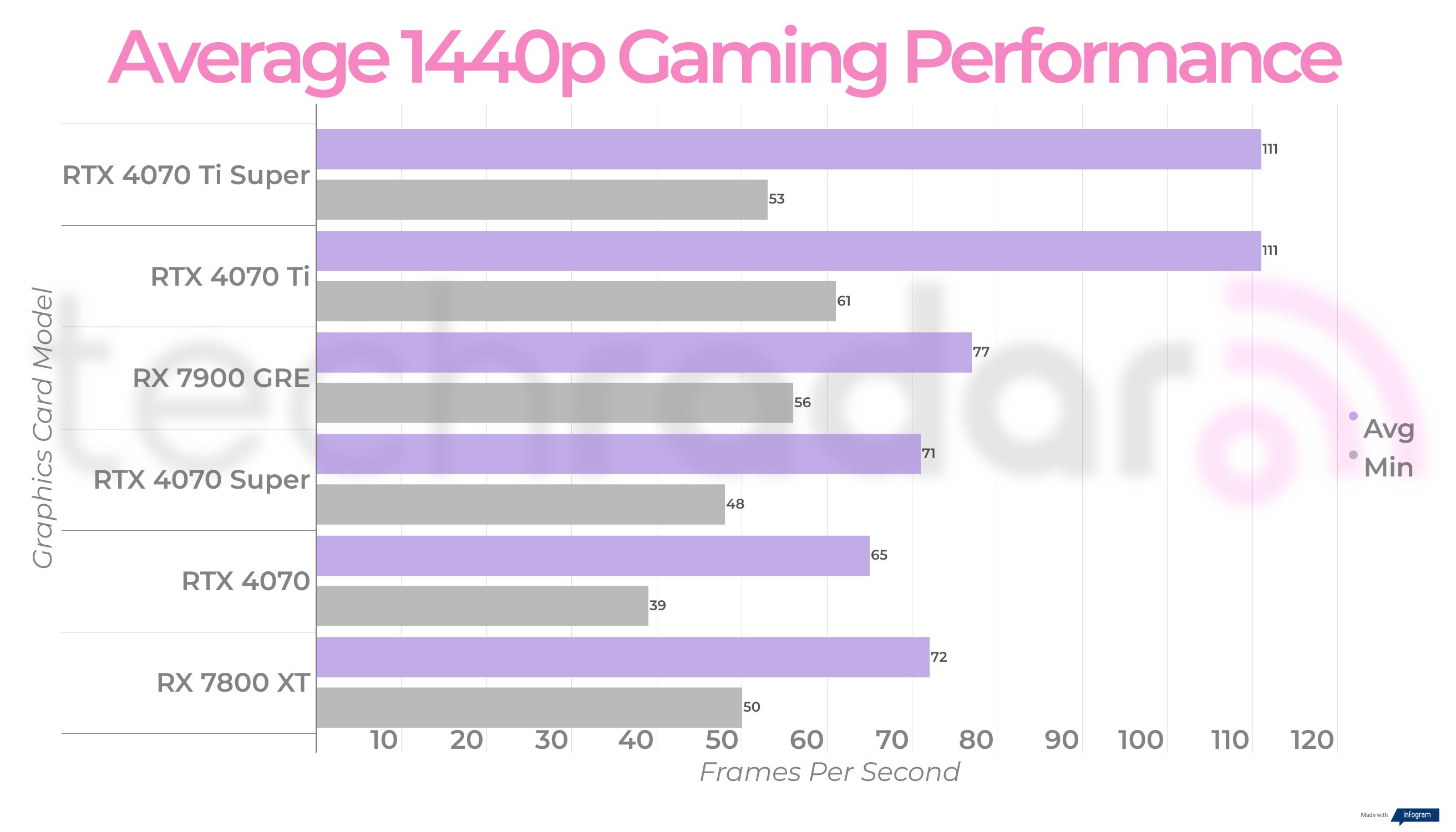

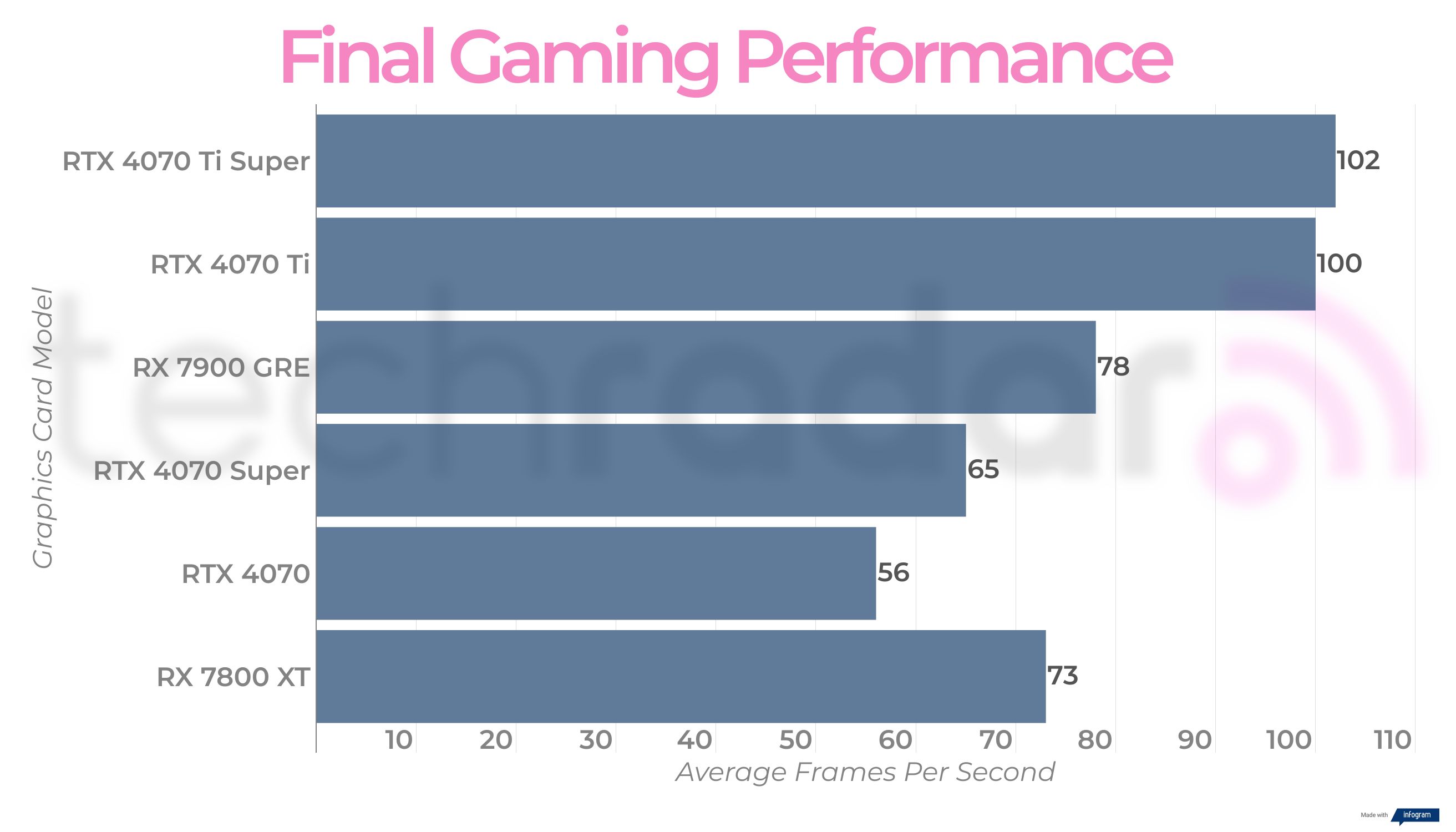

You won't be surprised to learn that a gaming laptop powered by the Intel Core i9-13900HX and Nvidia RTX 4090 with 64GB of LPDDR5 RAM absolutely mowed through our suite of benchmarks and games. The laptop's display tops out at 250Hz, and you'll have all the horsepower necessary to achieve those kinds of frame rates in 1080p, and drive very smooth gameplay at 1440p as well.

Here's how the Acer Predator Triton 17 X got on in our game testing.

Total War: Three Kingdoms (1080p) - 364fps (Low); 140fps (Ultra)

Total War: Three Kingdoms (1440p) - 290fps (Low); 92fps (Ultra)

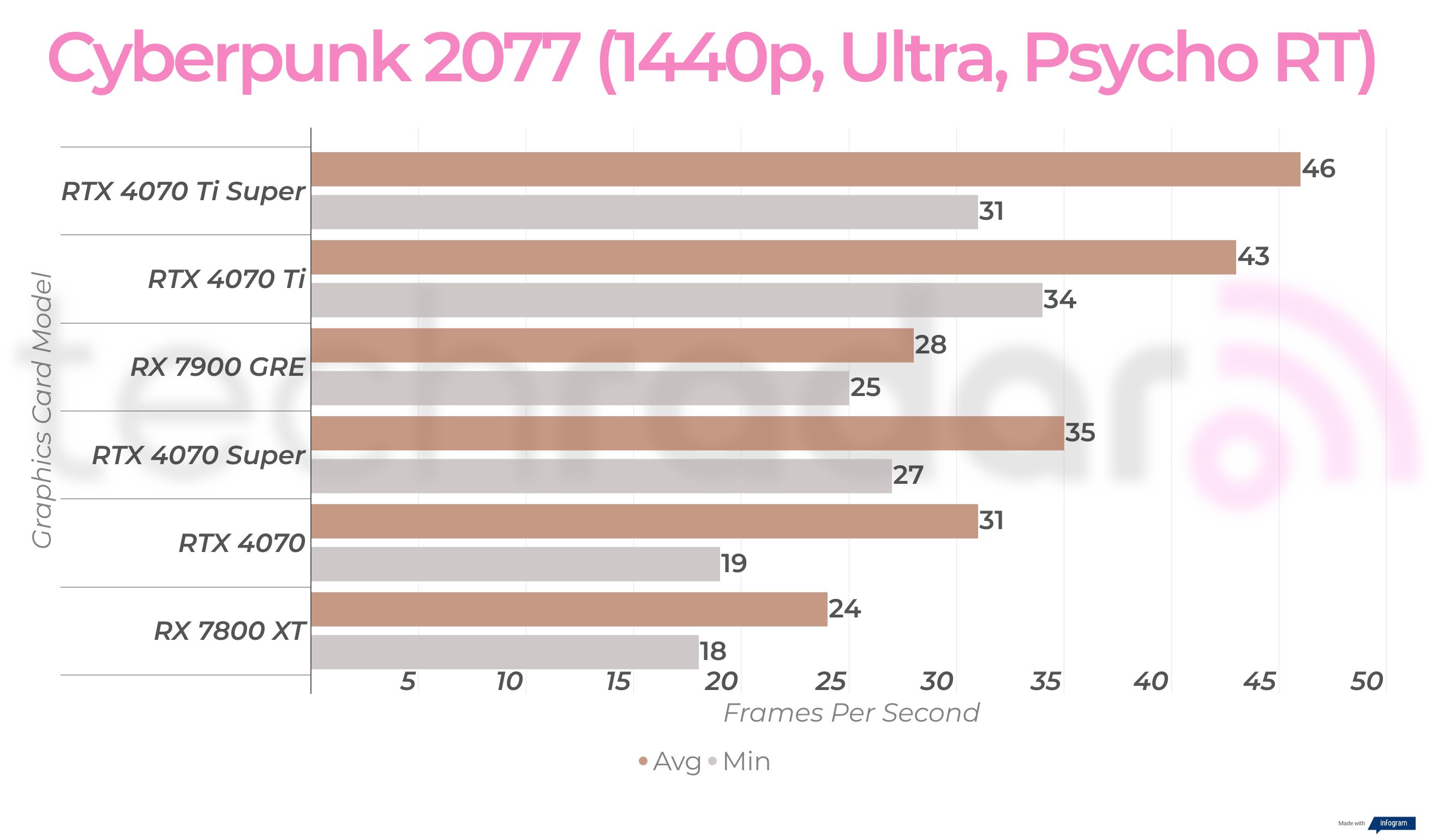

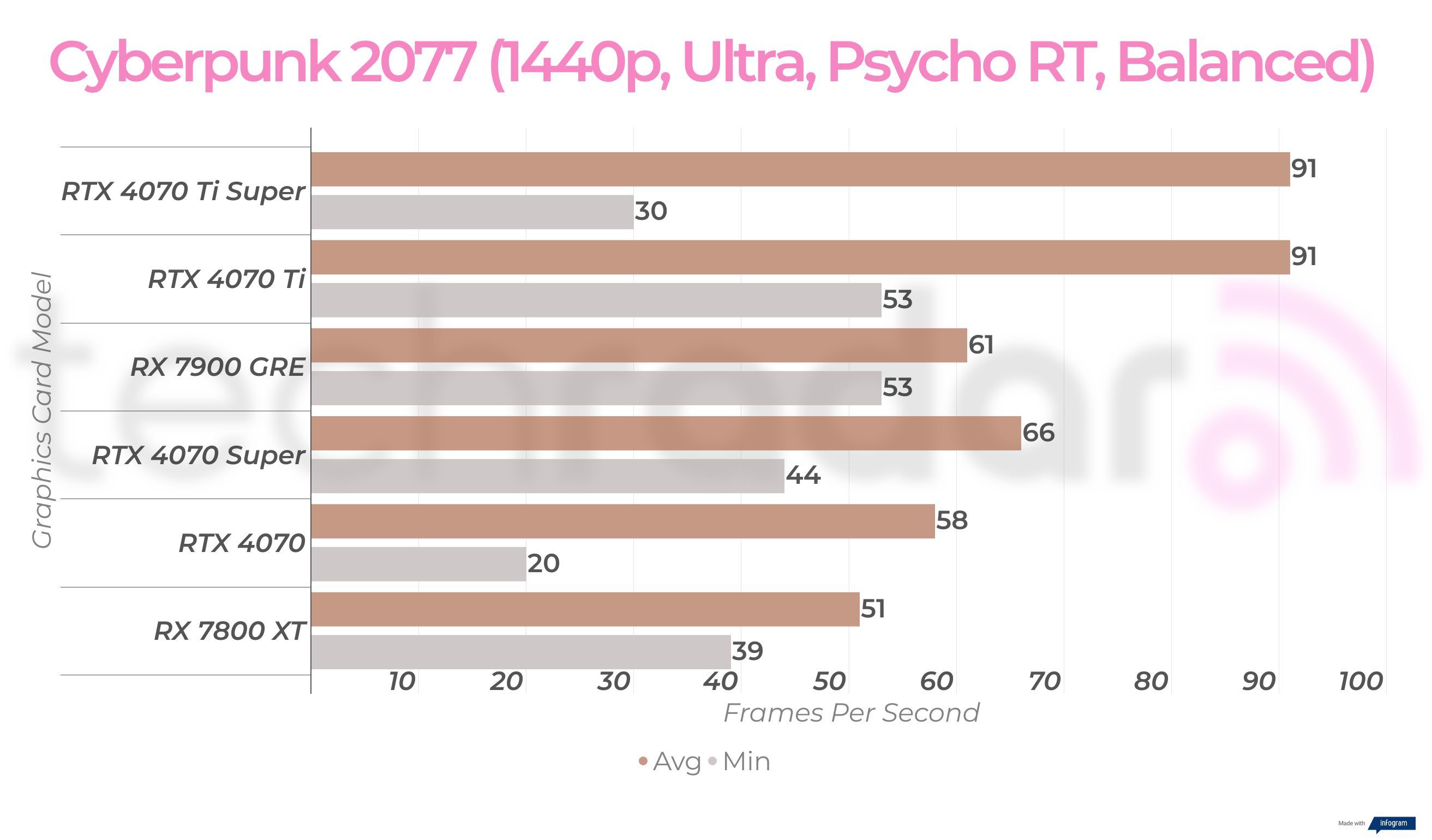

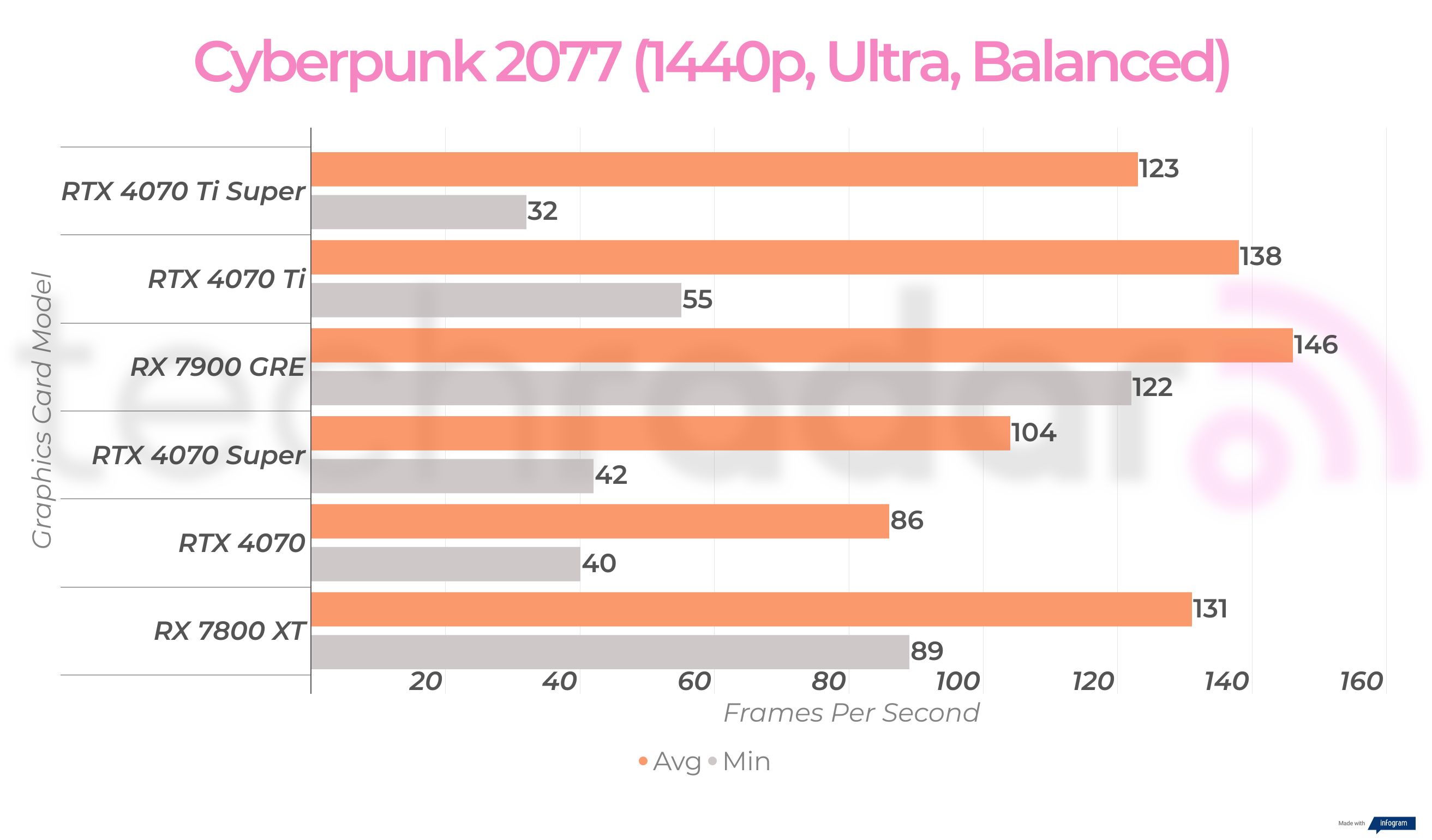

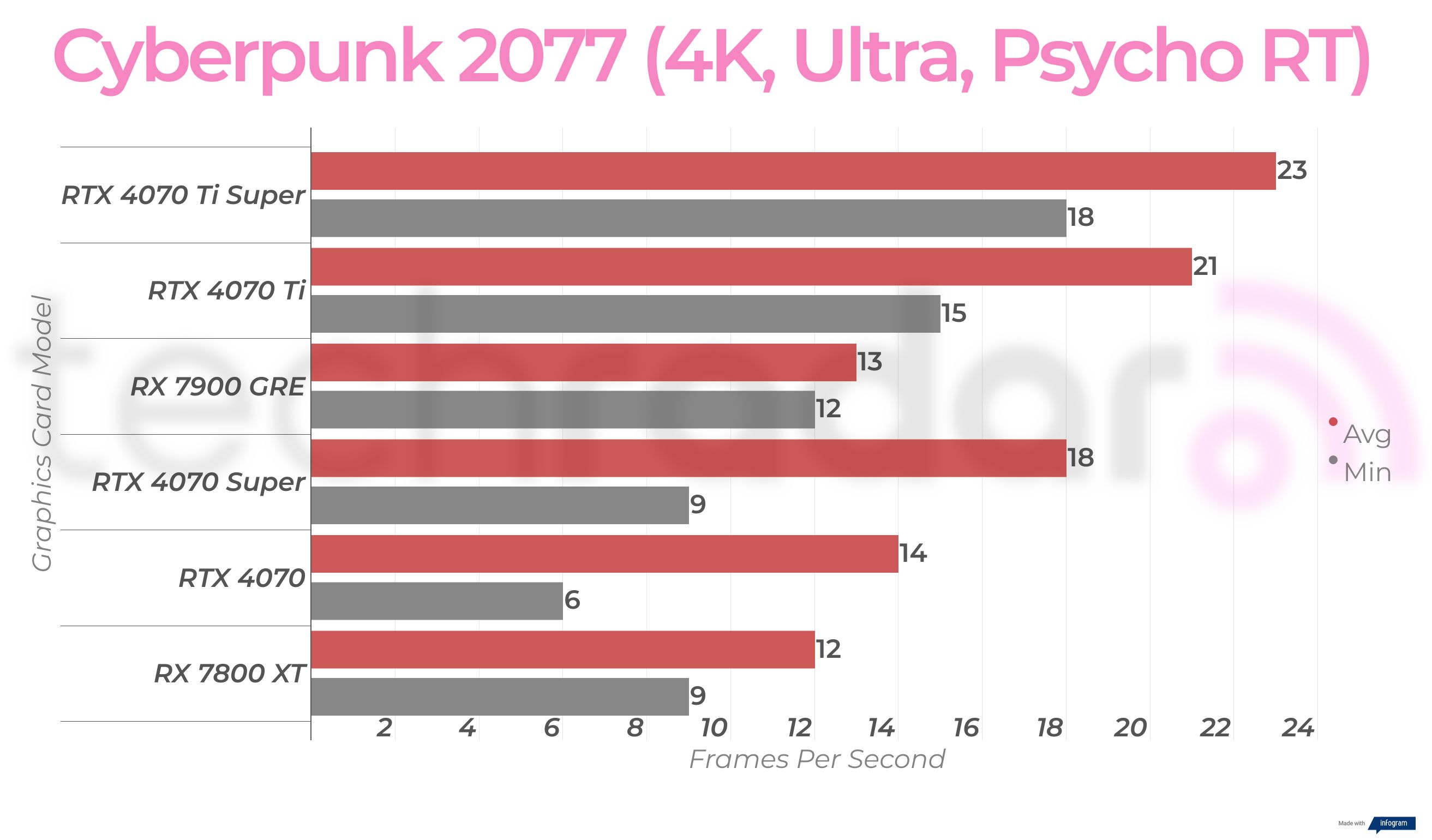

Cyberpunk 2077 (1080p) - 118fps (Low); 107fps (Ultra)

Cyberpunk 2077 (1440p) - 129fps (Low); 89fps (Ultra)

Cyberpunk 2077 RT Ultra - 85fps (1080p); 83fps (1440p)

Red Dead Redemption 2 (1080p) - 147fps (Low); 128fps (Ultra)

Red Dead Redemption 2 (1440p) - 108fps (Low); 86fps (Ultra)

Geekbench 6:

Single - 2,720

Multi - 17,308

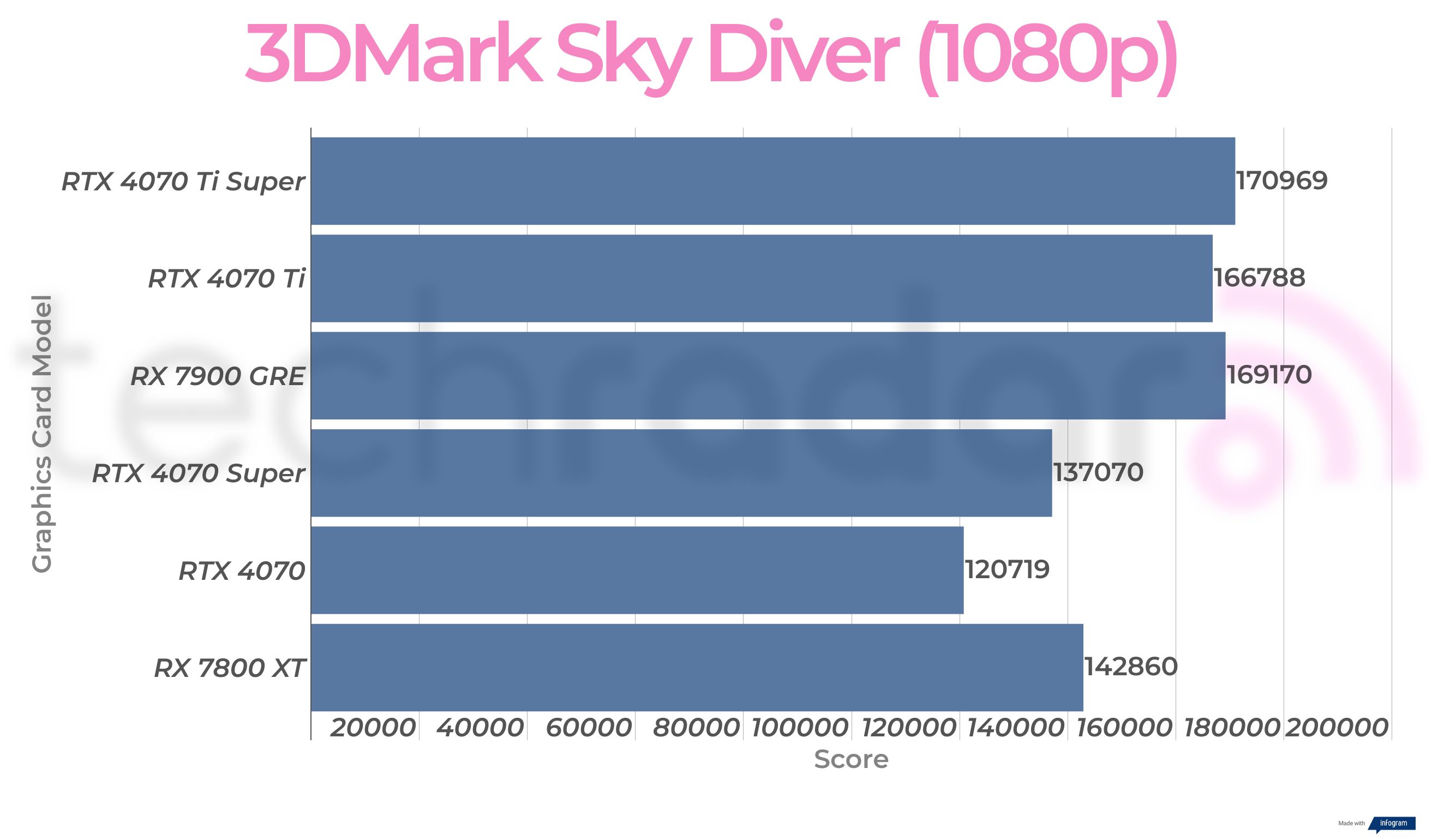

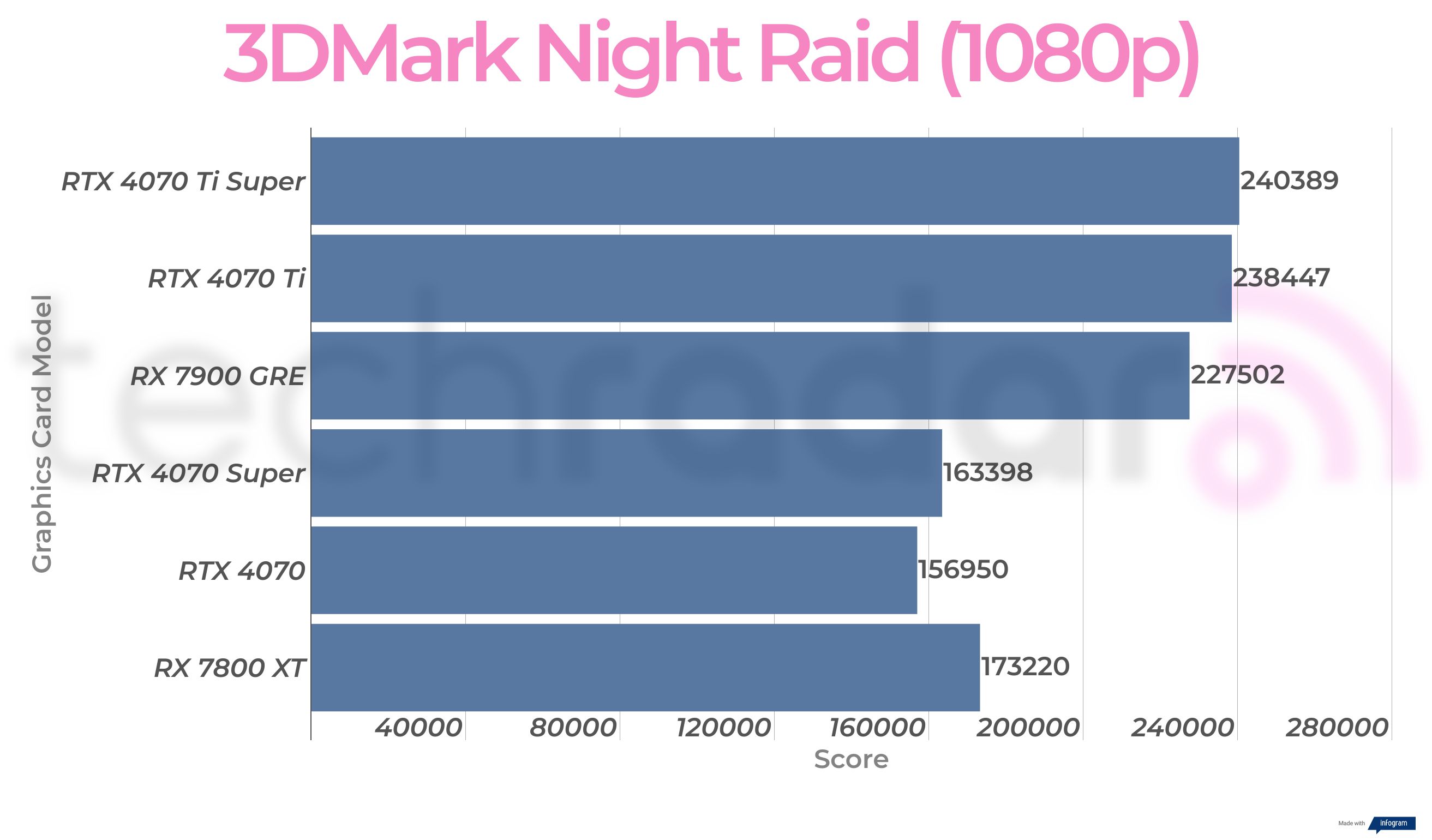

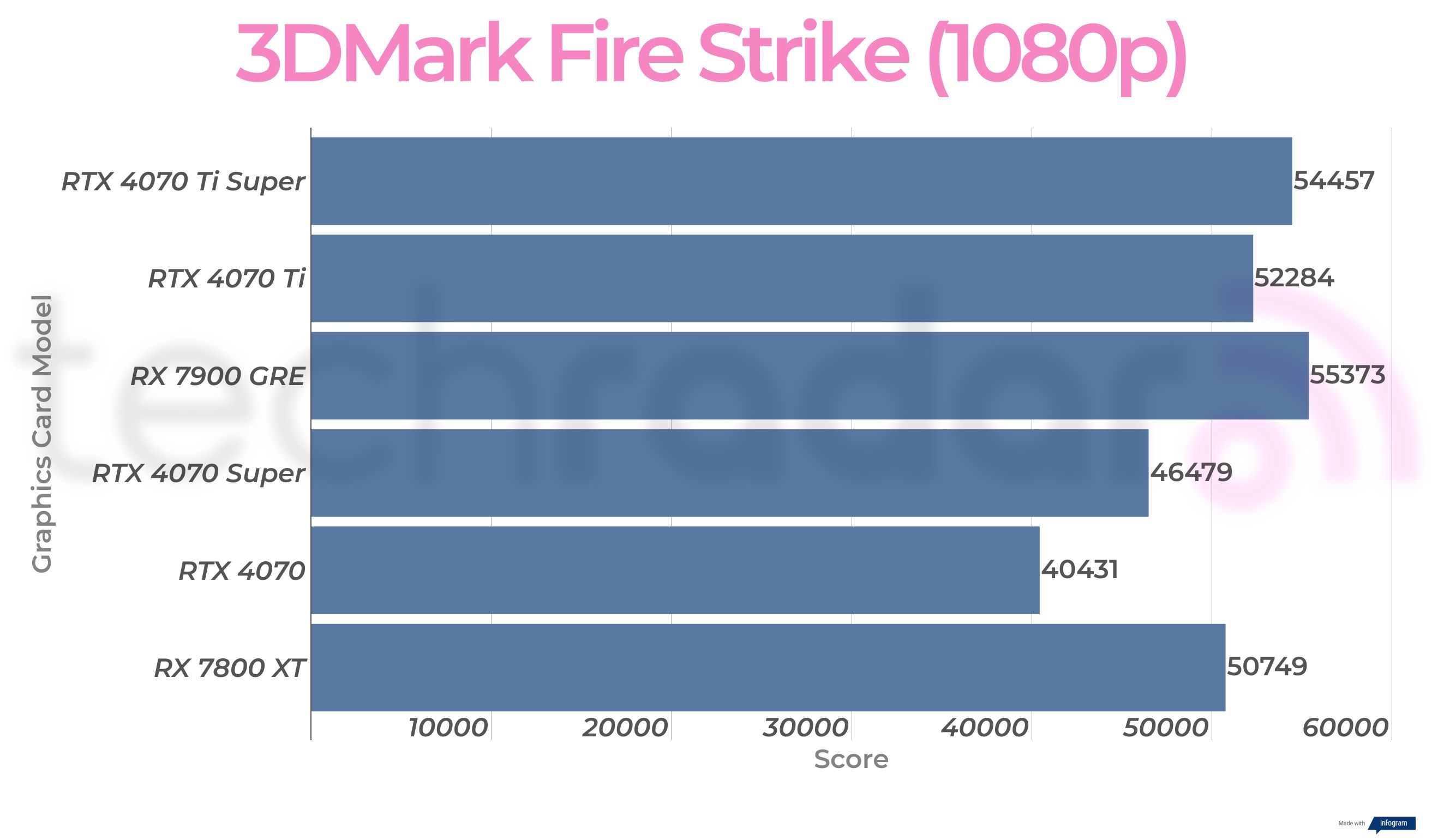

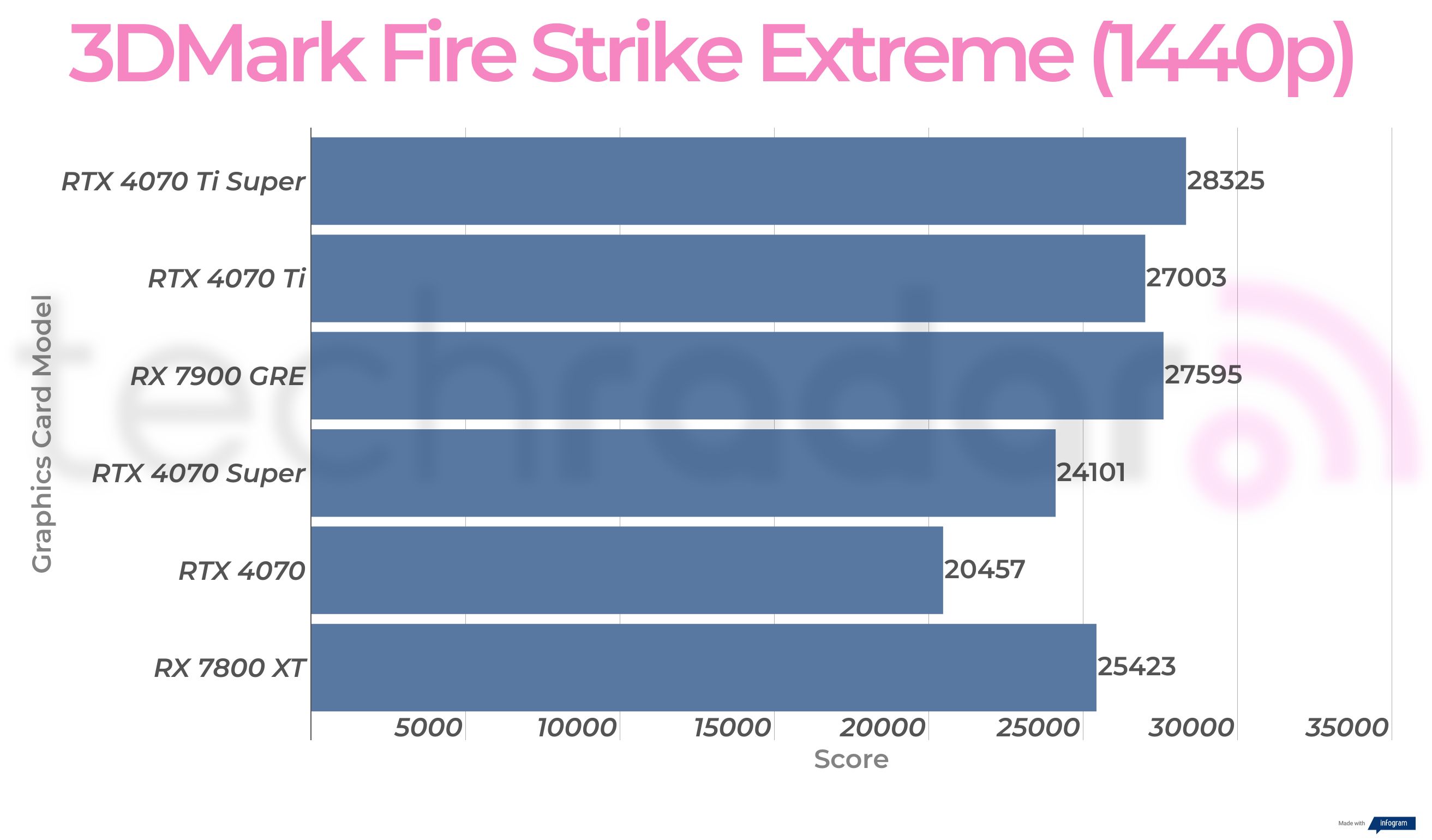

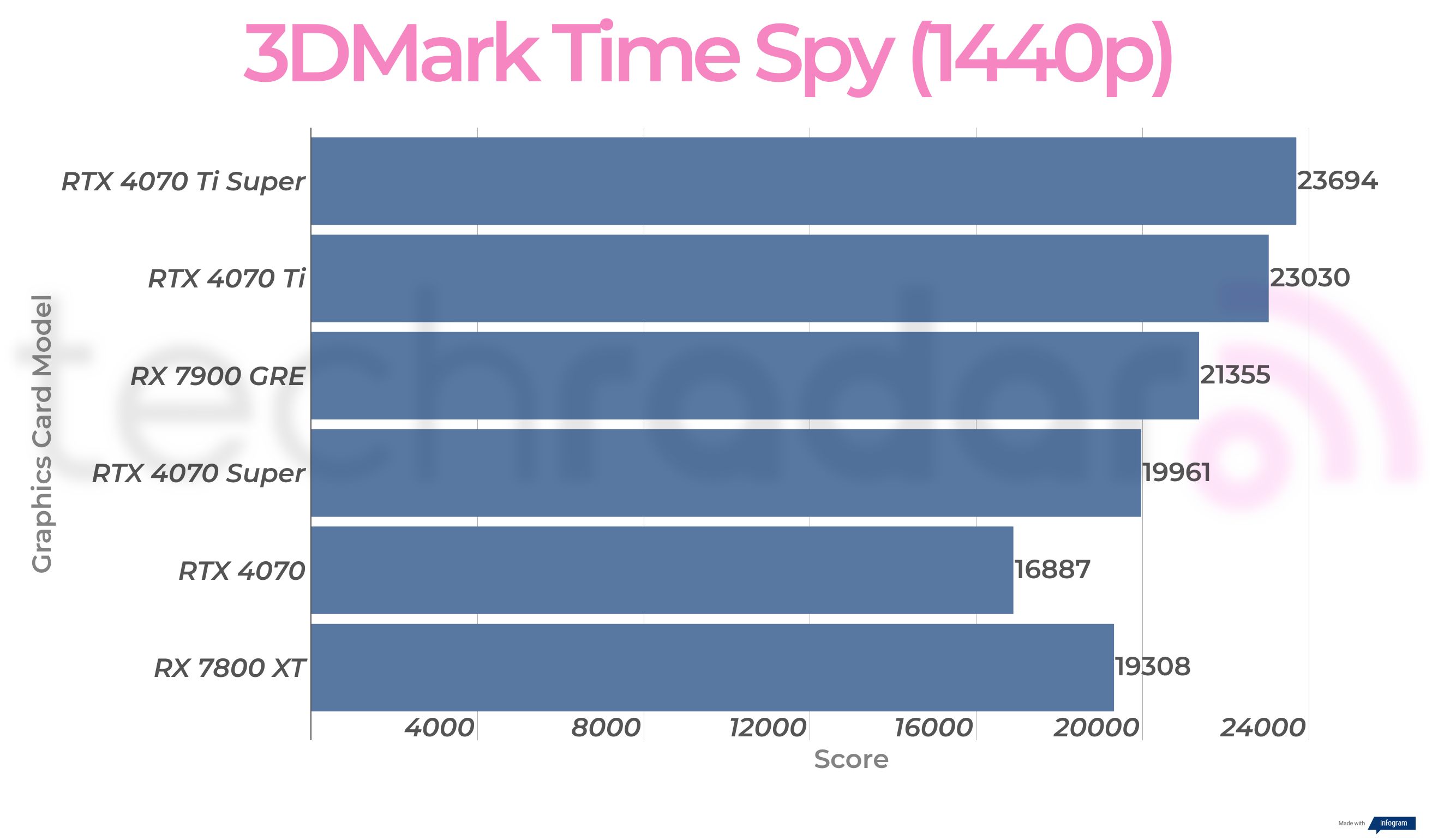

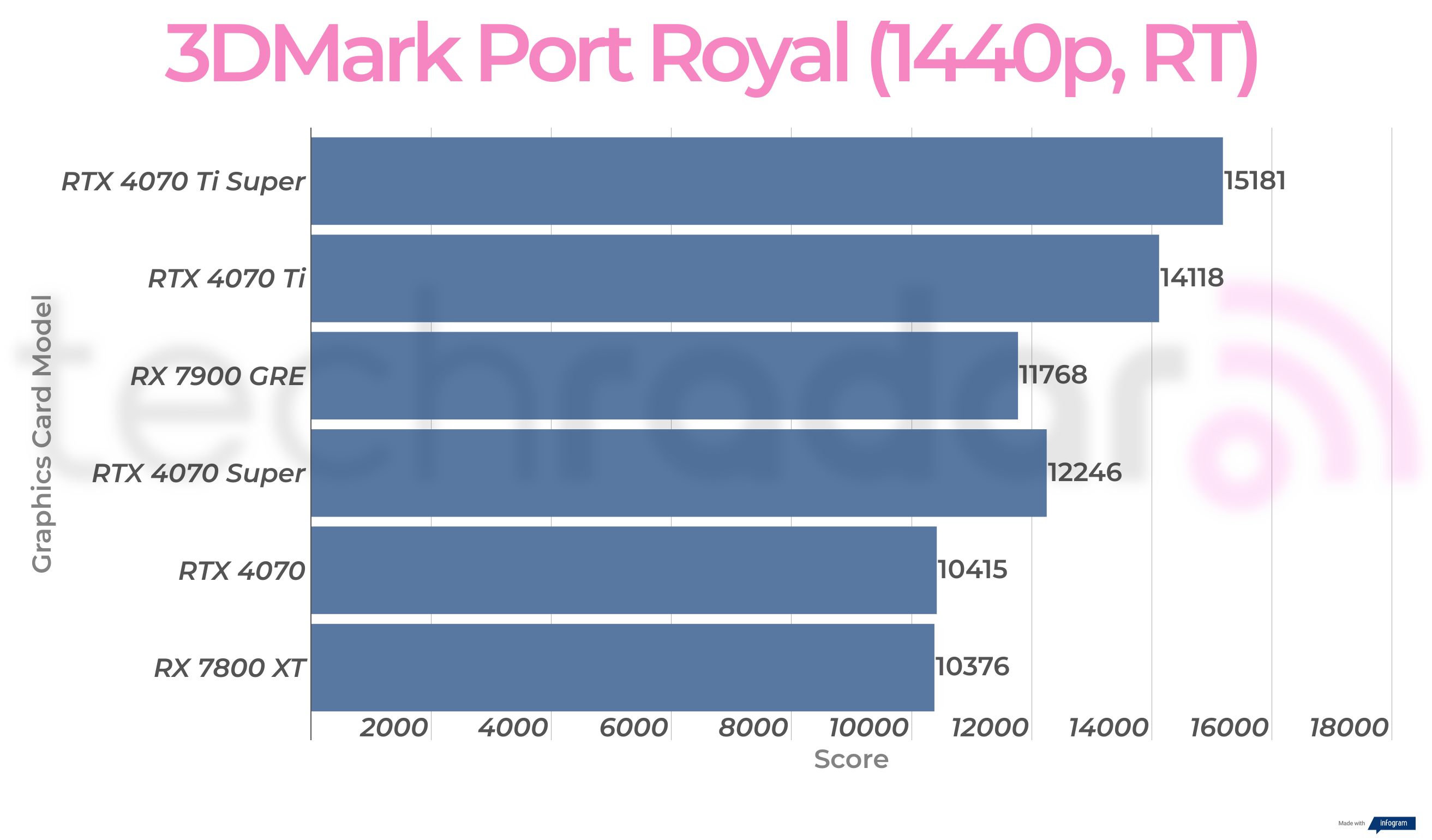

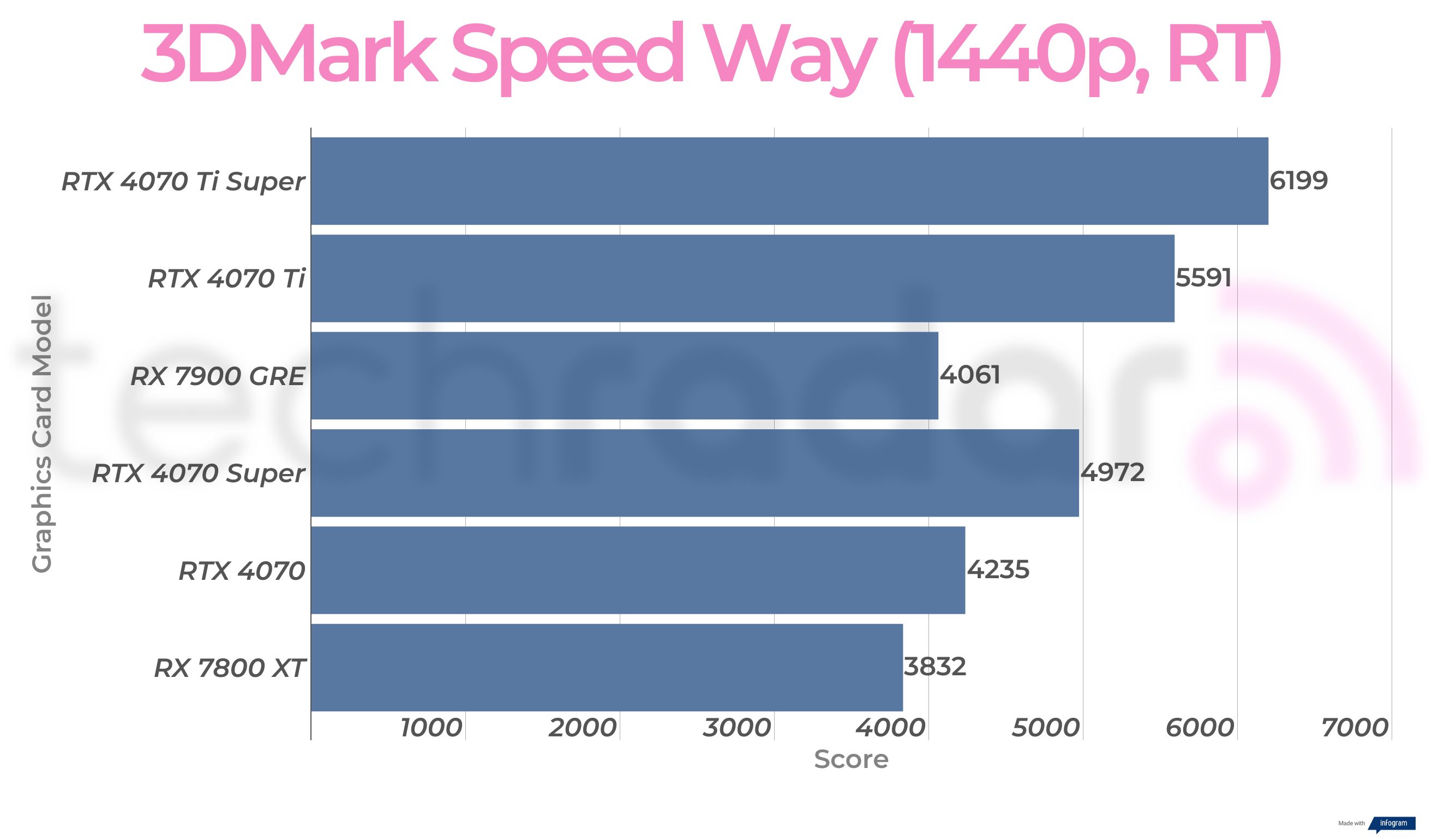

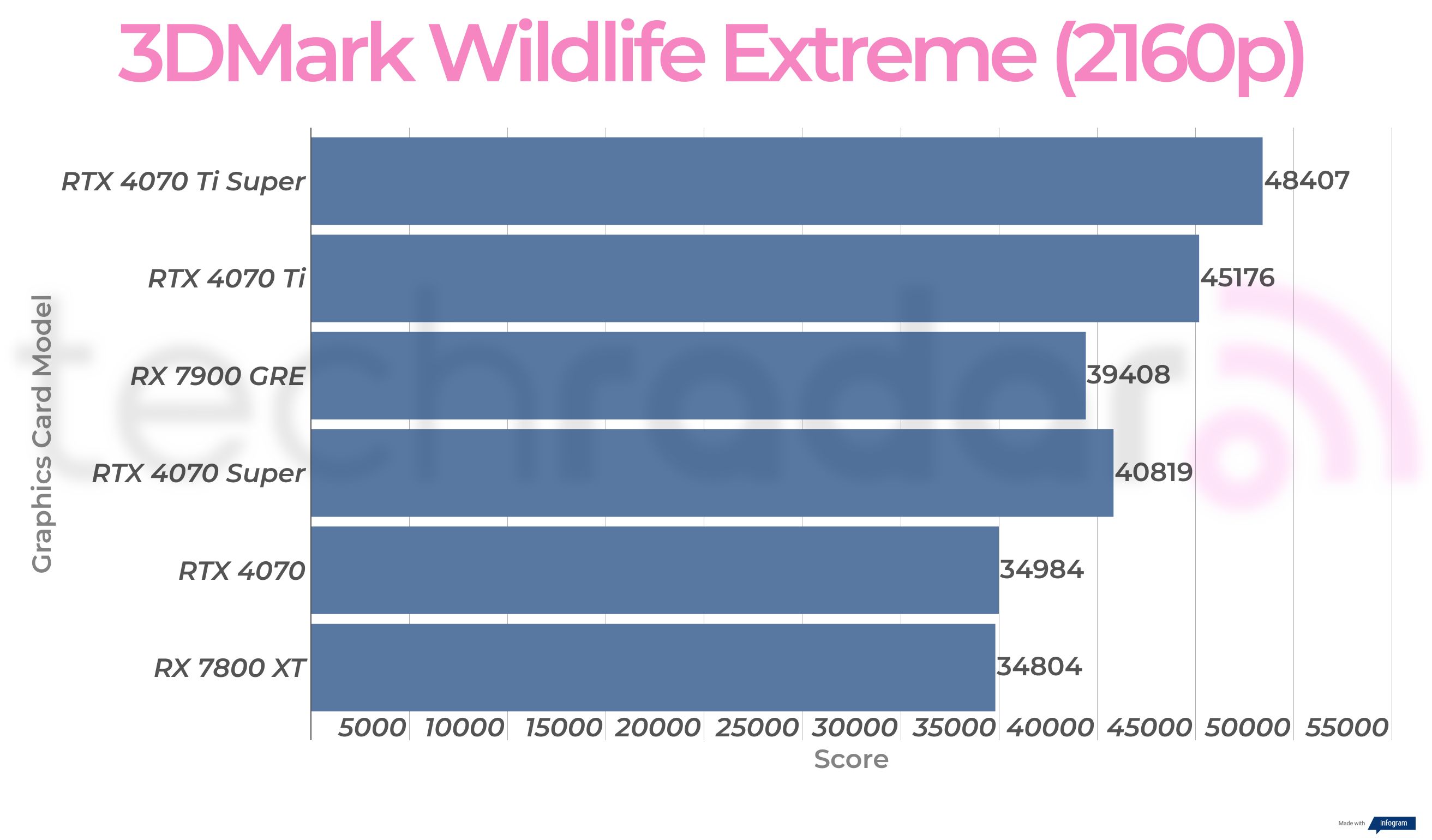

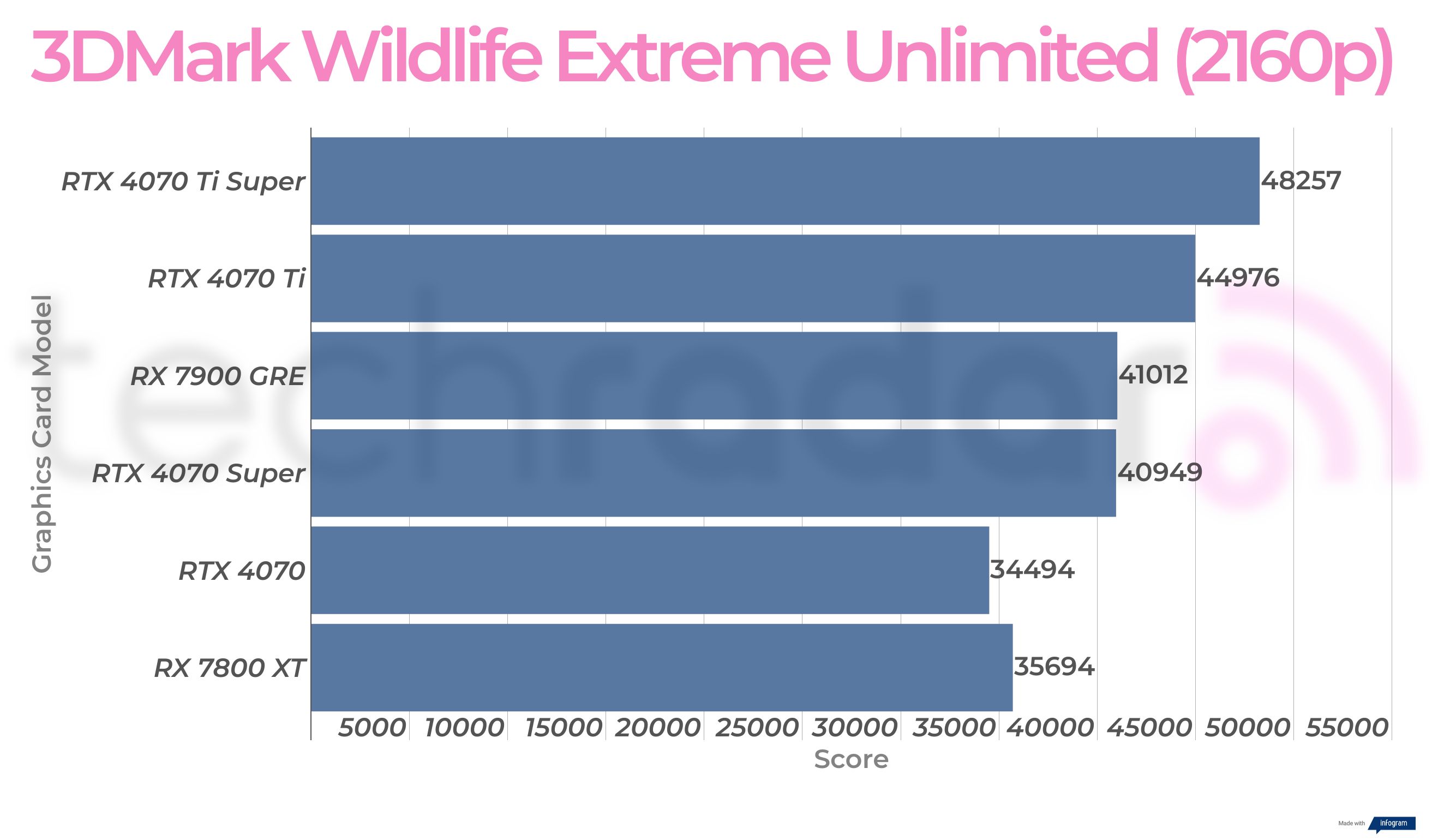

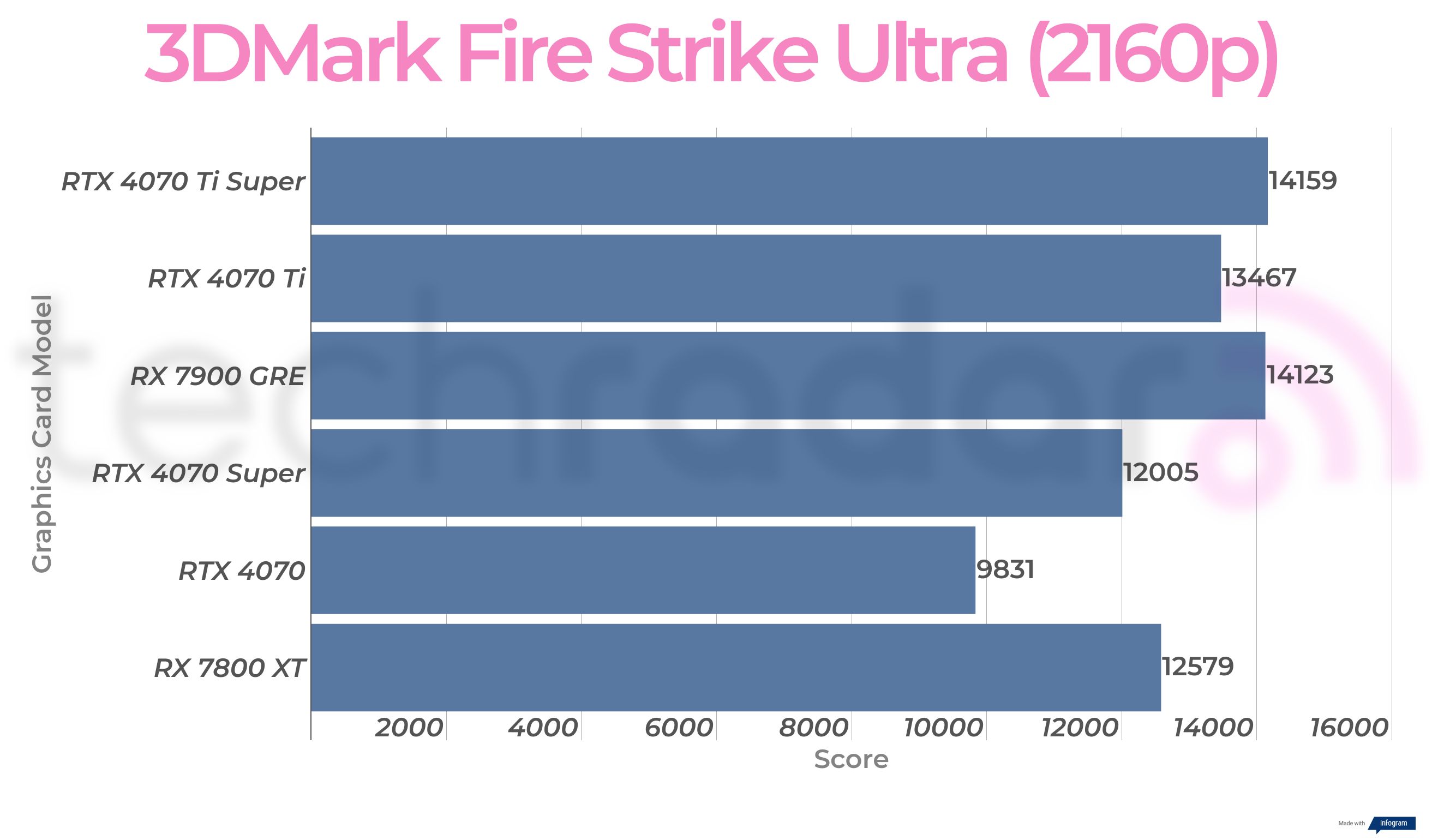

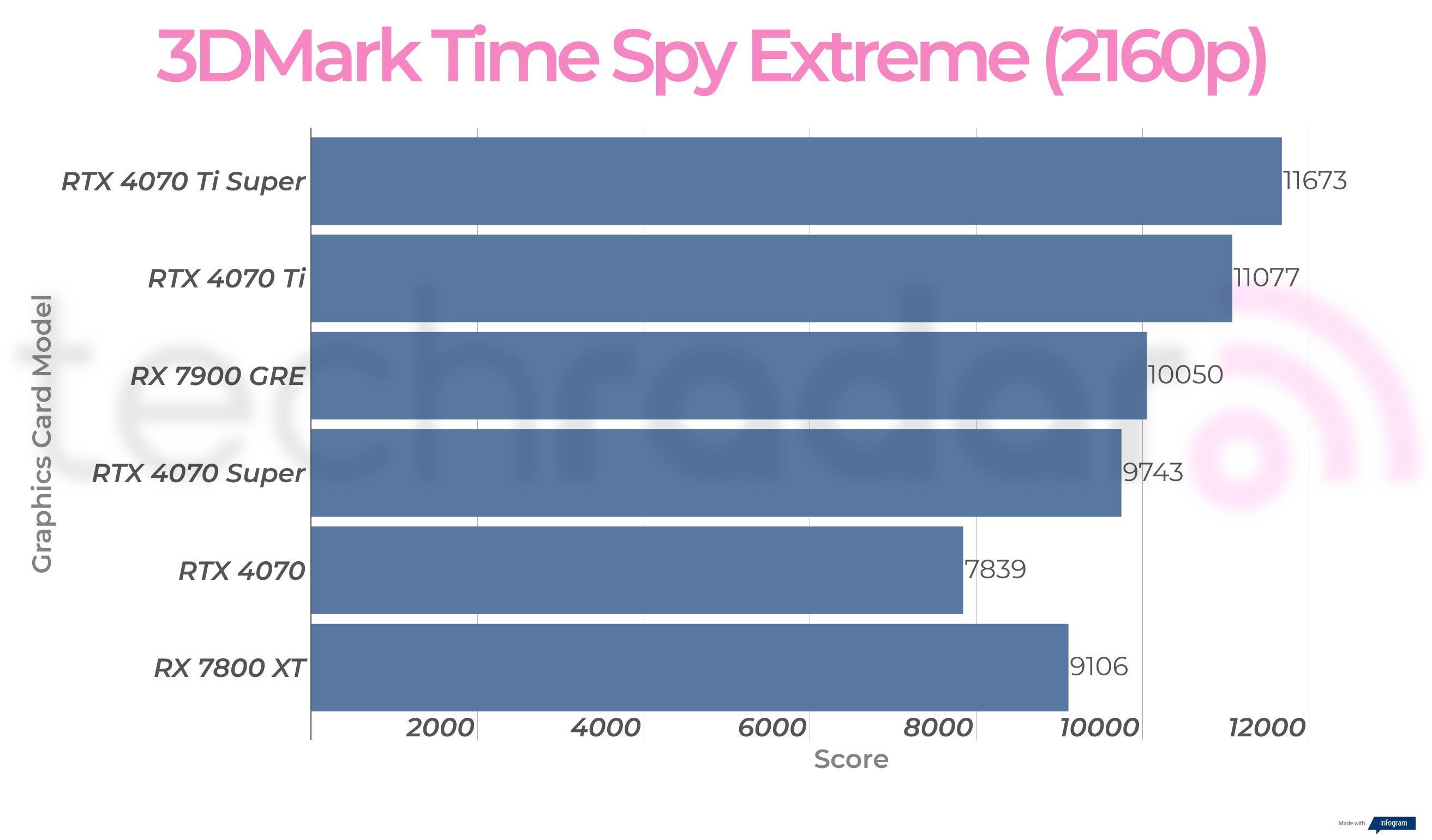

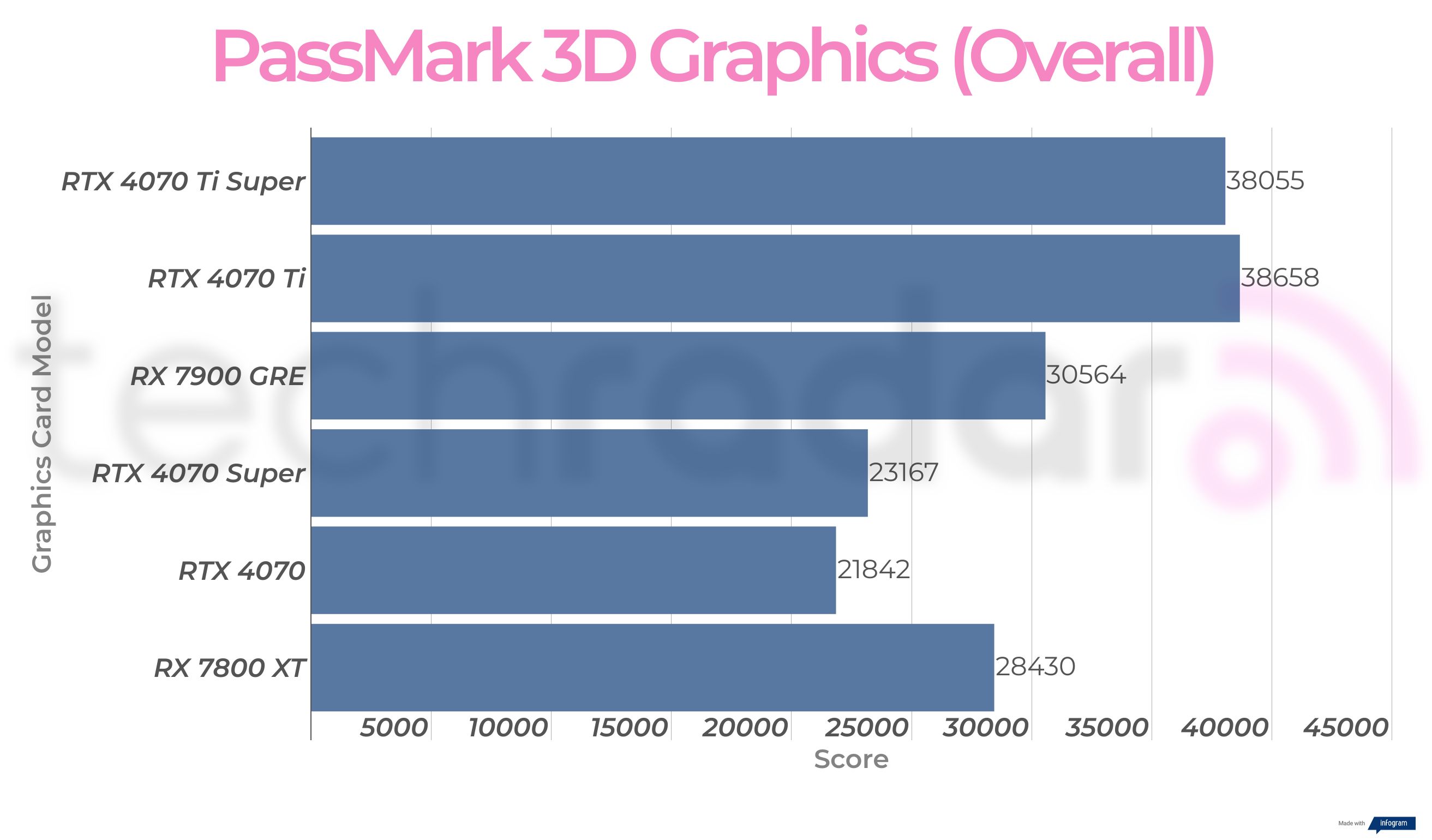

3DMark:

Night Raid - 72,575

Fire Strike - 31,498

Time Spy - 16,866

Port Royal - 11,261

PCMark10: 8,069

CrystalDiskMark: Read - 6,441.97MB/s; Write - 4,872.65MB/s

Cinebench R23:

Single - 1,941

Multi - 25,624

TechRadar battery test: 1 hour 8 minutes

Performance is comparable to what the MSI Titan 18 HX can do, albeit without the 4K resolution, not that you'll necessarily need 4K on such a small display anyway. The demanding games tested, such as Cyberpunk 2077 and Red Dead Redemption 2, exceeded 100fps when maxed out in 1080p and stayed above 85fps in 1440p. Even CPU-bound titles such as Total War: Three Kingdoms were no sweat for the 13900HX, as this game exceeded a lightning-fast 300fps in 1080p on low settings.
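For a rough sense of how much performance the step up in resolution costs, the Ultra-preset results listed in this review can be compared directly. The sketch below is illustrative only: it uses the figures reported here, and the `percent_drop` helper is a hypothetical name rather than part of any benchmarking tool.

```python
# Ultra-preset averages reported in this review: (1080p fps, 1440p fps).
ultra_results = {
    "Total War: Three Kingdoms": (140, 92),
    "Cyberpunk 2077": (107, 89),
    "Red Dead Redemption 2": (128, 86),
}


def percent_drop(fps_1080p: float, fps_1440p: float) -> float:
    """Frame rate lost when moving from 1080p to 1440p, as a percentage."""
    return (fps_1080p - fps_1440p) / fps_1080p * 100


drops = {
    game: round(percent_drop(*fps), 1) for game, fps in ultra_results.items()
}

for game, drop in drops.items():
    print(f"{game}: {drop}% lower at 1440p")
```

On these numbers, Cyberpunk 2077 loses the least (about 17%) when moving to 1440p, while Total War: Three Kingdoms and Red Dead Redemption 2 drop by roughly a third.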

Synthetic figures are equally strong, as evidenced by 3DMark's range of GPU benchmarks alongside PCMark 10. Acer hasn't skimped on the choice of Gen 4.0 NVMe SSD either, with a strong performance of 6,441MB/s for reads and 4,872MB/s for writes. All told, it's a very encouraging package showcasing the prowess of the hardware, but not without a few drawbacks.

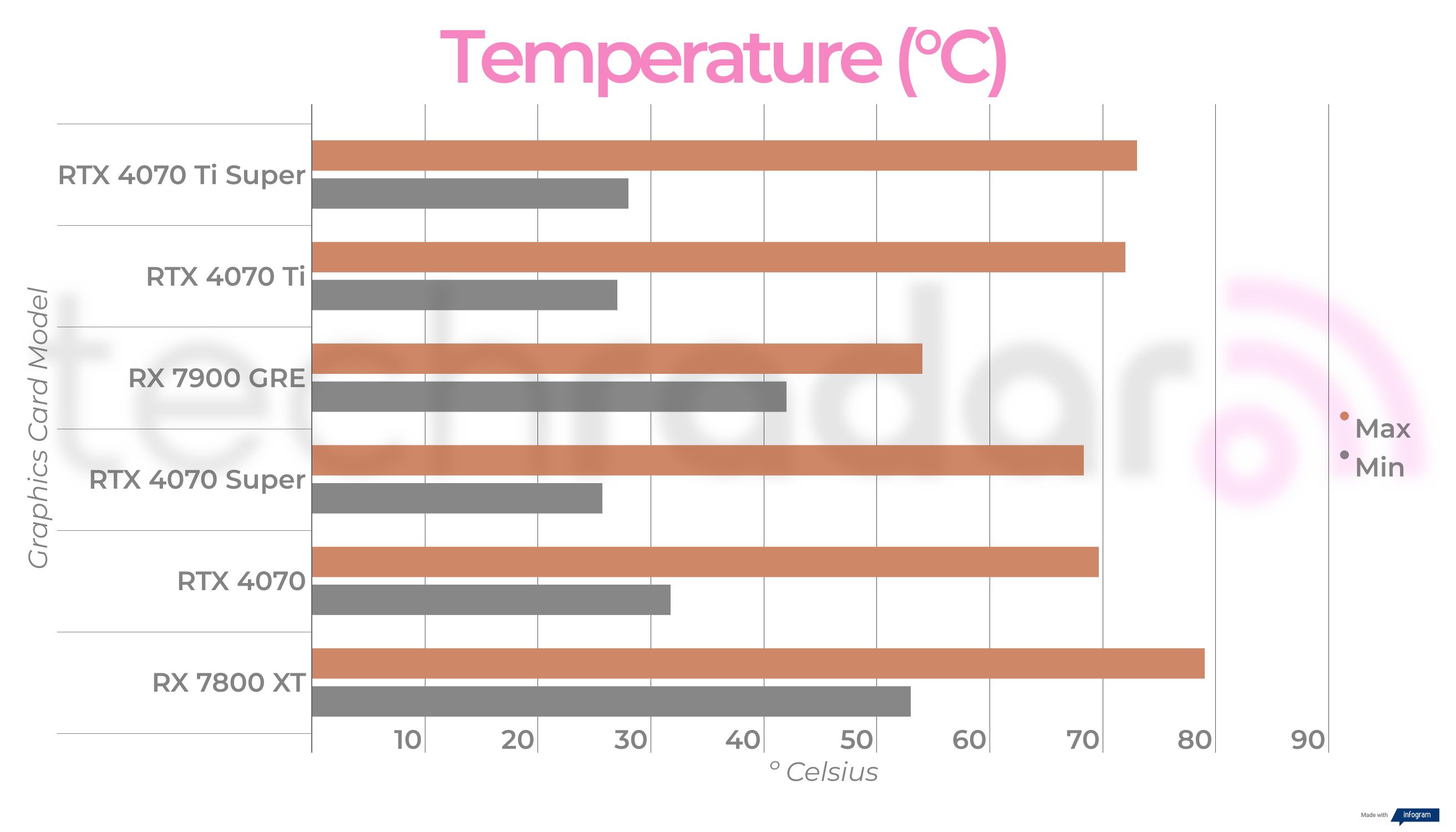

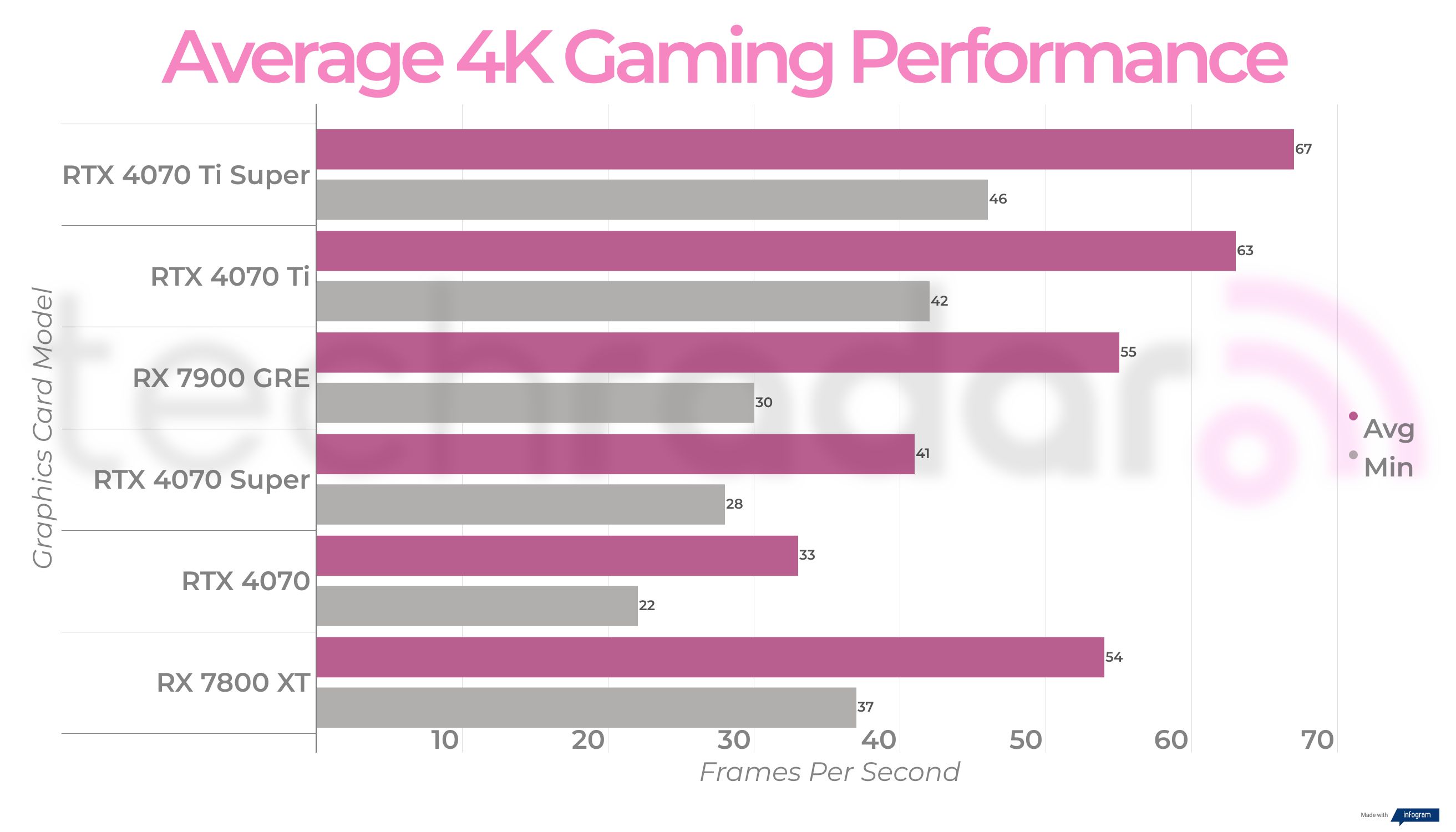

While the RTX 4090M is roughly equivalent to the desktop RTX 4080 with its 16GB GDDR6 VRAM and lower power draw, the combination of CPU and GPU here does result in excess heat and noise. It wasn't uncommon for the rig to reach upwards of 90 degrees when under stress, with the fans drowning out the otherwise impressive six-speaker surround setup. This could be counteracted by using one of the best gaming headsets, but it's worth noting all the same.

Using the HDMI 2.1 port, you'll be able to hook up the Acer Predator Triton 17 X to one of the best gaming monitors for that big screen experience should the 17-inch display not be enough for you. You may also want to invest in a dedicated laptop riser to keep the machine's fans elevated and aid cooling.

- Performance: 4 / 5

Acer Predator Triton 17 X: Battery life

- Lasts around two hours when web browsing or for media playback

- About an hour of gaming on battery power

What's most disappointing about the Acer Predator Triton 17 X is the battery life, which just about manages two hours on a single charge with media playback or casual browsing. When gaming, you can expect about an hour, give or take, so you'll need to keep a charger handy if you want a full evening's gaming session.

Keeping the Acer Predator Triton 17 X plugged in at all times isn't ideal in terms of its portability factor, obviously, but as we already observed, it's a little too large and bulky for that anyway. The battery life is a shame considering there's a 99.98Wh four-cell power pack inside, but it's not too big a shock when factoring in that there's 175W of power drawn by the RTX 4090M GPU alone.
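The relationship between battery capacity and power draw can be sketched with some simple arithmetic. The example below is a back-of-the-envelope estimate, not a measurement: it assumes the full 99.98Wh is usable, that power draw is constant, and it ignores conversion losses. In practice, the GPU is unlikely to run at its full 175W on battery power. The function names are illustrative.

```python
BATTERY_WH = 99.98     # battery capacity quoted in this review
GPU_MAX_W = 175        # maximum GPU power draw quoted in this review
MEASURED_MINUTES = 68  # battery test result: 1 hour 8 minutes


def runtime_minutes(capacity_wh: float, draw_w: float) -> float:
    """Minutes a battery lasts at a constant power draw (assumed ideal)."""
    return capacity_wh / draw_w * 60


def average_draw_w(capacity_wh: float, minutes: float) -> float:
    """Average power draw implied by a measured runtime (assumed ideal)."""
    return capacity_wh / (minutes / 60)


# Runtime if the GPU alone drew its full 175W from the battery.
gpu_only = runtime_minutes(BATTERY_WH, GPU_MAX_W)

# Average whole-system draw implied by the measured battery test.
implied = average_draw_w(BATTERY_WH, MEASURED_MINUTES)

print(f"GPU at full power alone: about {gpu_only:.0f} minutes")
print(f"Implied average draw in the battery test: about {implied:.0f}W")
```

Under these assumptions, the GPU alone could empty the battery in about 34 minutes at full power, and the 1 hour 8 minutes recorded in the battery test implies an average system draw of around 88W.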

Simply put, if you're after excellent battery life for a portable machine then the Acer Predator Triton 17 X won't be for you. Instead, we recommend considering one of the best Ultrabooks, even if you won't get anywhere near the same level of processing power.

- Battery: 2 / 5

Should you buy the Acer Predator Triton 17 X?

Buy it if...

You want a no-compromise gaming experience

The Acer Predator Triton 17 X packs a punch with its RTX 4090 GPU and 13th-gen Core i9 CPU backed with a staggering 64GB of RAM. All that power translates to getting over 100fps in 1080p, and upwards of 85fps in 1440p, with maxed-out details.

You want an out-and-out desktop replacement

With its powerful hardware and generous port selection, you'll be able to hook up the Triton 17 X to an external monitor for a big screen gaming experience.

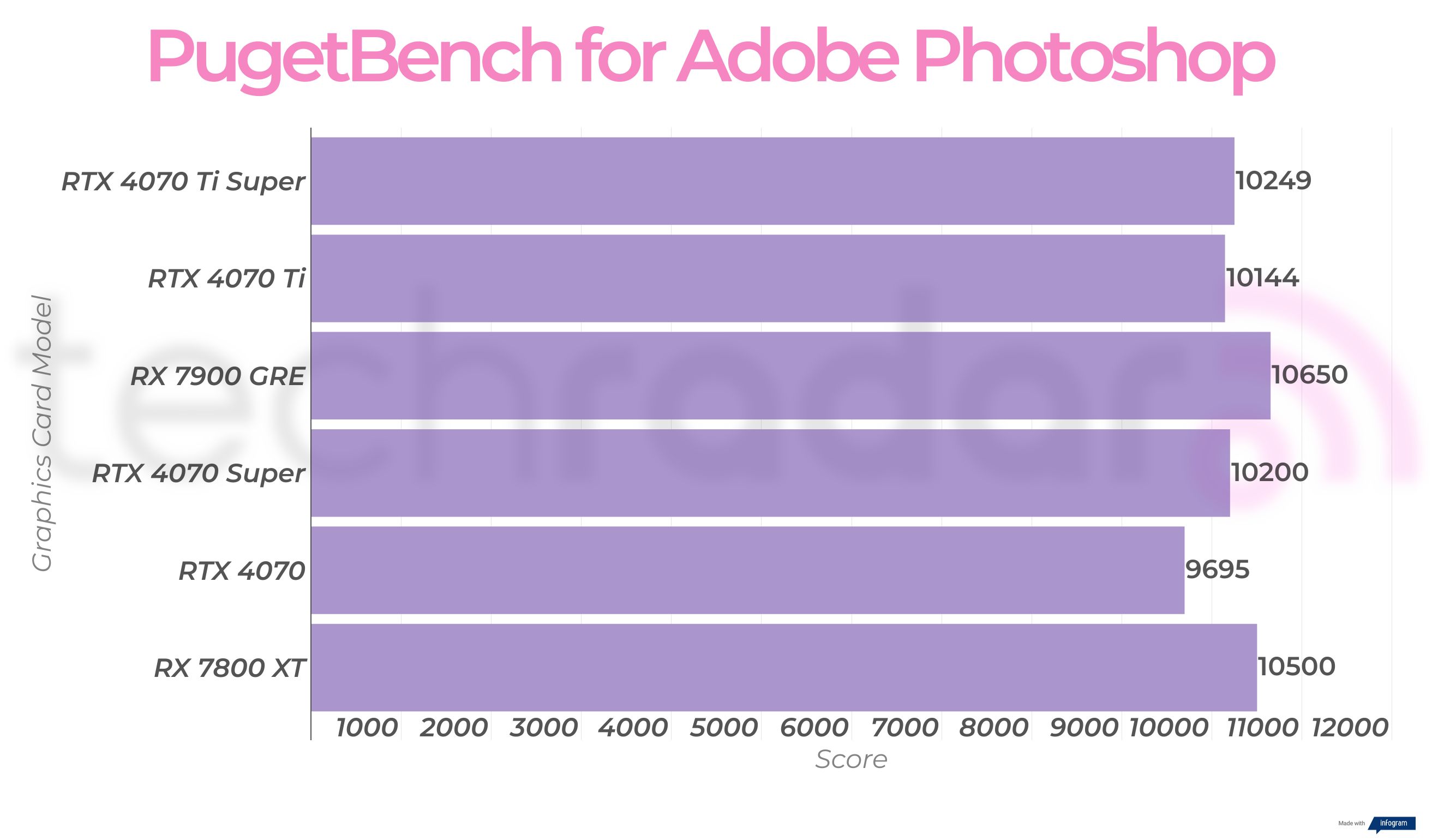

You're in the market for a productivity powerhouse

While the Acer Predator Triton 17 X is geared towards gamers, its 250Hz refresh rate and cutting-edge hardware make it a good choice for creatives who need all the VRAM and raw performance grunt they can get.

Don't buy it if...

You want the best value for money

There's no getting around the eye-watering MSRP of the Acer Predator Triton 17 X at $3,599.99 / £3,299.99 / AU$7,999. If you're on a tighter budget, you'll clearly want to consider a more mid-range model instead.

You want a laptop with a good battery life

Despite its 99.98Wh battery, you can expect only around an hour of gaming when not plugged in. Media playback doubles that to around two hours based on our battery test (conducted at 50% battery with half max brightness). Whatever the case, don't expect much longevity with the Triton 17 X.


- First reviewed June 2024