Asus ProArt P16: 30-second review

CPU: AMD Ryzen™ AI 9 HX 370 Processor; AMD XDNA™ NPU up to 50TOPS

Graphics: NVIDIA® GeForce RTX™ 4070 Laptop GPU, 8GB GDDR6; AMD Radeon™ 890M Graphics

RAM: 64GB LPDDR5X on board

Storage: 2TB M.2 NVMe™ PCIe® 4.0 SSD

Left Ports: DC-in, HDMI 2.1 FRL, USB 4.0 Gen 3 Type-C, USB 3.2 Gen 1 Type-A, 3.5mm Combo Audio Jack

Right Ports: SD Express 7.0 card reader, USB 3.2 Gen 2 Type-A, USB 3.2 Gen 2 Type-C

Connectivity: Wi-Fi 7 (802.11be) (Triple band) 2x2, Bluetooth® 5.4

Audio: Built-in array microphone, Harman/Kardon (Premium)

Camera: FHD camera with IR function to support Windows Hello

Size: 35.49 x 24.69 x 1.49 ~ 1.73 cm (13.97" x 9.72" x 0.59" ~ 0.68")

Weight: 1.85 kg (4.08 lbs)

OS Installed: Windows 11 Home

Accessories: Stylus (Asus Pen SA203H-MPP2.0 support)

The Asus ProArt P16 is one of the fastest 16-inch laptops available, using AI processing to boost performance in demanding applications like Premiere Pro, After Effects, and DaVinci Resolve. Asus has essentially designed this laptop to rival the Apple MacBook Pro, with features tailored specifically for creatives.

The ProArt P16 design supports easy connectivity to various accessories, monitors, projectors, and devices, making it ideal for travelling professionals. The intuitive keyboard and trackpad layout, along with the Asus DialPad, enhance usability in apps like Photoshop, for example by allowing quick adjustment of brush sizes.

The touchscreen's 4096 levels of pressure sensitivity and the included stylus offer precise control for drawing and creative work. The Asus suite of creative software also provides AI-driven organisation of digital images and videos.

At its core, the ProArt P16 boasts superior power, outpacing many competitors, including the MacBook Pro. This is evident when handling 4K and 6K video footage from cameras like the Canon EOS R5 C and Blackmagic Cinema Camera 6K. While an external SSD is necessary for large files, the processor and graphics card handle advanced editing seamlessly.

Like all the best video editing laptops and best laptops for graphic design we've reviewed, the ProArt P16 is targeted at videographers, photographers, designers, and content creators. It meets the high demands of the creative sector. It stands out as a great alternative to the best MacBook Pro laptops, not just for budget reasons but for its performance and features.

Asus ProArt P16: Introduction

The Asus ProArt P16 is marketed as a laptop for the creative sector, ideal for anyone needing a portable, powerful machine capable of content creation. This is no small feat: the processing and graphics power required to edit, manipulate, and enhance the latest 4K and 8K video footage, high-resolution photography, AI content, and traditional sketching and drawing is a lot to ask of one machine.

With the ProArt P16, you have a machine capable of handling the latest video files from cameras such as the Canon EOS R5 and the Sony Alpha 7 IV. This laptop's ability to easily manage files from these high-end mirrorless hybrid cameras highlights just how powerful the ProArt P16 is.

What truly sets this machine apart is not just its processing power, which enables it to handle large and complex file formats, but its design, which is fine-tuned for creatives. The large monitor, which is 100% P3 compliant, ensures that the colours you see on the screen will be accurately reproduced elsewhere. Small details, such as the Asus DialPad and an extensive array of ports around the sides, make for easy control and connectivity. Additionally, the full touchscreen and supplied stylus add to the desirability of this laptop for creatives.

Compared with a traditional laptop, the Asus ProArt P16 seems to be in a league of its own, but can a laptop designed for a niche sector really live up to the demands of creatives?

Asus ProArt P16: Price & availability

The Asus ProArt P16 isn't cheap, with the standard model starting at $2700 / £2600. When it comes to availability, you can purchase it directly through the Asus website, and it is also widely available at most major retailers and online stores.

- Price: 5/5

Asus ProArt P16: Design & build

The Asus ProArt P16 boasts an incredibly sleek design, considering the size of the monitor and the powerful hardware it contains. The laptop measures 35.49 x 24.69 x 1.49 ~ 1.73 cm and weighs 1.85 kg, making it relatively easy to slip into a standard laptop bag or camera backpack. While the weight is on the heavier side compared to typical laptops, it is quite reasonable given its capabilities and on par with the MacBook Pro.

One important consideration is that this laptop requires a proprietary power adapter, which is especially crucial for intensive tasks like video editing that can drain the battery quickly. This adapter is needed as the laptop cannot be charged via a standard USB Type-C charger.

The laptop is extremely well-built and feels tough and durable. It meets the US MIL-STD 810H military-grade standard for durability, so it should withstand more than a knock or two out in the field.

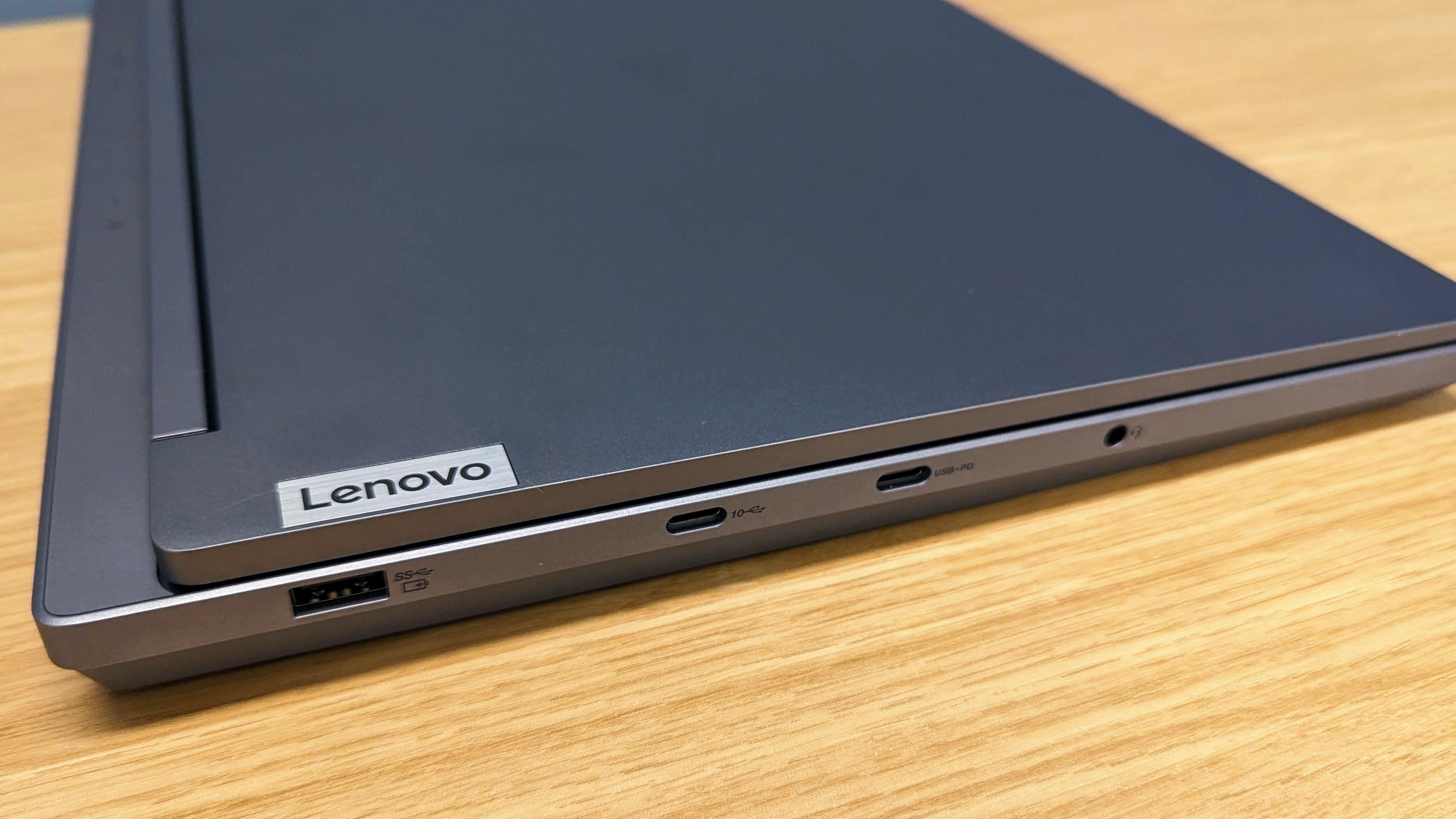

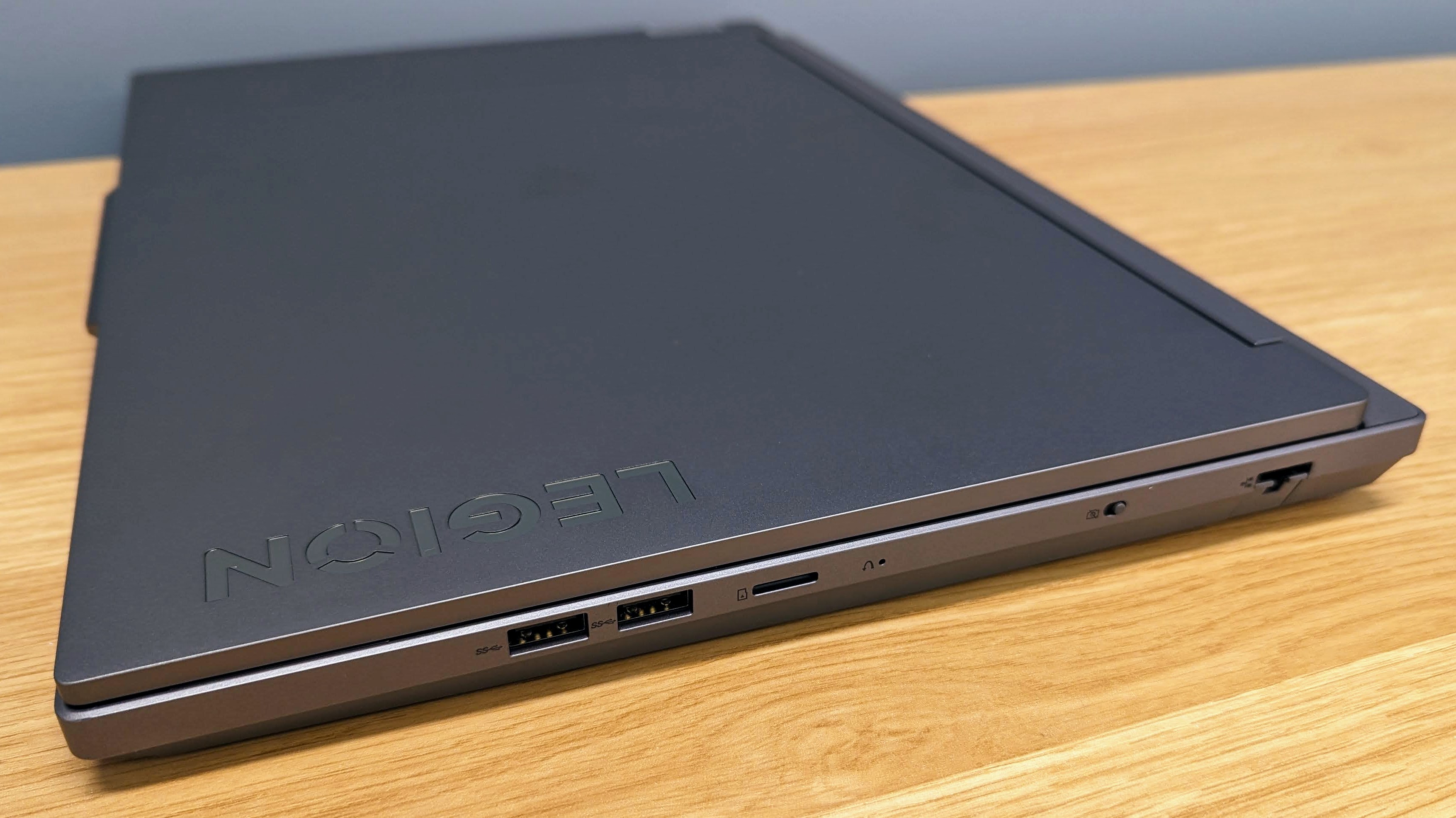

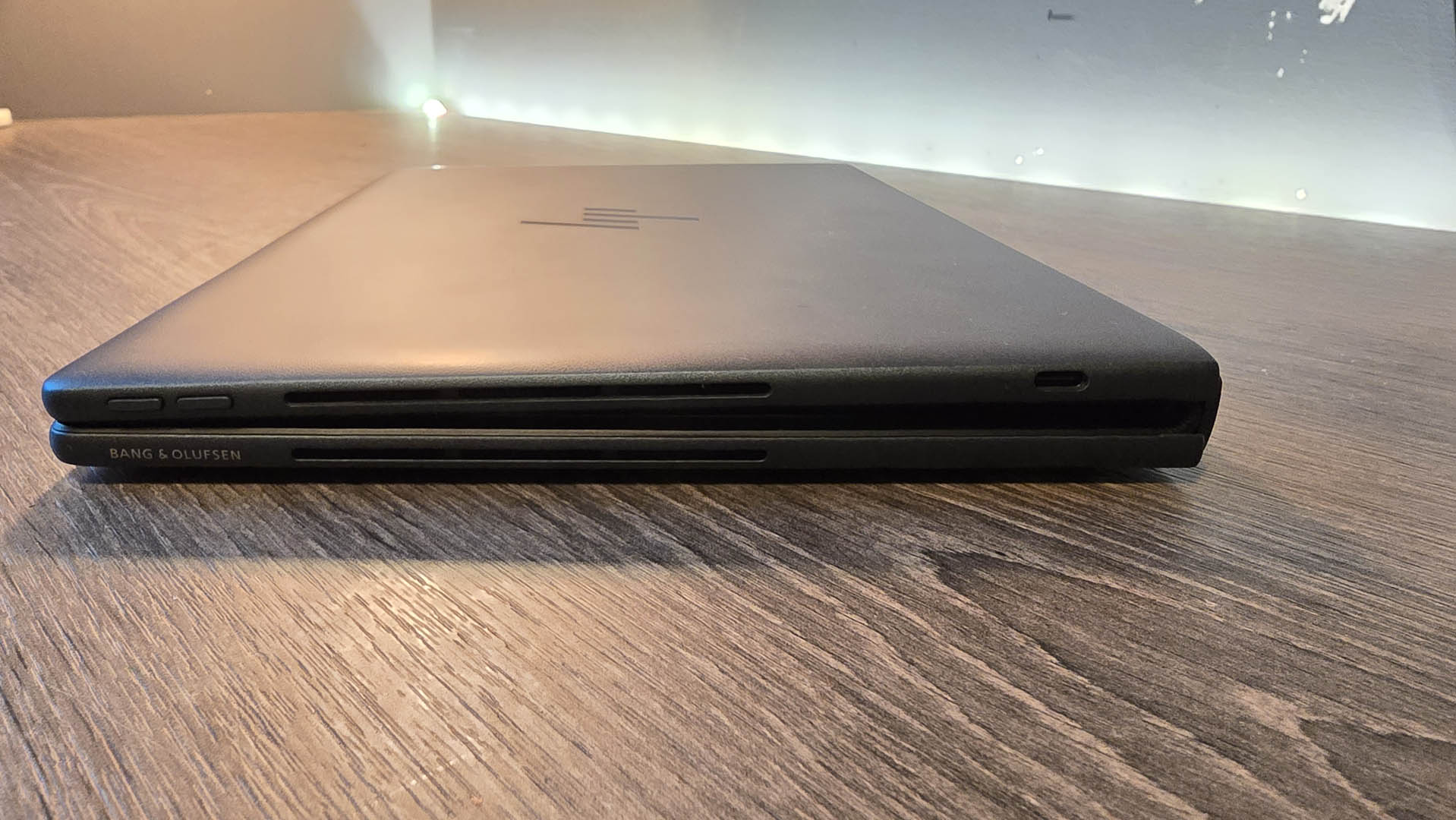

When it comes to some of the standard laptop features, the sides of the laptop offer a good variety of ports. On the left side, there is the DC-in, HDMI 2.1, a USB 4 Type-C, a USB 3.2 Gen 1 Type-A, and an audio combo jack. Flipping over to the right-hand side, there's an SD Express 7.0 card reader, a USB 3.2 Gen 2 Type-A, and a USB 3.2 Gen 2 Type-C. The only thing missing here is a standard Ethernet port, so if you need to connect to a wired network, you will need a USB Type-C to Ethernet adapter.

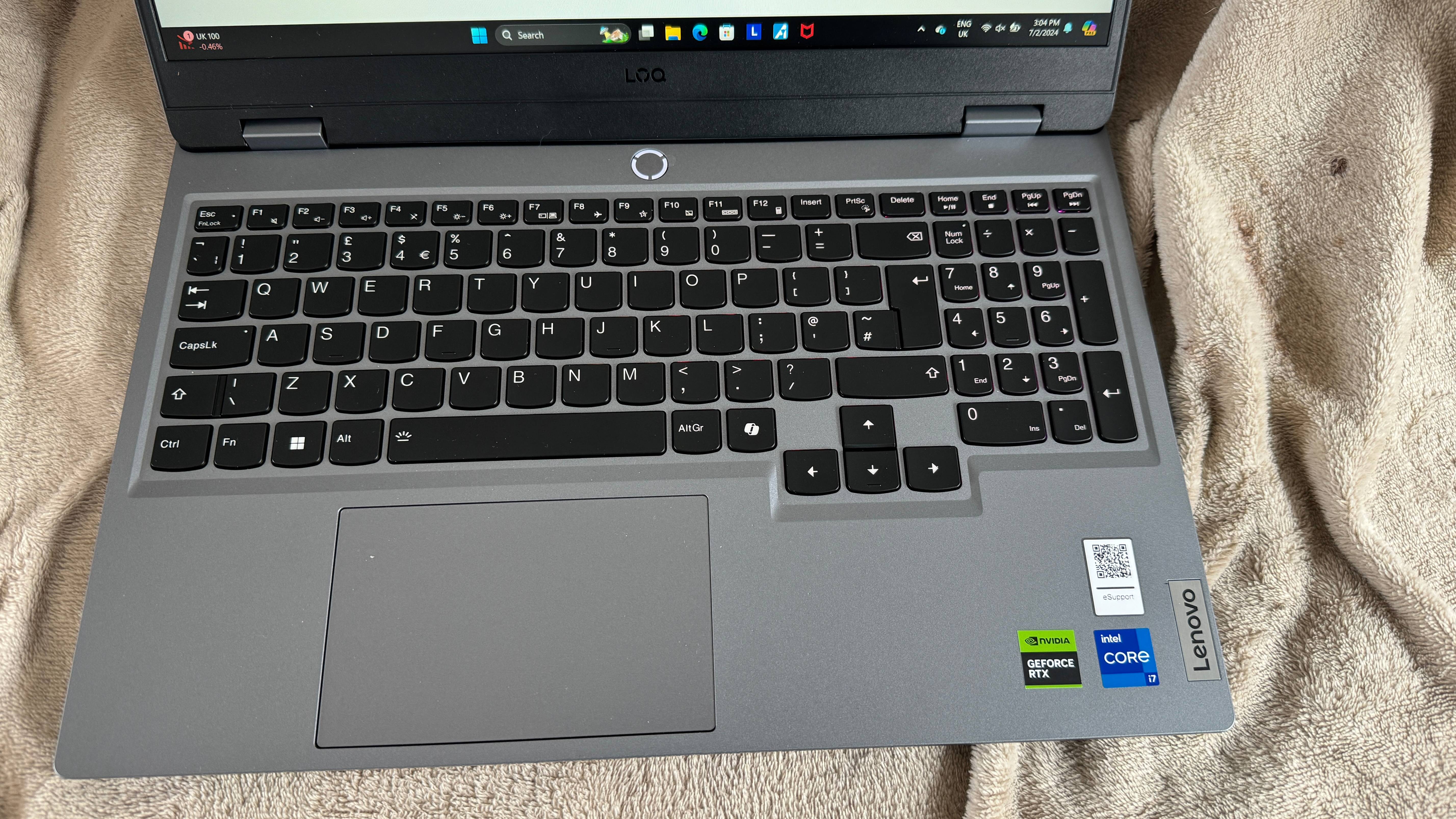

The design of the keyboard is nice and large, with a full layout and all the usual keys that you would want for general admin and office work, as well as shortcuts when using creative applications. A nice feature is the large touchpad, which is really sensitive. You can pop into the settings and adjust its sensitivity as needed. Integrated into this touchpad is the Asus DialPad, which is essentially a circular indent that acts as a touch-sensitive control wheel. A nice function of this is that you can adjust its options and functions through the ProArt Creator Hub and settings.

Another notable design feature is the two speaker panels located on either side of the keyboard. These panels house powerful, high-quality Harman Kardon speakers.

The screen has an almost edge-to-edge design, giving you a big, clear, distraction-free workspace. The display covers 100% of the P3 colour gamut, and if you want to delve into the settings, there's plenty of adjustment available if you need to calibrate it using tools like the Datacolor SpyderX2. The display is also fully touchscreen enabled, and a stylus is included in the package. While this makes the screen relatively glossy, it is not overly so, and reflections are kept to a minimum.

Overall, the slim and relatively lightweight build, combined with its powerful capabilities, makes the Asus ProArt P16 an ideal option for creatives on the move who need a powerhouse of a machine to run the best video editing software or best graphic design software. When sitting down and working at the machine, the full keyboard, trackpad, control dial, and touchscreen with stylus support all contribute to a very appealing laptop for anyone working in the creative sector.

- Design: 5/5

Asus ProArt P16: Features

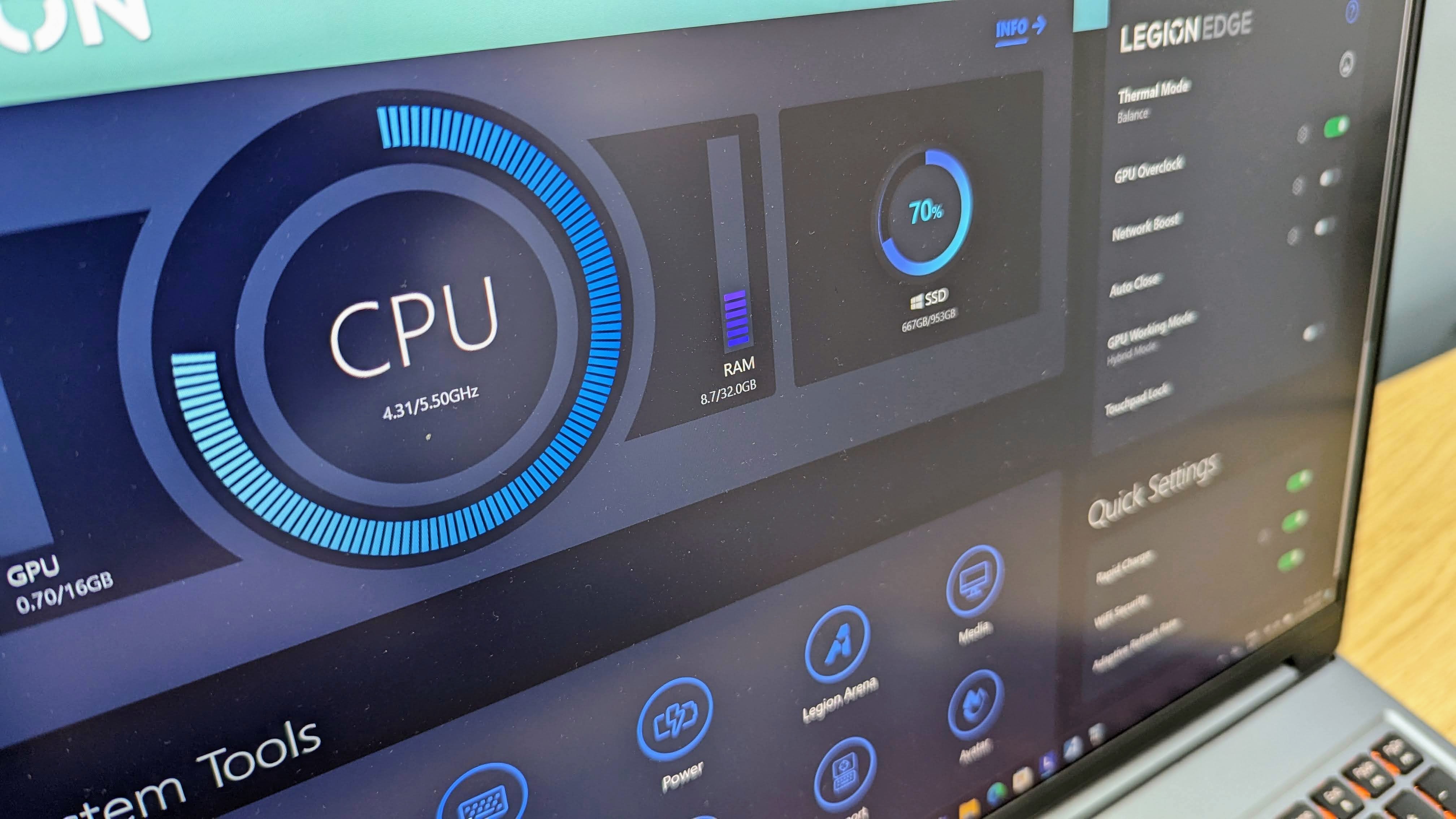

Many laptops are tuned for raw power, especially with the evolution of AI chips. The Asus ProArt P16 exemplifies this, featuring an AMD Ryzen AI 9 HX 370 processor coupled with an NVIDIA GeForce RTX 4070 GPU. The processor offers 50 TOPS, up to 70W CPU TDP, and 5.1 GHz with 12 cores, while the GPU delivers 321 TOPS and 8GB GDDR6 VRAM for real-time ray tracing and AI-augmented computing. Supporting these components are 64GB of LPDDR5X RAM and a 2TB NVMe M.2 SSD for fast storage.

A notable addition is a dedicated AI NPU chip that powers AI features like Copilot in Windows and the Asus AI applications. Support from Adobe and others for this chip is growing, enhancing capabilities in Photoshop and Premiere Pro. The AI-powered apps MuseTree and StoryCube add further value.

The ProArt P16 boasts a large 16-inch OLED touchscreen with 4096 levels of pressure sensitivity and 100% coverage of the P3 colour gamut. It offers a 4K (3840 x 2400) resolution and supports MPP 2.0. The screen is protected with Corning Gorilla Glass 11, providing durability. Audio is impressive, too, with Harman Kardon speakers featuring Dolby Atmos and three array microphones.

Designed to be equally effective in the studio and field, the laptop meets US military-grade durability tests. Weighing 1.85 kg and measuring 14.9 mm at its thinnest point, it is portable and robust, withstanding up to 95% humidity and temperatures from -32°C to 70°C.

The DialPad integrated into the touchpad and the ProArt Creator Hub software offer precise control for various tools, which is beneficial for photo retouching and other creative tasks. MuseTree allows AI-powered sketch realization, and StoryCube aids in managing digital assets. The ProArt Creator Hub provides access to all features, including a Pantone-developed colour management tool.

Despite its power, the laptop's advanced cooling system remains quiet, even under high-demand tasks like Adobe Premiere Pro. The ProArt P16 runs on Windows 11 Home, with an option to upgrade to Pro. It includes dedicated Asus applications like ProArt Creator Hub, MuseTree, and StoryCube, along with Asus DialPad control panel access.

- Features: 5/5

Asus ProArt P16: Performance

Crystal Disk Read: 5001.01MB/s

Crystal Disk Write: 3955.26MB/s

GeekBench CPU Single: 2882

GeekBench CPU Multi: 15197

GeekBench Compute: 1000704

PC Mark: 8149

CineBench CPU Multi: 21915

CineBench CPU Single: 1948

Fire Strike Overall: 238909

Fire Strike Graphics: 26226

Fire Strike Physics: 31027

Fire Strike Combined: 11672

Time Spy Overall: 9953

Time Spy Graphics: 9791

Time Spy CPU: 10988

Wild Life: 45337

Windows Experience: 8.3

Testing out the laptop involved some creative work in the field. We took it along on a video shoot and used it with Premiere Pro to edit footage shot on the Canon EOS R5 C in Canon Log 3 format. The footage was copied from an OWC Atlas CFexpress Type-B card to a Samsung T5 Evo 1TB SSD for storage. The initial handling of the footage was impressive, allowing us to drop the Canon Log 3 files directly into the timeline and start editing and grading. For a 15-minute production, the laptop handled the processing with ease and quickly rendered out the file after three hours of editing, ready for uploading to YouTube.

Editing using just the keyboard and the Asus DialPad was intuitive, making it a nice alternative to an accessory like the Monogram Creator Kit that we usually use. While not quite as intuitive as that dedicated kit, the small pad works incredibly well and can be customised to do exactly what you need, which is great for adjusting settings with a finger touch. The full-size keyboard is also a nice addition, and anyone used to a MacBook Pro will find that the layout isn't too dissimilar. The transition, especially for a shortcut wizard, isn't too much of a leap.

Another noticeable feature of the ProArt P16 is its battery life. Even when editing footage in a high-demand application, we were able to get an hour and eighteen minutes of power before needing to plug into a Bluetti AC200 for portable power for the rest of the edit. For Photoshop and general administration work, the battery life was between three and a half and four and a half hours.

Switching over to photo work, the laptop handled Adobe Photoshop and Lightroom with ease. Even with the 45-megapixel images from the Canon EOS R5 C, there was absolutely no slowdown, even as the number of image layers increased during focus stacking for an upcoming book. Used on a two-day shoot, the laptop worked well for tethered shooting: taking the images directly into Photoshop, stacking them, exporting them, and wirelessly transferring them to the designer, who was able to lay out pages on the fly. It was a nice workflow, and the transition from our usual MacBook Pro to the Asus ProArt P16 wasn't as large a leap as we had initially anticipated.

When it came to creative apps, the ProArt P16 was finely tuned. The power of those AI chips made easy work of extremely heavy processing tasks. What was impressive was that even when editing large 4K video files and the machine was drawing maximum power, the noise from the fans remained minimal.

Another point to note is that while monitoring audio for video is best done through headphones, the small internal speakers had clarity, quality, and decent volume, allowing us to hear the audio even when working outdoors in a busy environment.

To double-check performance, this review was written using Microsoft Office on the machine, and some work on Excel spreadsheets was done to check the computer's ability to handle general admin tasks. As expected, since this computer can handle 4K video editing with ease, there were no issues with administration tasks. Browsing the internet and streaming video content were equally well handled.

Finally, for downtime, we checked out the gaming performance with titles like Hogwarts Legacy and Tekken 8. Once both games were loaded, the machine handled the processing and graphics exceptionally well, far beyond what you'd usually expect from a non-gaming laptop.

After testing and being impressed by the laptop's performance, especially with creative applications, it was time to switch our attention to benchmarking tests to see if the performance of this laptop really was as good as it seemed.

- Performance: 5/5

Should you buy the Asus ProArt P16?

The ProArt P16 is specifically designed for the creative industry, offering a range of features finely tuned for this sector. Creatives—from illustrators and textile designers to photographers and videographers—will appreciate the ability to use a stylus accurately with various creative applications. This is a huge benefit, providing precision and enhancing workflow.

From the outset, this laptop impresses. It is slim, with a large screen and relatively light weight, making it highly portable. Its processing power and GPU capabilities enable the use of the best photo editors, design apps, and editing tools. If you're running high-end creative applications like Adobe Illustrator, Photoshop, Premiere Pro, and DaVinci Resolve, it's an appealing option for professionals.

The laptop includes a variety of ports, allowing easy connectivity to accessories, cameras, monitors, and more. This feature enhances its appeal to creatives who require a versatile and flexible workspace.

Durability is another strong point. With military-grade certification, the ProArt P16 can withstand knocks and bumps, ensuring it survives fieldwork. The full keyboard is excellent for shortcuts and navigation, though an application-specific keyboard for programs like Photoshop or video editing would be a welcome addition.

The Asus DialPad is a standout feature. While it takes some getting used to, it becomes a massive asset for adjusting settings and brush sizes in creative applications. The touchscreen display, with its high sensitivity, allows for direct interaction with artwork, providing precision and a more traditional feel compared to a keyboard and mouse.

Ultimately, the power of this laptop makes the user experience seamless. There is no waiting around, and the workflow is fluid and intuitive. While the Apple MacBook Pro has been a long-standing choice for creatives, the ProArt P16 challenges this dominance. With its powerful features, touchscreen, dial, and creative software suite—including MyAsus, MuseTree, and StoryCube—the ProArt P16 offers a compelling alternative that meets and exceeds the quality and functionality of its Apple counterpart.

Buy it if...

Don't buy it if...
