MSI Modern 14 H: Two-minute review

The MSI Modern 14 H is a low-cost, business-focused laptop that aims to provide bang for the buck rather than pack in every last feature. It has a 14-inch frame measuring 31.4 x 23.6 x 1.9cm (12.4 x 9.3 x 0.73 inches) and weighs 1.6kg (3.53 lbs), which makes it quite portable, though larger than very slim or ultralight models.

The Modern 14 H configuration featured in this review (D13MG-045AU) boasts a relatively powerful Intel Core i9-13900H CPU with 14 cores, 20 threads and a boost frequency of up to 5.4GHz. It also has 16GB of DDR4 3200MHz RAM (upgradeable to 64GB), a 1TB NVMe M.2 SSD and a 53.8Wh battery. The 14-inch display has a resolution of 1920 x 1200 (16:10 ratio) and uses a 60Hz IPS panel with a matte coating and no touchscreen.

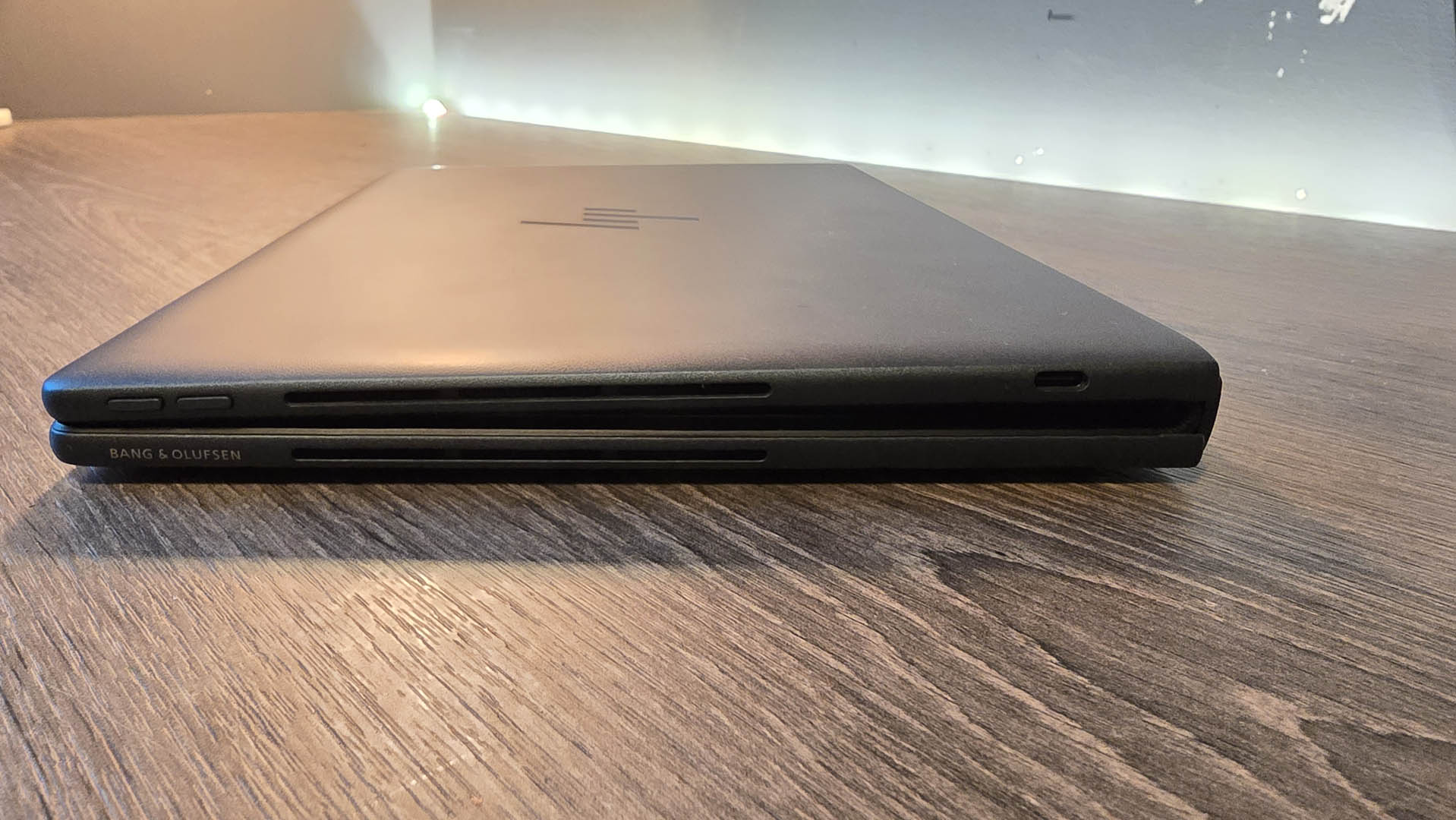

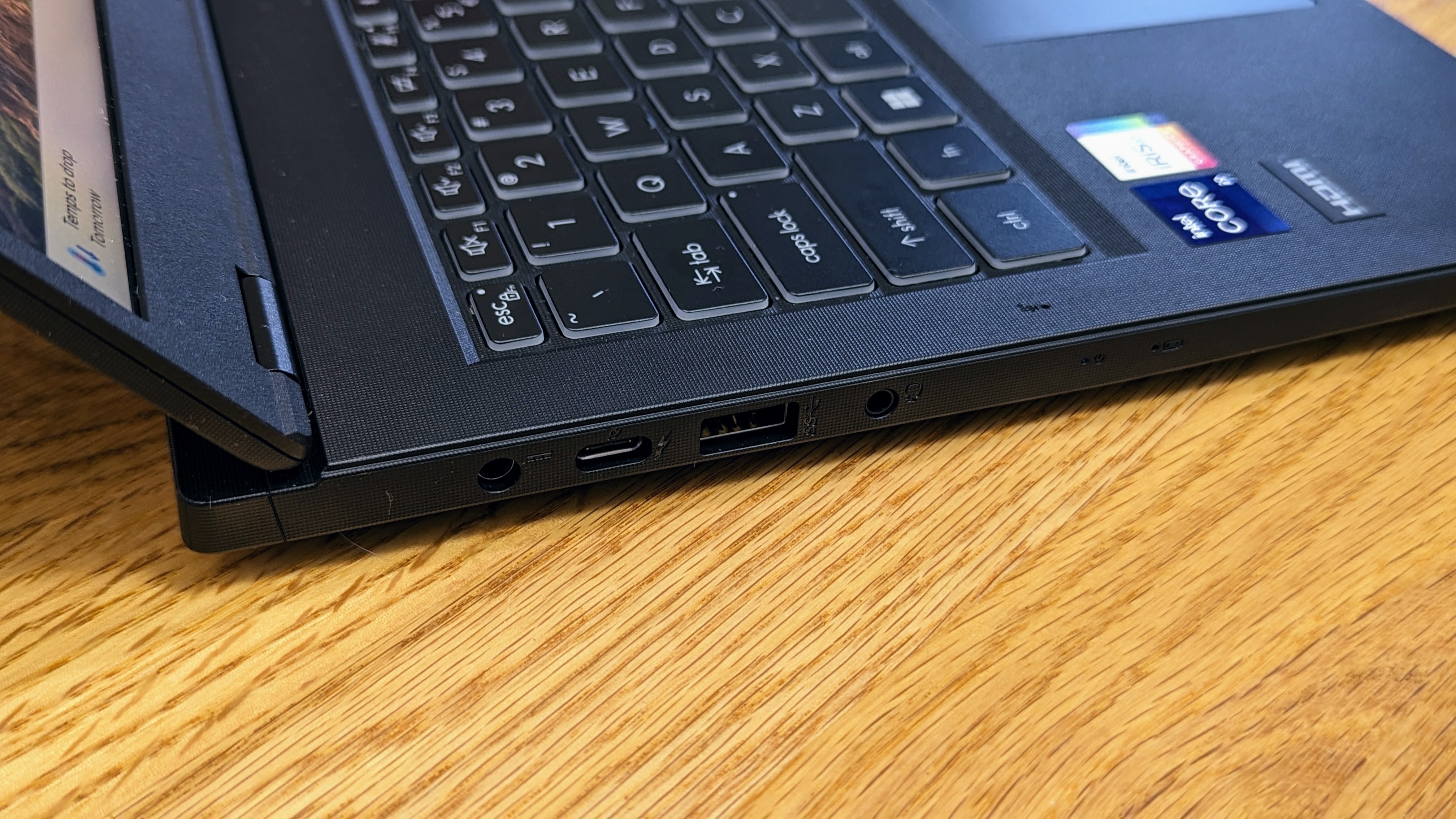

Along the left edge, the Modern 14 H has the DC power input, a USB-C port (40Gbps Thunderbolt 4 with DisplayPort and 100W PD charging), a single USB 3.2 Type-A port and a 3.5mm headset jack. The right side has a vent and a Kensington lock, while the rear has HDMI 2.1, a 1Gb Ethernet connection and two USB-A ports. I wish there were a USB-C port on the rear as well as the side, but the overall port layout is quite neat. The top and bottom panels are metal, and the rest of the shell is a surprisingly rigid, robust-feeling plastic. The included 90W power brick is a little chunkier than expected, but not outrageously large.

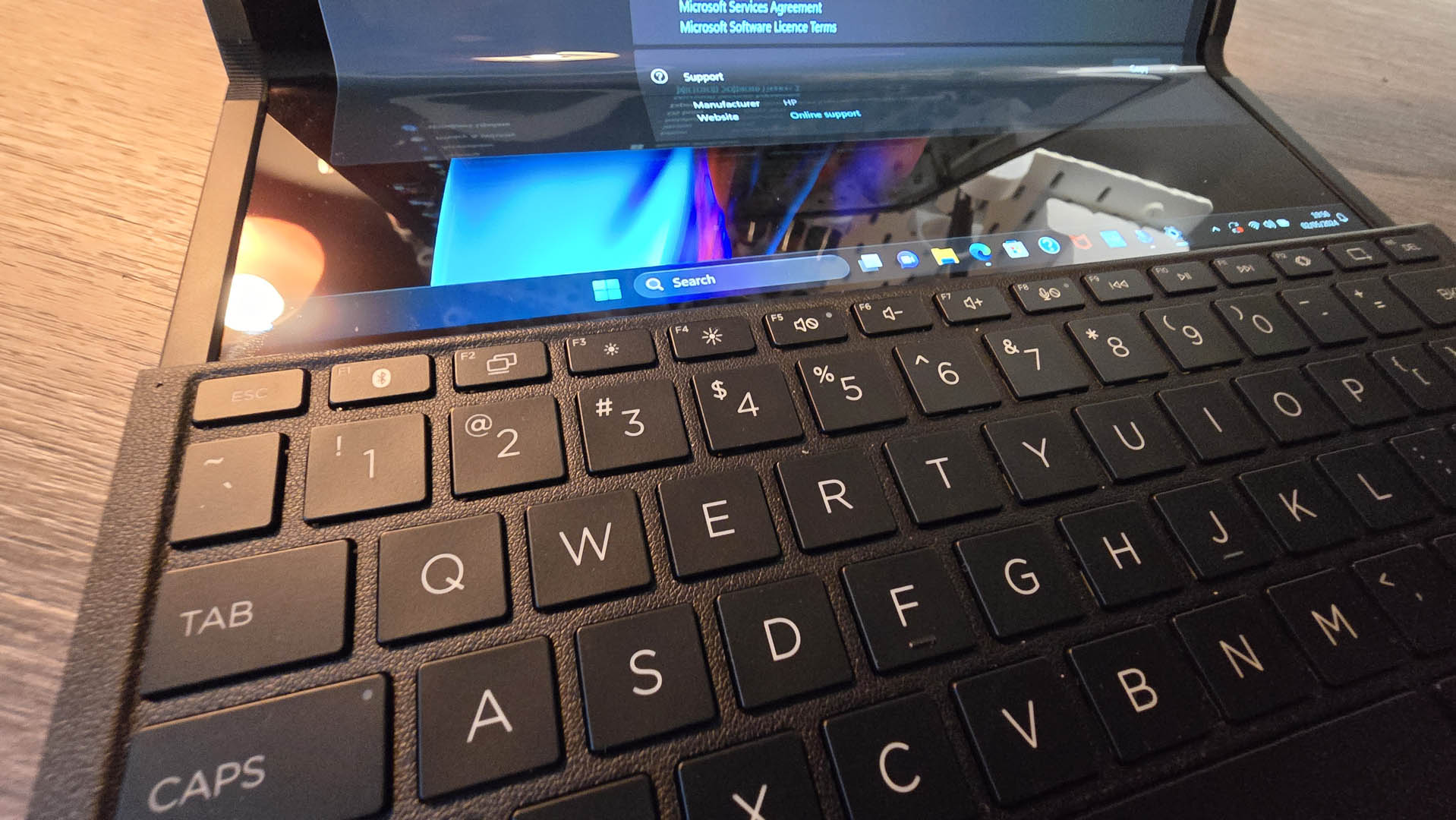

MSI doesn't publish brightness or color accuracy figures for the display, but the screen is perfectly usable for office tasks. It can be laid back almost flat (though not quite a full 180º) and, combined with the display flip hotkey, this makes it easy to show what's on the screen to someone sitting across from you. The 720p webcam is serviceable and has a physical shutter, but it doesn't support facial recognition, and there's no fingerprint reader for fast, secure logins. The keyboard is backlit and comfortable to use despite a little flex, while the trackpad is reasonably large and accurate. The speakers are nothing special but give clear audio and work well for tasks like video meetings.

Equipping the Modern 14 H with an Intel Core i9-13900H is an interesting move, as this slightly older (2023) but relatively powerful CPU isn't often seen in more affordable laptops. Real-world performance also depends on cooling, and the Modern 14 H allows the i9-13900H to sustain near-full CPU power in heavy workloads. The fans are normally near-silent and become audible under load, but they aren't too intrusive. The included MSI management software is straightforward, easy to use and offers a reasonable depth of settings.

The 53.8Wh battery is not especially large, but the MSI laptop manages a pretty decent 6 hours and 25 minutes unplugged when doing office tasks. CPU power is limited to 30W on battery, though the laptop runs at full speed on USB-C power. A full charge takes up to 1.5 hours, but 50% capacity can be reached in just over 30 minutes.

MSI Modern 14 H: Price & availability

- How much does it cost? $999 / £999 / AU$1,599

- When is it available? Available now

- Where is it available? Available in the US, the UK and Australia

The i9-13900H MSI Modern 14 H (D13MG) tested here is still hard to buy in the US and UK, and costs $999 / £999 where available. It's a little more widely available in Australia, where it will set you back AU$1,599 at full price.

When shopping around, keep in mind that there are many MSI Modern 14 variants that don't have the mighty Intel Core i9-13900H CPU. Models equipped with Intel Core i5, Core i7 or AMD Ryzen CPUs are still great laptops, but only the H spec offers higher-end performance and 13th Gen H-series CPUs like the i7-13620H and i9-13900H.

For those who want a larger screen, the MSI Modern 15 H (B13M) can be found for the same price ($999 / £999 / AU$1,599) with an otherwise near-identical spec. Again, the 15 H with the i9-13900H is easiest to buy in Australia, but it is available in the US without too much trouble and in the UK from a limited number of retailers.

In Australia, the Modern 14 H stands out as excellent value among its often feature-packed but more expensive peers. Its comparatively limited availability in the US and UK means the Modern 14 H is often not as price competitive there.

- Value score: 4.5 / 5

MSI Modern 14 H: Specs

The Modern 14 H doesn't come in a huge number of configurations, and not all of them are on sale. The main difference between them is the CPU: H spec laptops can have the i9-13900H, the i7-13620H or the i5-13420H, though only the i9-13900H gets Intel Iris Xe graphics.

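Below is the list of specs for the configuration tested for this review:

- CPU: Intel Core i9-13900H (14 cores, 20 threads, up to 5.4GHz)

- Graphics: Intel Iris Xe

- RAM: 16GB DDR4 3200MHz (upgradeable to 64GB)

- Storage: 1TB NVMe M.2 SSD

- Display: 14-inch, 1920 x 1200 (16:10), 60Hz IPS, matte, non-touch

- Ports: 1x Thunderbolt 4 USB-C (40Gbps, DisplayPort, 100W PD charging), 3x USB Type-A (including one USB 3.2), HDMI 2.1, 1Gb Ethernet, 3.5mm headset jack, DC-in, Kensington lock

- Webcam: 720p with physical shutter

- Battery: 53.8Wh (90W power adapter)

- Dimensions: 31.4 x 23.6 x 1.9cm (12.4 x 9.3 x 0.73 inches)

- Weight: 1.6kg (3.53 lbs)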

- Specs score: 4 / 5

MSI Modern 14 H: Design

- Fold-flat screen

- Thunderbolt 4

- 100W USB-C charging

The Modern 14 H does an admirable job of keeping the design simple and focused on higher performance at an affordable price, so the lack of an IR webcam for facial login and of a fingerprint reader are only minor frustrations. I wish there were a 32GB model available, but at least the laptop uses upgradeable RAM.

The Modern 14 H measures 31.4 x 23.6 x 1.9cm (12.4 x 9.3 x 0.73 inches), and while it has chunky rubber feet to ensure adequate cooling space, they don't cause any issues with even very slim laptop bags. At 1.6kg (3.53 lbs), the laptop feels light in the hand for its overall size.

The Modern 14 H layout is quite clean, and I appreciate the way the ports are all on the left and rear (though left-handers may feel the opposite!). Still, an extra USB-C port (with PD charging) on the rear would have been handy, and the lack of an SD or microSD card reader may frustrate some buyers. On the plus side, because the USB-C port supports Thunderbolt 4 with 100W PD charging, the Modern 14 H is ideal for connecting to a dock with an extra monitor or other high-speed external devices.

The Modern 14 H has a mostly plastic body, with metal top and bottom panels. The plastic itself is quite stiff and, combined with the metal, the 14-inch chassis exhibits very little flex. MSI also says the laptop meets the MIL-STD-810H military standard for durability, and I didn't find any weak points that could lead to premature failure or wear.

The Modern 14 H uses a 16:10 ratio display, which gives a small but welcome amount of extra vertical space compared to a 16:9 screen. The resulting resolution is 1920 x 1200, and while that may seem low, the display is quite sharp and perfectly usable at the 14-inch size. The (almost) 180º fold-flat screen is a nice addition, as is the hotkey for flipping the display orientation to show it to someone sitting opposite. The fold-flat screen also means the laptop works great with a dock and a vertical stand, though I do wish the hinge folded a little further so the screen could lie fully flat.
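To put rough numbers on the "quite sharp" and "extra vertical space" points, here is a small Python sketch of the display arithmetic. It assumes the nominal 14-inch diagonal is exact and compares against a hypothetical 1920 x 1080 (16:9) panel of the same width; the `ppi` helper is purely illustrative.

```python
import math

def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal resolution in pixels divided by diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# Modern 14 H panel: 1920 x 1200 on a nominal 14-inch diagonal (assumed exact)
density = ppi(1920, 1200, 14)

# Extra rows compared with an assumed 1920 x 1080 (16:9) panel of the same width
extra_rows = 1200 - 1080

print(f"Pixel density: {density:.0f} PPI")
print(f"Extra rows vs 16:9: {extra_rows} ({extra_rows / 1080:.0%} more)")
```

On those assumptions the panel works out to roughly 162 pixels per inch, and the 16:10 layout adds 120 rows, about 11% more than a 1080p screen of the same width.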

- Design score: 3.5 / 5

MSI Modern 14 H: Performance

- Powerful CPU

- Not too noisy

- Fast 1TB SSD

Here's how the MSI Modern 14 H performed in the TechRadar suite of benchmark tests:

3DMark: Time Spy: 1,809

Cinebench R23 Multi-core: 13,337 points; Single-core: 2,029 points

PCMark 10: 6,510

CrystalDiskMark 8 NVMe: 5,000MB/s (read); 3,576MB/s (write)

Sid Meier's Civilization VI: Gathering Storm (1080p, Ultra): 25fps

PCMark 10 Battery life: 6 hours and 25 minutes

1080p video playback battery life: 6 hours and 57 minutes

It's important to remember the relatively affordable nature of the MSI Modern 14 H when considering its performance. It's not common to see a higher-end CPU like the Intel Core i9-13900H in a thin and light chassis, though the Modern 14 H opts for DDR4 RAM over DDR5 (the CPU supports both) to help keep costs down. The RAM runs at 3200MHz and, in this case, faster DDR5 likely wouldn't give much of a performance boost.

The MSI Modern 14 H's benchmark results are excellent, and it bested more expensive laptops, even those with the same or a similar CPU. This largely comes down to MSI's engineers making sure the i9-13900H is kept cool enough to run at or above its 45W thermal design power. Unfettered, the CPU can boost up to 115W, but that's reined in, and total system power is limited by the 90W adapter.

The laptop vents hot air out of the rear and right side, and at full power that can result in a warmer-than-usual mousing hand. That's delightful in winter perhaps, but not as nice in a hotter environment. The MSI software in 'AI' mode does an admirable job of keeping the laptop as quiet as possible without limiting performance when needed. For those who like manual control, Performance mode lets the CPU sustain its 45W TDP for extended periods, and under load the fans are plainly audible but not excessively loud.

Balanced mode drops CPU power to 30W, and even with heavy use the fans are only just audible. Quiet mode limits the CPU to a much more modest 20W, so even under sustained load the fans never spin fast enough to be heard. Super Battery mode limits the CPU to just 15W, making many apps noticeably slower, but it still works well for maximizing battery life during lower-impact tasks like video playback.

On battery, the system automatically limits the CPU to 30W – though the impact of this is only noticeable in very CPU-heavy apps. The Modern 14 H doesn’t throttle performance at all when connected to a 100W USB-C charger, and peaks at just over 90W when pushed hard.
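The power limits described above can be summarized as a small lookup. This is an illustrative Python model, not MSI's software: the mode names and wattages come from this review (45W is the sustained Performance figure, not the boost peak), 'AI' mode is left out because it has no fixed limit, and treating the 30W on-battery cap as a simple minimum over the selected mode is an assumption. The `cpu_power_limit` function is hypothetical.

```python
# Sustained CPU power limits per MSI power mode, as reported in this review.
MODE_LIMITS_W = {
    "Performance": 45,
    "Balanced": 30,
    "Quiet": 20,
    "Super Battery": 15,
}

# Cap applied automatically when running on battery (per the review).
ON_BATTERY_CAP_W = 30

def cpu_power_limit(mode: str, on_battery: bool) -> int:
    """Hypothetical model: the effective limit is the mode limit, clamped on battery.

    Mains or USB-C PD power counts as plugged in, since the review found no throttling on USB-C.
    """
    limit = MODE_LIMITS_W[mode]
    return min(limit, ON_BATTERY_CAP_W) if on_battery else limit

for mode in MODE_LIMITS_W:
    plugged = cpu_power_limit(mode, on_battery=False)
    battery = cpu_power_limit(mode, on_battery=True)
    print(f"{mode}: {plugged}W plugged in, {battery}W on battery")
```

Under this model only Performance mode loses power when unplugged (45W down to 30W), which is consistent with the battery limit mainly showing up in very CPU-heavy apps.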

The Modern 14 H's SSD is reasonably fast, and overall the laptop is well suited to work that demands higher-than-average performance. The Intel Iris Xe integrated graphics are decent, but between the integrated GPU and the laptop's cooling, gaming performance is limited (Civilization VI ran at 25fps at 1080p Ultra). This is expected for a business-focused laptop, but you can still get playable frame rates out of older or less demanding games.

- Performance score: 4 / 5

MSI Modern 14 H: Battery life

- 6 hours and 25 minutes of work unplugged

- 6 hours and 57 minutes of video playback

The Modern 14 H has a fairly normal-sized 53.8Wh battery that gives decent but not outstanding battery life. Connected to Wi-Fi, I managed over 6 hours of office work on battery. That isn't quite enough to get through a full day's work without a charger, but it's enough to spend plenty of time unplugged. For less demanding tasks like video playback, the Modern 14 H gets close to 7 hours. If you work the CPU hard, expect as little as 2 hours on battery, or 3 to 4 hours with medium workloads.
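As a rough cross-check, the runtimes above can be converted into average power draw. This Python sketch assumes each test drains the full rated 53.8Wh, which real battery tests may not quite do, so the results are approximate; `hours` and `average_draw_w` are illustrative helpers.

```python
BATTERY_WH = 53.8  # rated battery capacity

def hours(h: int, m: int = 0) -> float:
    """Convert hours and minutes into decimal hours."""
    return h + m / 60

def average_draw_w(battery_wh: float, runtime_h: float) -> float:
    """Average power (watts) that would empty the battery in runtime_h hours."""
    return battery_wh / runtime_h

# Runtimes from the review; the last three are the author's rough estimates.
results = {
    "PCMark 10 office work": hours(6, 25),
    "1080p video playback": hours(6, 57),
    "Heavy CPU load (about 2h)": hours(2),
    "Medium load (3h)": hours(3),
    "Medium load (4h)": hours(4),
}

for name, runtime in results.items():
    print(f"{name}: {average_draw_w(BATTERY_WH, runtime):.1f}W")
```

Worked through, the office-work result implies an average draw of roughly 8.4W and video playback roughly 7.7W, while a 2-hour heavy-load run corresponds to about 27W.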

The Modern 14 H fast charges over USB-C or with its own power adapter, and from 4% it can hit 50% in not much more than 30 minutes. A full charge takes around 1.5 hours. Charging efficiency is above average: from 4% capacity, it took 59.5Wh to charge the 53.8Wh battery. This means you can fully charge the laptop from a suitable 20,000mAh (74Wh) or larger power bank.
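The charging figures above can be worked through as follows. This Python sketch uses the measured 4% starting charge and 59.5Wh input, and assumes efficiency stays constant across the whole charge. The 3.7V nominal cell voltage used to convert mAh to Wh is also an assumption (it is the usual rating voltage for lithium-ion power banks, and it reproduces the 74Wh figure quoted above); the helper names are hypothetical.

```python
BATTERY_WH = 53.8      # rated battery capacity
START_FRACTION = 0.04  # charging began at 4%
ENERGY_IN_WH = 59.5    # energy measured going into the laptop

def charge_efficiency(battery_wh: float, start_fraction: float, energy_in_wh: float) -> float:
    """Fraction of the input energy that ended up stored in the battery."""
    stored_wh = battery_wh * (1 - start_fraction)
    return stored_wh / energy_in_wh

def mah_to_wh(mah: float, nominal_volts: float = 3.7) -> float:
    """Convert a power bank's mAh rating to watt-hours at an assumed nominal voltage."""
    return mah * nominal_volts / 1000

eff = charge_efficiency(BATTERY_WH, START_FRACTION, ENERGY_IN_WH)

# Energy the laptop must receive for a 0-100% charge, assuming constant efficiency
needed_from_empty = BATTERY_WH / eff

# Rated capacity of a 20,000mAh bank, and the share of it that must reach the laptop
bank_wh = mah_to_wh(20_000)
required_bank_eff = needed_from_empty / bank_wh

print(f"Charge efficiency: {eff:.1%}")
print(f"Needed from empty: {needed_from_empty:.1f}Wh")
print(f"20,000mAh bank: {bank_wh:.0f}Wh")
print(f"Bank must deliver at least {required_bank_eff:.0%} of its rating")
```

On these numbers, charging is roughly 87% efficient, so a full charge from empty needs about 62Wh delivered to the laptop. A 74Wh power bank covers that as long as it delivers at least about 84% of its rated capacity after its own conversion losses.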

The Modern 14 H doesn’t throttle performance when connected to USB-C – meaning you can get full performance for heavy workloads when on the go by using a power bank. In my testing, a 20,000mAh (74Wh) power bank gives an extra 5 hours or so of demanding work, like video editing.

- Battery life score: 4 / 5

Should you buy the MSI Modern 14 H?

Buy it if...

You need above-average performance

Thanks to the powerful Intel Core i9-13900H CPU, the Modern 14 H outperforms several more expensive laptops.

You use a USB-C dock

The Modern 14 H runs at full performance on USB-C and has a 40Gbps Thunderbolt 4 connection with DisplayPort, so it is ideal for use with a dock.

You want value for money

Despite the modest price tag, the Modern 14 H offers a mix of features that mean it is well suited to handling heavy workloads.

Don't buy it if...

You need all-day battery life

Just over 6 hours on battery is nothing to sneeze at, but it won't get you through a full day's work without charging along the way.

You can't live without a fancy screen

The Modern 14 H display is perfectly fine for office use and folds back almost 180º, but it doesn't wow compared to much more expensive OLED or higher-resolution panels.

You need 32GB of RAM

The MSI Modern 14 H doesn't come in a configuration with 32GB of RAM, though the memory is user-upgradeable.

MSI Modern 14 H: Also consider

If our MSI Modern 14 H review has you considering other options, here are some other laptops to consider...

How I tested the MSI Modern 14 H

- I tested the MSI Modern 14 H for two weeks

- I used it both on a desk with a dock, and when on the go

- I tested it with benchmarking tools, as well as battery and power logging

I ran the MSI Modern 14 H through the usual comprehensive array of TechRadar benchmarks, as well as using it for actual day-to-day work.

I used the TechRadar movie test for assessing battery life during video playback, as well as running productivity-focused battery benchmarks. I also logged power use in a variety of scenarios, including when charging from USB-C, or running from a power bank.

First reviewed May 2024