Saatva RX mattress: Two-minute review

My first thought when I saw the Saatva RX mattress was, "Wow, this sounds like a mattress made just for me!" Well, people like me, anyway. This luxury innerspring hybrid is designed for sleepers who have chronic back or joint issues. I have mild scoliosis and for the last several years I've struggled with recurring lower back pain. In other words: I fit well within the RX's target demographic.

Saatva produces what's regarded as the best mattress in the country, the Saatva Classic. The Saatva RX is very similar in construction to the Classic, but uses more materials and therefore comes at a much higher cost. A queen retails for $3,295, which is a lot to spend for a mattress, even one as luxe and comfy as this one claims to be. Is it worth the cost? I slept on a Saatva RX mattress for one month to find out.

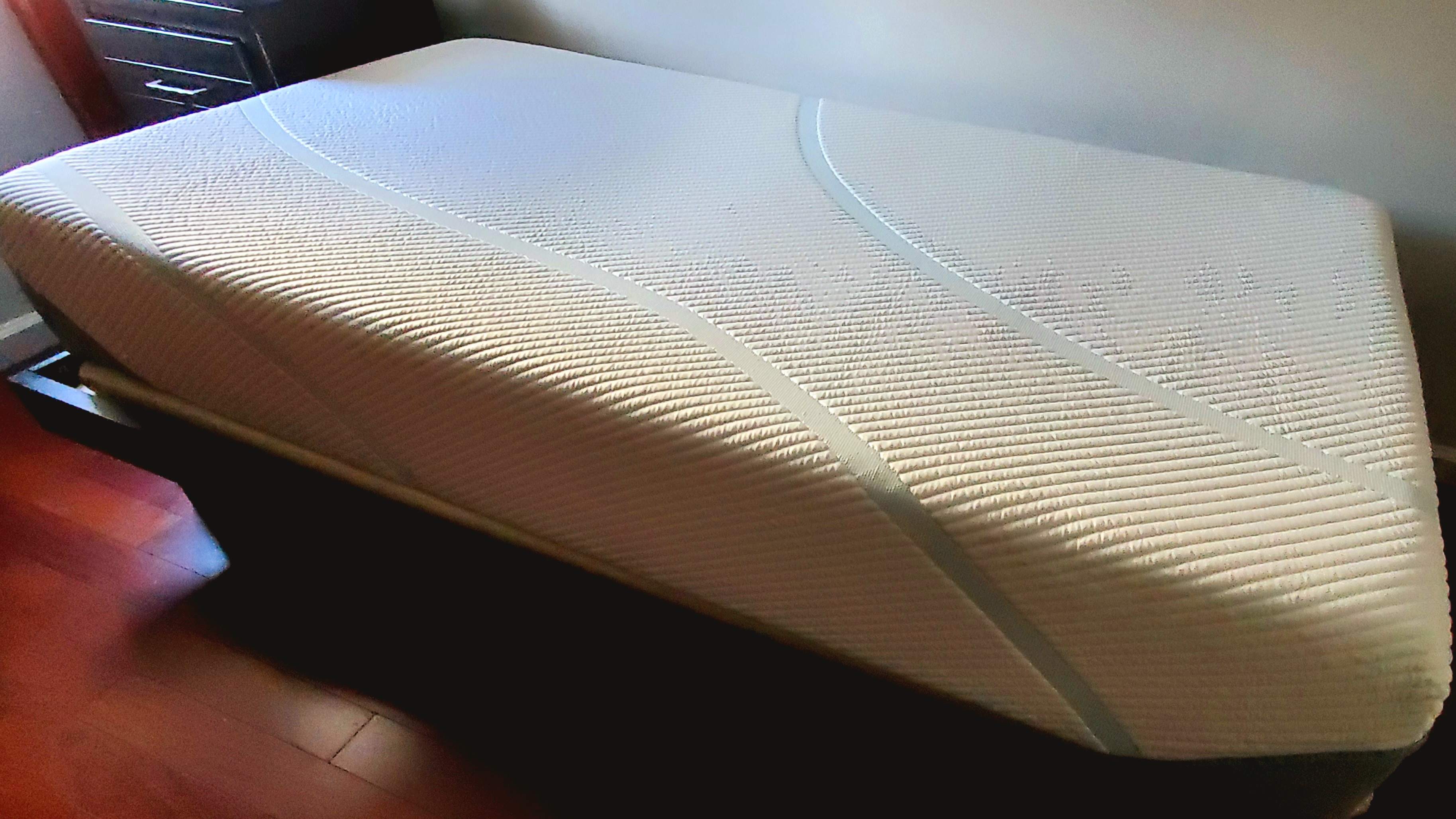

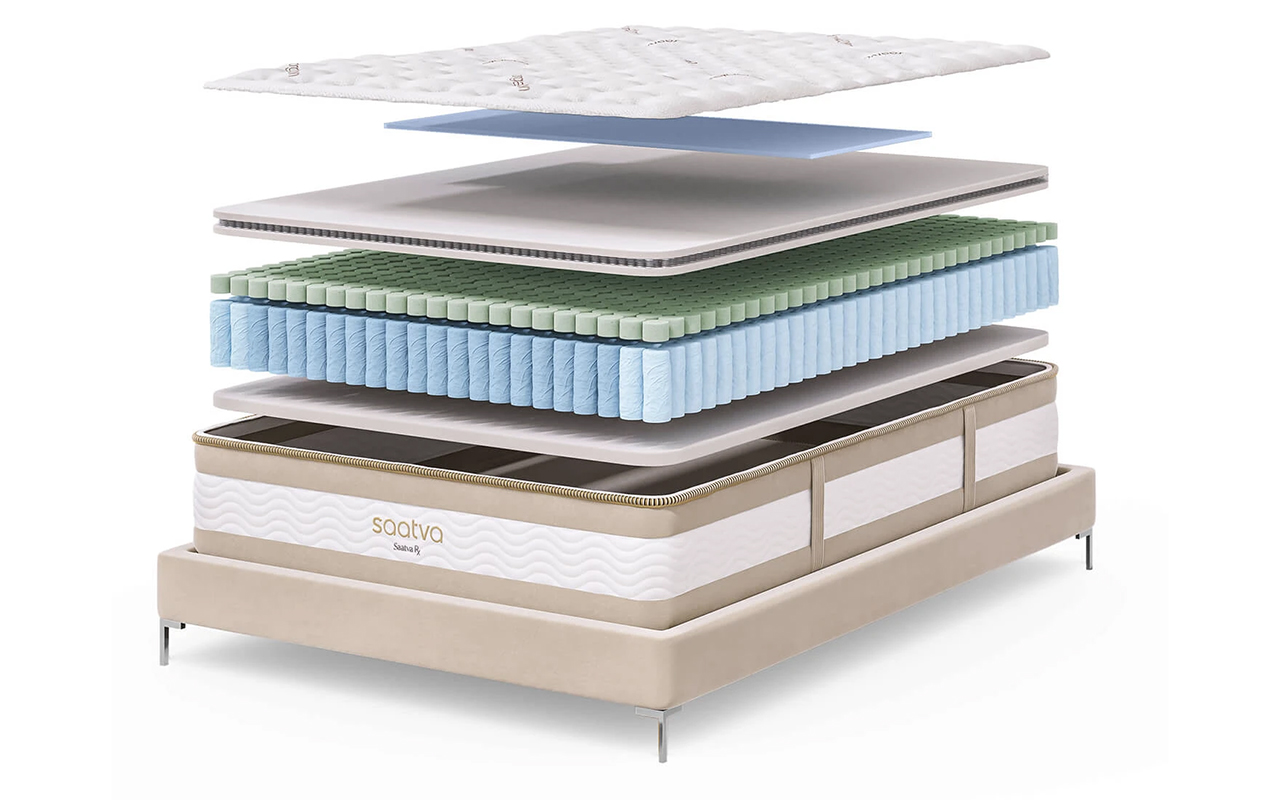

The Saatva RX is 15 inches tall and packed with 8-inch coils, 2-inch foam modules, 1-inch micro-coils, two three-quarter-inch layers of high-density foam, and a thin strip of gel-infused memory foam across the middle. Like all of its mattresses, Saatva handcrafts the RX to order and delivers it flat via complimentary white glove delivery.

Within the first week of sleeping on the Saatva RX, I noticed that I was no longer waking up with stiffness in my lower back – a carryover from a less accommodating mattress. Whether I slept on my side or front, I was well supported. That said, the majority of my fellow testers and I were most comfortable resting on our backs. The Saatva RX nicely redistributed our weight in this position.

Saatva calls the RX 'supportive plush'; I call it 'medium-firm.' Either way, it may not be comfortable enough for lightweight side sleepers with back or joint pain. One of my smallest testers, who also deals with chronic pain, felt pressure buildup in her hips when on her side (yet she was fine on her back).

The firmer caliper coils that surround the Saatva RX yield exceptional edge support, and the mattress will sleep cool enough for most people thanks to its organic cotton cover, cooling foam, and a dual layer of springs. There's plenty of bounce, but its motion isolation won't be enough to dampen moderate to strong movement.

Is this Saatva's best mattress for back pain? I think so – but you can't control the feel of the RX. The Saatva Loom & Leaf mattress comes in two levels of firmness while the Saatva Classic mattress includes three choices, along with two height profiles. If you want a more customized approach, go with either of those (cheaper) options.

That $3,295 MSRP for a queen may make your eyes water, but Saatva is a frequent participant in year-round mattress sales, so you'll almost always be able to save money. You'll get a 365-night trial and a lifetime warranty, along with free white glove delivery and mattress removal. If money is no object when it comes to soothing your nightly aches and pains, go for the Saatva RX. You'll get a lot in return for your investment.

Saatva RX mattress review: Design & materials

- A 15-inch hybrid with high density foam and two layers of coils

- Specialized lower back crown for pain relief

- Fiberglass-free and handcrafted in the USA

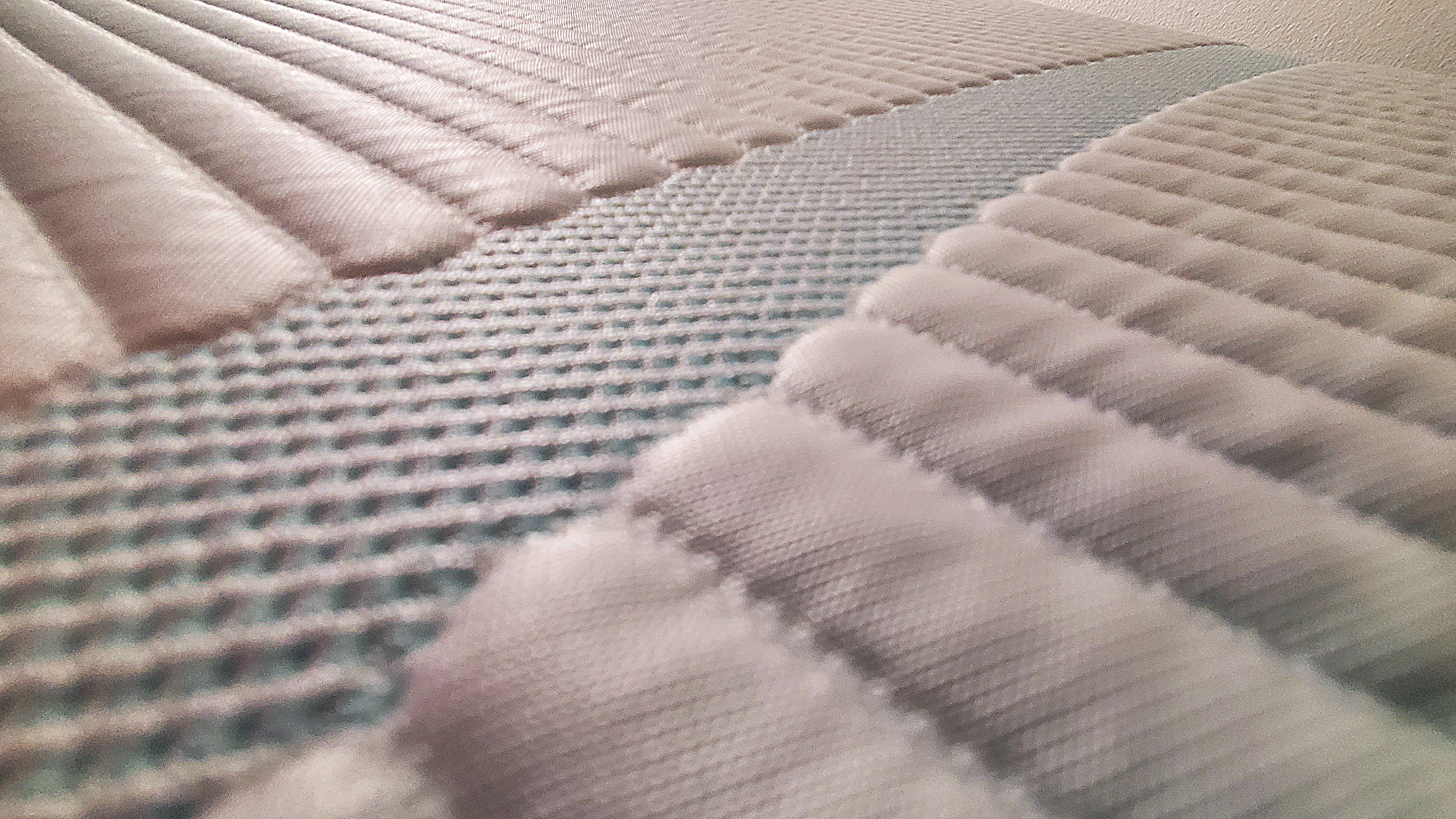

The 15-inch Saatva RX is designed to provide relief from the discomfort of chronic conditions and serious injuries. At its core is a series of 8-inch, triple-tempered recycled steel coils set on a non-woven base layer. Firmer caliper spring coils line the perimeter for stable edges, making it easier to push off when you're getting in or out of bed. Each coil is topped with a 2-inch module of open-cell foam infused with graphite and phase-change material for contouring and cooling.

Following that are two layers of three-quarter-inch high-density foam, separated by a layer of 1-inch micro-coils. This combo offers pressure relief, enhanced support, and adaptability to all of your movements. A 1-inch strip of gel-infused memory foam runs across the center for lower back relief, and is complemented by specialized quilting in the RX's organic cotton cover. The cover is treated with a botanical antimicrobial agent for hygienic sleep, though it isn't removable.

This structurally sound mattress prioritizes safe, sleep-friendly materials. It's handcrafted in the USA using CertiPUR-US certified foams free from harmful chemicals and high levels of VOC (volatile organic compound) emissions. The Saatva RX is also a fiberglass-free mattress, instead using plant-based thistle pulp as a flame retardant.

- Design score: 5 out of 5

Saatva RX mattress review: Price & value for money

- Saatva's second-most expensive mattress

- Regularly discounted, up to $400 off

- Comes with a 1-year trial, forever warranty, white-glove delivery

The Saatva RX is a premium-priced mattress in the wider market; a queen retails for $3,295 while a twin goes for $1,995. The RX is Saatva's second-most expensive model, behind the adjustable Solaire.

Here are the official MSRPs for the Saatva RX mattress:

- Twin MSRP: $1,995

- Twin XL MSRP: $2,195

- Full MSRP: $2,695

- Queen MSRP: $3,295

- King MSRP: $3,795

- California king MSRP: $3,795

- Split king MSRP: $4,390

However, it's very unlikely you'll ever need to pay full price – there's almost always a Saatva mattress sale on. The best times to shop are during major shopping events, during which we'll often have a semi-exclusive link for $400 off. Definitely keep an eye out during the Presidents' Day mattress sales in February, the Memorial Day mattress sales in May, the 4th of July mattress sales, the Labor Day mattress sales in September, and of course the Black Friday mattress deals (these traditionally deliver the cheapest prices of the year).

Are there good mattresses for back pain you can find for less than the Saatva RX? Absolutely. Just take a look at the Saatva Classic mattress. It's still a premium mattress, but it sits much closer to the upper-mid price bracket than the RX does, and offers a broader range of customizable features, along with targeted back support. If you'd rather have more control over the feel of your bed, this is the way for you to go.

You not only get 365 nights to try the Saatva RX at home, but you also get a warranty for life – those are industry-best amenities, especially compared to luxury rivals Tempur-Pedic and Stearns & Foster. Free white glove delivery is standard and optional mattress removal is included.

Not to mention – the Saatva RX is a gorgeous luxury mattress. But there's more to it than good looks. It's specially designed for sleepers who want relief from their back pain. If money is no object and you want a hotel-quality mattress that'll ease your aches in the process, the RX is worth the investment.

- Value for money score: 4 out of 5

Saatva RX mattress review: Comfort & support

- A 'supportive plush' (or medium-firm) mattress

- Most of the pressure relief is situated in the middle

- May be too firm for smaller side sleepers with pain

Saatva classifies the RX as 'supportive plush' – which you could perhaps argue is another way of saying 'medium-firm.' However you phrase it, my fellow testers and I rate it a 7.5 out of 10 on the firmness scale, although several of us found it a hair firmer (closer to an 8).

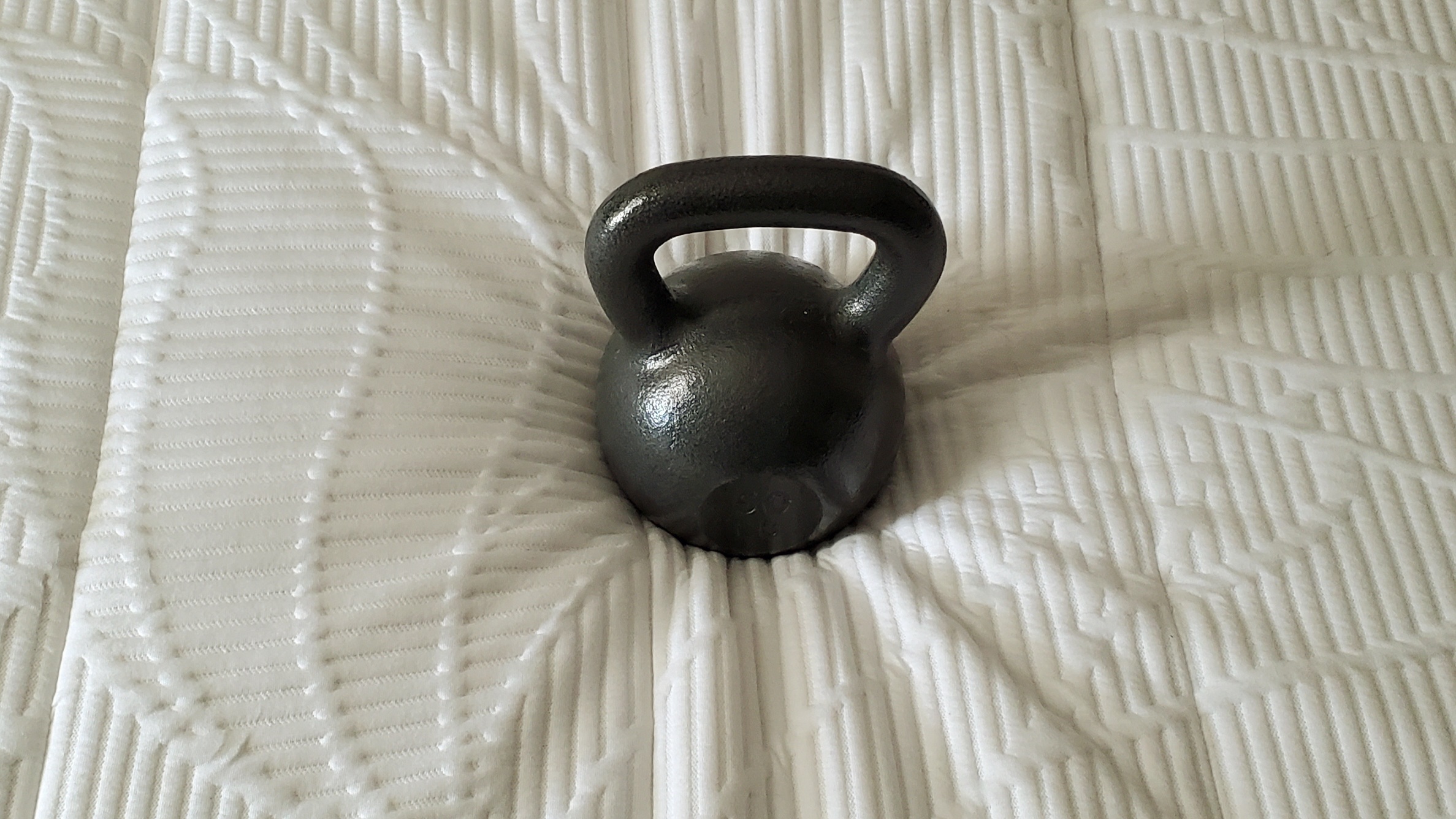

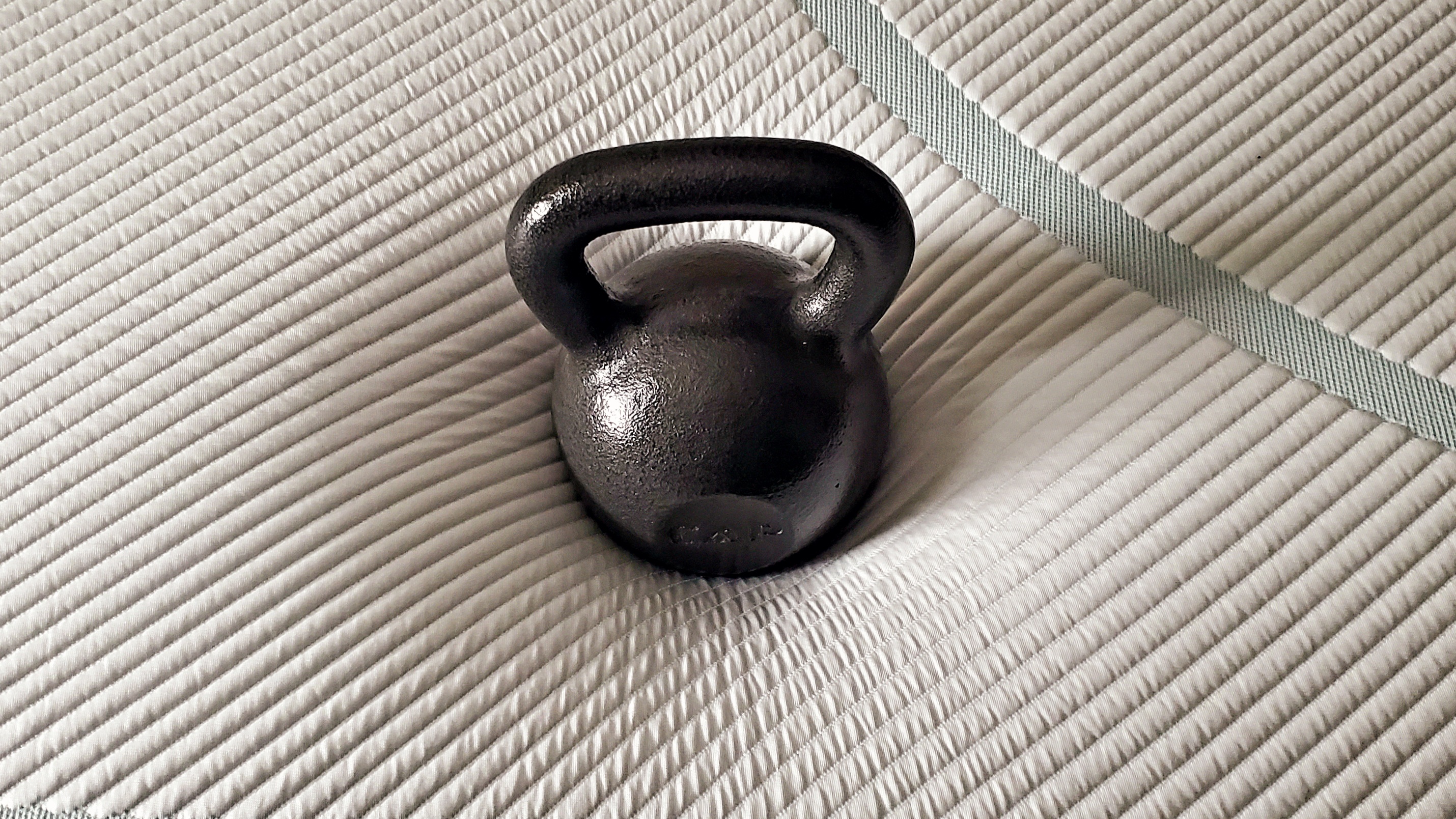

To objectively measure the Saatva RX's pressure relief, I dropped a 50lb weight in the center of the mattress (where the lumbar crown is) and it sank 3.5 inches. I also wanted to observe any differences in pressure relief outside of the targeted lumbar zone. Placing the weight at the lower third of the bed showed a more shallow drop (2.5"). That's still quite plush but a subtler hug than what you'll find in the middle.

According to Saatva, side, back, and combination sleepers will find the RX most comfortable – and my experience corroborates this. I'm a side/front sleeper, and I was comfortable no matter how I lay. I had sufficient support with just enough pressure relief in my knees, lower back, and shoulders. The responsive surface made it easy to switch positions, and the coils were nice and quiet.

Although I'm not a habitual back sleeper, I found the Saatva RX most comfortable in this position. My fellow testers agree. Our weight was well-distributed and we felt the tension in our joints just melt away. One of my testers likened it to lying on a pool float.

Several of us deal with the conditions that the RX targets. I have mild scoliosis and recurring lower back pain and was no longer waking up with stiffness. Another tester who's roughly the same size as me has arthritis and marveled at the RX's pressure relief. However, one of the smallest testers, who has RSD and several herniated discs, felt better resting on her back than her preferred side, as the latter resulted in mild hip pain.

Firmness and comfort are subjective. That said, the Saatva RX is a lofty mattress that skews a little firmer. If you're a smaller side sleeper seeking relief from back pain, consider taking a look at my Saatva Loom & Leaf mattress review for a memory foam bed with two levels of sink-in comfort.

Saatva RX mattress review: Performance

- Motion isolation is lacking – this is one bouncy bed

- Will keep most sleepers cool at night

- Sturdy edges, particularly along the middle

I slept on a twin Saatva RX for one month. Naturally, I can only speak from my own experience as a 5-foot-4, 145lb side/front sleeper with mild scoliosis and lower back pain, so I asked six other adults to nap on it for at least 15 minutes. Though this is still quite a small sample size, my group consists of diverse body types and sleep preferences, and several participants struggle with regular aches and pains.

In addition to noting my personal experience of the RX's overall comfort, I ran several objective tests to measure its motion isolation and edge support. Here's what I found out...

Temperature regulation

A lot of the Saatva RX's materials focus on maintaining a reasonable sleeping temperature. Two layers of coils aid airflow, while the foam modules are infused with graphite and phase-change material to help wick away heat. The cover is made from organic cotton, a breathable fiber.

I'm prone to occasional overheating, and at the time I tested the Saatva RX (October 2023), there were still a few warm evenings interspersed with more season-appropriate temperatures. Either way, I didn't break a sweat or feel the need to kick off my covers and comforter.

For sleepers with back pain, temperature regulation is crucial as you risk torquing your back the more you toss and turn in a futile attempt to cool off. The Saatva RX isn't quite on the level of the best cooling mattresses but it comes really close. It'll be comfortable enough for most sleepers who don't have excessive night sweats.

- Temperature regulation score: 4.5 out of 5

Motion isolation

One of the first things I noticed about the Saatva RX was its bounciness. This was fine for me, a solo sleeper who switches positions at night, but I wondered how it could potentially affect couples or families who share a bed.

I have a twin, so the best way for me to test the Saatva RX's level of motion isolation was to place an empty wine glass at the center of the bed and drop a 10lb weight from six inches above the surface.

I dropped the weight from four, 12, and 25 inches away from the base of the glass. From four inches away, the empty glass toppled over – twice. (I ran a re-test to be sure.) The glass stayed upright when I dropped the weight 12 and 25 inches away but still noticeably wobbled. What really struck me was how much the weight bounced before it settled after every drop.

Would I recommend the Saatva RX for couples, based on these results? Not if either of you is a light sleeper who wakes up the moment you feel even the slightest movement. Memory foam mattresses are often your best bet if you want something to effectively isolate motion. Read my Tempur-Pedic Tempur-Adapt mattress review for one such example.

There is one potential workaround – the Saatva RX comes in split king and split California king. This will separate the overall sleep surface so each person essentially has their own bed without being apart.

- Motion isolation score: 3 out of 5

Edge support

The Saatva RX has firmer caliper coils along the perimeter. This reinforces the edges so you can sit on them comfortably without fear of falling over. It'll prevent the mattress from sagging prematurely, as well.

In my objective edge support test, I put a 50lb weight in the middle perimeter and it sank roughly three inches. (However, this was a bit tricky to measure as the outer material bunched up considerably.) The most important thing was that it didn't exceed the amount of pressure relief I observed at the exact center of the mattress.

But what are the edges of the Saatva RX like to sit on? Overall, my fellow testers found the middle perimeter comfortable, with just enough sinkage and plenty of support. I often sit at the edge of my bed before getting up, and with the RX, I didn't feel like I'd topple over. Plus, whenever I rolled toward the edges as I slept, I didn't suddenly wake up in fear.

If you have mobility issues that require you to sit at the edge of the bed when getting in or out, you'll have loads of support with the Saatva RX. The one potential issue here could be its height – at 15", the bed could be difficult for shorter sleepers to climb onto. Unlike the Saatva Classic, the RX doesn't come in multiple height levels.

- Edge support score: 4 out of 5

Saatva RX mattress review: Customer service

- Free in-home delivery to a room of your choice

- Optional mattress removal is also included

The Saatva RX is one heavy mattress. Saatva doesn't disclose any exact weights but trust me when I say it's one solid bed. Fortunately, I didn't have to deal with setting it up as it arrived flat courtesy of free white glove delivery. All I had to do was schedule a delivery time with a local logistics company and clear a path for the delivery crew.

It took less than five minutes for a two-person crew to drop a twin Saatva mattress onto my platform bed and haul away my previous mattress. (Yes, mattress removal is included if you need it, but you'll have to let Saatva know ahead of time that you'd like to request this service.) I didn't have to wait for the mattress to inflate, nor did I detect any obvious off-gassing. Per the tag on my test unit, it was manufactured in September 2023 – all of Saatva's mattresses are handmade to order in the USA.

Once the mattress is in your home, you get 365 nights to test it out. If you don't get on with it, you can return it for a full refund, minus a $99 returns fee. Otherwise, your purchase will be backed by a lifetime warranty, though you'll need to pay for a percentage of any replacement costs starting in year three. (Repairs on your original mattress will remain free outside of a $149 processing fee starting in year three.)

Saatva arguably offers the best assortment of extras in the industry. It's not very often we see free in-home delivery bundled with a 1-year sleep trial and a lifetime warranty. Luxury beds tend to be stingy with their trial and warranty periods, while bed-in-a-box brands seldom offer white glove service (and if they do, it costs extra).

- Customer service score: 4.5 out of 5

Saatva RX mattress review: Specs

Saatva RX mattress review: Other reviews

As of November 2023, the Saatva RX has fewer than 30 reviews and a 4.8 out of 5-star rating at Saatva.com. The lone 2-star review is related to a delivery issue, but most sleepers with aches and pains absolutely enjoy sleeping on this mattress.

Considering the RX just came out in summer 2023, it'll be a while before the reviews begin to accumulate.

Should you buy the Saatva RX mattress?

Buy it if...

✅ Your back always hurts: Saatva sought to create a mattress that's the perfect blend of support and comfort for sleepers with chronic or serious back pain. The RX eliminated the stiffness in my lower back that developed after I spent weeks on a less comfortable bed.

✅ You're willing to splurge for a luxe hotel-style bed: The Saatva RX wouldn't seem out of place in a ritzy 5-star suite. This is likely the closest you'll get to achieving that luxury feel at home, outside of ordering an actual hotel mattress that comes with less attractive amenities.

✅ You're a combi sleeper: The responsive surface of the Saatva RX made it comfortable for me to shift from my side to my stomach during the night. Though it has two layers of springs, I didn't hear a single squeak.

Don't buy it if...

❌ You're a shorter, lighter side sleeper: The Saatva RX may be too firm for lightweight side sleepers with back pain to get truly comfortable. Plus, at 15 inches tall, it could make getting in or out of bed more of a challenge for shorter people with mobility issues.

❌ Every movement your partner makes wakes you up: If you're on the brink of sleep divorce, the Saatva RX won't do much to mend those fences. Look for a mattress with better motion isolation, namely one made exclusively of foam.

❌ You want some control over the feel of the mattress: The Saatva RX comes in one firmness level, which I already noted may be off-putting for smaller side sleepers. The Saatva Classic has a similar build but comes in three firmness levels and two height profiles – all for hundreds of dollars less than the RX.

Saatva RX mattress review: Also consider

Tempur-Pedic Tempur-Adapt Mattress

Tempur Material is known for its outstanding pressure relief, and heralded by sleepers with back pain. The Tempur-Adapt is among the most affordable Tempur beds out there – it's comfortably supportive and boasts excellent motion isolation. However, it does trap heat easily. Though it costs less than the RX outright, Tempur-Pedic's extras are comparatively underwhelming (90-night trial, 10-year warranty).

Read more: Tempur-Pedic Tempur-Adapt mattress review

Helix Midnight Mattress

For smaller side sleepers, the medium feel of the Helix Midnight may be more welcoming. This hybrid is roughly a third of the cost of the Saatva RX and boasts exceptional pressure relief. It's also a more manageable height (12 inches). On the flip side, edge support is weak, and unless you're a dedicated side sleeper you might have a harder time getting comfortable.

Read more: Helix Midnight mattress review

Saatva Classic Mattress

The Saatva Classic and Saatva RX have similar builds, including dual layers of coils and targeted lower back support. If you can't quite justify the extravagant cost of Saatva's top-of-the-line innerspring, the Classic is an excellent alternative at hundreds of dollars less. You'll still get all of Saatva's industry-leading perks and also have the ability to make the mattress as tall or firm as you like it. (You can't customize the RX at all.)

Read more: Saatva Classic mattress review

How I tested the Saatva RX mattress

As someone with mild scoliosis and recurring lower back pain, I was especially intrigued to try the Saatva RX, a mattress made for individuals with issues similar to mine. Throughout October 2023, I slept on a twin Saatva RX every night and also performed a series of tests to objectively measure its pressure relief, edge support, and level of motion transfer.

I'm the type of sleeper who can't sleep without being covered up, regardless of the temperature. I slept with cotton sheets and a mid-weight polyester blend comforter, and kept my bedroom temperature around 71 degrees F.

To add to my experience, I also asked six adult volunteers to nap on the Saatva RX for at least 15 minutes in their usual positions, then sit on the edges as they got in and out of bed. Our testers ranged in size from 5ft 4 and 125lbs to 6ft and 185lbs, and several of them deal with chronic pain in their everyday lives.

- First reviewed: October 2023