MSI Vector A18 HX A9W: Two-Minute Review

It's simple. If you're after a powerful gaming laptop that reaches high, smooth frame rates with minimal performance issues, the MSI Vector A18 HX A9W is your answer. With Nvidia's RTX 5080 laptop GPU and AMD's Ryzen 9 9955HX processor, it breezes through games at its native 2560x1600 resolution without Team Green's DLSS upscaling technology; switch DLSS on, and high frame rates are a cakewalk.

It shines in games like Cyberpunk 2077, Indiana Jones and the Great Circle, and the Resident Evil 4 remake. It can struggle with ray tracing at maximum graphics settings and 4K resolution without DLSS enabled, but that's exactly what Nvidia's tools are there for.

Call of Duty: Black Ops 6 had very little trouble exceeding 100fps, and edged close to the 100fps mark even in ECO-Silent mode (which is incredible, as I'll dive into later), one of MSI Center's user scenario options that reduces the workload and keeps temperatures down.

With a 240Hz refresh rate, playing less demanding titles like Hades that can reach such frame rates was an immersive and astonishing experience. I know I've previously said that 144Hz or 165Hz refresh rates are more than enough for gaming, but any game that can hold 240fps without significant dips looks very impressive.

It's not all perfect, though. This laptop is not ideal for long trips or playing on the go, as it's incredibly heavy (especially with its 400W power adapter), and you'd need a large backpack to fit an 18-inch laptop. I found it difficult to use for long hours on a sturdy mini bed desk, as I worried the desk's legs would snap, and even at my main gaming desk the power adapter took up space – so portability isn't the best here.

This isn't an inexpensive system either – however, if you can afford it and you're looking for one of the best gaming laptops for great gaming performance, look no further.

MSI Vector A18 HX A9W: Price & Availability

- How much does it cost? Starting at $2,999.99 / £3,199 / AU$6,599 (for RTX 5080 configuration)

- When is it available? Available now

- Where can you get it? In the US, UK, and Australia

There's no denying that the MSI Vector A18 HX A9W is a very expensive piece of hardware, instantly locking most gamers out of a potential purchase. However, those who can afford it are getting bang for their buck, with a system capable of matching a variety of desktop builds.

With both a powerful AMD processor and Nvidia GPU, it's more than enough to satisfy most gamers' performance needs; the Ryzen 9 9955HX excels at both single-core and multi-core workloads, as you'll see later.

With this configuration (A9WIG-006UK), there is no OLED or mini-LED display option; a brighter, more immersive screen would have slightly softened the blow of the high price, but its absence shouldn't hinder the gaming experience here.

The issue is that it's as expensive as, or more expensive than, some pre-built gaming PCs. That's somewhat reasonable, since its CPU is as powerful as those in high-end desktops – but the desktop version of the RTX 5080 is the stronger GPU.

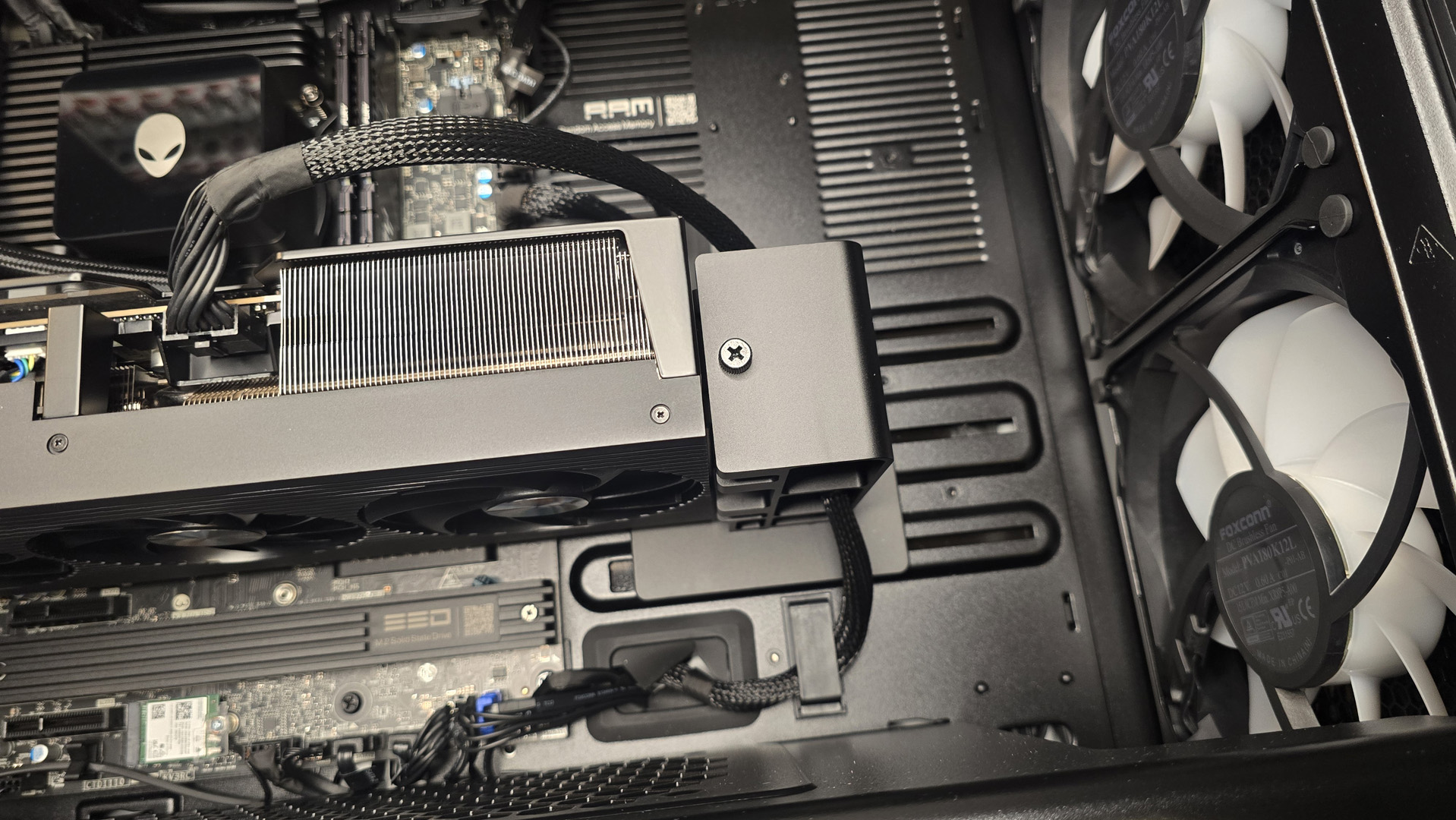

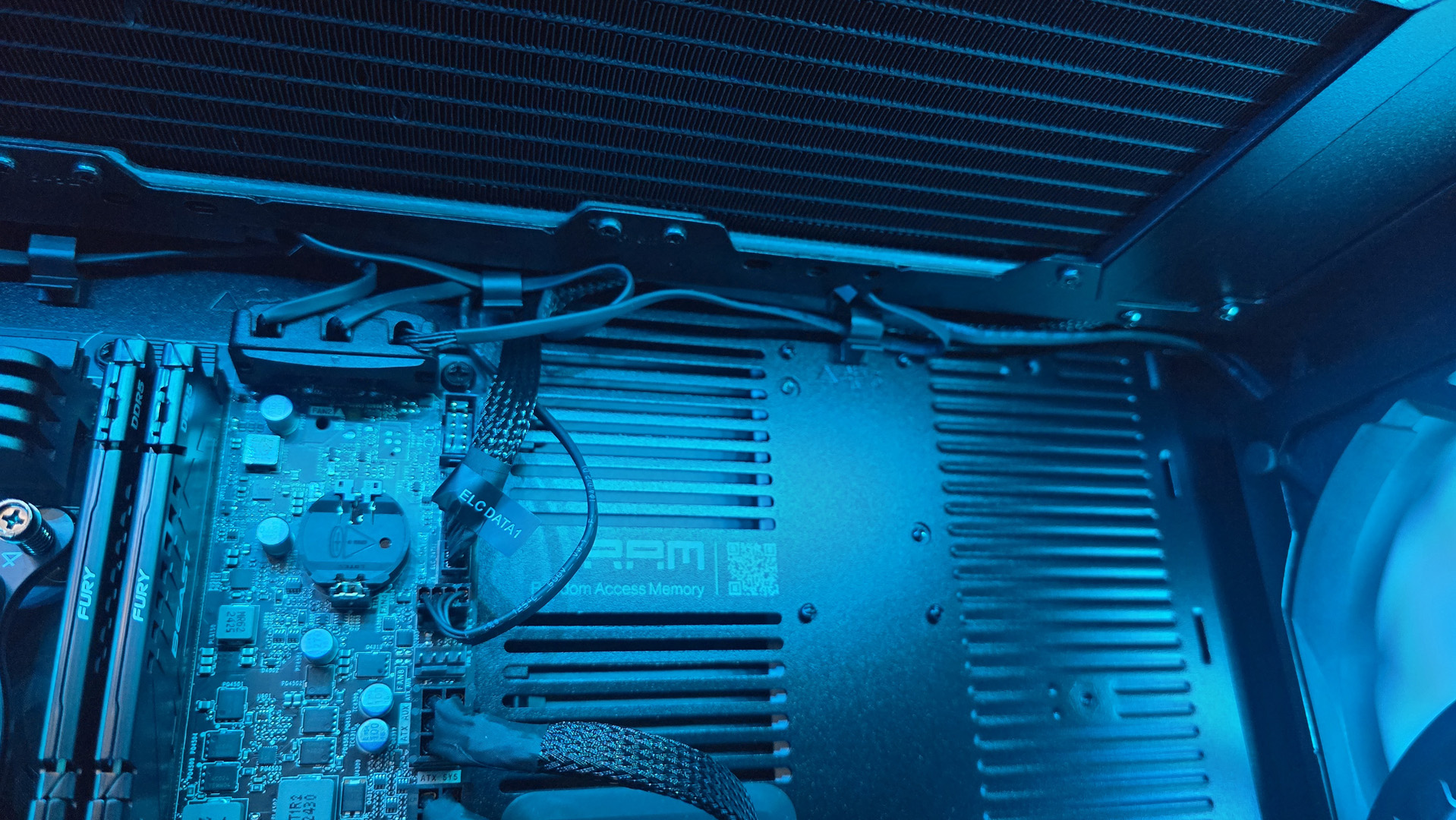

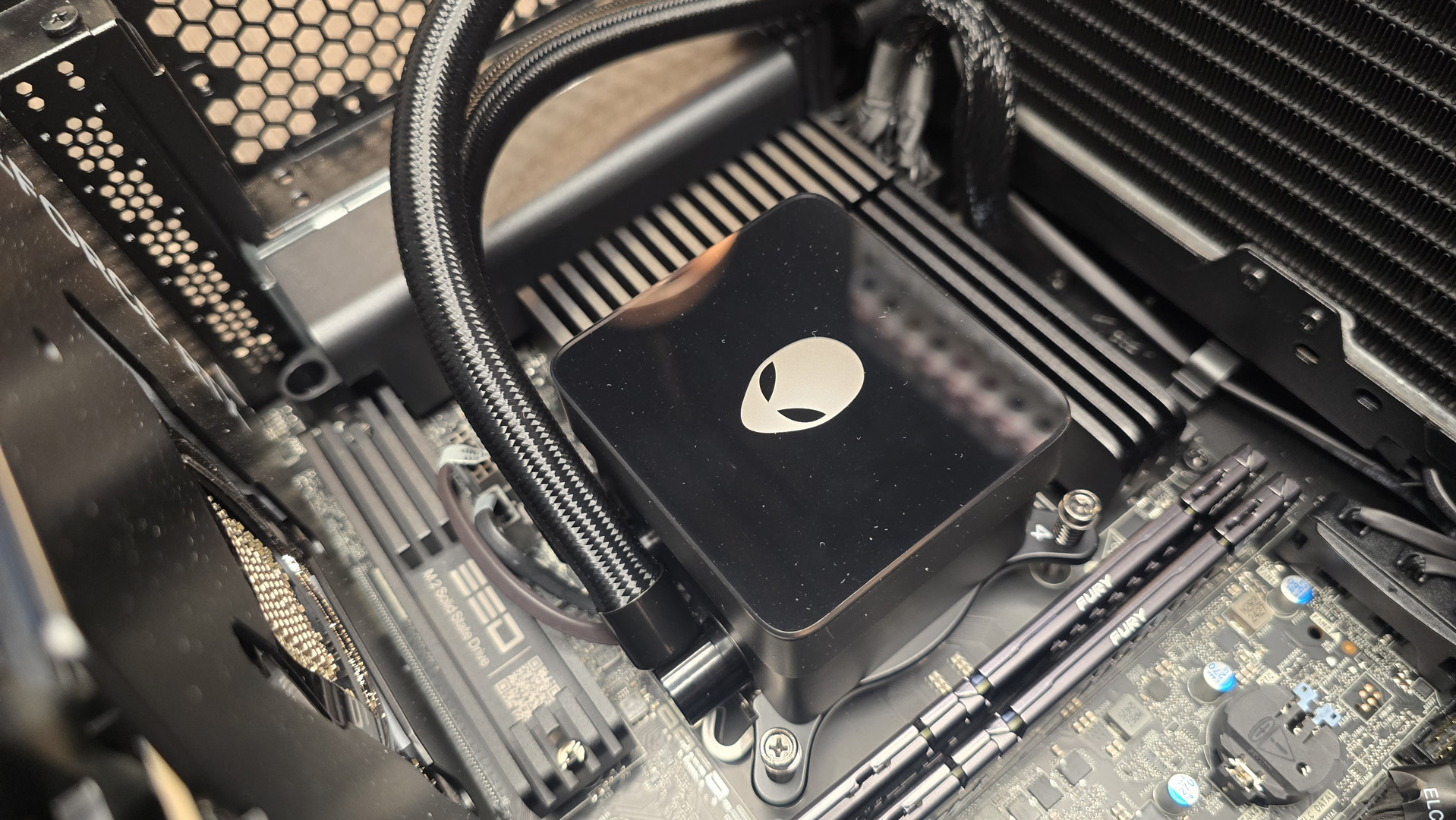

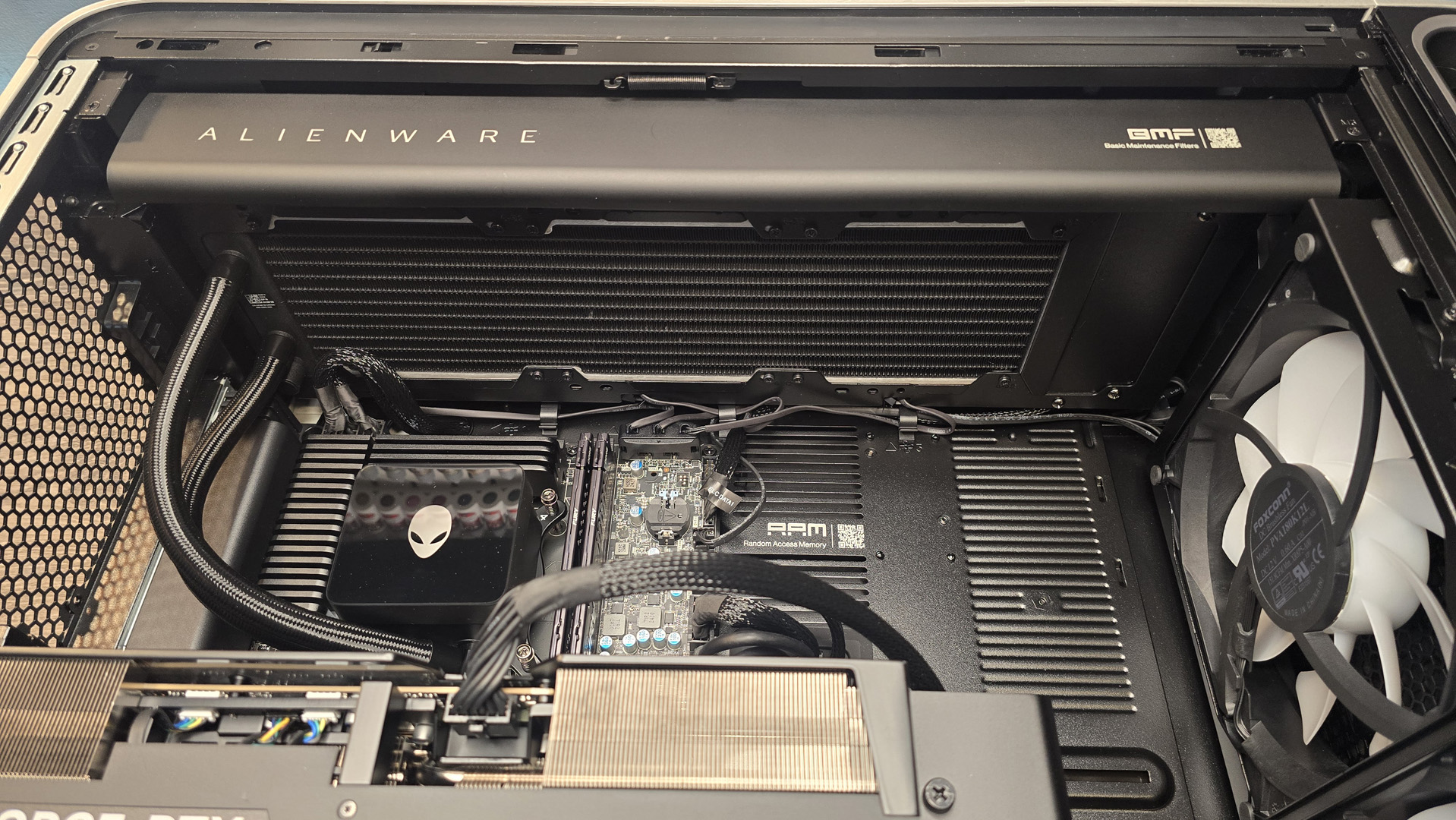

Regardless, this is a gaming laptop that packs plenty of processing power in a beefy and sturdy chassis, cooled very well with its Cooler Boost 5 tech using a 'Dedicated Cooling Pipe', so it's not very surprising to see it cost so much.

Still, the point remains: unless you're adamant about travelling with the Vector A18 HX A9W and using it on the go (which I wouldn't recommend because portability isn't that great here), or just want a PC you can move around your home easily, it might be a better choice to buy a desktop rig.

- Value: 2.5 / 5

MSI Vector A18 HX A9W: Specs

MSI Vector A18 HX A9WIG-006UK (Review model UK) | MSI Vector A18 HX A9WIG-223US (Base model US) | MSI Vector A18 HX A9WIG-076US (Highest config) | |

|---|---|---|---|

Price | £3,199 | $2,999.99 | $3,959 |

CPU | AMD Ryzen 9955 HX | AMD Ryzen 9955 HX | AMD Ryzen 9955 HX |

GPU | Nvidia RTX 5080 | Nvidia RTX 5080 | Nvidia RTX 5080 |

RAM | 32GB DDR5 | 32GB DDR5 | 64GB DDR5 |

Storage | 2TB NVMe Gen 4x4 | 1TB NVMe Gen 4x4 | 2TB NVMe Gen 4x4 |

Display | 18" QHD+(2560x1600), 240Hz Refresh Rate, IPS-Level, 100% DCI-P3(Typical) | 18" 16:10 QHD+(2560 x 1600), 240Hz, 100% DCI-P3 IPS-Level Panel | 18" 16:10 QHD+(2560 x 1600) 240Hz 100% DCI-P3 IPS-Level Panel |

Battery | 4-Cell, | 4-Cell, | 4-Cell, |

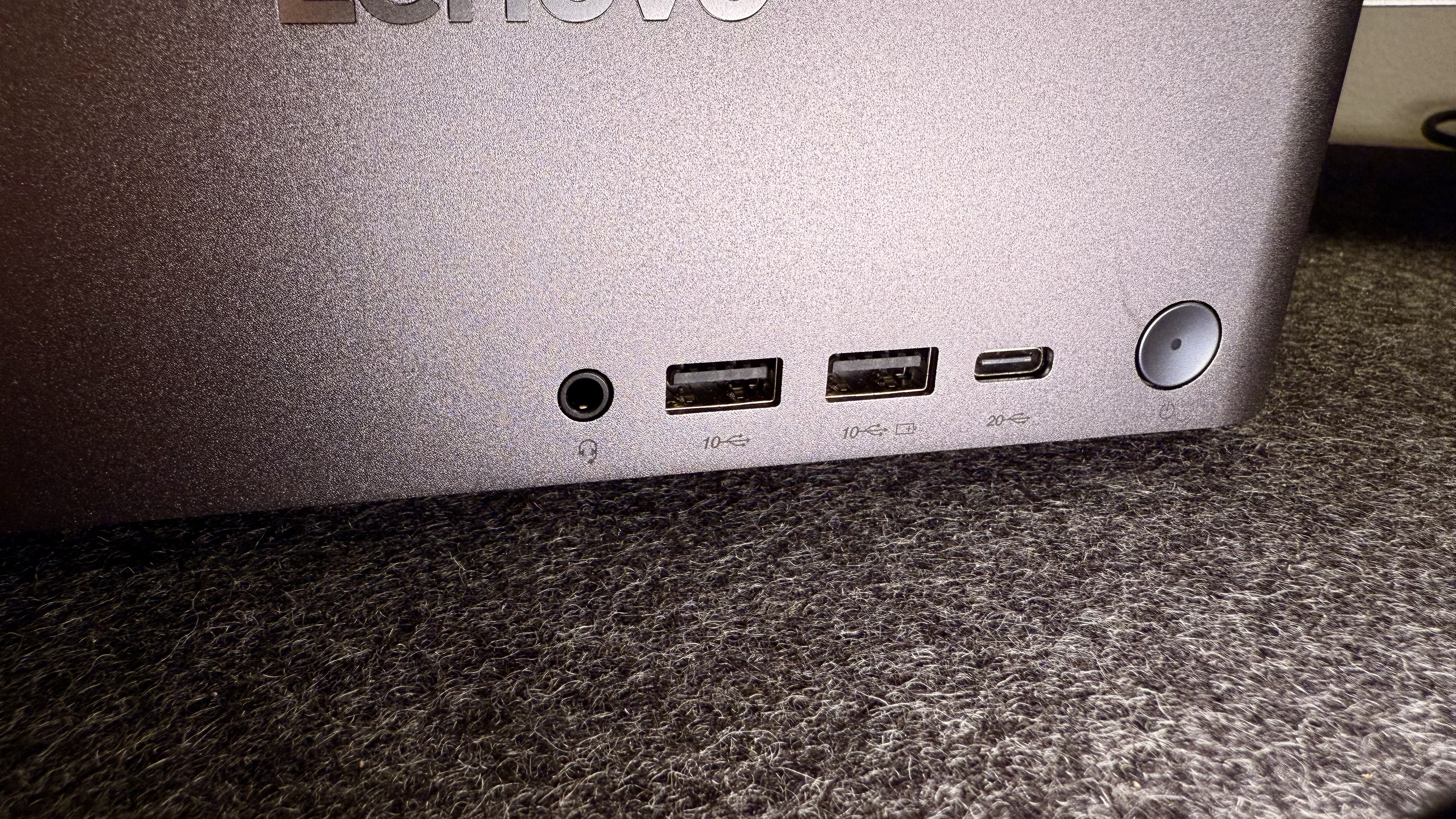

Ports | 2x Type-A USB3.2 Gen1, 1x Type-A USB3.2 Gen2, 2x USB 4/DP&PD 3.1 (Thunderbolt 4 Compatible), HDMI 2.1 | 2x Type-A USB3.2 Gen1, 1x Type-A USB3.2 Gen2, 2x USB 4/DP&PD 3.1 (Thunderbolt 4 Compatible), HDMI 2.1 | 2x Type-A USB3.2 Gen1, 1x Type-A USB3.2 Gen2, 2x USB 4/DP&PD 3.1 (Thunderbolt 4 Compatible), HDMI 2.1 |

Dimensions | 15.91" x 12.09" x 1.26" | 15.91" x 12.09" x 1.26" | 15.91" x 12.09" x 1.26" |

Weight | 3.6 kg / 7.9lbs | 3.6 kg / 7.9lbs | 3.6 kg / 7.9lbs |

MSI Vector A18 HX A9W: Design

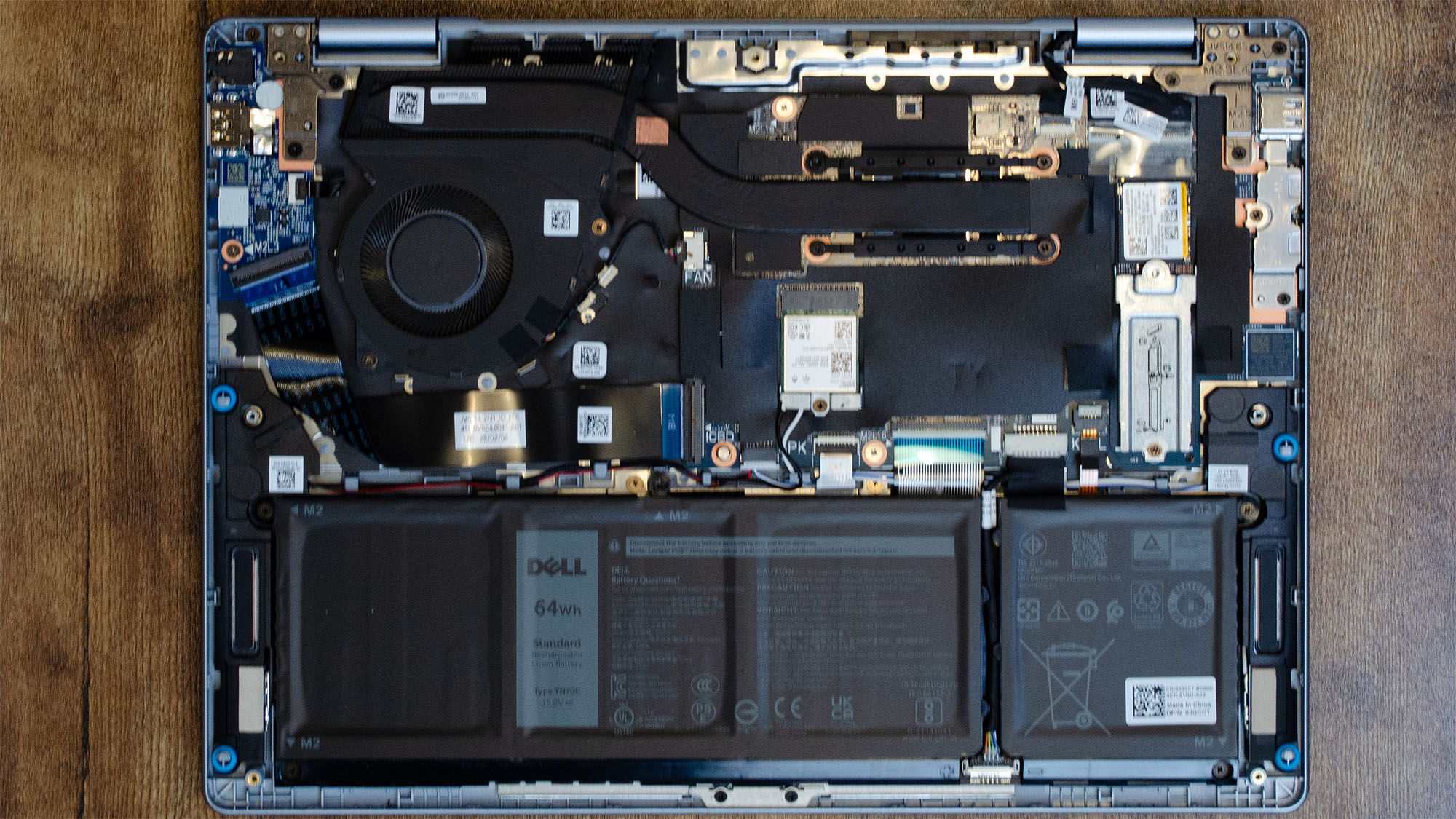

I absolutely love the chassis of the Vector A18 HX A9W as it has a sturdy build, and perhaps most importantly, a great cooling pipe design. Even when gaming on Extreme Performance, temperatures were never too high, often hitting a maximum of 77 degrees Celsius.

Its speakers aren't a particular standout, but they are serviceable and get the job done, especially with an equalizer and 3D surround sound available to improve audio immersion. That said, Bluetooth speakers or headphones with much better bass and clarity are always an easy alternative.

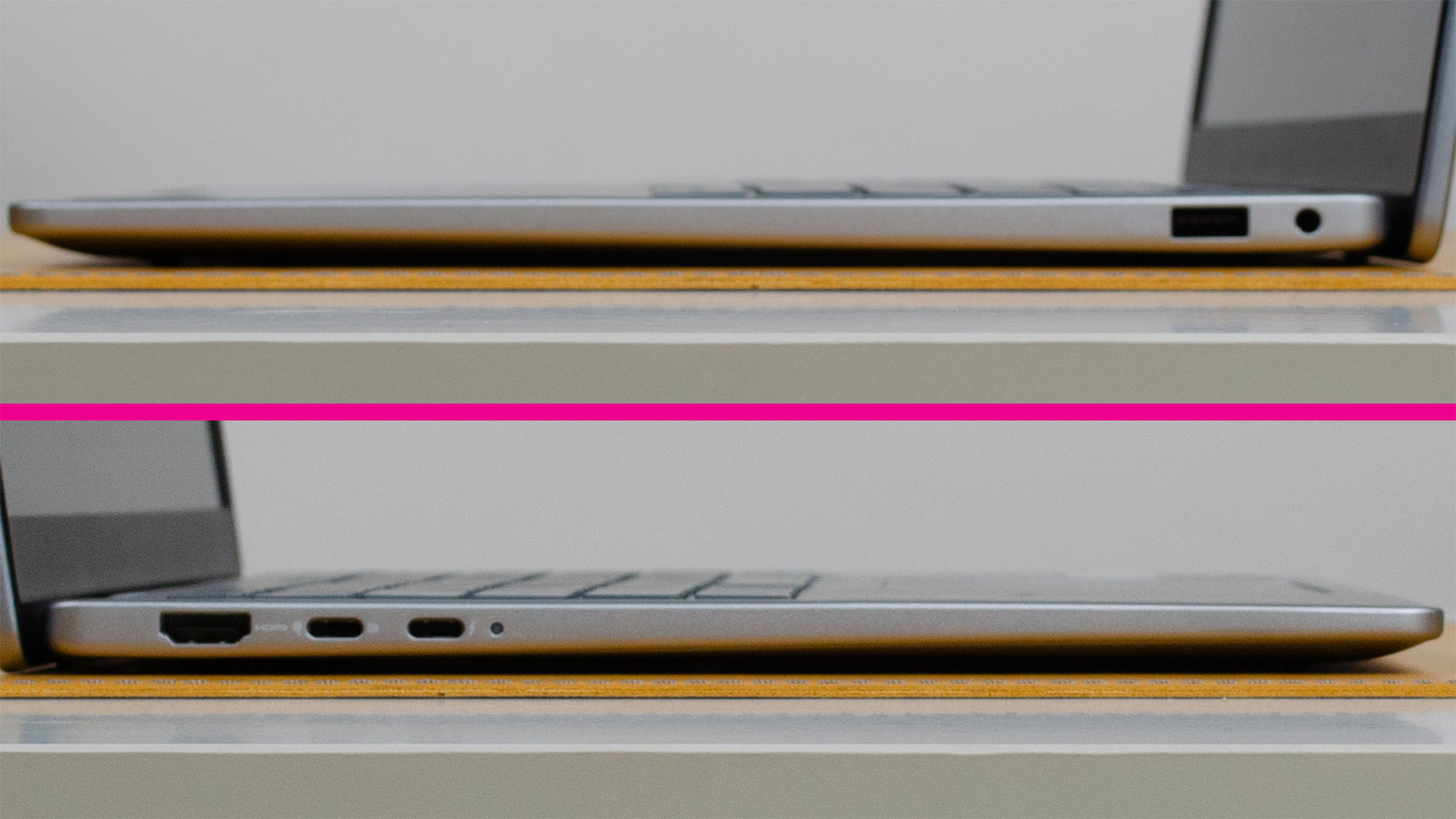

The two Thunderbolt 4-compatible USB-C ports are ideal for fast file transfers, and for those who would rather use an external SSD than expand the internal storage. There's also an HDMI 2.1 port on the rear, right next to the power adapter port – and one particular aspect I don't like is the slightly short power cable, which often forced me to place the chunky adapter on the desk.

With an 18-inch screen, you're getting the best you could possibly ask for when it comes to portable gaming – and if you've got a spacious desktop setup, it's a great experience.

However, the screen size and the weight are the two main issues I have with the design, as you're going to have trouble fitting this in most backpacks for travel, and it's very heavy to carry around.

At 7.9 lbs, it had me paranoid that it would make my mini desk meet its demise, and while it's understandable considering all the powerful components, you should be aware that this laptop isn't built for portability (especially while carrying the adapter around, too).

Regardless, this is a beefy gaming laptop power-wise, and these gripes weren't too significant to spoil my experience overall.

- Design: 4 / 5

MSI Vector A18 HX A9W: Display

My review model of the Vector A18 doesn't exactly have the best display available, as it's neither OLED nor mini-LED – both of which offer greater contrast and brightness. However, that doesn't stop it from being an immersive display that I found ideal for intense and competitive multiplayer gaming sessions.

That's thanks to the 240Hz refresh rate and 2560x1600 resolution, which match up well with the horsepower of the RTX 5080. There aren't many games that are going to run at 240fps (unless you significantly lower graphics settings or resolution), but the ones that do look absolutely incredible.
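To put the refresh rate in perspective, here's a minimal sketch of the per-frame time budget at 240Hz compared with the more common 144Hz and 165Hz panels. The function name is my own and purely illustrative; the only inputs are the refresh rates themselves.

```python
def frame_time_ms(rate_hz: float) -> float:
    """Return the time available to render one frame, in milliseconds."""
    return 1000.0 / rate_hz


if __name__ == "__main__":
    for rate in (144, 165, 240):
        # 144Hz -> 6.94 ms, 165Hz -> 6.06 ms, 240Hz -> 4.17 ms
        print(f"{rate}Hz -> {frame_time_ms(rate):.2f} ms per frame")
```

In other words, a game has to deliver each frame in roughly 4.2 milliseconds to fully use this panel, which is why only lighter titles get there without lowering settings.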

I must admit, coming from the consistent use of an OLED ultrawide, it took a little bit of an adjustment to become accustomed to an IPS LCD panel again – but that's to be expected when scaling down from one of the best display types (and it would be unfair to knock points off here because of that).

It's worth noting that HDR isn't present either, which often goes a long way toward providing better color accuracy and detail in both brighter and darker images.

Regardless, the Vector A18 HX A9W has 100% DCI-P3 coverage, so I never once felt dissatisfied or felt the urge to calibrate or adjust color profiles. It also helps that you're getting a full 18-inch screen, which I believe is the sweet spot for portable laptop gaming (even if it's probably why the laptop is so heavy).

The one downside I ran into is playing games that don't have 16:10 aspect ratio support. To put it simply, if you dislike black bars filling out portions of your screen while gaming, you're not going to like it here. So, it's definitely worth considering before committing to a purchase, but there are no other gripes from me.
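For a sense of how much screen those black bars take up, here's a rough sketch that fits 16:9 content onto the 2560x1600 panel. It assumes the game scales to the full panel width and centers the image, which is the usual letterboxing behavior but may vary by title; the function name is hypothetical.

```python
from fractions import Fraction


def fit_content(panel_w: int, panel_h: int, aspect_w: int, aspect_h: int):
    """Fit content of a given aspect ratio inside the panel.

    Returns (content_w, content_h, bar_x, bar_y), where bar_x and bar_y
    are the bar sizes in pixels on each side (left/right, top/bottom).
    """
    content = Fraction(aspect_w, aspect_h)
    panel = Fraction(panel_w, panel_h)
    if content >= panel:
        # Content is wider than the panel: fill the width, bars top and bottom.
        w, h = panel_w, int(panel_w / content)
    else:
        # Content is narrower than the panel: fill the height, bars left and right.
        w, h = int(panel_h * content), panel_h
    return w, h, (panel_w - w) // 2, (panel_h - h) // 2


if __name__ == "__main__":
    w, h, bar_x, bar_y = fit_content(2560, 1600, 16, 9)
    unused = 100 * (1 - (w * h) / (2560 * 1600))
    print(f"16:9 image: {w}x{h}, {bar_y}px bars top and bottom")
    print(f"About {unused:.0f}% of the panel is unused")
```

Under those assumptions, a 16:9 game renders at 2560x1440 with an 80-pixel bar above and below, leaving about a tenth of the panel dark.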

- Display: 4 / 5

MSI Vector A18 HX A9W: Performance

- RTX 5080 is a beast of a laptop GPU

- The combo with AMD's Ryzen 9 9955HX processor is fantastic

- The CPU's performance is incredible, matching desktop processors

The biggest highlight of the MSI Vector A18 HX A9W is its performance, almost across the board. It shouldn't come as a surprise for me to say that the RTX 5080 laptop GPU is an absolute powerhouse, and crushes the 2560x1600 resolution in most triple-A games – and DLSS 4 with Multi Frame Generation is a game changer when used where necessary (essentially anything as demanding as Cyberpunk 2077).

In CPU-bound games, AMD's Ryzen 9 9955HX processor shines bright with 16 cores and 32 threads, ensuring the Blackwell GPU can do its job without any major bottleneck. In synthetic tests, both single-core and multi-core scores soared above chips like Apple's M1 Max in Cinebench 2024, and in real-world gaming tests, the results were consistent, as I didn't notice anything amiss with frame rates or frame pacing.

Most importantly, MSI Center features three different user scenario modes: ECO-Silent, Balanced, and Extreme Performance, which can all be used in any of the three Discrete, MSHybrid, or Integrated Graphics modes.

For the best results, I stuck with Discrete Graphics mode, and I must say I was pleasantly surprised at how great ECO-Silent was, in particular. With a lower TDP (power limit), temperatures drop significantly and the fans are silent, hence the name ECO-Silent – but I came away shocked at the frame rates I was hitting using this mode.

At 1600p on ECO-Silent, Cyberpunk 2077 ran at a solid 77fps on maximum graphics settings with DLSS Quality and ray tracing off, sometimes reaching the low 80s, with a 1% low of 66fps.

Assassin's Creed Shadows – a game that is arguably nearly as demanding on PC hardware as Cyberpunk 2077, and frankly, needs Frame Generation for higher FPS – ran at an average of 40fps on maximum graphics settings at 1600p, using DLSS Quality on ECO-Silent.

With the same graphics settings applied to Indiana Jones and the Great Circle, it hit an average of 62fps; if that doesn't indicate how impressive ECO-Silent mode is, then I don't know what will. It's the best option for gamers who are bothered by fan noise and higher temperatures, while you still get very impressive performance results.

It gets even better with Balanced and Extreme Performance; the former has fans only a little louder than ECO-Silent, and is the way you should use the Vector A18 HX A9W for gaming (it's also MSI's recommended option), as it gives you a true reflection of what this machine is capable of, evident in the performance charts above.

Sticking with Indiana Jones and the Great Circle, Balanced mode was 41% faster than ECO-Silent, with an average of 94fps and 1% low at 77fps.

In the case of Extreme Performance, expect a whole lot of fan noise and higher temperatures, in favor of the best possible performance. In most cases of casual play, I hardly needed to use this mode, as the FPS boost wasn't significant enough coming from Balanced mode. However, it's absolutely essential for gaming at 4K, especially if you're using ray tracing.

It mustn't go without mention that DLSS Multi Frame Generation (when you have a decent base frame rate) is an absolute treat. It makes Cyberpunk 2077's Overdrive path tracing preset playable without needing to adjust graphics settings; yes, increased latency is worth noting, but I honestly didn't think it was too impactful in this case.

- Performance: 5 / 5

MSI Vector A18 HX A9W: Battery

As with most gaming laptops I've used, the battery life isn't terrible, but it's not great either – so, decent at best. We're still seemingly a long way off from battery tech improving, especially for gaming laptops and handheld gaming PCs, but I give the Vector A18 HX A9W its plaudits for being at least average here.

It has a 99.9WHr battery, and battery life varies depending on which MSI Center user scenario you choose. While playing the Resident Evil 4 remake in MSHybrid mode on Extreme Performance, the laptop lasted a full hour, starting at 85% before draining. Knocking the settings down to Discrete mode and Balanced (with 50% brightness) allowed it to last another 10 minutes from the same battery percentage.

If you ask me, I wouldn't even bother gaming without the power adapter because, as expected, performance is significantly worse. Bear in mind, there's a 400W power adapter ready to handle the combined 260W of power from the RTX 5080 and the Ryzen 9 9955HX.

On the other hand, while web surfing in Balanced mode and Integrated Graphics, results were a little bit more impressive; at 59% battery, it took exactly 51 minutes to fall to 6%. When at 100%, it lasted 3 hours and 25 minutes during YouTube playback, so it's decent enough for those who want to use this laptop for work or multitasking.
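As a back-of-the-envelope check on those numbers, the sketch below works out the drain rate from the web surfing run and the average power draw implied by the YouTube run. It assumes the full 99.9WHr rated capacity is usable and that the battery drains linearly, neither of which is guaranteed in practice; the function names are my own.

```python
def drain_rate_pct_per_min(start_pct: float, end_pct: float, minutes: float) -> float:
    """Average battery drain in percentage points per minute."""
    return (start_pct - end_pct) / minutes


def average_draw_watts(capacity_wh: float, hours: int, minutes: int) -> float:
    """Average power draw if the whole rated capacity is used over the runtime."""
    runtime_h = hours + minutes / 60
    return capacity_wh / runtime_h


if __name__ == "__main__":
    # Web surfing run: 59% down to 6% in 51 minutes.
    rate = drain_rate_pct_per_min(59, 6, 51)
    print(f"Web surfing drain: {rate:.2f} points per minute")  # 1.04

    # YouTube run: 100% to empty in 3 hours 25 minutes on a 99.9WHr battery.
    watts = average_draw_watts(99.9, 3, 25)
    print(f"YouTube average draw: {watts:.1f} W")  # 29.2
```

Treat these as ballpark figures only, since battery gauges are rarely linear and the two runs used different workloads.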

However, the biggest downside is the charging time, as it takes a total of 2 hours to fully charge. It's already not great that the battery drains in under three and a half hours outside of gaming, and the long charge time doesn't help if you're looking to use it while on the go.

Portability isn't a strong suit, and these battery results are one of the main reasons why, especially when paired with the hefty design.

- Battery: 3 / 5

Should you buy the MSI Vector A18 HX A9W?

Buy it if...

You're looking for one of the best gaming laptops

The MSI Vector A18 HX A9W is one of the best gaming laptops you can find on the market, providing fantastic performance results in games with Nvidia's powerful Blackwell RTX 5080 GPU and AMD's desktop-rivalling Ryzen 9 9955HX processor.

You want an immersive high refresh rate display

It's great for games like Call of Duty: Black Ops 6 or Counter-Strike 2, which rely on fast refresh rates, with access to 240Hz here. The laptop GPU is capable of achieving high frame rates with the right graphics settings.

You plan on moving around your home with a powerful gaming machine

The Vector A18 HX A9W is great for those who wish to move around their home using different displays in different rooms, without a big desktop PC case taking up space.

Don't buy if...

You need a laptop with long battery life

While the Vector A18 HX A9W's battery life isn't bad, it's not the best either, as is the case with most gaming laptops, and you shouldn't consider this laptop if that is a dealbreaker.

You want to game or work on the go

On top of its average battery life, you won't get great performance without its 400W power adapter, which is required to utilize the RTX 5080 and Ryzen 9 9955HX's combined 260W of power.

It's also very heavy and won't fit in most backpacks due to the display's 18-inch size.

You want an affordable gaming laptop

Affordability and the MSI Vector A18 HX A9W are worlds apart, as all configurations come at an eye-watering cost, whether they use the RTX 5070 Ti or the RTX 5080.

How I tested the MSI Vector A18 HX A9W

I tested the MSI Vector A18 HX A9W for three weeks, running multiple games and synthetic benchmarks. It kept me away from my main desktop gaming PC for a while, with Multi Frame Generation being a significant reason why, and while I don't want game developers to become over-reliant on Nvidia's DLSS tech, it's great when implemented properly.

Navigation and web browsing were a breeze too, with 32GB of RAM and a powerhouse CPU giving me all I needed to acknowledge that this is a gaming laptop that enthusiasts won't want to miss out on.