The Google Android XR glasses can’t do very much… yet. At Google I/O 2025, I got to wear the new glasses and try some key features – exactly three – and then my time was up. These Android XR glasses aren’t the future, but I can certainly see the future through them, and my Meta Ray-Ban smart glasses can’t match anything I saw.

The Android XR glasses I tried had a single display, and it did not fill the entire lens. The glasses projected onto a small frame in my field of view that was invisible unless filled with content.

To start, a tiny digital clock showed me the time and local temperature, information drawn from my phone. It was small and unobtrusive enough that I could imagine letting it stay active at the periphery.

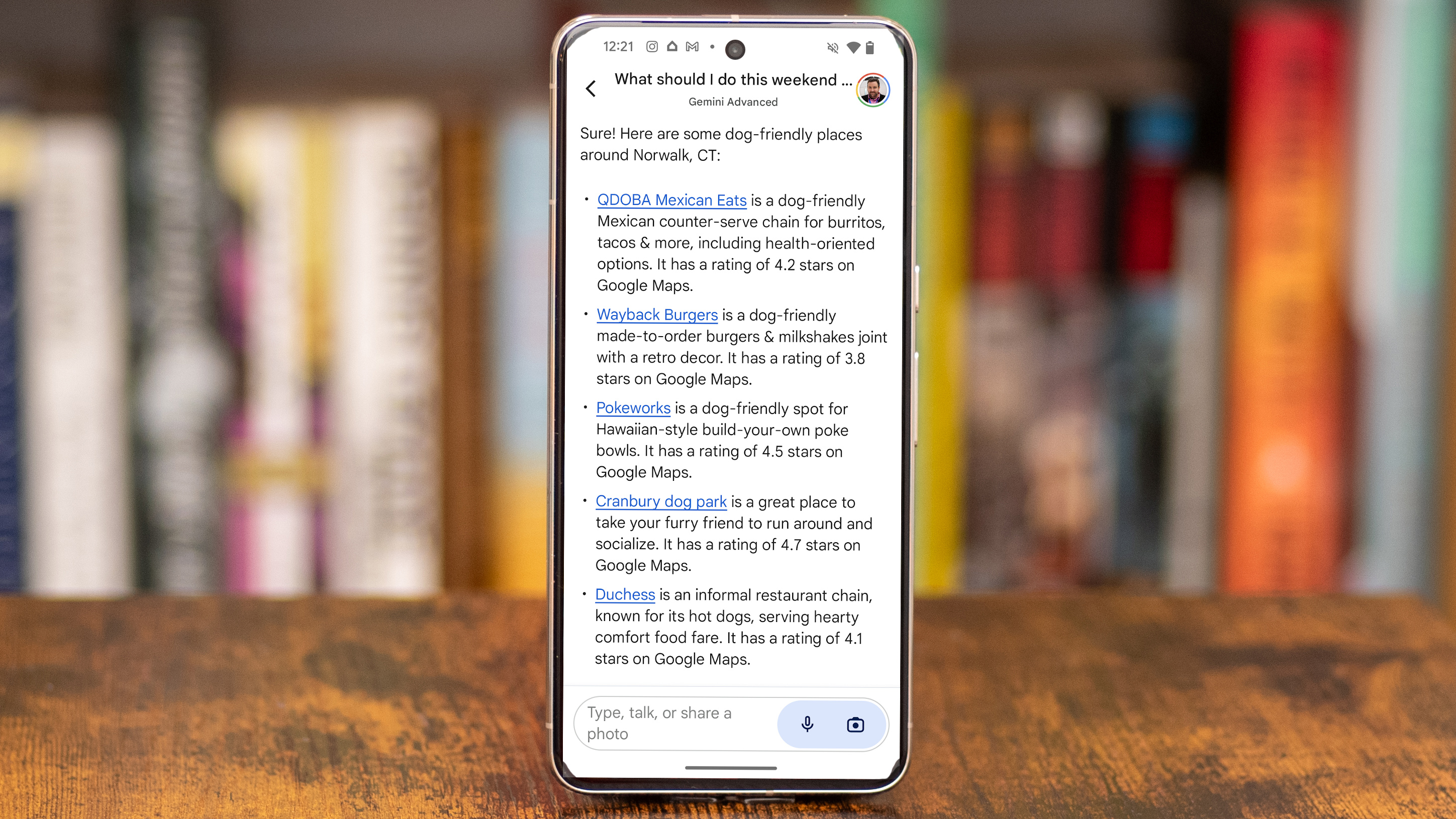

Google Gemini is very responsive on this Android XR prototype

The first feature I tried was Google Gemini, which is making its way onto every device Google touches. Gemini on the Android XR prototype glasses is already more advanced than what you might have tried on your smartphone.

I approached a painting on the wall and asked Gemini to tell me about it. It described the pointillist artwork and the artist. I said I wanted to look at the art very closely and I asked for suggestions on interesting aspects to consider. It gave me suggestions about pointillism and the artist’s use of color.

The conversation was very natural. Google’s latest voice models for Gemini sound like a real human. The glasses also did a nice job pausing Gemini when somebody else was speaking to me. There wasn’t a long delay or any frustration. When I asked Gemini to resume, it said ‘no problem’ and started up quickly.

That’s a big deal! The responsiveness of smart glasses is a metric I hadn’t considered before, but it matters. My Meta Ray-Ban Smart Glasses have an AI agent that can look through the camera, but it works very slowly. It is slow to acknowledge a request, and then it takes a long time to answer the question. Google’s Gemini on Android XR was much faster, and that made it feel more natural.

Google Maps on Android XR wasn’t like any Google Maps I’ve seen

Then I tried Google Maps on the Android XR prototype. I did not get a big map dominating my view. Instead, I got a simple direction sign with an arrow telling me to turn right in a half mile. The coolest part of the whole XR demo was when the sign changed as I moved my head.

If I looked straight down at the ground, I could see a circular map from Google with an arrow showing me where I am and where I should be heading. The map moved smoothly as I turned around in circles to get my bearings. It wasn’t a very large map – about the size of a big cookie (or biscuit for UK friends) in my field of view.

As I lifted my head, the cookie-map moved upward. The Android XR glasses don’t just stick a map in front of my face. The map is an object in space. It is a circle that seems to remain parallel with the floor. If I look straight down, I can see the whole map. As I move my head upward, the map moves up and I see it from a diagonal angle as it lifts higher and higher with my field of view.

By the time I am looking straight ahead, the map has entirely disappeared and has been replaced by the directions and arrow. It’s a very natural way to get an update on my route. Instead of opening and turning on my phone, I just look towards my feet and Android XR shows me where they should be pointing.

Showing off the colorful display with a photograph

The final demo I saw was a simple photograph taken with the camera on the Android XR glasses. After I took the shot, I got a small preview on the display in front of me. It was about 80% transparent: I could see details clearly, yet it didn’t entirely block my view.

Sadly that was all the time Google gave me with the glasses today, and the experience was underwhelming. In fact, my first thought was to wonder if the Google Glass I had in 2014 had the exact same features as today’s Android XR prototype glasses. It was pretty close.

My old Google Glass could take photos and video, but it did not offer a preview on its tiny, head-mounted display. It had Google Maps with turn directions, but it did not have the animation or head-tracking that Android XR offers.

There was obviously no conversational AI like Gemini on Google Glass, and it could not look at what you see and offer information or suggestions. What makes the two similar? They both lack apps and features.

Which comes first, the Android XR software or the smart glasses to run it?

Should developers code for a device that doesn’t exist? Or should Google sell smart glasses even though there are no developers yet? Neither. The problem with AR glasses isn’t just a chicken-and-egg problem of what comes first, the software or the device. That’s because AR hardware isn’t ready to lay eggs. We don’t have a chicken or eggs, so it’s no use debating what comes first.

Google’s Android XR prototype glasses are not the chicken, but they are a fine-looking bird. The glasses are incredibly lightweight, considering the display and all the tech inside. They are relatively stylish for now, and Google has great partners lined up in Warby Parker and Gentle Monster.

The display itself is the best smart glasses display I’ve seen, by far. It isn’t huge, but it has a better field of view than the rest; it’s positioned nicely just off-center from your right eye’s field of vision; and the images are bright, colorful (if translucent), and flicker-free.

When I first saw the time and weather, it was a small bit of text and it didn’t block my view. I could imagine keeping a tiny heads-up display on my glasses all the time, just to give me a quick flash of info.

This is just the start, but it’s a very good start. Other smart glasses haven’t felt like they belonged at the starting line, let alone on retail shelves. Eventually, the display will get bigger, and there will be more software. Or any software, because the feature set felt incredibly limited.

Still, with just Gemini’s impressive new multi-modal capabilities and the intuitive (and very fun) Google Maps on XR, I wouldn’t mind being an early adopter if the price isn’t terrible.

How the Android XR prototype compares to Meta’s Ray-Ban Smart Glasses

Of course, Meta Ray-Ban Smart Glasses lack a display, so they can’t do most of this. The Meta Smart Glasses have a camera, but the images are beamed to your phone. From there, your phone can save them to your gallery, or even use the Smart Glasses to broadcast live directly to Facebook. Just Facebook – this is Meta, after all.

Given Android XR’s Android provenance, I’m hoping whatever Android XR smart glasses we get will be much more open than Meta’s gear. They must be. Android XR runs apps, while Meta’s Smart Glasses are run by an app. Google intends Android XR to be a platform. Meta wants to gather information from cameras and microphones you wear on your head.

I’ve had a lot of fun with the Meta Ray-Ban Smart Glasses, but I honestly haven’t turned them on and used the features in months. I was already a Ray-Ban Wayfarer fan, so I wear them as my sunglasses, but I never had much luck getting the voice recognition to wake up and respond on command. I liked using them as open-ear headphones, but not when I’m in New York City and the street noise overpowers them.

I can’t imagine that I will stick with my Meta glasses once there is a full platform with apps and extensibility – the promise of Android XR. I’m not saying that I saw the future in Google’s smart glasses prototype, but I have a much better view of what I want that smart glasses future to look like.
