Synology DiskStation DS925+: Two-minute review

CPU: AMD Ryzen V1500B

Graphics: None

RAM: 4GB DDR4 ECC SODIMM (Max 32GB)

Storage: 80TB (20TB HDD x4), 1.6TB (800GB M.2 x2)

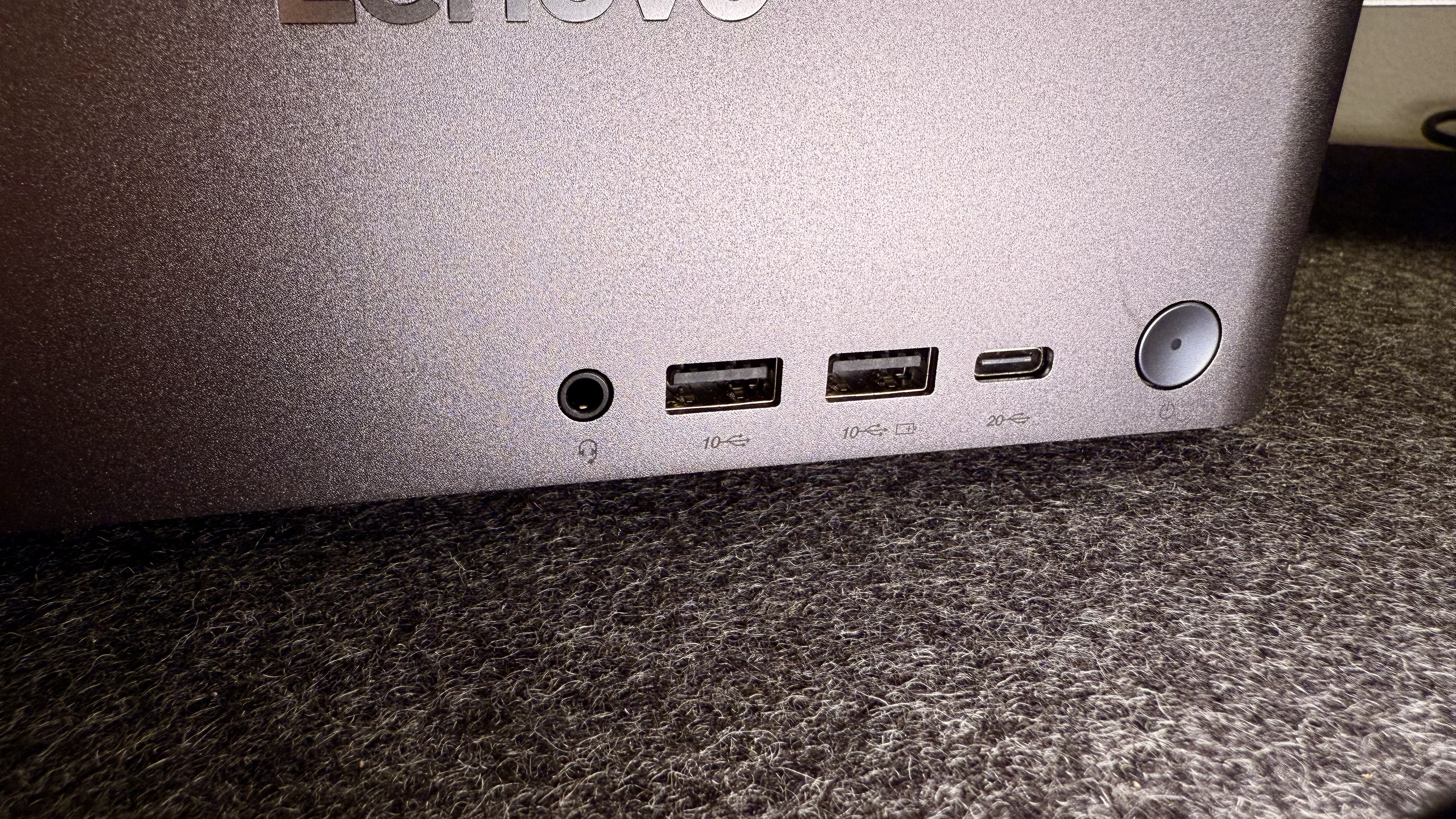

Ports: 2x Type-A (5Gbps), 1x Type-C (5Gbps), 2x 2.5GbE Ethernet ports

Size: 166 x 199 x 223mm (6.5 x 7.8 x 8.8 inches)

OS installed: DSM 7

Accessories: 2x LAN cables, 2x drive-bay lock keys, AC power cord

Synology has been producing network attached storage (NAS) technology for over 20 years, and its devices have maintained a consistent look and feel. In that time, the company has developed a highly polished operating system that’s packed with a wide variety of bespoke and third-party apps that do everything from simply backing up files on a home or office network, through managing a household’s multimedia requirements, to running a business’s entire IT stack. The latter includes enterprise-grade backup, all kinds of server functionality, email and web-hosting, virtual machine management, surveillance camera management and much, much more.

In more recent years, Synology has hunkered down in its own segment of the NAS market – eschewing broad compatibility with third-party hardware providers and a Wild-West application community in favor of a more closed and professional operating environment, where you have to buy expensive Synology drives to populate the boxes. While these compatibility changes have driven some users away, what remains is still an incredibly robust, well-supported and well-documented ecosystem that has a huge community following.

The new DiskStation DS925+ sits in a popular sweet spot that can suit new users and network admins alike. At a glance, it looks exactly like several generations of its predecessors, and it operates very much like them. Its most significant features are four bays that support both 3.5-inch and 2.5-inch drives, plus two M.2 NVMe SSD slots. Its tool-less design and simple setup wizards make it quick and easy to build, and you can be up and running in less than 15 minutes. It runs quietly and can sit on top of a desk or be tucked away discreetly (in a ventilated location). For casual users (with deep pockets) who want access to Synology’s apps and need only basic NAS functionality, we could stop there. Enthusiasts will want to know more.

The list of compatible drives is now shorter than ever. While Synology has handed certification of third-party drives over to vendors like Seagate and WD, that doesn’t appear to have resulted in more choice… yet. At the time of writing, you’re still limited to Synology’s latest Plus Series consumer drives (up to 16TB), its Enterprise drives (up to 20TB), its 2.5-inch SSDs (up to 7TB) and its Enterprise-level M.2 NVMe drives (up to 800GB). All of them are considerably more expensive than third-party equivalents that aren’t on the list.

The DS925+ ships with one of its two SO-DIMM slots populated with 4GB of DDR4 ECC RAM. If required, you can upgrade to two sticks of Synology’s own 16GB ECC RAM (for 32GB total), but it won’t be cheap. Having error-correcting code (ECC) RAM at this level is a boon, nonetheless.

It should be noted that if you’re looking to the DS925+ as an upgrade for an older Synology NAS, it will allow third-party drives if it recognizes an existing DSM installation. However, you’ll get constant drive compatibility warnings in return.

Positive hardware changes include a beefed-up, quad-core, eight-thread AMD Ryzen V1500B processor (note that there’s no integrated GPU) and two network ports that are finally 2.5GbE. There are front- and rear-mounted USB-A 3.2 Gen 1 ports, plus a new, slightly controversial USB-C port for connecting an external, 5-bay expansion unit (replacing the old eSATA-connected options). What’s most bothersome, though, is the lack of a PCIe slot for adding a 10GbE network card, which limits the possible network transfer speeds.

Ultimately, the initial outlay for a DS925+ and a set of compatible drives can be enormous compared to rivals on the market. However, if you’re going to make use of its vast library of free software applications and licenses, it’ll represent great value.

Synology DiskStation DS925+: Price & availability

Synology’s DiskStation DS925+ launched only recently and is available in just a few markets so far. It’s listed at $830 / £551 / AU$1,099.

Overall, it's well priced compared to its closest competitors such as the TerraMaster F4-424 Max, as well as other Synology NAS devices.

- Value score: 4.5 / 5

Synology DiskStation DS925+: Design & build

While the DiskStation DS925+ looks like its predecessors, I felt that it was somewhat more robust and less rattly than some of its forebears. Either way, it’s still a small and discreet black box that won’t draw attention to itself, wherever it’s located.

The tool-less design makes adding hard drives simple. For 3.5-inch drives, you just lift up the drive bay door, pull out the drive tray, unclip the tray’s side bars, put the drive in the tray, clip the bars back on (they use rubber grommets to reduce noise and vibration) and slide it back in. If you want, you can ‘lock’ each drive bay with a key to deter opportunistic thieves.

Adding RAM involves removing the drive trays and inserting SO-DIMMs into the internal slots on the side. Adding M.2 NVMe drives simply involves unclipping the covers on the base and sliding the drives in. Installing all six drives takes less than five minutes.

A fully populated DS925+ runs very quietly – Synology says just 20dB – and I can attest that there’s only a very quiet whooshing noise made by the dual 92mm fans, and the drives only make occasional, very low clicking and popping sounds.

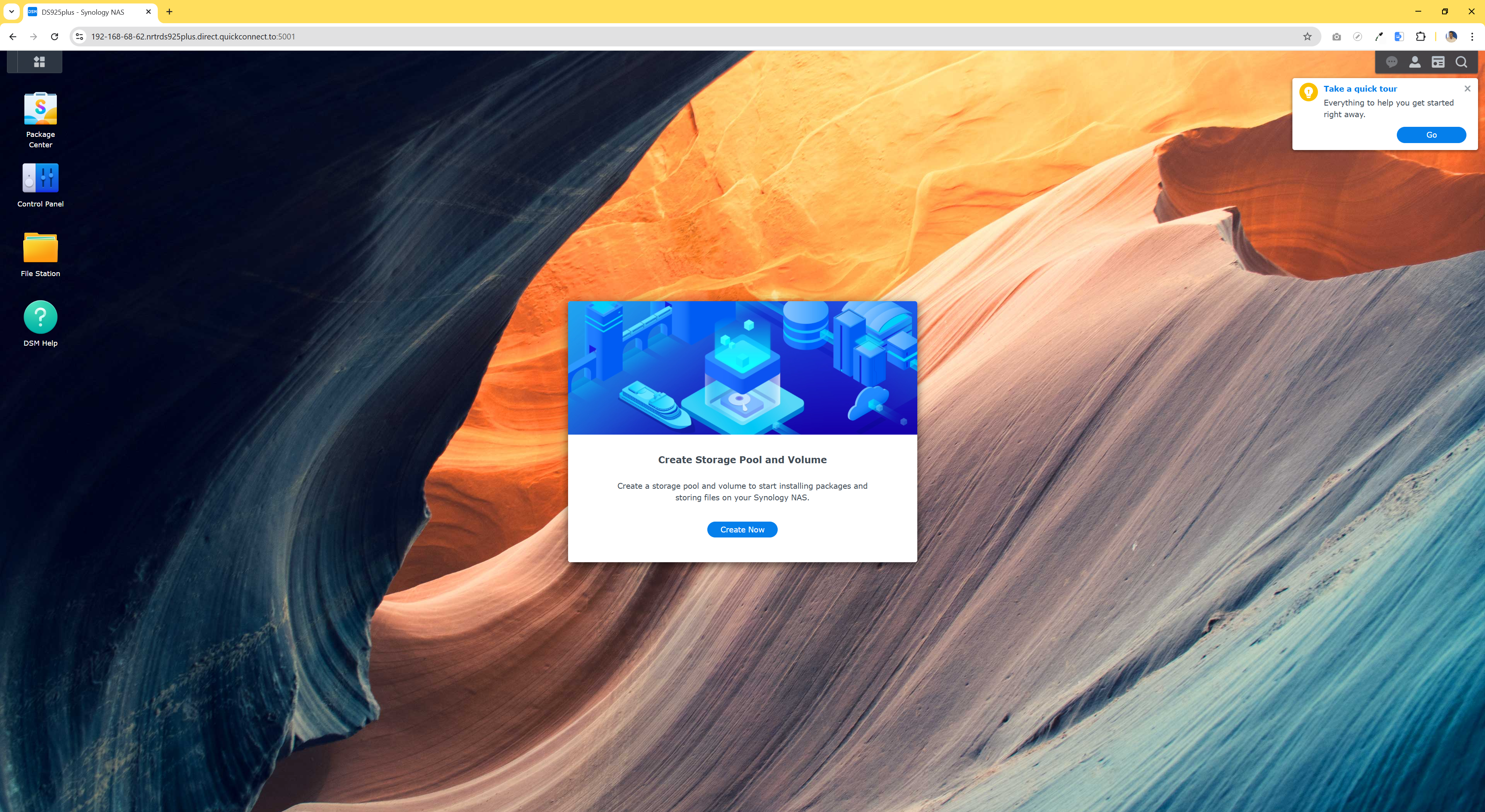

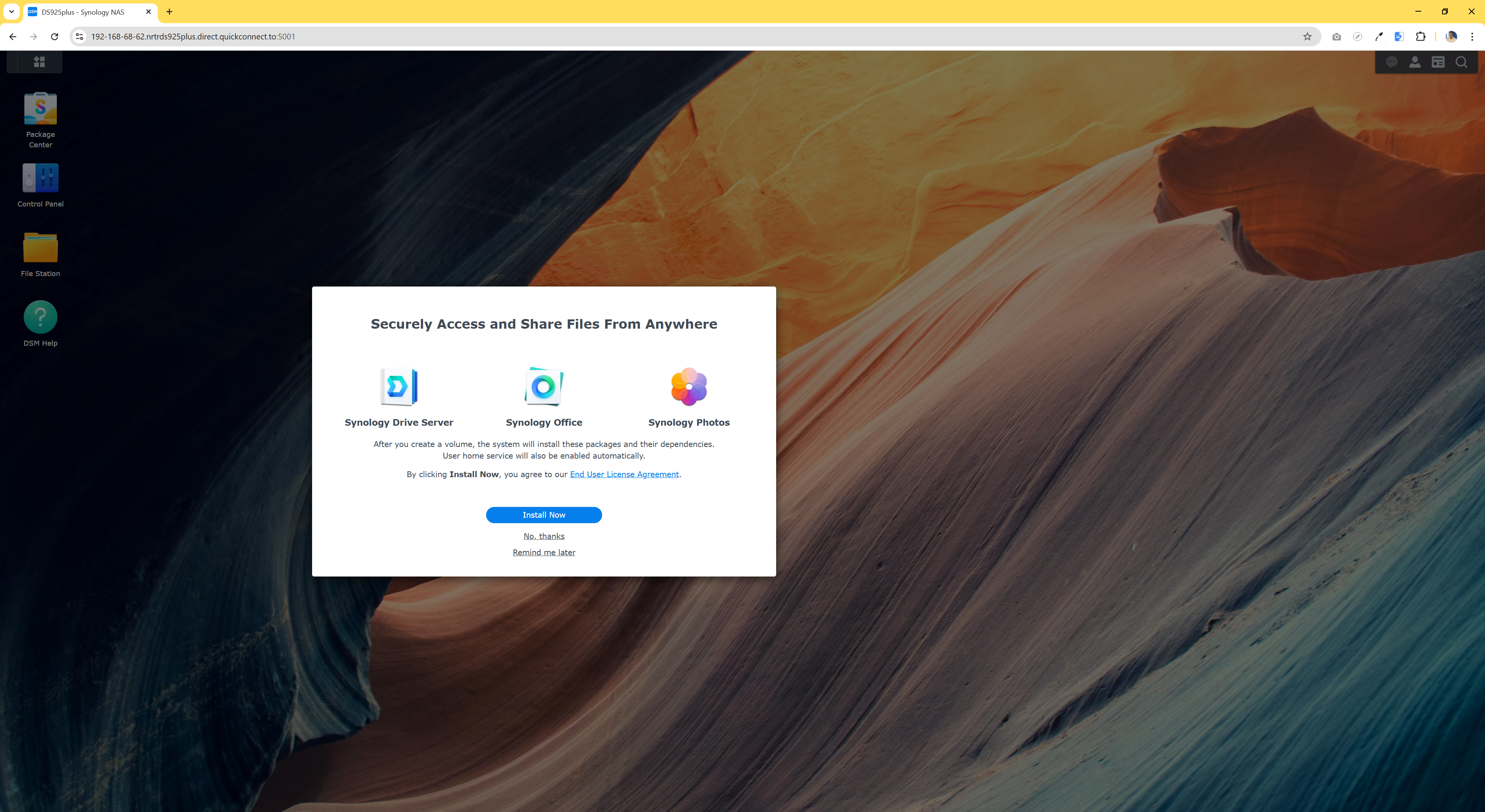

Installing the operating system is also simple. A QR code in the box provides access to an online setup document with a link that automatically finds your NAS on the network before offering to install everything for you. After a quick firmware update, it reboots and you’ll be looking at the DSM desktop, in a web browser, just a few minutes later. The NAS will then prompt you to sign into a Synology account, set up SSO and MFA log-ins and install some basic apps.

Newcomers might struggle at first with the terminology surrounding the initial setup of the drives, but (at the basic level) the NAS walks you through the process. It involves organizing the drives into a storage pool, creating a volume on that pool and then adding shared folders. You’ll also be prompted to choose which users get Read/Write, Read-only or No access to each folder. At this point you’ve got functional network-attached storage that can be accessed across your network.
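For newcomers, the drive, storage pool, volume and shared folder hierarchy that the wizard walks through can be pictured as nested objects. The Python sketch below is a hypothetical model, not a Synology API. The class names, the `volume1` name and the three permission levels (No access, Read only, Read/Write, which we assume is how DSM's shared-folder permission dialog labels them) are illustrative assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Access(Enum):
    """Assumed per-user shared-folder permission levels (hypothetical model)."""
    NO_ACCESS = 0
    READ_ONLY = 1
    READ_WRITE = 2


@dataclass
class SharedFolder:
    name: str
    permissions: dict[str, Access] = field(default_factory=dict)

    def can_read(self, user: str) -> bool:
        # In this model, users who are not listed get no access by default.
        return self.permissions.get(user, Access.NO_ACCESS) != Access.NO_ACCESS

    def can_write(self, user: str) -> bool:
        return self.permissions.get(user, Access.NO_ACCESS) == Access.READ_WRITE


@dataclass
class Volume:
    name: str
    folders: list[SharedFolder] = field(default_factory=list)


@dataclass
class StoragePool:
    drives: list[str]
    volumes: list[Volume] = field(default_factory=list)


# Build the hierarchy in the order the setup wizard walks you through it.
pool = StoragePool(drives=["Drive 1", "Drive 2", "Drive 3", "Drive 4"])
volume = Volume(name="volume1")
pool.volumes.append(volume)
media = SharedFolder("media", {"alice": Access.READ_WRITE, "bob": Access.READ_ONLY})
volume.folders.append(media)

print(media.can_write("alice"), media.can_write("bob"), media.can_read("carol"))
```

The model makes the key point explicit: permissions attach to shared folders, not to drives or volumes, so one pool can serve several folders with different access rules.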

The operating system is well-polished and installing bespoke and third-party apps is simple thanks to the Package Center application which operates like a free App Store. It’s also simple to enable remote access using Synology’s QuickConnect ID short-web-link system.

An interesting change with the DiskStation DS925+ is the removal of the (6Gbps) eSATA port for connecting Synology’s optional, legacy, external drive bays and its replacement with a single (5Gbps) USB-C port, which connects to a Synology DX525 5-bay expansion unit. I didn't have an expansion unit on hand to test this, but the performance difference should be minimal.
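A quick back-of-the-envelope check supports that expectation. SATA 6Gb/s and USB 3.2 Gen 1 (5Gb/s) both use 8b/10b line encoding, so only 80% of the signalling rate carries data. The sketch below converts each link into usable MB/s and compares the result with a 2.5GbE port, whose 2.5Gb/s figure is already a data rate. It ignores protocol overheads above the physical layer, so treat the figures as upper bounds.

```python
def usable_mb_per_s(line_rate_gbps: float, encoding_efficiency: float) -> float:
    """Usable data rate in decimal MB/s: signalling rate x encoding efficiency."""
    bits_per_s = line_rate_gbps * 1e9 * encoding_efficiency
    return bits_per_s / 8 / 1e6


links = {
    "eSATA (SATA 6Gb/s, 8b/10b)": usable_mb_per_s(6.0, 8 / 10),
    "USB 3.2 Gen 1 (5Gb/s, 8b/10b)": usable_mb_per_s(5.0, 8 / 10),
    # 2.5Gb/s is the Ethernet MAC data rate, so no encoding penalty is applied here.
    "2.5GbE network port": usable_mb_per_s(2.5, 1.0),
}

for name, rate in links.items():
    print(f"{name}: {rate:.1f} MB/s")
```

Both expansion links (roughly 600MB/s and 500MB/s) comfortably exceed the 312.5MB/s a single 2.5GbE port can carry. For network clients, the NAS's Ethernet ports remain the limiting factor either way. The catch is that all five DX525 drives share one 5Gbps link, so a few fast drives working at once could saturate it.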

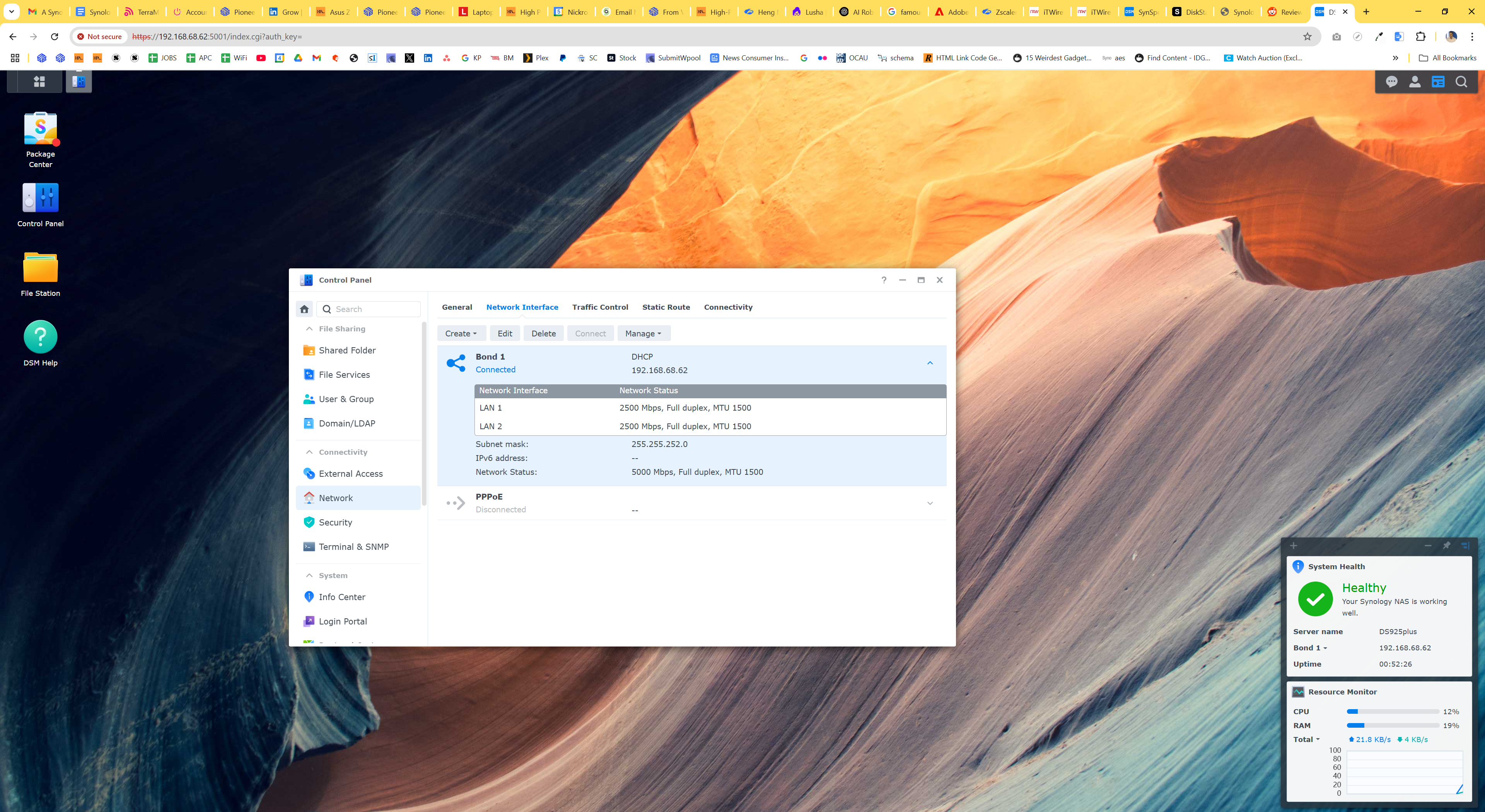

While there are only two 2.5GbE network ports, you can combine them in various ways, with the easiest (load balancing) up and running in just a few clicks. This is better suited to handling multiple connections than to improving top speed, though.

Finally, it’s worth stressing that compatibility with third-party drives is now strictly limited. At the time of writing, the DS925+ was only compatible with Synology’s own prosumer Plus Series hard drives (up to 16TB), its Enterprise Series hard drives (up to 20TB) and SSDs (up to 7TB), plus its 400GB and 800GB M.2 NVMe drives. All of these cost considerably more than third-party equivalents. Synology is blunt about why it has limited compatibility so much: it got sick of dealing with support requests that often boiled down to conflicts and crashes caused by drive failures, and the subsequent arguments with third-party drive vendors. While it’s annoying, I can sympathize with that.

- Design & build score: 5 / 5

Synology DiskStation DS925+: Features

It’s tricky to know just where to start with the numerous features that are available with the DiskStation DS925+. It performs just about every business and consumer task you can imagine. For many smart-home users, the various multi-device backup utilities, multimedia organization tools and media-server functionality (including Plex, Emby and Jellyfin) will be used more than anything else.

For prosumer and business users, it can be your full-stack IT service provider. There are apps that can turn your NAS into an enterprise-grade backup solution with off-site cloud capabilities (via third-party services or Synology C2), an email server, a web server, a Synology Office application provider, a surveillance camera manager, an anti-malware scanner, a virtual machine manager and a VPN server.

Multiple user licenses are included with these applications, which boosts the value proposition through the roof.

There’s a multitude of third-party applications and high-quality documentation (covering just about everything) that has been created by a large and mature Synology-enthusiast community.

Network admins will also like the numerous drive-formatting options, granular user permission management and SSO and MFA security options.

Storage capacity can be increased via a USB-C-connected, five-bay expansion unit.

The two USB-A ports (front and rear) have had many functions removed so they can no longer be used to connect potential security nightmares like printers, media devices, or network adapters, but they can still be used for connecting external USB storage devices.

The twin 92mm fans are quiet and, in conjunction with well-designed vents, do a good job of cooling the NAS. That said, be sure to place it in a location where airflow isn’t impeded and the vents won’t get clogged with dust.

- Features score: 5 / 5

Synology DiskStation DS925+: Performance

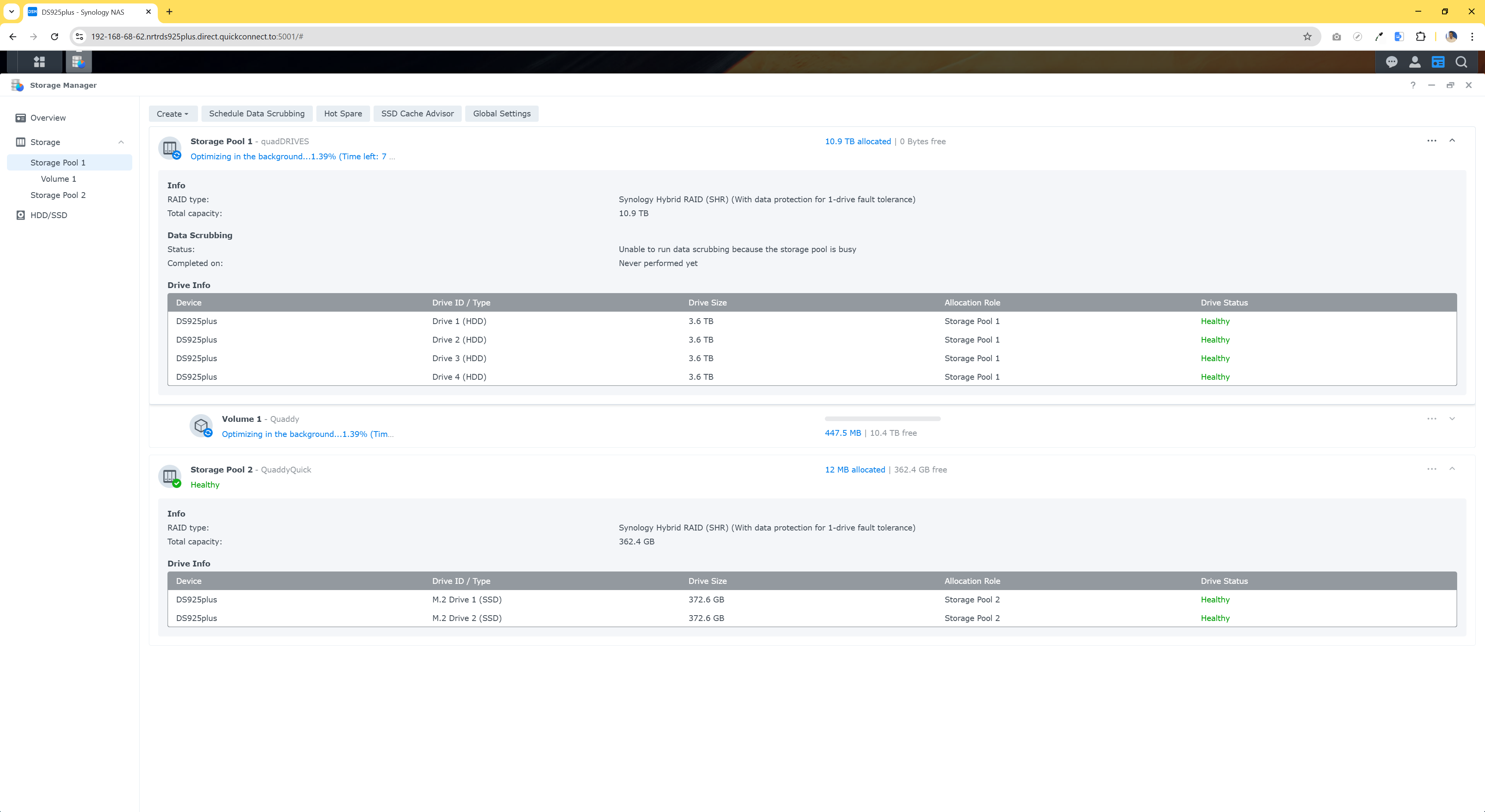

To test the DiskStation DS925+, I installed four Synology 3.5-inch, 4TB hard drives, combined them in a Synology Hybrid RAID (SHR) storage pool (Synology’s own RAID-5-like layout) and formatted the volume with the Btrfs file system. The RAID layout provides striped performance boosts and disk-failure redundancy, while Btrfs adds numerous enhancements that work with Synology’s backup utilities. This left me with a 10.4TB volume and meant I could lose or remove any one drive without suffering data loss.

I also installed two 400GB M.2 NVMe SSDs as a single Btrfs storage volume (they can also be used for caching) which gave me a usable capacity of 362.4GB.
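The capacity figures above follow from simple arithmetic. A RAID-5-style pool of n identical drives gives up one drive's worth of space to parity. A two-drive mirror keeps just one drive's worth. This sketch makes a few assumptions. SHR with four identical drives behaves like RAID 5. The NVMe pair was mirrored, which the text doesn't state but which fits 362.4GB usable from 800GB raw. DSM reports sizes in binary units. Drive makers, by contrast, quote decimal ones.

```python
TB, GB = 1e12, 1e9        # decimal units used on drive labels
TIB, GIB = 2**40, 2**30   # binary units, as operating systems typically report them


def usable_bytes(drive_bytes: float, drive_count: int, layout: str) -> float:
    """Raw usable capacity before file-system and system-partition overhead."""
    if layout == "raid5":
        # One drive's worth of space holds parity.
        if drive_count < 3:
            raise ValueError("RAID 5 needs at least three drives")
        return drive_bytes * (drive_count - 1)
    if layout == "raid1":
        # Every drive holds a full copy, so usable space is one drive.
        if drive_count < 2:
            raise ValueError("a mirror needs at least two drives")
        return drive_bytes
    raise ValueError(f"unsupported layout: {layout}")


hdd = usable_bytes(4 * TB, 4, "raid5")
ssd = usable_bytes(400 * GB, 2, "raid1")
print(f"HDD pool: {hdd / TB:.1f} TB = {hdd / TIB:.2f} TiB")
print(f"SSD pair: {ssd / GB:.0f} GB = {ssd / GIB:.1f} GiB")
```

That works out to about 10.91TiB and 372.5GiB respectively. The remaining gap to the 10.4TB and 362.4GB that DSM reported is overhead this sketch doesn't model, such as system partitions and file-system metadata.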

I transferred files from one volume to the other and saw transfer speeds that peaked at 435MB/s, but most people will be moving data to and from other devices on the network.

I subsequently performed multiple tests to find its real-world limits, see what doing without a 10GbE port option meant and discover what benefits the two (configurable) 2.5GbE LAN ports offered. I did this by connecting the NAS via Ethernet to a high-end TP-Link Deco BE85 Wi-Fi 7 router (with 10GbE LAN ports) and downloading large video files using various wired and wireless configurations.

I compared the DS925+’s performance to that of an older, two-bay Synology DiskStation DS723+ that has a 10GbE wired connection, a dual 3.5-inch hard drive volume and a newly fitted, single, 800GB Synology M.2 NVMe SSD-based volume.

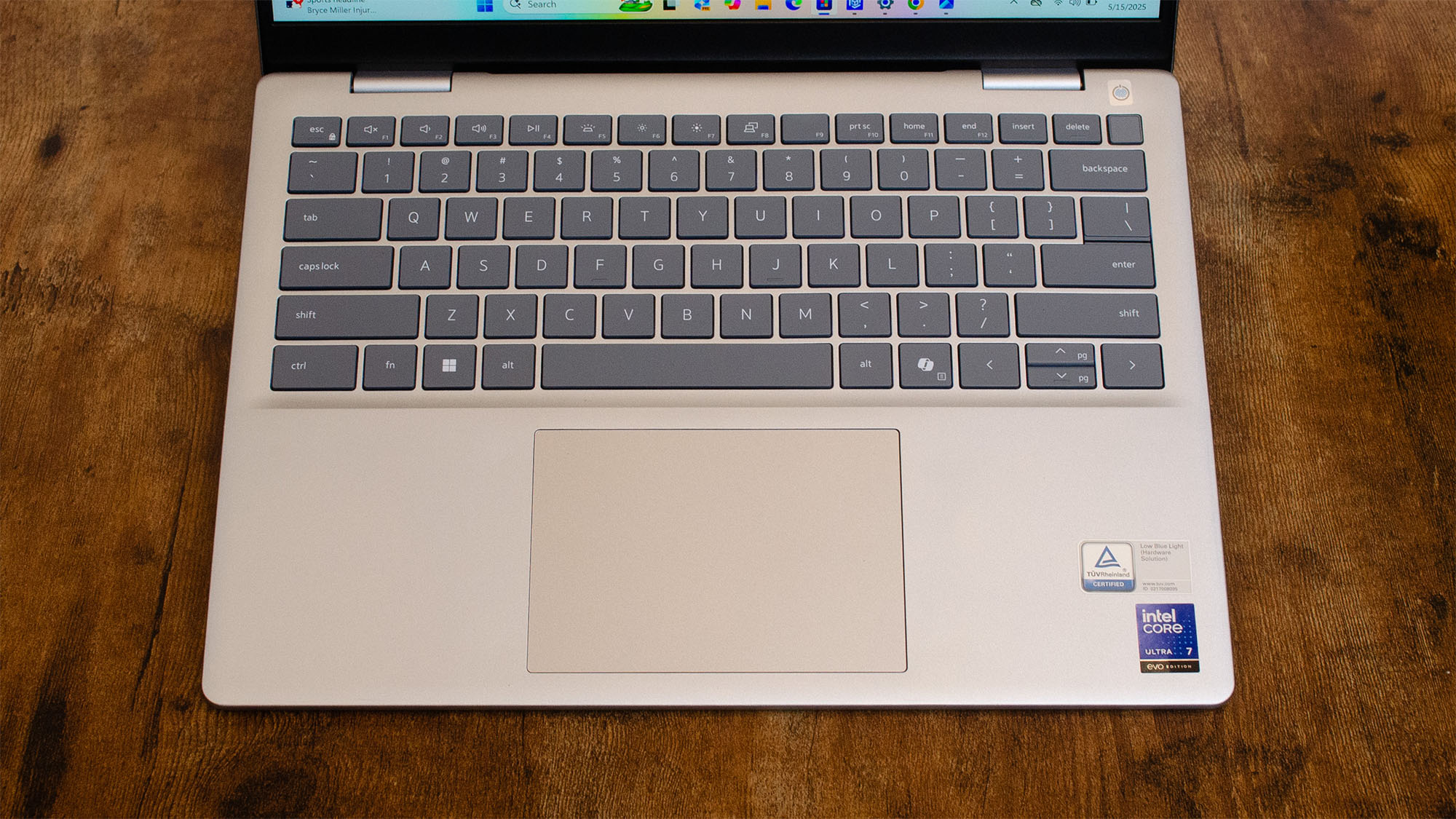

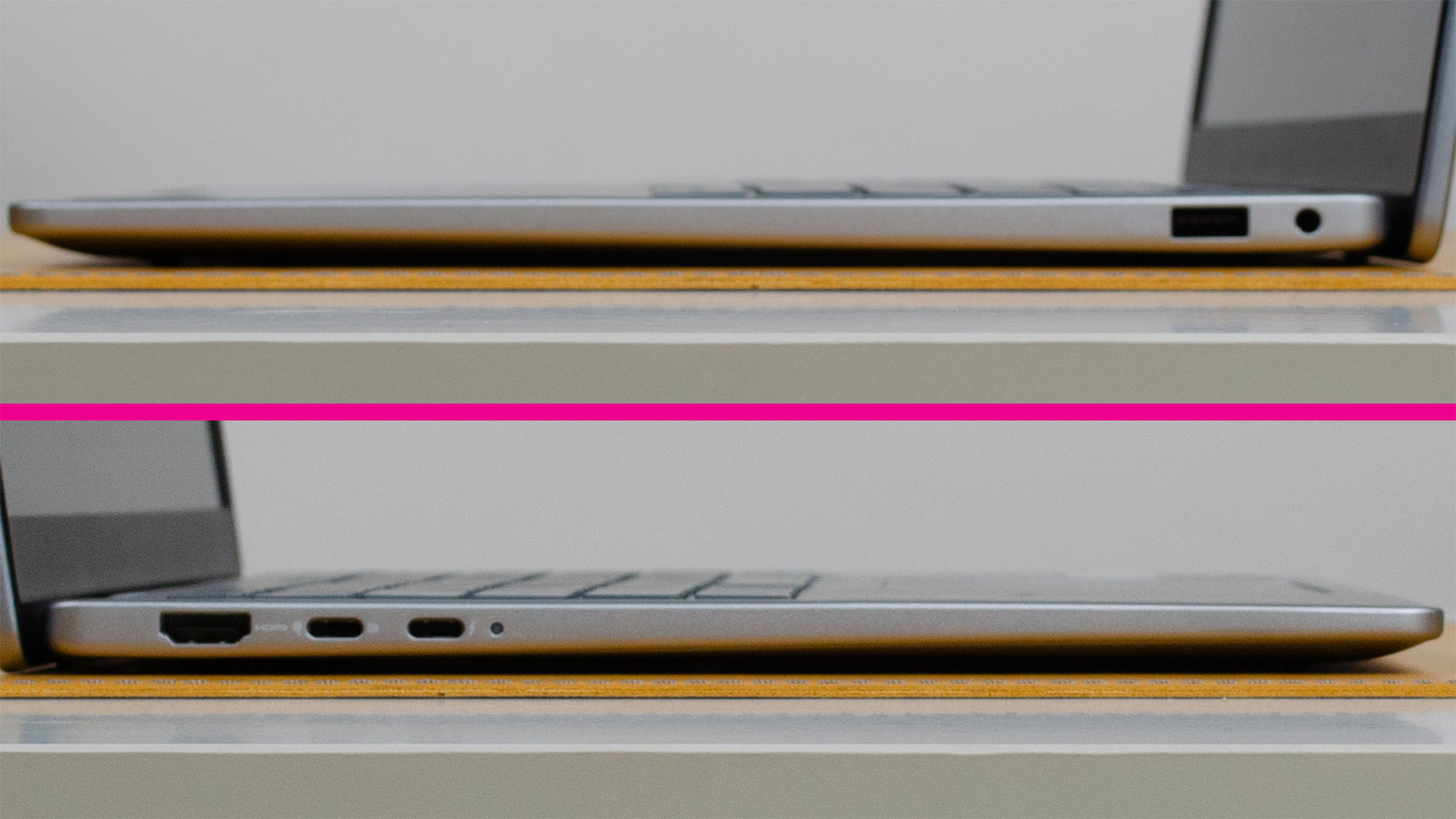

I tested using a high-end Asus ROG Strix Scar 17 X3D gaming laptop with a 2.5GbE LAN connection and Wi-Fi 6E, as well as a new Core Ultra (Series 2) Asus Vivobook 14 Flip with Wi-Fi 7.

I started with the DS723+, which I’ve been using for testing Wi-Fi routers. With the Scar’s 2.5GbE Ethernet port connected by wire to the Deco router, I saw sustained transfer speeds that hit 245MB/s for both the DS723+’s HDD volume and its NVMe volume. Over a 5GHz Wi-Fi 6E wireless connection, this dropped to 194MB/s for both volumes. Over a 6GHz Wi-Fi 6E wireless connection, it achieved 197MB/s using the HDD volume and 215MB/s for the NVMe volume.

Switching to the Wi-Fi 7 Vivobook, on the 5GHz wireless connection it managed 180MB/s for both the HDD and NVMe volumes. However, on the 6GHz Wi-Fi 7 network, it achieved 244MB/s for the HDD volume and an astonishing 347MB/s for the NVMe volume. That right there is the power of having a 10GbE-equipped NAS (with an NVMe drive) connected to a Wi-Fi 7 network. Cables, schmables! That’s more than enough for editing multiple streams of UHD video at once.

So, how did the newer DS925+ compare? With the NAS connected to the Deco via a single 2.5GbE port, the 2.5GbE-connected Scar reached 280MB/s for the HDD volume and 282MB/s for the NVMe volume, a good 35MB/s quicker than the two-bay DS723+. Over 5GHz Wi-Fi, both scores dropped to 190MB/s, which is similar to the DS723+ and points to a likely 5GHz Wi-Fi bottleneck. Interestingly, performance was consistently slower in the Scar’s 6GHz tests, where it hit 163MB/s (HDD volume) and 172MB/s (NVMe volume), but this is again likely caused by the network, not the NAS.

When the Vivobook connected via the 5GHz network, it managed 186MB/s transfers for both volumes. Over Wi-Fi 7, this jumped to 272MB/s for the HDD volume and 278MB/s for the NVMe volume. So, thus far, having NVMe storage on the DS925+ brings no significant file transfer benefit, because everything has to pass through the bottleneck of its 2.5GbE LAN port.
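Why is 2.5GbE a hard ceiling at these speeds? Each Ethernet frame carries fixed overheads on the wire, and IP and TCP headers eat into each packet's payload. This sketch estimates the best-case TCP payload rate for a given link speed. It assumes the review's MB/s figures are decimal megabytes, a standard 1500-byte MTU and IPv4/TCP headers without options. Real stacks often add 12 bytes of TCP timestamps, which lowers the ceiling slightly.

```python
MTU = 1500                       # standard Ethernet payload size in bytes
ETH_OVERHEAD = 14 + 4 + 8 + 12   # header + FCS + preamble/SFD + inter-frame gap
IP_TCP_HEADERS = 20 + 20         # IPv4 + TCP headers without options


def tcp_goodput_mb_per_s(link_gbps: float, mtu: int = MTU) -> float:
    """Best-case TCP payload rate (decimal MB/s) for a fully loaded link."""
    payload = mtu - IP_TCP_HEADERS       # useful bytes per frame
    on_wire = mtu + ETH_OVERHEAD         # bytes of link time each frame consumes
    efficiency = payload / on_wire
    return link_gbps * 1e9 / 8 * efficiency / 1e6


for gbps in (2.5, 10.0):
    raw = gbps * 1e9 / 8 / 1e6
    print(f"{gbps}GbE: raw {raw:.1f} MB/s, "
          f"TCP payload ceiling ~ {tcp_goodput_mb_per_s(gbps):.1f} MB/s")
```

On those assumptions, the 2.5GbE ceiling is roughly 297MB/s. The DS925+'s 280MB/s wired result is therefore already close to the limit. The DS723+'s 347MB/s over Wi-Fi 7 was only possible because that NAS sits on a 10GbE link.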

Consequently, I bonded the two 2.5GbE connections together to form a ‘single’ 5GbE connection in an effort to boost performance. This takes just a few clicks in DSM’s control panel. I opted for the basic Adaptive Load Balancing option, but there are several other configurations for various types of network topology.

So, with the DS925+’s two Ethernet cables bonded into a single 5Gbps connection to the Deco router, I ran the tests again. The 2.5GbE-connected ROG Strix Scar only reached 168MB/s (for both the HDD and NVMe volumes), which is around 110MB/s slower than when the NAS was connected via a single 2.5GbE wired connection. Over 5GHz Wi-Fi this rose slightly to 188MB/s for both volumes, and over the 6GHz network it dropped back to 170MB/s for both volumes. It’s fair to say that combining the DS925+’s two 2.5GbE ports is better suited to handling multiple network streams than to boosting the performance of a single connection.
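Why doesn't bonding two 2.5GbE ports double single-client speeds? Adaptive load balancing generally keeps each client's traffic on one member port and spreads different clients across the ports. The toy model below assumes simple round-robin assignment and an even split of each port's capacity. That is a deliberate simplification, not DSM's actual algorithm. It shows why one laptop still sees a single 2.5Gb/s link while several clients together can use the full 5Gb/s. It does not explain the drop I measured in the bonded tests.

```python
def per_client_throughput(clients: list[str], links_gbps: list[float]) -> dict[str, float]:
    """Toy model: pin each client to one bonded link (round-robin) and
    split that link's capacity evenly among the clients pinned to it."""
    assignment: dict[int, list[str]] = {i: [] for i in range(len(links_gbps))}
    for n, client in enumerate(clients):
        assignment[n % len(links_gbps)].append(client)

    result: dict[str, float] = {}
    for link, pinned in assignment.items():
        for client in pinned:
            result[client] = links_gbps[link] / len(pinned)
    return result


bond = [2.5, 2.5]  # two 2.5GbE ports bonded: 5Gb/s in aggregate
one = per_client_throughput(["laptop"], bond)
many = per_client_throughput(["laptop", "tv", "desktop"], bond)
print(one)                       # a single client is still capped by one 2.5Gb/s link
print(many, sum(many.values()))  # several clients together can fill both links
```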

Nonetheless, I repeated the test with the Vivobook. Over 5GHz Wi-Fi, both volumes saw transfer speeds of 176MB/s. Over 6GHz Wi-Fi 7, it hit 283MB/s.

So, what have we learned about the DS925+’s file transfer-speed potential? Using a 2.5GbE wired connection to and from the router, it tops out at around 280MB/s. Connecting a laptop via 5GHz Wi-Fi typically yields transfer speeds of between about 175MB/s and 190MB/s, but over 6GHz Wi-Fi 7, up to 283MB/s is possible. This means that, in the right circumstances, Wi-Fi 7 can match 2.5GbE wired speeds.

We also learned that combining the DS925+’s two 2.5GbE connections (at least, in my particular setup) reduces the top transfer speed. Most importantly, the lack of a 10GbE upgrade option rules out the insane 347MB/s speeds I saw from the DS723+ over Wi-Fi 7; here, 283MB/s is the ceiling. As such, it’s straight-up not worth using the M.2 drives to boost file transfer performance, because the 2.5GbE connection(s) act as a bottleneck.

That all said, the M.2 drives can still improve performance through caching functionality and Synology notes it can give a 15x improvement to random read and write IOPS. This will be a much bigger deal in situations with multiple connections occurring simultaneously.
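How much an SSD cache helps random I/O depends mainly on the hit rate, meaning the share of requests served from the NVMe drives rather than the hard drives. The sketch below uses the standard weighted-average latency model at a queue depth of one. The latencies are hypothetical round numbers, not measurements of this NAS. The model also ignores parallelism across drives and write-back behavior.

```python
def effective_iops(hit_rate: float, ssd_latency_ms: float, hdd_latency_ms: float) -> float:
    """Queue-depth-1 random IOPS when a fraction `hit_rate` of requests is
    served from an SSD cache and the rest fall through to the HDD array."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    avg_latency_ms = hit_rate * ssd_latency_ms + (1 - hit_rate) * hdd_latency_ms
    return 1000.0 / avg_latency_ms


# Hypothetical latencies for illustration only.
SSD_MS, HDD_MS = 0.1, 8.0
baseline = effective_iops(0.0, SSD_MS, HDD_MS)
for hit in (0.5, 0.9, 0.99):
    speedup = effective_iops(hit, SSD_MS, HDD_MS) / baseline
    print(f"hit rate {hit:.0%}: {speedup:.1f}x the uncached IOPS")
```

With these made-up numbers, a 90% hit rate gives roughly a 9x gain and a 99% hit rate around 45x. Any real-world gain, including Synology’s quoted 15x, therefore depends heavily on how much of the workload fits in the cache.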

While some high-end users will miss the option to upgrade to a 10GbE performance ceiling, I found it’s still more than enough to facilitate very high bitrate, 60FPS, UHD+ video playback (and multiple UHD video stream editing) in addition to having multiple simultaneous connections performing numerous lesser tasks.
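Whether a given throughput is "enough" for video work comes down to stream bitrates. This sketch counts how many constant-bitrate streams fit into a measured transfer speed, with some headroom held back. The 283MB/s figure is the DS925+’s best result from these tests. The 80% headroom factor and the per-stream bitrates are hypothetical inputs to swap for your own codec’s numbers.

```python
import math


def max_streams(throughput_mb_per_s: float, stream_bitrate_mbps: float,
                headroom: float = 0.8) -> int:
    """How many constant-bitrate streams fit in a measured throughput,
    using only a `headroom` fraction of it (default 80%)."""
    usable_mbps = throughput_mb_per_s * 8 * headroom  # MB/s -> Mb/s
    return math.floor(usable_mbps / stream_bitrate_mbps)


# Hypothetical per-stream bitrates in Mb/s; real figures depend on codec and settings.
for bitrate in (100, 400, 800):
    print(f"{bitrate} Mb/s streams: {max_streams(283, bitrate)}")
```

For example, at a hypothetical 400Mb/s per stream, the sketch allows four simultaneous streams within 80% of 283MB/s.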

- Performance score: 4.5 / 5

Should you buy the Synology DiskStation DS925+?

It has become normal to gripe about the design decisions and limitations that Synology imposes on each generation of its prosumer NAS boxes. However, if we eliminate the potential purchasers it won’t suit – i.e. those who need a 10GbE connection and those who cannot afford all the expensive Synology hard drives required to populate it – it’s absolutely worth buying.

Its highly evolved chassis is deceptively well built in terms of tool-less access, rigidity, cooling and airflow. Its operating environment remains secure, robust and intuitive and is packed with features. Its software library will satisfy casual and demanding consumers plus network admins alike and almost all of it is free.

While it’s lost the hodgepodge, stick-your-old-hard-drives-in-a-box-and-hack-a-NAS-together old-school vibe, it’s now a reliable (and scalable) professional backbone for any business or smart home.

As an all-around package, it’s the best on the market for its target audience. Yes, it can be expensive to populate with drives, but the lower total cost of ownership that comes from its reliability, included software licenses and built-in security features helps offset the initial outlay. This means that the DS925+ is, once again, a highly desirable winner from Synology.

| Attribute | Notes | Score |
|---|---|---|
| Value | A closed market makes buying drives expensive, but the free software library can make it a bargain regardless. | 4.5 |
| Design | It’s incredibly simple to put together thanks to its tool-less design. The software is voluminous, polished, mature, secure and reliable. It also runs cool and quiet. | 5 |
| Features | Whether you’re an undemanding consumer or a network admin, the DS925+ can do it all. | 5 |
| Performance | The lack of an upgrade option to a 10GbE port limits peak performance, but it’s still not slow. | 4.5 |
| Total | Synology’s latest 4-bay NAS is as attractive as its predecessors, which is high praise indeed. | 5 |

Buy it if...

You want a one-stop box that can support your smart home

Its ease of setup, friendly multimedia apps, security, reliability and ability to operate almost every facet of a smart home make it a winner.

You want a one-stop box that can support your entire organization

Whether it’s enterprise-grade backups, hosting web or email servers or virtual machines or even providing free office software, this one box can do it all.

Don't buy it if...

You need the fastest file transfers

The lack of a 10GbE option means it can’t reach the top transfer speeds that 10GbE-equipped NAS boxes can.

You're on a tight budget

Getting access to Synology’s incredible value and mostly free software library now involves an even heavier initial outlay for compatible drives.
